Howdy, I’m designing a workstation for general GPU compute and would love feedback and answers to a few tricky questions from the folks here.

My primary constraints are that I want a Threadripper Pro 3975WX and support for up to 4x GPUs ~300W TDP w/ 2x 8 pin PCIE connectors each (e.g., NVIDIA A100).

I’m trying to structure everything else (case, cooling, mobo, PSU, etc.) around this goal.

CPU:

Threadripper Pro 3975WX (32-core)

GPUs:

Support for up to 4x GPUs ~300W TDP w/ 2x 8 pin PCIE connectors each (e.g., NVIDIA A100).

Cooling

Custom water cooling loop for CPU + 4x GPUs from EKWB w/ 2x 420mm EK PE radiators + a dual D5 pump. I'll be using the EK Quick Disconnect system since I'll be swapping out GPUs frequently.

Storage

Intel Optane P5800X 800GB boot drive + 2TB FireCuda 530 NVMe.

PSU

I obviously need a 1600W+ PSU (though going to 2000W on a 220V circuit is on the table).

The choice of PSU is complicated by the choice of motherboard. See below.
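
As a rough sanity check on the PSU sizing, here is a minimal sketch of the power budget. The ~300W per GPU comes from the constraints above and 280W is AMD's rated TDP for the 3975WX. The 150W allowance for pumps, fans, drives, RAM, and the board, and the 80% sustained-load target, are assumptions to adjust, not measured values.

```python
# Rough PSU budget. Only the GPU figure comes from the build constraints;
# everything marked "assumed" is a placeholder to adjust.
GPU_COUNT = 4
GPU_TDP_W = 300      # ~300 W per GPU, from the constraints above
CPU_TDP_W = 280      # AMD's rated TDP for the Threadripper Pro 3975WX
OTHER_W = 150        # assumed: pumps, fans, drives, RAM, motherboard
TARGET_LOAD = 0.80   # assumed: keep sustained draw at or below 80% of rating


def sustained_draw_w() -> int:
    """Sum of rated component power, ignoring transient spikes."""
    return GPU_COUNT * GPU_TDP_W + CPU_TDP_W + OTHER_W


def load_fraction(psu_rating_w: int) -> float:
    """Fraction of the PSU rating used at the estimated sustained draw."""
    return sustained_draw_w() / psu_rating_w


if __name__ == "__main__":
    print(f"Estimated sustained draw: {sustained_draw_w()} W")
    for rating in (1600, 2000):
        frac = load_fraction(rating)
        verdict = "within" if frac <= TARGET_LOAD else "above"
        print(f"{rating} W PSU: {frac:.0%} load ({verdict} the {TARGET_LOAD:.0%} target)")
```

With these placeholder numbers the estimate lands slightly above 1600W, so a single 1600W unit leaves no headroom if everything runs flat out. The result moves with the `OTHER_W` guess and with whatever the GPUs actually draw.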

Case

Leaning towards the Define 7 XL, but it depends on the choice of mobo + PSU (i.e., whether I need two PSUs) to ensure everything will fit.

I’m a little nervous about fitting the 2x 420mm radiators + 4 GPUs + an EATX motherboard.

Motherboard

Here’s where things get complicated once the available PSUs are taken into consideration.

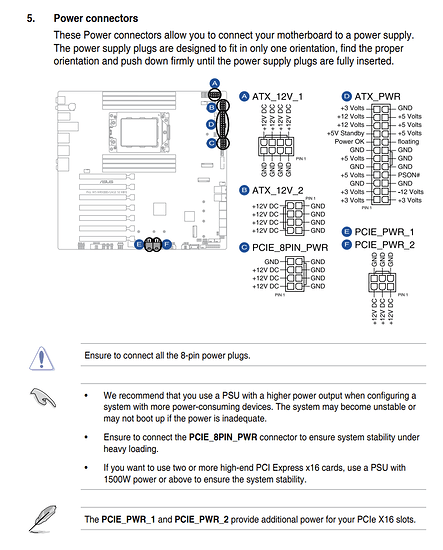

Asus PRO WS WRX80E-SAGE SE WIFI

- Explicitly states it supports 4x GPUs.

- Requires 2x 8pin ATX + 1x 8pin PCIE + 2x 6pin PCIE connectors

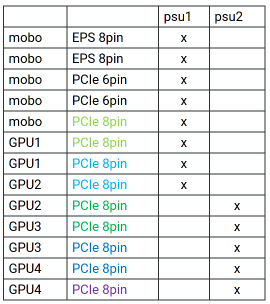

This means that I need 5 power connectors for the motherboard alone (counting each 6pin as one PSU connector). The GPUs would need another 8x 8pin connectors, meaning I would need 13 power connections in total. As far as I know, there aren’t any PSUs with this many connectors, so I’d need two PSUs. The Corsair AX1600i has 10 and the Super Flower Leadex Platinum has 11.
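
The tally above can be sketched as a few lines of Python. The counts are the ones quoted in this post (not independently verified), and the sketch assumes every board or GPU connector, 6pin or 8pin, occupies one PSU-side port.

```python
# Connector tally for the ASUS board plus four GPUs, using the counts quoted above.
# Assumption: every board/GPU connector occupies exactly one PSU-side port.
ASUS_BOARD = {"8pin ATX": 2, "8pin PCIE": 1, "6pin PCIE": 2}
GPU_COUNT = 4
CONNECTORS_PER_GPU = 2

# PSU connector counts as stated in the post.
PSU_PORTS = {"Corsair AX1600i": 10, "Super Flower Leadex Platinum": 11}


def total_needed(board: dict) -> int:
    """Board connectors plus all GPU connectors."""
    return sum(board.values()) + GPU_COUNT * CONNECTORS_PER_GPU


def shortfall(board: dict, ports: int) -> int:
    """How many connectors a single PSU with `ports` outputs is missing."""
    return max(0, total_needed(board) - ports)


if __name__ == "__main__":
    print(f"Connectors needed: {total_needed(ASUS_BOARD)}")
    for name, ports in PSU_PORTS.items():
        print(f"{name}: short by {shortfall(ASUS_BOARD, ports)}")
```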

As I’ll describe below, the ASUS board is the only WRX80 board that has these two 6pin connectors. Does anyone know why ASUS has these two additional 6pin PCIE power connectors?

Gigabyte WRX80-SU8-IPMI

- Requires 3x 8pin ATX connectors

From a connector standpoint, this motherboard would work with the Super Flower Leadex.

The Gigabyte documentation doesn’t mention multi-GPU support, and I’ve seen several forum posts about issues getting 4 GPUs to work (something in the UEFI settings).

This mobo also doesn’t seem to be in stock anywhere.

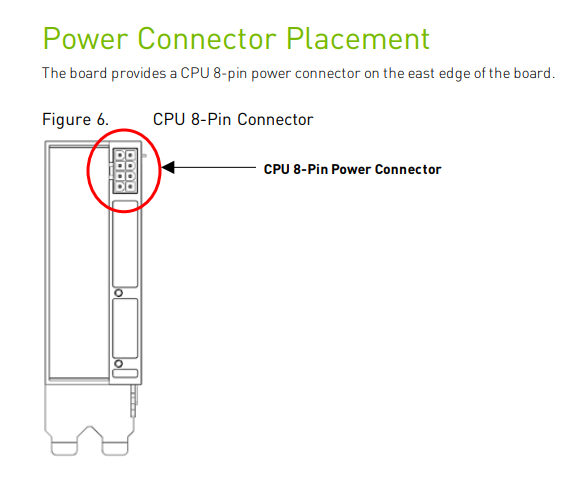

Supermicro M12SWA-TF

- Requires 3x 8pin ATX connectors

There's not a lot of good information out there about this board. I’ve been eagerly waiting for @wendell to review it.

The thermal design seems lackluster (e.g., VRM heatsink) and dependent on a very high airflow chassis (i.e., depends on living in a Supermicro chassis).

Summary

So my main conundrum is the choice of Mobo + PSU + Case.

The ASUS seems like it would require 2x PSUs if those 2x 6 pin connectors are required. This would also mean a case big enough to hold two PSUs.

If I go with the Gigabyte or Supermicro, I can get the Super Flower Leadex and everything should fit in the Define 7 XL. But I have reservations about the 4x GPU support on the Gigabyte, and the overall lack of reviews on the Supermicro as well as the seemingly lackluster thermals.
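
To lay the trade-off out in one place, here is a small sketch that checks which board + single-PSU pairings have enough connectors. All counts are the ones quoted in this post. The "6pin connectors left empty" row is hypothetical: whether the ASUS board can run that way is exactly the open question.

```python
# Which board + single-PSU pairings have enough connectors, per the counts in this post.
GPU_CONNECTORS = 4 * 2  # four GPUs, 2x 8pin each

BOARD_CONNECTORS = {
    "Asus PRO WS WRX80E-SAGE SE WIFI": 5,
    "Asus, 6pin connectors left empty (hypothetical)": 3,
    "Gigabyte WRX80-SU8-IPMI": 3,
    "Supermicro M12SWA-TF": 3,
}

PSU_CONNECTORS = {
    "Corsair AX1600i": 10,
    "Super Flower Leadex Platinum": 11,
}


def single_psu_options() -> dict:
    """Map each board to the PSUs that could feed it and all four GPUs alone."""
    options = {}
    for board, board_count in BOARD_CONNECTORS.items():
        needed = board_count + GPU_CONNECTORS
        options[board] = [
            psu for psu, ports in PSU_CONNECTORS.items() if ports >= needed
        ]
    return options


if __name__ == "__main__":
    for board, psus in single_psu_options().items():
        print(f"{board}: {', '.join(psus) if psus else 'needs two PSUs'}")
```

This only counts connectors. It says nothing about wattage, case fit, or the 4x GPU UEFI issues mentioned above.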

I’d appreciate any feedback or thoughts, especially about the motherboard/PSU/case choices.
