How would I connect the CPU to an Ethernet controller, drive controllers, etc.? Is it I2C, SPI, etc.?

Most devices on a modern x86 PC are connected, directly or indirectly, via PCIe. The physical link is PCIe, but much of the older PCI software standard remains in use; from the software's point of view, the main thing that changed between PCI and PCIe is the electrical interface.

As you mention, some devices are connected by other buses, but the processor reaches those buses through a PCI device that acts as the bus controller.

For example, my machine has a PCI device which acts as an SMBus (a subset of I2C) interface:

00:14.0 SMBus: Advanced Micro Devices, Inc. [AMD] FCH SMBus Controller (rev 61)

On most small/cheap machines (like early Raspberry Pis), there is no PCI interface at all - devices use a mixture of different interfaces which do not have plug-n-play / autodetection standards defined. Such systems usually use a data structure called a Devicetree, which is written specifically for the particular board. The devicetree binary may be passed from the bootloader to the kernel at boot time to dynamically configure the kernel for the hardware, or be statically compiled into the kernel.

After the system gets power and performs the POST, how does it know that the device in one PCIe slot is a graphics card, another is a USB controller, a third is a 10 Gbps Ethernet card, etc.?

(about the best resource for this is PCI - OSDev Wiki)

PCI can be implemented on any architecture, but there are parts which are architecture specific.

The standard part begins with a PCI bus scan. Every PCI device has a bus number, device number and function number. You see this in Linux lspci output, like the example above. 00:14.0 is bus 0, device 14h (hex), function 0.
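As a small illustration of that addressing scheme, here is a sketch that splits an lspci-style address into its three numbers. The function name `parse_bdf` is made up for this example, and it assumes the short form without a leading domain (so `00:14.0`, not `0000:00:14.0`).

```python
from typing import Tuple

def parse_bdf(text: str) -> Tuple[int, int, int]:
    """Split a 'bus:device.function' string (all hex) into integers."""
    bus_part, rest = text.split(":")
    dev_part, func_part = rest.split(".")
    bus = int(bus_part, 16)
    device = int(dev_part, 16)
    function = int(func_part, 16)
    # Conventional PCI limits: 256 buses, 32 devices per bus, 8 functions per device.
    if not (0 <= bus < 256 and 0 <= device < 32 and 0 <= function < 8):
        raise ValueError(f"out-of-range PCI address: {text}")
    return bus, device, function

print(parse_bdf("00:14.0"))  # (0, 20, 0): bus 0, device 0x14, function 0
```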

At system boot, the BIOS or UEFI firmware configures the PCI controller (the “root complex” in PCIe nomenclature) to assign device numbers to physical slots, or to partial slots (as with PCIe bifurcation). It uses its board-specific configuration along with an electrical-level probe of the physical slots to determine which devices are physically connected and which PCI capabilities they have.

When the operating system / kernel boots, it performs a PCI scan using the software interface.

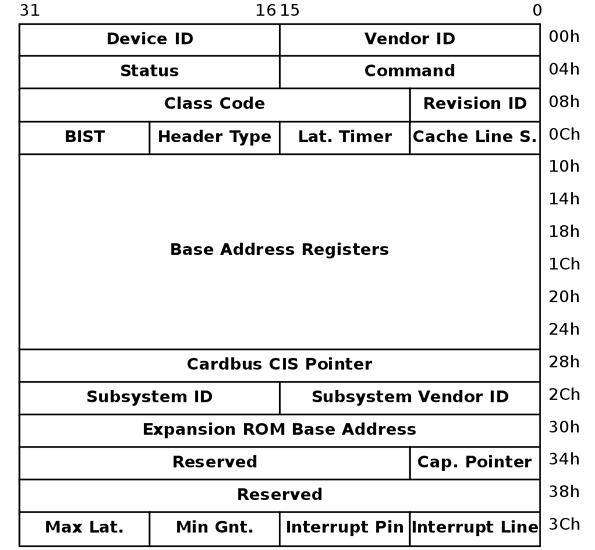

For every possible bus/device/function number, the kernel reads the start of that function's PCI configuration space. The first 16 bits of the configuration header are the vendor code of the PCI device.

If a device doesn’t exist, the read comes back as all 1’s (on legacy PCI the bus would physically be left floating, and the undriven lines read as binary 1). A vendor code of 0xffff therefore indicates that no device is present, and that address is skipped.
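The brute-force version of this scan can be sketched as follows. This is only a model: `read_vendor_id` stands in for whatever mechanism really reads configuration space, and the `fake_vendor_ids` table is invented test data. A real kernel also avoids probing every function of every device and follows bridges to find further buses, which this sketch ignores.

```python
from typing import Callable, Iterator, Tuple

ABSENT = 0xFFFF  # vendor code read back when nothing responds

def scan(read_vendor_id: Callable[[int, int, int], int]) -> Iterator[Tuple[int, int, int]]:
    """Yield every bus/device/function whose vendor code is not all 1's."""
    for bus in range(256):
        for device in range(32):
            for function in range(8):
                if read_vendor_id(bus, device, function) != ABSENT:
                    yield bus, device, function

# Simulated machine with two functions present (illustrative data only).
fake_vendor_ids = {(0, 0x14, 0): 0x1022, (0, 0x18, 0): 0x1022}

def fake_read(bus: int, device: int, function: int) -> int:
    # Anything not in the table behaves like an empty slot.
    return fake_vendor_ids.get((bus, device, function), ABSENT)

found = list(scan(fake_read))
for bus, device, function in found:
    print(f"{bus:02x}:{device:02x}.{function:x}")
```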

If the vendor code is valid, the rest of the configuration header is read to get the 16-bit device code, which the device vendor allocates to identify a particular model of device, and then the PCI class code, which indicates the standard device type that the function implements (such as a PCI bridge, VGA controller, or network device).
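To make the layout concrete, here is a sketch that decodes those fields from the first 12 bytes of a configuration header (vendor code at offset 0x00, device code at 0x02, revision and class bytes at 0x08 to 0x0B). The `decode_header` helper and the sample bytes are assumptions for illustration. The device code 0x1234 is a placeholder, and the revision 0x61 is borrowed from the lspci line above.

```python
import struct
from typing import Optional

def decode_header(header: bytes) -> Optional[dict]:
    """Decode identification fields from the start of a config header."""
    vendor_id, device_id = struct.unpack_from("<HH", header, 0x00)
    if vendor_id == 0xFFFF:
        return None  # all 1's: nothing present at this address
    revision, prog_if, subclass, base_class = struct.unpack_from("<BBBB", header, 0x08)
    return {
        "vendor_id": vendor_id,
        "device_id": device_id,
        "revision": revision,
        "prog_if": prog_if,
        "subclass": subclass,
        "base_class": base_class,
    }

# Hand-built sample: vendor, device, command, status, revision, prog-if, subclass, class.
# Class 0x0C / subclass 0x05 is the standard code for an SMBus controller.
sample = struct.pack("<HHHHBBBB", 0x1022, 0x1234, 0, 0, 0x61, 0x00, 0x05, 0x0C)
info = decode_header(sample)
print({name: hex(value) for name, value in info.items()})
```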

Once the list of devices has been discovered, resources must be allocated.

For each device found, an all-1’s value (32 or 64 bits wide) is written to each memory BAR (Base Address Register), the register that indicates where in the address space the device is mapped. The low address bits, which select an offset within the device's own range, are hardwired to zero and read back as 0, so the position of the lowest bit that stays set indicates the size of the address range which the device uses.
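The size calculation from the read-back value can be sketched like this. The sketch covers memory BARs only and assumes the caller has already combined the two halves of a 64-bit BAR; `bar_size` is a name made up for this example. The low four bits of a memory BAR are type flags rather than address bits, so they are masked off first.

```python
def bar_size(readback: int, width: int = 32) -> int:
    """Size in bytes of a memory BAR, given the value read after writing all 1's."""
    mask = (1 << width) - 1
    # Bits 0-3 of a memory BAR hold type/prefetch flags, not address bits.
    address_bits = readback & mask & ~0xF
    if address_bits == 0:
        return 0  # BAR is not implemented
    # Invert the surviving address bits and add one to get the region size.
    return (~address_bits & mask) + 1

print(hex(bar_size(0xFFFF0000)))  # 0x10000 (64 KiB)
print(hex(bar_size(0xFFFFC004)))  # 0x4000 (16 KiB, flag bits ignored)
```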

The OS builds a list of the region sizes for all the devices (a device may expose several regions of different types), and decides where to map them in the physical address space. Once the OS has allocated resources, it writes the base address to each BAR of each device, then configures memory paging so that write-combining or caching is set appropriately (some types of memory might be fine to cache, while others are used purely as MMIO, which would be a disaster to cache).

How this is actually implemented in the kernel depends on the architecture.
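One simple allocation strategy (not necessarily what any particular kernel does) is to place the largest regions first and round each base up to a multiple of the region's size, since a BAR can only hold a naturally aligned address. In this sketch the region names, the sizes and the window base 0xE0000000 are all hypothetical.

```python
from typing import Dict

def assign_bars(sizes: Dict[str, int], window_base: int) -> Dict[str, int]:
    """Assign naturally aligned base addresses, largest region first.

    Sizes are assumed to be powers of two, as BAR sizes always are.
    """
    assignments = {}
    cursor = window_base
    for name, size in sorted(sizes.items(), key=lambda item: item[1], reverse=True):
        cursor = (cursor + size - 1) & ~(size - 1)  # round up to a multiple of size
        assignments[name] = cursor
        cursor += size
    return assignments

layout = assign_bars(
    {"gpu_vram": 0x10000000, "nic_regs": 0x4000, "usb_regs": 0x10000},
    window_base=0xE0000000,
)
for name, base in layout.items():
    print(f"{name}: {base:#x}")
```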

On x86, before UEFI and ACPI, the BIOS and OS would write to I/O port 0xcf8 to select a device address, then read from or write to I/O port 0xcfc to access that device's configuration registers.
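The value written to port 0xcf8 packs the bus, device, function and register offset into one 32-bit word, with bit 31 as an enable flag. Below is a sketch of just that packing. The function name is made up, and actually issuing the port writes needs ring-0 access, which is outside what this example attempts.

```python
def config_address(bus: int, device: int, function: int, offset: int) -> int:
    """Build the 32-bit value written to I/O port 0xcf8."""
    if not (0 <= bus < 256 and 0 <= device < 32 and 0 <= function < 8):
        raise ValueError("bus/device/function out of range")
    if not 0 <= offset < 256:
        raise ValueError("legacy mechanism only reaches the first 256 bytes")
    return (
        (1 << 31)            # enable bit
        | (bus << 16)        # bits 23-16
        | (device << 11)     # bits 15-11
        | (function << 8)    # bits 10-8
        | (offset & 0xFC)    # bits 7-2, dword-aligned register offset
    )

print(hex(config_address(0, 0x14, 0, 0)))  # 0x8000a000 selects 00:14.0, register 0
```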

Since UEFI and ACPI (and PCIe), a special ACPI table called MCFG is created by the firmware and made available to the kernel. It contains the physical address of the MMIO interface of the PCI controller (PCI Express - OSDev Wiki). This interface is 64-bit capable, and available on architectures which do not implement port I/O (some RISCs had to emulate port I/O in the PCI controller for legacy PCI).

To get started with examples for Linux, check pci_legacy_init (arch/x86/pci/legacy.c), which calls PCI functions common to all architectures in pci_scan_root_bus/pci_scan_child_bus/pci_scan_child_bus_extend in drivers/pci/probe.c.
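With the MMIO interface, each function gets its own 4 KiB window of configuration space at a fixed offset from the base address in the MCFG entry. The address arithmetic can be sketched as below. The base 0xF0000000 is a made-up value, `ecam_address` is a hypothetical helper, and `start_bus` is the first bus number covered by that MCFG entry.

```python
def ecam_address(base: int, start_bus: int, bus: int, device: int,
                 function: int, offset: int = 0) -> int:
    """Physical address of a configuration register via the MMIO window."""
    if not (0 <= device < 32 and 0 <= function < 8 and 0 <= offset < 4096):
        raise ValueError("device/function/offset out of range")
    if bus < start_bus:
        raise ValueError("bus is below the range covered by this MCFG entry")
    # 1 MiB per bus, 32 KiB per device, 4 KiB per function.
    return base + ((bus - start_bus) << 20) + (device << 15) + (function << 12) + offset

print(hex(ecam_address(0xF0000000, 0, 0, 0x14, 0)))  # 0xf00a0000 for 00:14.0
```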