Latest update! 2 pieces of good news, 1 piece of (potentially awful) bad news

Good news:

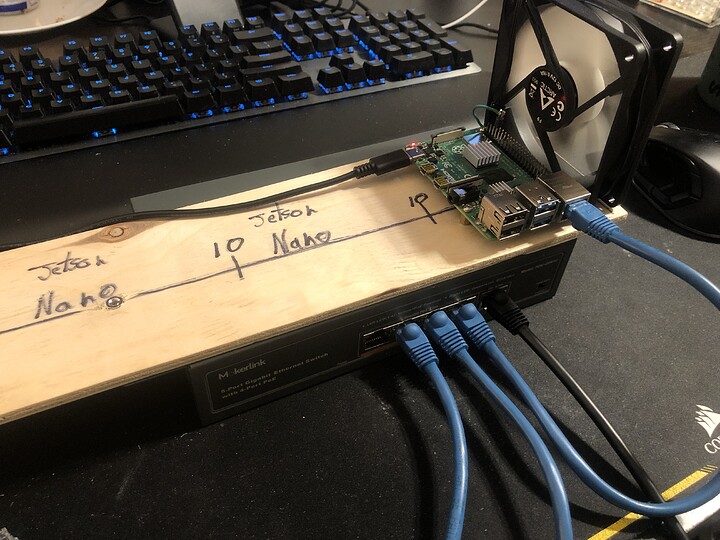

GN1: My 2 jetsons arrived (Yay!) and I’ve b

egun testing on them.

GN2: when using the transcoding blocks, memory is preemtively moved and worked on in GPU memory! so my concern about having to copy things back and forth to use cuda looks like a non factor!

Bad News

Unfortunately I’ve come up against a (potentially huge) roadblock.

BN1:

From RTFM’ing, It seems that nvidia doesnt exactly document how their own decode/encode blocks work.

Specifically, the issue is that the documentation provides contradicting information. Per the Developer guide here the Nano is listed as only supporting 8 bit formats of HEVC, VP9 and H264.

However per the nvidia gstreamer documentation, HW accelerated decode and encode are supported all the way to 12 bit. It even mentions special flags to increase performance on low memory devices, such as the jetson nano series.

My main questions that I need to explore (or if you know anything please share!!) are:

1 Which of these documents should I consider to be correct

2 In the case where the first document is correct, how is gstreamer supporting higher bit depth content then the native decode/encode blocks.

3 In the case where the second document is correct, how would I access this capability outside of Gstreamer?

4 In the case where the Second document is correct, what is the performance penalties for using higher bit depth content?

5 In the case where both documents are correct, what is the expected return? a file that has the “extra” bit depth truncated?

Ideally the 5th option is correct and handled internaly by an api call. This would mean that I can assume that regardless of source content, I will always receive yuv420 content.

Otherwise, I’ve managed to get 1.3* real time performance when transcoding 75mbps HEVC 8 bit to pretty much any form of HEVC/H264!

And since everything is still on gpu, it should mean that the cuda overhead for tonemapping filters shouldn’t be a problem!

I think that the way to go would be to always scale first, since that lowers the memory requirements for everything following it in the chain.

I think that the way to go would be to always scale first, since that lowers the memory requirements for everything following it in the chain.