Thank you so much, sir. It’s a little slow going.

I had to reinstall Fedora 34 (I went with Workstation this time, then added the packages for Cockpit as well as libvirt and QEMU for virtualization) because of the aforementioned network mess-up… I had set up a network “bond”… so I need to set up a different connection so the VMs can access the LAN.

I still need to install the Cockpit package for virtualization and VM management.

The advantage of this install is that I can set up storage via the desktop and browse the file structure for directories, because I can’t remember them by heart.

I do need to figure out how to log in securely and how to set up NGINX, and I will need to move DHCP off the node I’m using as a router, which is part of the mesh. The network switch I have is managed… there are so many options there with the Cisco router. It’s just on the defaults for now.

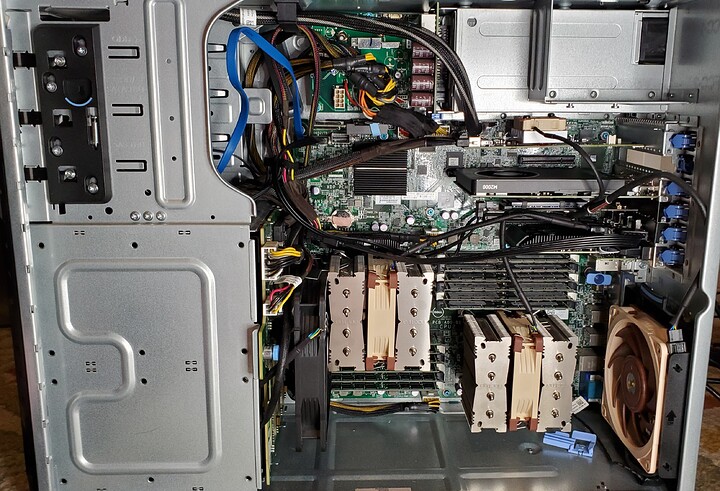

I think the first thing I want to set up is TrueNAS, just to manage a secondary datastore with an rsync job once I figure that out. I can also use it for a dataset for a Steam cache server. I’m going to try your suggested security setup with Pi-hole on the test server, then migrate it or redo the installation on the “production” server. Then I’ll implement some more security. TrueNAS may not be needed if Nextcloud replaces it.

Currently on the production server I have ZFS set up natively with Proxmox, plus a Samba share. However, I’m not happy with the share speed to Linux machines. It’s about half the speed (50 MB/s) of my Windows connections (100 MB/s). I’m sure there is some Samba tuning I can do, so I want to play with that on the test server as well to see what I need to change from the default settings. I also need to plug some security holes there, because I believe I had to do the terrible chmod 777 to be able to access and modify data on those shares, so that NEEDS to be fixed ASAP.
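A minimal sketch for the permissions cleanup, assuming Python 3 is available on the server: it only lists what is still world-writable under a share directory and changes nothing. The function name `find_world_writable` and whatever path gets passed to it are hypothetical, not the real layout of those shares.

```python
import os
import stat


def _is_world_writable(path):
    """True if path is not a symlink and has the 'other' write bit set."""
    try:
        mode = os.lstat(path).st_mode
    except OSError:
        return False  # vanished or unreadable entry: skip it
    if stat.S_ISLNK(mode):
        return False  # symlink permission bits are not meaningful on Linux
    return bool(mode & stat.S_IWOTH)


def find_world_writable(root):
    """Return a sorted list of paths at or under root that anyone can write to."""
    hits = []
    if _is_world_writable(root):
        hits.append(root)
    for dirpath, dirnames, filenames in os.walk(root):
        for name in dirnames + filenames:
            path = os.path.join(dirpath, name)
            if _is_world_writable(path):
                hits.append(path)
    return sorted(hits)
```

Running something like `print("\n".join(find_world_writable("/path/to/share")))` with the real share path would give a list to work through before tightening ownership and modes, rather than guessing what the 777 touched.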

I have a long laundry list. It takes me some time because my brain is slow and I have to take extensive notes to not lose my place. I’m just happy the system is running and I’m not getting errors in Cockpit like I was before.

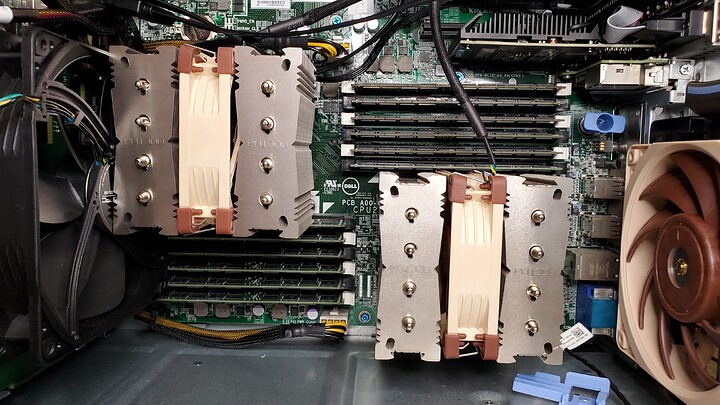

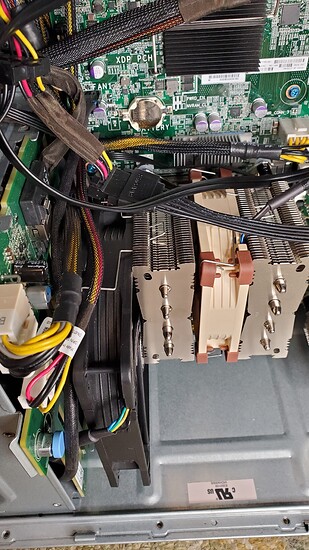

Then later I will mess with the NVIDIA GRID K2. For now I just have the Quadro M2000 for passthrough and the GT 710 for the desktop.

The other machine is running a GTX 1650 Super. I’m fairly sure it is being used properly for transcodes in an LXC container…

I also need to figure out how to automate tasks such as updates on the Proxmox server…

SOOOOOO much I’d like to get set up and done. One item at a time.

Also, setting up a domain, changing my default IPs… the list goes on and on… lol

EDIT: I don’t know where I talked about Barrier not working, but I have solved one issue. I was able to install the NVIDIA drivers on Fedora 34 correctly. Because Barrier has to use video in some way for the mouse cursor, I installed it to try again and BINGO… it works properly. So I guess Barrier needs the correct video drivers, on Fedora 34 at least, for the cursor to show up properly.