PC Repair Project

Problem: Certain games, not necessarily demanding ones, cause the PC to freeze. During a freeze the monitors go black, the GPU fans ramp up to 100% and the PC becomes unresponsive. Audio keeps playing for 10-12 seconds and then cuts out.

PC Specifications:

• CPU: Intel Core i7-7700K 4.2GHz (Kaby Lake) Socket LGA1151 Pre-Binned Processor - OEM

• Motherboard: Asus Maximus IX Formula

• System Memory: Team Group Xtreem “8Pack Edition” 32GB (4x8GB) DDR4

• GPU: EVGA GTX 1080 Ti Kingpin Edition (11GB)

• Water Cooler: Corsair Hydro Series H150i PRO RGB Performance Liquid Cooler - 360mm

• PSU: 850W be quiet! Dark Power Pro 11, Hybrid Modular, 80 PLUS Platinum

• Case: Cooler Master COSMOS C700P RGB, Black/Metal

• Case Fans: 3 x Corsair ML120 Pro RGB 120mm (Radiator), 3 x Corsair ML120 (Front Intake), 1 x Corsair ML140 Pro RGB 140mm (Rear Exhaust)

• Monitors: Asus ROG PG278QR x 2

• Windows 10 Pro (Build 19045.3324)

Solutions Carried out to Solve Issue:

• Thorough Clean (Fans, Radiator and Case)

• Replacement Thermal Paste (CPU)

• Complete Nvidia Driver Refresh (DDU)

• BIOS FLASH

• Cleared CMOS

• Underclocked Hardware

• AIDA64 & 3DMark Stress Tests (Passed)

• Switched PCIE slot

• Replaced modular GPU PCIE power cable (2x8)

• Added a stock Intel Cooler

• Ran PC in “test bench” Configuration

• Dismantled GPU, cleaned it and reseated the thermal pads

• Replaced GPU Thermal Paste

• Ran MemTest (Passed)

• Adjusted GPU and CPU Fan Curves to Aggressive modes

• Reinstalled DirectX 10-12

• Reinstalled VCREDIST (2008-2022)

• Enabled High Precision Event Timer (via CMD)

• Plugged PC directly into Wall Socket (previously used extension cord)

• Utilised single PCIe Power cables instead of daisy chained config for GPU

• Reduced GPU core clock (MHz) in MSI Afterburner/EVGA Precision X1

The problem still persists in certain games. Some notable mentions are The Sims 4, MW2 (2009) and Two Point Campus. In certain games like Super Mario Odyssey, the crash happens predictably in a particular area. This was also the case with MW2 (2009). The PC can run other AAA titles with no issues: Battlefield, Fallout 4, Cyberpunk and Doom, for example, run for hours. If anyone can think of other solutions it would be much appreciated. Happy to provide any related information that would assist with solving this problem. I have a friend dropping off an R9 270X and a GTX 1050 Ti tonight. I’ll run through the older games I’m having issues with and report back.

Big fan of the channel btw, just joining the forum community now. You guys seem like a great bunch.

Update

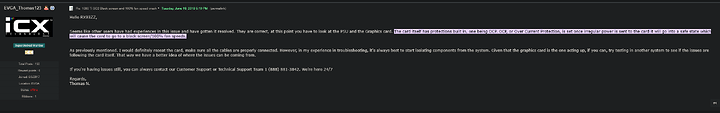

After many hours of trying and testing solutions we have found that the cause of my problem was the clock speed my GPU was running at. Applying a slight negative core clock offset (in MHz) in MSI Afterburner likely reduced the power being drawn by the GPU. The symptoms of the crash matched EVGA’s Over Current Protection (OCP) behaviour. This protection trips when the current the GPU draws goes outside its expected limits.
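For anyone wanting to check a clock offset like this on their own card, here is a rough Python sketch that reads the core clock, power draw and temperature through `nvidia-smi`, which ships with the NVIDIA driver. It isn't something used in this thread; the function names are made up for illustration and the sample values in the docstring are invented. Some cards report `[N/A]` for power draw, which this sketch does not handle.

```python
import subprocess

# Fields requested from nvidia-smi; clocks.gr is the graphics (core) clock in MHz.
FIELDS = ["clocks.gr", "power.draw", "temperature.gpu"]


def parse_sample(line):
    """Turn one CSV line such as '1911, 248.37, 71' (invented values) into a dict."""
    values = [part.strip() for part in line.split(",")]
    if len(values) != len(FIELDS):
        raise ValueError(f"expected {len(FIELDS)} values, got {len(values)}")
    return {name: float(value) for name, value in zip(FIELDS, values)}


def read_gpu_sample():
    """Query GPU 0 once. Needs nvidia-smi on PATH, so it only works with an NVIDIA driver."""
    output = subprocess.run(
        ["nvidia-smi", "--query-gpu=" + ",".join(FIELDS),
         "--format=csv,noheader,nounits", "--id=0"],
        capture_output=True, text=True, check=True,
    ).stdout
    return parse_sample(output.strip().splitlines()[0])
```

Calling `read_gpu_sample()` once a second and appending each result to a file, flushing after every write, should leave the last readings on disk when the screen goes black.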

I hope this thread may assist others going through the same issues that I have experienced. Big thanks again to everyone who offered support!

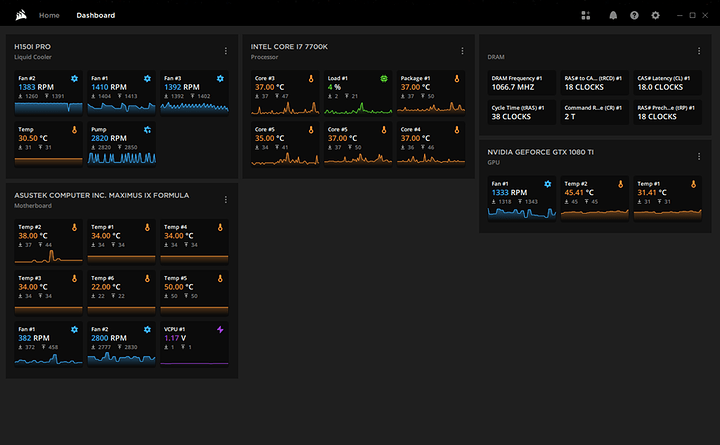

Idle Temperatures:

From my testing since cleaning, the CPU barely hits 80C and my GPU peaks at around 72C now in games, hot spots hit 80C briefly.

After taking the GPU apart I found that there was a build up of thermal paste covering some of the soldering surrounding the chip. I cleared this up with alcohol wipes and replaced the paste with Akasa Essential T5 (4.0 W/mK). I’ve heard that this has been the root cause of similar issues.

After reassembly the system was tested with MW2 (2009). This is a title I know consistently causes the issue. After about 15 minutes of gameplay the system froze and the fans kicked in. Overall temps seemed to be within the 37-80C window. The GPU overall appears to stabilise at around 65-75C under load.

this is typically an issue with hpet being disabled in bios.

hpet=high precision event timer…

so go into bios and make sure its enabled. (its an asus board so may not have a setting for it. if so, check whether its enabled in windows, and if it is, disable it. it will remain enabled in bios regardless of the windows setting.)

open an elevated cmd and type

bcdedit /enum

you should see useplatformclock set to Yes or No

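if its no then its disabled in windows, if its yes then its enabled. if the line isnt listed at all, the value has never been set, which also means its off in windows.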

to disable it in windows use

bcdedit /deletevalue useplatformclock

to enable it in windows

bcdedit /set useplatformclock true

the latter will make the hpet clock run at way higher speed than if set to disable and as a result will shorten your motherboards battery life dramatically (by up to 80% meaning if its a 5 year battery it will last a year before you need to replace it)

and yes you will find posts claiming on in bios off in windows is bad… its not, its the most stable way ive found of running it. and have run it this way since i figured out it was the problem on my old i7 920/hd5870 windows 7 build.
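If you would rather not eyeball the `bcdedit /enum` output, something like the following Python sketch could do the check. It is only an illustration: the function names are hypothetical, it assumes an English-language Windows where the value prints as Yes or No, and `bcdedit` itself still needs an elevated prompt.

```python
import subprocess


def useplatformclock_state(bcdedit_output):
    """Return 'yes', 'no', or 'unset' from the text printed by `bcdedit /enum`."""
    for line in bcdedit_output.splitlines():
        parts = line.split()
        if len(parts) >= 2 and parts[0].lower() == "useplatformclock":
            return parts[1].lower()
    # bcdedit only lists values that have been set, so a missing line means unset.
    return "unset"


def current_state():
    """Run bcdedit (Windows, elevated prompt) and report the setting."""
    result = subprocess.run(["bcdedit", "/enum"],
                            capture_output=True, text=True, check=True)
    return useplatformclock_state(result.stdout)
```

A result of `'unset'` or `'no'` corresponds to the "off in windows" state described above.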

lastly install direct x june 2010 and then direct x 11 and finally run the direct x installer that will update dx12.

then install vcredist libraries from 2008 to present…

this will maximise game compatibility for a lot of old games and fix a lot of issues caused by missing old files.

I’ll make a start on this now. Thanks for the support. I was looking at my redistributables as my next step also due to the consistency of the crashes.

I’ll report back in an hour or 2 with the results. I’ll need to run some tests which may take some time.

Thanks Again!

I had to head out for a little bit but I’m just testing now.

I have now installed the DirectX packages dx10-12. I’ve also completed fresh reinstalls of my VCRedist libs (2008-2022). Both downloads were available via Microsoft’s Documentation section.

I enabled the High Precision Event Timer via CMD and restarted the PC. @anon7678104 You were right in stating that it is not an option in my BIOS. Even Asus ROG boards appear to be missing a lot of options I’m seeing on MSI etc.

Going to begin testing now. I will report back with my findings. Thanks again.

@anon7678104 My PC appears to be a bit sluggish after doing all that. Getting good framerates in game but it seems to stutter every 3-4 seconds. Any ideas? No signs of overheating or devices being under utilised. Most animations within Windows and my browser seem to be lagging a little too.

UPDATE

Sluggish behaviour appears to be related to the High Precision Event Timer being enabled. Turning it off solved this immediately after a restart. It seems that with that option on I get a consistent stutter and overall lag in my OS. If this is telling of something then I’m open to hearing suggestions. My brief research on the setting seems to show that some people are affected and some aren’t. After testing a game that crashes with the DirectX and VCREDIST packages installed I found that I was still experiencing crashes.

like i said mate i had best results with it disabled in windows but on in bios.

the good news is that its on by default, in bios but no option to disable it.

disabling it in windows will get rid of the stutter and save your motherboard battery.

but herein lies the problem… it was on in bios and off in windows when you got the hardware clock crash.

so its likely not the hpet causing it.

so all i can recommend is use ddu and fresh install your gpu/motherboard drivers.

so before you go any further run

bcdedit /deletevalue useplatformclock

to make sure its off in windows as it was previously.

and hope fresh drivers fix the issue.

Thanks, I’ve already run the mentioned command. I’ll give it another go, maybe see if I’ve missed anything. I’ve got a R9 270x and a GTX 1050ti to try out also. I’m so confused with this one…

Bit of a shot in the dark but my PC is connected through an extension lead. Could plugging it directly into the wall socket potentially help? I’m trying to think out of the box at this point as I’m running out of options.

UPDATE

Switching to direct socket power did not have any effect on the crashes. I can now predict the exact moment in one of my test games where the crash takes place. I’ve tried to find relevant errors in the Event Viewer but nothing stands out. (I don’t have a tonne of experience with the Event Viewer).
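One way to narrow the Event Viewer down, sketched here in Python as an assumption rather than something tried in this thread: Windows logs a Kernel-Power event with ID 41 after a reboot that was not preceded by a clean shutdown, so listing those gives timestamps to line up against each freeze. The helper names are made up; `wevtutil` is the built-in Windows event log tool.

```python
import subprocess


def kernel_power_query(max_events=10):
    """Build a wevtutil command listing the newest Kernel-Power 41 events."""
    xpath = ("*[System[Provider[@Name='Microsoft-Windows-Kernel-Power']"
             " and (EventID=41)]]")
    return ["wevtutil", "qe", "System", f"/q:{xpath}",
            f"/c:{max_events}", "/rd:true", "/f:text"]


def recent_unclean_reboots(max_events=10):
    """Run the query (Windows only) and return wevtutil's text output."""
    result = subprocess.run(kernel_power_query(max_events),
                            capture_output=True, text=True, check=True)
    return result.stdout
```

These events only confirm when the machine went down uncleanly. They will not say why, so they are a starting point for finding nearby entries rather than a diagnosis.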

I performed the “sfc /scannow” command and a few files were restored. Continuing testing but I have a feeling this is software related.

UPDATE

I’ve tried out the GTX 1050 Ti and I didn’t appear to get the crashes after the switch. It seems that this is somehow tied to my EVGA GTX 1080 Ti Kingpin. I’m thinking about buying new thermal pads and a better quality thermal paste. Maybe there’s a hot spot my monitoring isn’t showing that’s shutting it down. The consistency of the crashes is still odd though. If anyone else can think of anything please do let me know.

I use EVGA Precision X but only for the fan curves. I’ve not overclocked that GPU as it didn’t feel necessary. I’ve got an 850W Platinum PSU and the minimum for that card is 600W. So I don’t think a lack of power is the issue.
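The headroom behind that reasoning, as a quick Python sketch using only the two figures above (the function name is just for illustration):

```python
def headroom(psu_watts, recommended_watts):
    """Return (spare watts, spare as a fraction of the recommendation)."""
    spare = psu_watts - recommended_watts
    return spare, spare / recommended_watts


spare, fraction = headroom(850, 600)
print(f"{spare} W spare ({fraction:.0%} above the recommended minimum)")
# prints "250 W spare (42% above the recommended minimum)"
```

This only compares rated, sustained wattage. It says nothing about short spikes in draw, which a protection circuit may react to even when the average load is well within the PSU's rating.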

I played a few hours of Cyberpunk last night at 1440p, high to medium settings. The 1080 Ti and i7-7700K didn’t go above 73C at their hottest points throughout that period. I was getting a stable 65-100fps with some mods.

The power would be my suspicion too. I assume you feed the card with two distinct power cables instead of a single daisy-chained one?

Just a shot in the dark here…

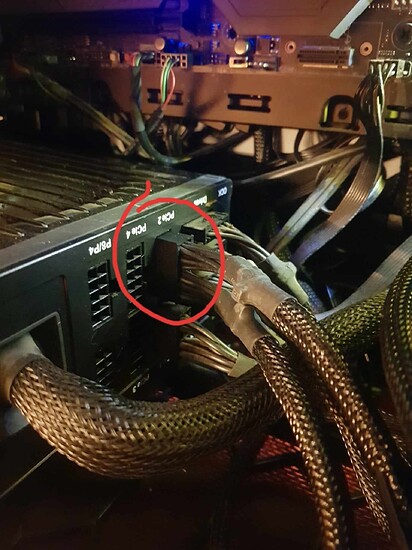

Hey @wertigon, thanks for the reply. It seems that I have a 2 x 8 PCIe connector coming from the single “PCIe2” port on the PSU. Do you think this could be an issue for my card?

I’ve added an extra power cable and plugged them in so there’s no chain. Testing now.

UPDATE

After running MW2 again after swapping the daisy chained cables for 2 individual PCIe’s, I’m still running into the same issue. Thanks for the idea though, it did make sense.

It shouldn’t be an issue to split at the PSU side as the standard 150w PCIe connection doesn’t use two of the power pins. I’m sure someone will correct me if I’m mistaken, but adding the two power pins should turn a 150w 6+2 PCIe connection into a 300w 8-pin EPS connection. If the dual PCIe connector splits at the PSU side, it should be able to use all of the power pins making it 150w + 150w for 300w total. What they are talking about above is daisy chaining two 6+2 PCIe off of one 150w source from the PSU.

(This is all speculation based on observation and I have no sources to back anything up. If incorrect I will delete the post.)
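For reference, the per-connector limits I'm confident of from the PCI Express spec are 75 W from the slot, 75 W per 6-pin and 150 W per 8-pin plug. A small Python sketch of the in-spec budget for a card fed by two 8-pin plugs, as this one is (the names are illustrative, and the card's actual power limit is not stated in this thread):

```python
# In-spec power limits per source, in watts (PCI Express spec figures).
SLOT_W = 75
CONNECTOR_W = {"6-pin": 75, "8-pin": 150}


def in_spec_budget(connectors):
    """Sum the slot allowance and each auxiliary connector's allowance."""
    return SLOT_W + sum(CONNECTOR_W[name] for name in connectors)


budget = in_spec_budget(["8-pin", "8-pin"])
print(f"Slot + 2 x 8-pin: {budget} W")  # prints "Slot + 2 x 8-pin: 375 W"
```

These are the limits on the card side. How much a single PSU-side socket and cable can safely carry depends on the PSU maker's design, which is what the daisy chain versus split question above is really about.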

I would consider my PSU to be relatively high end (especially for 2018). The cables included imply that you would use the daisy chained cable for the GPU. In order to get this to work I am using 2 cables with split ends. Leaving the extra 2 x 8 pins to the side. If that makes sense…

But yeah, the same issue persists: black screen and ramped-up GPU fans.

The red circle in your picture seems to imply that there are two distinct 6+2 PCIe cables coming right off the PSU side. “Daisy chaining” is when there is a pigtail coming right off the end of the first connector.

If you still want to go down the PSU route, we can look into whether yours is single or multi rail on the 12v, but what I was trying to say above is that I don’t think it’s a power issue spec-wise.

Edit: Found some pictures.

Daisy chain (above)

Split (pretend this is an OEM cable that plugs into the PSU)

You’re correct, that is the configuration in the picture (bottom example). I can have a look at the documentation for the PSU. I’ll see if there’s anything that stands out

Tom’s Hardware has the following table describing the power distribution:

This does not seem like an issue with the hardware since temps and power are well within specs. Still good to exhaust the basics; you’d be amazed how often a jank cable or badly seated PCIe card was the answer…

Anyway, sounds like one of two things is happening then. Either the 1080 Ti is simply worn out, or the issue is with the drivers or the card firmware.

Since you have found a reliable way to reproduce it, maybe contact EVGA with a screen recording made with OBS Studio or something?

I could start a support ticket with them. Is the GPU firmware something that I can update separately from the regular drivers? I’ve done a DDU a couple of times now with no success.

I’ve found this on an EVGA forum about a very similar card:

I wonder if my PSU is maybe overpowering the card at this point?