Ok, so, Aspie ZFS rant time and I don’t have an English degree, so buckle up.

So, for the most part, for drives over 2TB, just don’t use RaidZ1. Move on to RaidZ2, unless you like to live dangerously. I have done it, and I have access to a good data recovery lab, but it still nagged at the back of my mind for years until I spun up a new pool in RaidZ2 and migrated all the data over from the old pool.

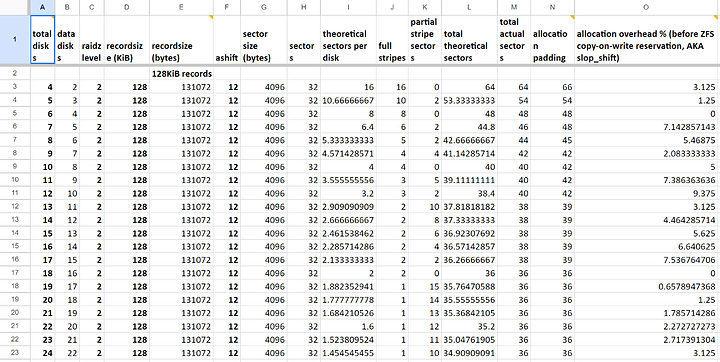

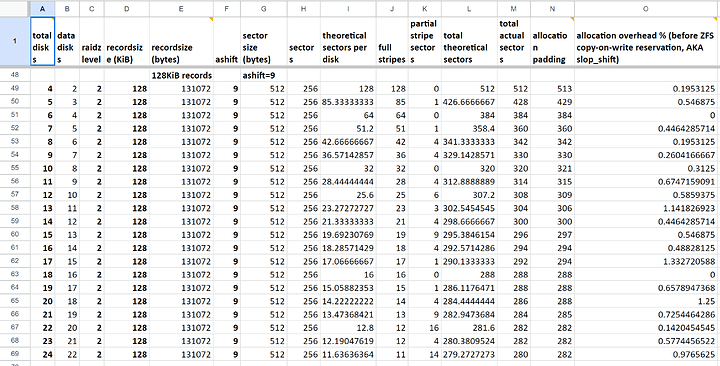

Generic advice for vdev sizing gets a bit fun, but usually for RaidZ2 I stick to 6 disks per vdev and stripe vdevs as I need. This means that if I want more space down the road and drives are way bigger by then (think 8TB > 18TB), I “only” have to replace 6 drives at a time if I do not have empty HDD bays to just add more vdevs to. Example gsheet below.

4k sectors

512 sectors
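If you want to ballpark the numbers without a spreadsheet, here is a rough sketch. It assumes usable space is simply the data disks times the disk size, and it ignores ZFS metadata, padding, and TB vs TiB, so real pools will come in lower. The function name is made up for illustration.

```python
def raidz_usable_tb(disks_per_vdev, parity, disk_tb, vdev_count=1):
    """Rough usable space in TB for identical RaidZ vdevs striped together.

    Assumption: usable = (disks - parity) * disk size per vdev.
    Ignores metadata, padding and allocation overhead.
    """
    if disks_per_vdev <= parity:
        raise ValueError("a vdev needs more disks than parity drives")
    return (disks_per_vdev - parity) * disk_tb * vdev_count


# One 6-disk RaidZ2 vdev of 8TB drives
print(raidz_usable_tb(6, 2, 8))      # 32
# Two of those vdevs striped
print(raidz_usable_tb(6, 2, 8, 2))   # 64
# The same single vdev after swapping all 6 drives for 18TB ones
print(raidz_usable_tb(6, 2, 18))     # 72
```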

NOW, the fun part: if you are using compression (AND YOU SHOULD 99% OF THE TIME), you will have allocations smaller than your recordsize, which means you may have some small holes to fill later in the pool’s life. E.g., you write a text file and it compresses to 32KiB of your 128KiB recordsize, and you don’t have anything else to fill that record. Now you have “fragmentation”: if you come right behind it and write a video file or a large picture or something, that leftover space gets skipped so ZFS can write full 128KiB records sequentially, so you “lose” that 96KiB until later, as ZFS is trying to avoid fragmentation.

Now let’s say your pool is older, has been running for a bit, stuff has ended up all over the place over the years, and you are 80+% full. You might start running into ZFS having to “find” places to cram data in the pool, and ZFS is smart enough to use what it can as it finds it in the vdev, so now you start filling all these little holes everywhere, and that data is slower to retrieve than older data that was written contiguously. This is ZFS fragmentation. There are ways to fix it, it just takes time. (You have to make a new pool or dataset and move the data from one to the other; then you can move it back if you want, after clearing snaps and waiting for ZFS to free the old blocks.)
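To put numbers on the 32KiB example, here is a minimal sketch. It assumes the on-disk size of a block is just its compressed size rounded up to whole sectors, and it leaves out RaidZ parity and padding entirely, so treat it as arithmetic and not as how the allocator really works. The helper name is hypothetical.

```python
KIB = 1024


def allocated_bytes(compressed_bytes, sector_bytes):
    """Round a compressed block up to whole sectors.

    Assumption: no RaidZ parity or padding is counted here.
    """
    sectors = -(-compressed_bytes // sector_bytes)  # integer ceiling division
    return sectors * sector_bytes


record = 128 * KIB
compressed = 32 * KIB

# The text file from the example: 32KiB on disk, 96KiB short of a full record
print(allocated_bytes(compressed, 4096) // KIB)             # 32
print((record - allocated_bytes(compressed, 4096)) // KIB)  # 96

# A tiny block shows why sector size matters for small allocations
print(allocated_bytes(5000, 4096))  # 8192
print(allocated_bytes(5000, 512))   # 5120
```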

Now, the way ZFS load balances data across vdevs is that whichever vdev is fastest gets more of the writes in each transaction group that gets committed to disk. So if you have a 6-disk 8TB vdev that is 50%+ full and you add a new vdev of 6 18TB disks, the new disks will be faster until they start filling up to ~50%, where spinning rust tends to get slower. So maybe the 8TB vdev gets 33-40% of the writes and the 18TB vdev gets 60-67% of the writes; over time it will balance out.
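Here is a toy model of that split, nothing more. It assumes writes get divided in proportion to how fast each vdev can take them, and the throughput numbers are invented for illustration. The real ZFS allocator weighs more than this, so do not read it as the actual algorithm.

```python
def write_share(throughput_by_vdev):
    """Split writes in proportion to each vdev's throughput (toy model)."""
    total = sum(throughput_by_vdev.values())
    return {name: speed / total for name, speed in throughput_by_vdev.items()}


# Hypothetical numbers: the half-full 8TB vdev is half as fast as the new one
shares = write_share({"old_8tb_vdev": 100, "new_18tb_vdev": 200})
for name, share in shares.items():
    print(f"{name}: {share:.0%}")
# old_8tb_vdev: 33%
# new_18tb_vdev: 67%
```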

Now, if you have a crapton of disks, you can play with something called dRAID, a new ZFS pool layout/type that more or less chunks up the disks and makes virtual hot spares and all kinds of neat crap.

Ok, now that we have some of the basics out of the way: if you have 3 8TB and 4 16TB disks and you want to hermit crab it up at a later date, aka have more space at a later date, the cheapest way would be to make a single RaidZ2 of 4 16TB + 2 8TB, then keep that last 8TB handy, because that is now your cold spare in case a drive dies. Then when a good deal comes up for 2 16TB drives (hopefully the same make/model as the rest of the vdev, but that’s mostly just for OCD), you snatch them up, then replace one 8TB drive at a time and hope the rebuild process does not kill a 2nd disk from the load. But it is a RaidZ2, so you can lose 2 drives and still rebuild that vdev. Then once that process is done, if the pool property “autoexpand=on” is set, the pool will swell to its new size all on its own once the final resilver is done.
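The before and after for that hermit crab move, as a rough sketch. A RaidZ vdev treats every disk as if it were the size of the smallest one, so the extra space on the 16TB drives sits idle until the last 8TB is gone. Overhead is ignored again and the function name is made up.

```python
def mixed_raidz_usable_tb(disk_sizes_tb, parity=2):
    """Rough usable TB for one RaidZ vdev with mixed disk sizes.

    Every disk only counts as the smallest disk in the vdev.
    Ignores metadata and padding overhead.
    """
    if len(disk_sizes_tb) <= parity:
        raise ValueError("a vdev needs more disks than parity drives")
    return (len(disk_sizes_tb) - parity) * min(disk_sizes_tb)


day_one = [16, 16, 16, 16, 8, 8]
one_swapped = [16, 16, 16, 16, 16, 8]
both_swapped = [16, 16, 16, 16, 16, 16]

print(mixed_raidz_usable_tb(day_one))       # 32
print(mixed_raidz_usable_tb(one_swapped))   # 32, still held back by the last 8TB
print(mixed_raidz_usable_tb(both_swapped))  # 64
```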

Also, if you really want a pool for running VMs and stuff off of, grab a bunch of “cheap” 1-2TB SATA SSDs and make a pool; best practice would be striped 2-way mirrors (2x2x2x2x etc). But SATA is garbage half-duplex trash, so if you have the cash to burn, grab some SAS2/SAS3 SSDs for the VM pool, or some U.2/U.3 drives that are oddly cheap on eBay, and use those. Or even grab something like the cheap ASUS Hyper M.2 x16 Gen 4 Card and cram it full of cheap NVMe drives to make a pool; just make sure your motherboard supports PCI-E bifurcation on the slot where you plan on plugging it in.

I think that is most of what I wanted to autism about, sorry for the wall of random train of thought. Let me know if I glossed over something.