It is an issue, but you can mount the fans slightly above the heatsinks and it will fit. It doesn't look as nice, but it works.

Any chance you can post a picture of it?

wow, just turned on 4 numa nodes per socket and memory bandwidth improved by 13-14% and memory latency improved by almost 10%. as far as I can tell, it hasn’t impacted cpu throughput performance. the linux scheduler seems to be pretty good about not moving tasks between cores on different numa nodes. if you’re on linux and on this board, set the numa mode to NPS4.
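After switching to NPS4, one way to confirm the kernel actually sees the extra nodes is to read `/sys/devices/system/node/online`, which holds a range list such as `0-3`. A minimal Python sketch; the helper names are hypothetical, and it assumes a Linux kernel built with NUMA support:

```python
from pathlib import Path


def parse_node_list(text: str) -> list[int]:
    """Expand a kernel range list such as "0-3" or "0,2-3" into node IDs."""
    nodes: list[int] = []
    for part in text.strip().split(","):
        if not part:
            continue  # an empty file means no entries
        if "-" in part:
            start, end = part.split("-")
            nodes.extend(range(int(start), int(end) + 1))
        else:
            nodes.append(int(part))
    return nodes


def online_numa_nodes(path: str = "/sys/devices/system/node/online") -> list[int]:
    """Read the online NUMA nodes from sysfs (Linux only)."""
    return parse_node_list(Path(path).read_text())
```

With NPS4 you would expect `online_numa_nodes()` to report four nodes per socket; with NPS1, one.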

Bandwidth increases, but latency will also increase if the application needs to cross NUMA boundaries.

Required reading:

well, yeah, but with 64GB of RAM per numa domain, that doesn’t happen terribly often. I’ve also started creating little numactl wrappers for lightly threaded applications, so they’re running on the appropriate CCD given their I/O needs. but a lot of my work happily scales to 64 threads, each accessing a dedicated chunk of RAM, so the numa nodes are pretty much always a win for me.
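A minimal sketch of what such a numactl wrapper could look like in Python. The helper names (`numactl_argv`, `run_on_node`) are hypothetical and this is not the poster's actual script; it only uses numactl's documented `--cpunodebind`, `--membind` and `--preferred` options. To find the node closest to a given device, Linux exposes `/sys/bus/pci/devices/<address>/numa_node`.

```python
import os


def numactl_argv(node: int, command: list[str], strict_membind: bool = True) -> list[str]:
    """Build an argv that confines `command` to one NUMA node's CPUs and memory."""
    argv = ["numactl", f"--cpunodebind={node}"]
    # --membind only allocates from this node; --preferred falls back to
    # other nodes when this one runs out of memory.
    argv.append(f"--membind={node}" if strict_membind else f"--preferred={node}")
    argv.extend(command)
    return argv


def run_on_node(node: int, command: list[str]) -> None:
    """Replace the current process with numactl running `command` on `node`."""
    argv = numactl_argv(node, command)
    os.execvp(argv[0], argv)  # does not return on success
```

For example, `run_on_node(2, ["fio", "job.fio"])` would exec fio with its threads and allocations kept on node 2 (`fio` and `job.fio` are placeholders). Strict `--membind` is the safer default for the "each thread owns a chunk of RAM" pattern; `--preferred` trades locality for not failing allocations when a node fills up.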

mlc results with numa off:

Intel(R) Memory Latency Checker - v3.9a

Measuring idle latencies (in ns)...

| Numa node | 0 |
|---|---|
| 0 | 83.2 |

Measuring Peak Injection Memory Bandwidths for the system

Bandwidths are in MB/sec (1 MB/sec = 1,000,000 Bytes/sec)

Using only one thread from each core if Hyper-threading is enabled

Using traffic with the following read-write ratios

| Traffic | Bandwidth (MB/sec) |
|---|---|
| ALL Reads | 173380.8 |
| 3:1 Reads-Writes | 167018.7 |
| 2:1 Reads-Writes | 169705.3 |
| 1:1 Reads-Writes | 171710.7 |
| Stream-triad like | 172038.8 |

Measuring Memory Bandwidths between nodes within system

Bandwidths are in MB/sec (1 MB/sec = 1,000,000 Bytes/sec)

Using only one thread from each core if Hyper-threading is enabled

Using Read-only traffic type

| Numa node | 0 |
|---|---|
| 0 | 173319.8 |

Measuring Loaded Latencies for the system

Using only one thread from each core if Hyper-threading is enabled

Using Read-only traffic type

| Inject Delay | Latency (ns) | Bandwidth (MB/sec) |
|---|---|---|
| 00000 | 454.40 | 173211.5 |
| 00002 | 453.72 | 173107.7 |
| 00008 | 459.06 | 171844.2 |
| 00015 | 465.98 | 168112.2 |
| 00050 | 397.91 | 167074.2 |
| 00100 | 370.15 | 167004.3 |
| 00200 | 310.15 | 166536.0 |
| 00300 | 234.81 | 167069.8 |
| 00400 | 143.10 | 155939.0 |
| 00500 | 124.26 | 129519.1 |
| 00700 | 109.46 | 94400.6 |
| 01000 | 103.33 | 66974.9 |
| 01300 | 100.77 | 51912.0 |
| 01700 | 98.96 | 40026.2 |
| 02500 | 97.50 | 27578.3 |
| 03500 | 96.99 | 19946.7 |
| 05000 | 96.30 | 14200.9 |
| 09000 | 95.85 | 8210.1 |
| 20000 | 95.28 | 4062.9 |

Measuring cache-to-cache transfer latency (in ns)...

| Transfer | Latency (ns) |
|---|---|
| Local Socket L2->L2 HIT | 19.5 |
| Local Socket L2->L2 HITM | 21.4 |

mlc results with numa on:

Intel(R) Memory Latency Checker - v3.9a

Measuring idle latencies (in ns)...

| Numa node | 0 | 1 | 2 | 3 |
|---|---|---|---|---|
| 0 | 74.4 | 81.1 | 87.4 | 89.3 |
| 1 | 83.0 | 75.6 | 89.9 | 87.5 |
| 2 | 92.7 | 89.7 | 75.5 | 80.9 |
| 3 | 90.0 | 87.6 | 81.3 | 74.7 |

Measuring Peak Injection Memory Bandwidths for the system

Bandwidths are in MB/sec (1 MB/sec = 1,000,000 Bytes/sec)

Using only one thread from each core if Hyper-threading is enabled

Using traffic with the following read-write ratios

| Traffic | Bandwidth (MB/sec) |
|---|---|
| ALL Reads | 197584.6 |
| 3:1 Reads-Writes | 189204.1 |
| 2:1 Reads-Writes | 190143.6 |
| 1:1 Reads-Writes | 191169.8 |
| Stream-triad like | 192187.3 |

Measuring Memory Bandwidths between nodes within system

Bandwidths are in MB/sec (1 MB/sec = 1,000,000 Bytes/sec)

Using only one thread from each core if Hyper-threading is enabled

Using Read-only traffic type

| Numa node | 0 | 1 | 2 | 3 |
|---|---|---|---|---|
| 0 | 50137.1 | 49701.3 | 47833.5 | 46873.1 |
| 1 | 48939.3 | 49192.4 | 46601.1 | 47654.2 |
| 2 | 47569.5 | 46526.4 | 49177.8 | 48944.1 |
| 3 | 46635.9 | 47479.7 | 48889.5 | 49226.4 |

Measuring Loaded Latencies for the system

Using only one thread from each core if Hyper-threading is enabled

Using Read-only traffic type

| Inject Delay | Latency (ns) | Bandwidth (MB/sec) |
|---|---|---|
| 00000 | 390.43 | 197451.4 |
| 00002 | 389.66 | 197494.3 |
| 00008 | 387.75 | 197569.1 |
| 00015 | 391.24 | 197550.5 |
| 00050 | 373.16 | 197982.1 |
| 00100 | 351.22 | 198478.3 |
| 00200 | 289.55 | 198254.2 |
| 00300 | 144.82 | 192632.0 |
| 00400 | 117.81 | 157979.6 |
| 00500 | 108.17 | 130175.2 |
| 00700 | 97.33 | 94440.8 |
| 01000 | 92.97 | 67108.1 |
| 01300 | 91.43 | 51970.8 |
| 01700 | 89.88 | 40165.1 |
| 02500 | 88.94 | 27654.7 |
| 03500 | 87.89 | 20015.0 |
| 05000 | 87.52 | 14228.8 |
| 09000 | 86.99 | 8270.7 |
| 20000 | 86.77 | 4135.4 |

Measuring cache-to-cache transfer latency (in ns)...

| Transfer | Latency (ns) |
|---|---|
| Local Socket L2->L2 HIT | 19.0 |
| Local Socket L2->L2 HITM | 20.8 |

this is, in the worst case (between the most distant numa nodes), only about 9.5ns worse idle latency (92.7ns vs 83.2ns) than with numa nodes per socket set to NPS1. that’s more than reasonable imo for a roughly 14% win in memory bandwidth and an almost 10% win in memory latency when the system is loaded.
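The headline percentages can be recomputed directly from the two mlc runs. This sketch only copies numbers from the tables above; "worst remote pair" is taken as the largest entry in the NPS4 idle-latency matrix (row 2, column 0), and the 0-delay row is used for loaded latency.

```python
# Figures copied from the NPS1 and NPS4 mlc runs above.
nps1_peak_bw = 173380.8        # ALL Reads, MB/sec
nps4_peak_bw = 197584.6
nps1_idle_latency = 83.2       # ns, single node
nps4_idle_local = 74.4         # ns, node 0 row, node 0 column
nps4_idle_worst = 92.7         # ns, largest entry (row 2, column 0)
nps1_loaded_latency = 454.40   # ns at inject delay 00000
nps4_loaded_latency = 390.43


def pct_change(old: float, new: float) -> float:
    """Percentage change from old to new (positive means new is larger)."""
    return (new - old) / old * 100


bw_gain = pct_change(nps1_peak_bw, nps4_peak_bw)
idle_local_gain = -pct_change(nps1_idle_latency, nps4_idle_local)
worst_remote_penalty = nps4_idle_worst - nps1_idle_latency
loaded_gain = -pct_change(nps1_loaded_latency, nps4_loaded_latency)

print(f"peak read bandwidth: +{bw_gain:.1f}%")
print(f"local idle latency: -{idle_local_gain:.1f}%")
print(f"worst remote idle latency: +{worst_remote_penalty:.1f} ns vs NPS1")
print(f"loaded latency at 0 delay: -{loaded_gain:.1f}%")
```

This works out to about +14.0% bandwidth, 10.6% lower local idle latency, a 9.5 ns penalty for the worst remote pair, and 14.1% lower latency at full load.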

Unfortunately shifting the fan up on the heatsink does not work in my case. See detailed post here:

I managed to get an order through today for anyone still trying to get hold of one.

@Kish @tristank I’m wondering whether having only one fan (due to the limitation of the board) will be enough, given the quality of the heatsink plus good intake/exhaust fans in the case itself?

how do you actually use the VNC on this board? IPMI claims to provide it but I can’t actually connect with any clients.

Are you asking how to get to the BMC interface? If so, look at the DHCP leases on your router to get the IP. The OUI for the BMC NIC will show up as “ASRock Rack”. Or you can get the IP from the BIOS, on the BMC tab.

Keep in mind that the BMC takes a while to boot up, so give it a good 5 minutes or so after turning on the power switch on the PSU to fully boot.

Or are you asking how to access the remote viewer of the BMC? Go to the Remote Viewer tab once you’re logged into the BMC UI. You can use either the HTML5 client or Java.

Note: the viewer only works if you don’t have an external graphics card installed, or, if you do have one installed, if no monitor is connected to it. I don’t think you can have both a monitor attached and use the remote viewer, as the BMC is unable to redirect the video.

no, I managed to connect via KVM fine but the IPMI settings in the portal claim there’s a VNC service running on port 5901 by default. I was trying to see if I could connect to that to get a bit better interface than the serial console.

Which page on the BMC UI do you see that on? I can’t find any mention of VNC on mine.

Edit: found it. It’s under Settings → Services. “VNC” is shown as “active” on port 5901. Yeah, not sure about that. FWIW, the HTML5 remote viewer works great for me, good quality and no latency.

Settings → Services. it’s the last one on the list.
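To check whether anything actually answers on the advertised port, a small Python probe can connect and read the 12-byte RFB version string a VNC server sends first (for example `RFB 003.008`). The function name and the 3-second timeout are illustrative assumptions; the host is the BMC IP found via DHCP or the BIOS.

```python
import socket
from typing import Optional


def probe_vnc(host: str, port: int = 5901, timeout: float = 3.0) -> Optional[str]:
    """Return the RFB version banner if a VNC server answers, else None."""
    try:
        with socket.create_connection((host, port), timeout=timeout) as sock:
            banner = b""
            # The RFB ProtocolVersion message is exactly 12 bytes long.
            while len(banner) < 12:
                chunk = sock.recv(12 - len(banner))
                if not chunk:
                    break  # server closed the connection early
                banner += chunk
    except OSError:  # refused, unreachable, or timed out
        return None
    if banner.startswith(b"RFB "):
        return banner.decode("ascii", "replace").strip()
    return None
```

A `None` result means nothing accepted the connection or whatever answered did not speak RFB.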

enabling the serial console makes reboots unbearably slow though, and I’m having weird issues accessing ttys/ptys in linux (I think literally all of them are getting allocated to the serial graphics device??). so I was hoping this would be a less intrusive alternative.

I have seen folks claim they got PassMark MemTest86 to work. The latest version, v10.00, just hangs at getting memory controller details; I waited for hours. I tried an older version, and it got stuck gathering memory details. I was able to get memtest86+ to work, but I am more familiar with the PassMark version and prefer its finer control.

My intended application is a desktop workstation, so I have no need for server or remote access functions. I want to put the computer to sleep, but there is no option to do that in Windows 10 Pro: Power Options only allows shutdown and restart. I can understand no sleep for a server, but I can’t find any settings in the BIOS to enable sleep. ASRock tech support has been useless, pointing me to Windows settings and telling me to contact Microsoft! They ignore my questions as to whether there are BIOS settings to change. Yeah, that’s why I spent $1000 on their products, for this level of support! Has anyone gotten sleep to work with the R2.0 version? I have a single GTX 1660 Super installed with the latest Nvidia drivers.

And finally, how does one control and monitor fans? I have set them to full speed in the BIOS and disabled the BMC, but they aren’t running at full speed! I don’t see anything listed in the H/W Monitor section, though I seem to recall seeing stuff listed at some point. Also, the AiTuning utility in Windows shows nothing under the systeminfo tab.

TiA for any help!!

how does one control and monitor fans?

For monitoring:

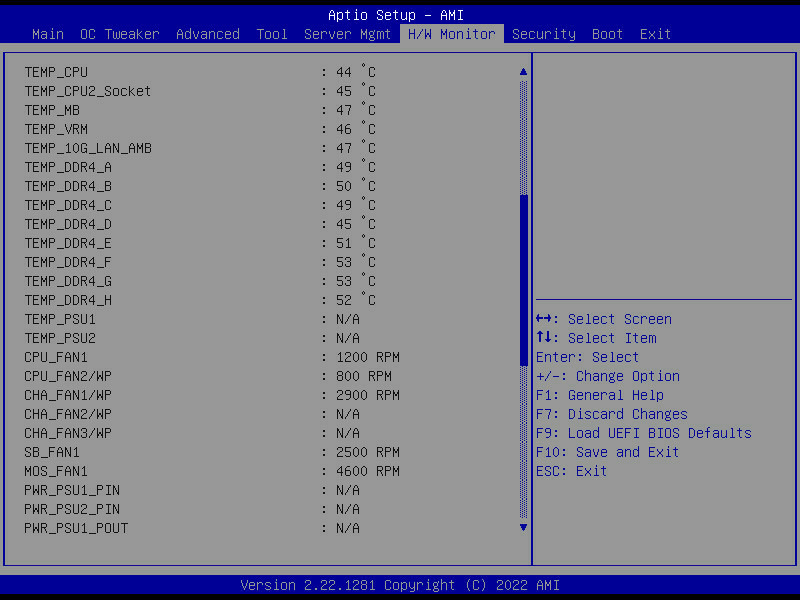

In BIOS. Under H/W Monitor tab:

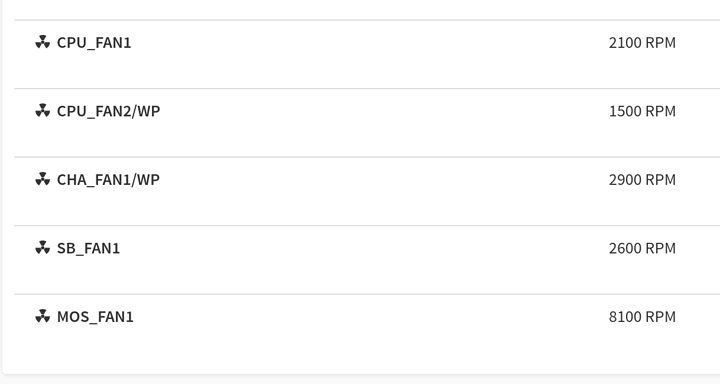

In the BMC. Under Sensor → Normal Sensors:

For control:

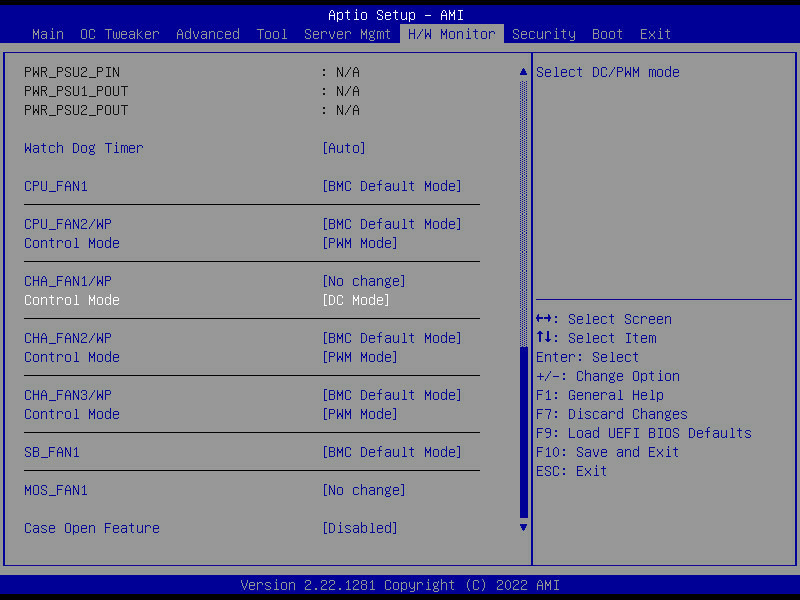

In BIOS. HW Monitor → Scroll down:

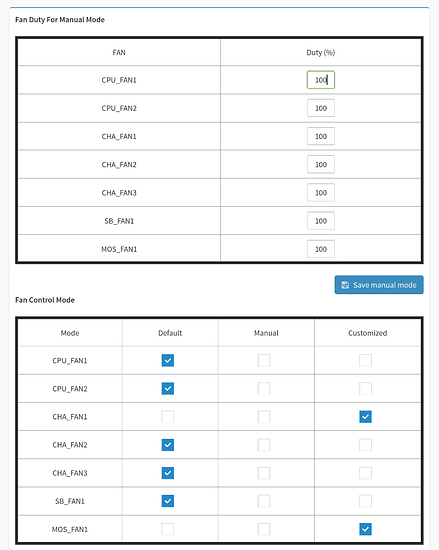

In BMC. Settings → Fan Settings → Fan Mode:

I have set them to full speed in BIOS and disabled BMC, but they aren’t running at full speed!

Make sure you have DC or PWM mode set correctly for each fan header. After that, you can use either BIOS or BMC to control the fan RPM.
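If you want fan readings outside the BIOS and web UI, the BMC's sensors can also be read with `ipmitool sdr type Fan`, either in-band on Linux or remotely over the LAN interface. The parser below is a sketch that assumes ipmitool's pipe-separated SDR rows, with the reading (for example `1300 RPM`) in the last column; the function names are hypothetical and ipmitool must be installed.

```python
import subprocess
from typing import Dict, Optional


def parse_fan_sdr(output: str) -> Dict[str, Optional[float]]:
    """Map fan sensor names to RPM; sensors without a reading map to None."""
    fans: Dict[str, Optional[float]] = {}
    for line in output.splitlines():
        cols = [c.strip() for c in line.split("|")]
        if len(cols) < 2 or not cols[0]:
            continue  # skip blank or malformed lines
        name, reading = cols[0], cols[-1]
        fans[name] = float(reading.split()[0]) if reading.endswith("RPM") else None
    return fans


def read_fans(host: str, user: str, password: str) -> Dict[str, Optional[float]]:
    """Query the BMC over the network via ipmitool's lanplus interface."""
    result = subprocess.run(
        ["ipmitool", "-I", "lanplus", "-H", host, "-U", user, "-P", password,
         "sdr", "type", "Fan"],
        capture_output=True, text=True, check=True,
    )
    return parse_fan_sdr(result.stdout)
```

Polling this while changing the DC/PWM setting for a header is a quick way to see whether the mode change actually took effect.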

Yes, this option is there for x8x8 or x4x4x4x4.

I also had issues with fans when they were set to Auto. My fans did not spin up below 40% speed, and since I got Noctua IndustrialPPC 3000 rpm fans, they’re already “a bit” loud at 40%, so it was really problematic. Switching all fans to PWM mode solved the issue and now everything is quiet. Well… as quiet as industrial 3000 rpm fans running at 1000 rpm can be…

I have the exact same problem with the lack of support for sleep mode.

The manual shows two options, “Suspend to RAM” and “Deep sleep”, but at least in the current BIOS (6.09) these options are not present.

I have already contacted support but I am still waiting for their reply.

I even reset CMOS to ensure that no random option was conflicting with the sleep mode, but it didn’t solve it.

Just got a reply from ASRock saying that suspend to RAM is not supported “in hardware by design”.

I would be mindful if you are planning to buy this motherboard. Depending on how you expect to use it, this might not be the board for you. If you are planning to use it as a workstation (which I would guess is the most common use), then do not buy it.

If you are planning to use it as a server and need to use all x16 slots, I would also be careful. I am still waiting for their reply on my issues with slots #5 and #7. My current guess is that the PCIe traces are not good enough to sustain Gen 4 x16, since I can run x4 Gen 2 capture cards without issues (could it be a redriver issue?).

If I were buying now, I would go for the Gigabyte WRX80-SU8-IPMI (if you manage to get one and need TB support).