Because on Ryzen, there aren’t many PCIe lanes available… Consumer Ryzen has 24 PCIe lanes. After reserving 16 lanes for the GPU, there are only 8 left. It makes no sense to split them into that many PCIe slots, as PCIe 3.0 x1 is just 1 Gbps, definitely not enough for Fibre Channel, HBA cards…

Gen3 x1 is 1 gigabyte/sec, not 1 gigabit/sec—and X570/B550 gives you Gen4, so double that.
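Here is a quick sketch of that arithmetic. It assumes the nominal per-lane rates (8 GT/s for Gen3, 16 GT/s for Gen4) and the 128b/130b line encoding both generations use. It ignores packet and protocol overhead, so real throughput is a bit lower.

```python
# Nominal per-lane transfer rates in GT/s; Gen3 and Gen4 both use 128b/130b encoding.
TRANSFER_RATE_GT_S = {3: 8.0, 4: 16.0}


def pcie_bandwidth_gb_s(gen: int, lanes: int) -> float:
    """Approximate one-direction bandwidth in GB/s (ignores protocol overhead)."""
    raw_gbit_s = TRANSFER_RATE_GT_S[gen] * lanes  # one bit per transfer per lane
    payload_gbit_s = raw_gbit_s * 128 / 130       # strip the line-encoding overhead
    return payload_gbit_s / 8                     # bits -> bytes


if __name__ == "__main__":
    for gen in (3, 4):
        for lanes in (1, 4, 16):
            print(f"Gen{gen} x{lanes}: ~{pcie_bandwidth_gb_s(gen, lanes):.2f} GB/s")
```

Gen3 x1 works out to roughly 0.98 GB/s and Gen4 x1 to roughly 1.97 GB/s.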

So yeah, maybe not enough for a big HBA, but plenty for an extra NIC, USB controller, etc. Anyway, x1 slots tend to hang off the PCH (and share an x4 link to the CPU), so they’re not relevant when discussing CPU PCIe lane allocation. The PCH provides 16 lanes of its own for USB, SATA, etc., and these would be counted against that. Many full-sized boards also provide a third full- or half-length slot that runs at x4 (or x8 on at least one board that I know of) from the PCH.

4 CPU PCIe lanes are reserved for the PCH and 4 are typically allocated to the primary storage device, but the remaining 16 can be divided up a number of ways; they aren’t necessarily reserved for the GPU. Most middle-tier or higher consumer boards will at least let you do x8/x8 and some will let you do x8/x4/x4 or x4/x4/x4/x4. x4 links can be allocated to PCIe expansion or M.2 slots. So lots of flexibility there.
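The split options described above can be modelled as a tiny lane-budget check. This is a hypothetical sketch. The mode list mirrors the post, but which modes a given board actually offers in its BIOS is an assumption you would need to confirm. The installed CPU also matters, since APUs may support fewer modes.

```python
# Ways the 16 flexible CPU lanes can be split, per the post above.
# Which of these a specific board/CPU combination exposes is an assumption.
BIFURCATION_MODES = [(16,), (8, 8), (8, 4, 4), (4, 4, 4, 4)]


def pick_mode(device_widths):
    """Return the first mode that gives every device at least its full link width.

    Widest devices are paired with widest slots, which is enough to decide
    whether any valid assignment exists. Returns None if no mode fits.
    """
    wanted = sorted(device_widths, reverse=True)
    for mode in BIFURCATION_MODES:
        slots = sorted(mode, reverse=True)
        if len(wanted) <= len(slots) and all(w <= s for w, s in zip(wanted, slots)):
            return mode
    return None


if __name__ == "__main__":
    print(pick_mode([8, 8]))     # GPU + SAS HBA, both at x8
    print(pick_mode([8, 4, 4]))  # GPU plus two x4 devices
    print(pick_mode([16, 4]))    # full x16 GPU plus anything else: no fit
```

For the build in the next paragraph (a GPU and a SAS HBA at x8 each), `pick_mode([8, 8])` returns `(8, 8)`.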

At one point last year I had a full-sized X570 board kitted out with a GPU (CPU x8), SAS HBA (CPU x8), M.2 NVMe (CPU x4), 10GbE NIC (PCH x4), M.2 NVMe (PCH x4), Intel GbE NIC (PCH x1), and a Renesas USB 3.0 controller (PCH x1). So no, it’s not HEDT—and the PCH link was probably bottlenecking I/O under certain conditions—but there’s a lot you could do with a full-sized server board based on this platform.
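A back-of-the-envelope way to see why the PCH link can bottleneck: add up the peak demand of everything hanging off the chipset and compare it with the chipset's uplink, which is PCIe 4.0 x4 on X570. The device figures below are assumptions for illustration. The NICs and the USB controller use nominal line rates, and the PCH-attached NVMe drive is assumed to be a Gen4 x4 drive running flat out. The onboard SATA ports and USB, which share the same uplink, aren't even counted.

```python
# X570 chipset uplink: PCIe 4.0 x4, after 128b/130b encoding, in GB/s.
PCH_UPLINK_GB_S = 16.0 * 4 * 128 / 130 / 8

# Hypothetical peak demand per direction, in GB/s, for the PCH devices above.
pch_devices = {
    "10GbE NIC": 10 / 8,
    "M.2 NVMe (PCH x4, assumed Gen4 at full speed)": PCH_UPLINK_GB_S,
    "Intel GbE NIC": 1 / 8,
    "USB 3.0 controller (5 Gbit/s)": 5 / 8,
}


def oversubscription(devices: dict, uplink: float) -> float:
    """Ratio of combined peak downstream demand to uplink bandwidth."""
    return sum(devices.values()) / uplink


if __name__ == "__main__":
    ratio = oversubscription(pch_devices, PCH_UPLINK_GB_S)
    print(f"uplink ~{PCH_UPLINK_GB_S:.2f} GB/s, oversubscribed {ratio:.2f}x")
```

With these assumed numbers the ratio comes out at about 1.25x. The devices could only saturate the uplink if they were all busy at once, which fits "under certain conditions".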

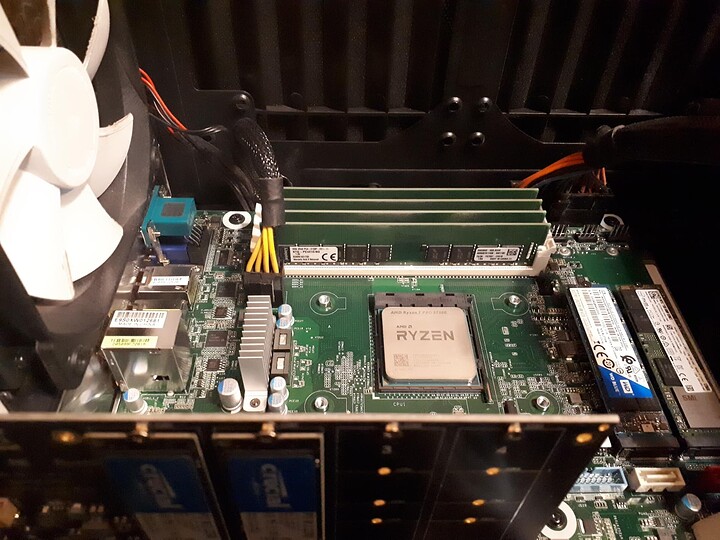

On the (2L)2T versions of the X470D4U/X570D4U, the x4 PCH link that I mentioned above is used for the dual 10GbE NIC. My main beef with the X570D4U non-2L2T is that those four lanes are simply not allocated. I would have liked to see a x4 expansion slot instead of the x1 that’s on there, like they did with the X470D4U non-2T. It’s also frustrating that there aren’t more USB ports, but I think the BMC ties up a number of PCH lanes, so it is what it is.

Hi,

I’m currently experiencing two curious problems with the X470D4U with Proxmox (Debian 11):

- A Hardware Error in the kernel log

- SATA problems: hard disks drop out

The Hardware Error looks like the following:

[Sat Oct 8 14:31:02 2022] mce: [Hardware Error]: Machine check events logged

[Sat Oct 8 14:31:02 2022] [Hardware Error]: Corrected error, no action required.

[Sat Oct 8 14:31:02 2022] [Hardware Error]: CPU:1 (19:21:0) MC25_STATUS[-|CE|-|-|-|-|-|-|-]: 0x8000001a522001e3

[Sat Oct 8 14:31:02 2022] [Hardware Error]: IPID: 0x0000000000000000

[Sat Oct 8 14:31:02 2022] [Hardware Error]: Platform Security Processor Ext. Error Code: 32

[Sat Oct 8 14:31:02 2022] [Hardware Error]: cache level: L3/GEN, tx: INSN, mem-tx: Wrong R4!
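The kernel has already decoded this, but the raw MC25_STATUS value can also be picked apart by hand. This is a minimal sketch that assumes the AMD MCA_STATUS field layout used by the Linux AMD MCE decoder (drivers/edac/mce_amd.c), and it only decodes a handful of fields. For 0x8000001a522001e3 it gives Val=1, UC=0 (corrected), an extended error code of 32, and error code 0x01e3. That error code is in the "memory" format (INSN, L3/GEN). Its R4 nibble is 0xE, which has no defined name, presumably the reason for "Wrong R4!".

```python
TT_NAMES = ("INSN", "DATA", "GEN", "RESV")  # transaction type (TT)
LL_NAMES = ("L0", "L1", "L2", "L3/GEN")     # cache level (LL)


def decode_mca_status(status: int) -> dict:
    """Extract a few architectural fields from a 64-bit MCA_STATUS value."""

    def bit(n: int) -> bool:
        return bool((status >> n) & 1)

    fields = {
        "valid": bit(63),
        "overflow": bit(62),
        "uncorrected": bit(61),
        "addr_valid": bit(58),
        "pcc": bit(57),  # processor context corrupt
        "error_code_ext": (status >> 16) & 0x3F,
        "error_code": status & 0xFFFF,
    }
    code = fields["error_code"]
    if (code & 0xFF00) == 0x0100:  # "memory" error code format: 0000 0001 RRRR TTLL
        fields["r4"] = (code >> 4) & 0xF
        fields["tt"] = TT_NAMES[(code >> 2) & 0x3]
        fields["ll"] = LL_NAMES[code & 0x3]
    return fields


if __name__ == "__main__":
    for name, value in decode_mca_status(0x8000001A522001E3).items():
        print(f"{name}: {value}")
```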

This happens on only one of the servers, roughly every 2-4 weeks. Note, however, that the two servers use different memory and SATA disks:

On the server with SATA errors but no hardware errors:

2* Samsung M391A4G43MB1-CTD

8* TOSHIBA MG07ACA1 (Onboard SATA ports, ZFS pool)

On the server with hardware errors:

4* Samsung M391A4G43AB1-CWE

6* Toshiba MG06ACA800E (Onboard SATA ports, ZFS pool)

In both cases the CPU is an AMD Ryzen 5 5600X.

The SATA error looks like the following:

On the server with the hardware errors:

[Sun Oct 9 11:43:04 2022] ahci 0000:03:00.1: AMD-Vi: Event logged [IO_PAGE_FAULT domain=0x000d address=0x982a0000 flags=0x0000]

[Sun Oct 9 11:43:04 2022] ata1.00: exception Emask 0x10 SAct 0xa0101000 SErr 0x0 action 0x6 frozen

[Sun Oct 9 11:43:04 2022] ata1.00: irq_stat 0x08000000, interface fatal error

[Sun Oct 9 11:43:04 2022] ata1.00: failed command: READ FPDMA QUEUED

[Sun Oct 9 11:43:04 2022] ata1.00: cmd 60/10:60:f1:89:27/00:00:6c:00:00/40 tag 12 ncq dma 8192 in

res 40/00:60:f1:89:27/00:00:6c:00:00/40 Emask 0x10 (ATA bus error)

[Sun Oct 9 11:43:04 2022] ata1.00: status: { DRDY }

→ This happened only once in 3 months. Essentially the ZFS pool died because the disks were no longer accessible. A complete power-down and power-up solved the problem.

On the server without the hardware errors:

Jul 5 03:37:55 merope kernel: [2813472.599047] ata10.00: invalid checksum 0x38 on log page 10h

Jul 5 03:37:55 merope kernel: [2813472.599051] ata10: log page 10h reported inactive tag 1

Jul 5 03:37:55 merope kernel: [2813472.599067] ata10.00: exception Emask 0x1 SAct 0x20000000 SErr 0x0 action 0x0

Jul 5 03:37:55 merope kernel: [2813472.599081] ata10.00: irq_stat 0x40000008

Jul 5 03:37:55 merope kernel: [2813472.599090] ata10.00: failed command: READ FPDMA QUEUED

Jul 5 03:37:55 merope kernel: [2813472.599101] ata10.00: cmd 60/08:e8:68:d7:6e/00:00:b7:03:00/40 tag 29 ncq dma 4096 in

Jul 5 03:37:55 merope kernel: [2813472.599101] res 40/00:e8:68:d7:6e/00:00:b7:03:00/40 Emask 0x1 (device error)

Jul 5 03:37:55 merope kernel: [2813472.599132] ata10.00: status: { DRDY }

Jul 5 03:37:55 merope kernel: [2813472.600844] ata10.00: n_sectors mismatch 27344764928 != 0

Jul 5 03:37:55 merope kernel: [2813472.600847] ata10.00: revalidation failed (errno=-19)

Jul 5 03:37:55 merope kernel: [2813472.601196] ata10: limiting SATA link speed to 3.0 Gbps

Jul 5 03:37:55 merope kernel: [2813472.601197] ata10.00: limiting speed to UDMA/100:PIO3

Jul 5 03:37:55 merope kernel: [2813472.601198] ata10: hard resetting link

Jul 5 03:37:56 merope kernel: [2813473.078722] ata10: SATA link up 3.0 Gbps (SStatus 123 SControl 320)

Jul 5 03:37:56 merope kernel: [2813473.079002] ata10.00: failed to IDENTIFY (INIT_DEV_PARAMS failed, err_mask=0x80)

Jul 5 03:37:56 merope kernel: [2813473.079005] ata10.00: revalidation failed (errno=-5)

Jul 5 03:37:56 merope kernel: [2813473.079018] ata10.00: disabled

Jul 5 03:37:59 merope kernel: [2813476.199631] sd 9:0:0:0: rejecting I/O to offline device

Jul 5 03:37:59 merope kernel: [2813476.199632] blk_update_request: I/O error, dev sdh, sector 16070326032 op 0x1:(WRITE) flags 0x700 phys_seg 1 prio class 0

→ The scenario seems slightly different: every 3-5 days, one disk drops out with this message. The ZFS pool keeps working in degraded mode until more than 2 disks are gone. A complete power-down and power-up also solves the problem for a while.
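To see whether such errors keep hitting the same port (which points at a cable, bay or drive) or move around (which points more towards the controller), the kernel log can be tallied per ata port. This is a minimal sketch that assumes the libata message formats shown in the logs above. The log file is one you export yourself, e.g. with `journalctl -k > kernel.log`.

```python
import re
import sys
from collections import Counter

# libata message formats as they appear in the logs above.
EXCEPTION_RE = re.compile(r"\b(ata\d+)\.\d+: exception Emask")
DOWNGRADE_RE = re.compile(r"\b(ata\d+): limiting SATA link speed to ")


def summarize_ata_errors(log_text: str):
    """Count libata exceptions and link-speed downgrades per ata port."""
    exceptions = Counter()
    downgrades = Counter()
    for line in log_text.splitlines():
        if m := EXCEPTION_RE.search(line):
            exceptions[m.group(1)] += 1
        if m := DOWNGRADE_RE.search(line):
            downgrades[m.group(1)] += 1
    return exceptions, downgrades


if __name__ == "__main__" and len(sys.argv) > 1:
    with open(sys.argv[1]) as f:
        exc, down = summarize_ata_errors(f.read())
    for port in sorted(set(exc) | set(down)):
        print(f"{port}: {exc[port]} exceptions, {down[port]} speed downgrades")
```

Usage: `python3 ata_summary.py kernel.log` (the script name is arbitrary).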

I upgraded Proxmox to the latest version. In the 27 days since, no disk has dropped out, so maybe the problem is related to the Linux kernel in some way?

Do you have any advice?

Best Regards,

Hermann

Could be a memory issue. Have you checked whether adding more RAM solves the problem? ZFS is a heavy RAM user; RAM is its primary cache (the ARC).

Thanks for your reply. Yes, I have experienced such behavior before. However, one machine has 64 GB of RAM and only runs Proxmox Backup; the other has 128 GB, of which the VMs consume approx. 32 GB. So there should be plenty of RAM.

“top” does not indicate that the machine runs out of RAM, so I doubt that this is the reason. Moreover, I can imagine a lack of RAM leading to processes being killed, but it is unclear to me how it could cause SATA or hardware errors.
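As far as I know, on Linux the ZFS ARC is accounted as used kernel memory rather than as buff/cache, so `top` alone doesn't say much about how much RAM ZFS is actually holding. This is a minimal sketch that reads the ARC statistics OpenZFS exposes at /proc/spl/kstat/zfs/arcstats. It assumes the usual kstat layout: a header line, a "name type data" line, then one row per statistic. It reports the current ARC size against its configured maximum, `c_max`.

```python
import os

ARCSTATS_PATH = "/proc/spl/kstat/zfs/arcstats"


def parse_arcstats(text: str) -> dict:
    """Parse kstat rows ('name type data') into {name: int}, skipping the two header lines."""
    stats = {}
    for line in text.splitlines()[2:]:
        parts = line.split()
        if len(parts) != 3:
            continue
        try:
            stats[parts[0]] = int(parts[2])  # some values (type 3) can be negative
        except ValueError:
            continue
    return stats


def format_arc_usage(stats: dict) -> str:
    """Human-readable current ARC size versus its configured ceiling."""
    gib = 1024 ** 3
    return f"ARC size {stats['size'] / gib:.1f} GiB, c_max {stats['c_max'] / gib:.1f} GiB"


if __name__ == "__main__" and os.path.exists(ARCSTATS_PATH):
    with open(ARCSTATS_PATH) as f:
        print(format_arc_usage(parse_arcstats(f.read())))
```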

Best Regards,

Hermann

This is a plain ECC error - you had a bit flip that was corrected and logged.

If it’s happening regularly, I’d try re-seating the DIMMs and/or the CPU, and if that doesn’t help, I would assume that one of the DIMMs is faulty.

There should be some additional EDAC messages in the syslog that would look like this:

EDAC MC0: 1 CE Cannot decode normalized address on mc#0csrow#3channel#0 (csrow:3 channel:0 page:0x0 offset:0x0 grain:64 syndrome:0x0)

or this:

EDAC MC1: CE page ..., offset ... grain 0, syndrome ..., row 6, channel 0

Then just google “Identify DIMM from EDAC” and you should be able to pinpoint which DIMM it was.
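If such messages show up, a quick way to see whether they cluster on one memory controller/row/channel (and therefore likely one DIMM) is to count them. This is a minimal sketch that assumes the two message formats quoted above. It counts matching lines, not the per-message error counts. How MC/csrow/channel numbers map to the physical slot labels is board-specific, so this only narrows things down.

```python
import re
import sys
from collections import Counter

# Matches both styles quoted above: "csrow:3 channel:0" and "row 6, channel 0".
EDAC_CE_RE = re.compile(r"EDAC MC(\d+):.*?\bCE\b.*?(?:csrow:|row )(\d+),? channel[: ](\d+)")


def count_corrected_errors(log_text: str) -> Counter:
    """Count corrected-error lines per (memory controller, row, channel)."""
    counts = Counter()
    for line in log_text.splitlines():
        m = EDAC_CE_RE.search(line)
        if m:
            mc, row, channel = (int(g) for g in m.groups())
            counts[(mc, row, channel)] += 1
    return counts


if __name__ == "__main__" and len(sys.argv) > 1:
    with open(sys.argv[1]) as f:
        for (mc, row, channel), n in count_corrected_errors(f.read()).most_common():
            print(f"MC{mc} row {row} channel {channel}: {n} CE line(s)")
```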

Edit: I would also check that the DIMM isn’t touching the CPU heatsink or any cables.

Edit 2: You could also try removing the DIMMs, powering up, powering down, and then adding the DIMMs back to force memory retraining (or just reset the CMOS, but that’s a pain if you have changed a lot of BIOS options).

For the SATA errors I have less confidence. I’d say it may be a bad cable or a bad backplane/adapter, especially if you are using consumer ones. For example, the Enermax hot-swap bays I had were all hot garbage, randomly downgrading to SATA I or SATA II.

I see that you are also getting downgraded to SATA II (SATA link up 3.0 Gbps).
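The "SATA link up 3.0 Gbps (SStatus 123 SControl 320)" line in the log can be decoded to confirm this. This is a minimal sketch that assumes the standard SATA SStatus/SControl layout (DET in bits 3:0, SPD in bits 7:4, IPM in bits 11:8). It also assumes that the kernel prints both values in hex. SStatus shows an established Gen2 link. SControl's SPD field of 2 means the link has been capped at Gen2, which matches the preceding "limiting SATA link speed to 3.0 Gbps" message.

```python
SPEEDS = {1: "1.5 Gbps (SATA I)", 2: "3.0 Gbps (SATA II)", 3: "6.0 Gbps (SATA III)"}


def decode_sstatus(value: int) -> dict:
    """SStatus: DET bits 3:0, SPD (negotiated speed) bits 7:4, IPM bits 11:8."""
    return {
        "det": value & 0xF,  # 3 = device present, PHY communication established
        "spd": SPEEDS.get((value >> 4) & 0xF, "no negotiated speed"),
        "ipm": (value >> 8) & 0xF,  # 1 = interface in active state
    }


def decode_scontrol(value: int) -> dict:
    """SControl: DET bits 3:0, SPD (speed limit) bits 7:4, IPM bits 11:8."""
    return {
        "det": value & 0xF,  # 0 = no action requested
        "spd_limit": SPEEDS.get((value >> 4) & 0xF, "no restriction"),  # 0 = no limit
        "ipm": (value >> 8) & 0xF,  # 3 = partial and slumber transitions disabled
    }


if __name__ == "__main__":
    # Values copied from the "SATA link up" line above; the kernel prints them in hex.
    print(decode_sstatus(int("123", 16)))
    print(decode_scontrol(int("320", 16)))
```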

It may also just be a dying drive.

If it’s one enclosure or a single drive, I’d try connecting it directly with a new SATA cable, preferably to a different controller, to check whether it’s the drive or something else (the X470D4U has some SATA ports on the PCH and some on a separate ASMedia ASM1061 chip).
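Each disk's resolved sysfs path contains both its ata port and the PCI address of its controller. That lets you check which controller a disk actually sits on, and match kernel names like `ata10` in the log to a device like `sdh`. This is a minimal sketch that assumes the usual /sys/block symlink layout for libata disks. Disks behind USB or other non-libata paths simply show no ata port. `lspci -s <address>` then tells you whether an address belongs to the chipset's AHCI controller or to the ASMedia chip.

```python
import os
import re

# PCI function addresses like 0000:03:00.1, each followed by another path component.
PCI_ADDR_RE = re.compile(r"/([0-9a-f]{4}:[0-9a-f]{2}:[0-9a-f]{2}\.[0-7])(?=/)")
ATA_PORT_RE = re.compile(r"/(ata\d+)/")


def controller_for_path(sysfs_path: str):
    """Return (ata port, PCI address of the closest controller) for a resolved sysfs path."""
    ata = ATA_PORT_RE.search(sysfs_path)
    pci = PCI_ADDR_RE.findall(sysfs_path)
    return (ata.group(1) if ata else None, pci[-1] if pci else None)


def map_disks(sys_block: str = "/sys/block") -> dict:
    """Resolve each /sys/block/sd* symlink and map the disk to its port and controller."""
    mapping = {}
    for name in sorted(os.listdir(sys_block)):
        if name.startswith("sd"):
            real = os.path.realpath(os.path.join(sys_block, name))
            mapping[name] = controller_for_path(real)
    return mapping


if __name__ == "__main__" and os.path.isdir("/sys/block"):
    for disk, (port, pci) in map_disks().items():
        print(f"{disk}: {port or '-'} on {pci or '-'}")
```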

Upgraded my TrueNAS box: replaced the Ryzen 1600 with a 5750G.

Two NVMe drives on the x4x4x4x4 card in the top slot stopped working.

It took some trial and error to figure out. Apparently x8x4x4 means x4x4 for the bottom slot.

Idles a bit lower (~14W is router)

Hello all!

I just snagged two of these motherboards to upgrade my existing homelab virtualization setup. I am going to be using them with Ryzen PRO 4750G processors. I tried looking into this but couldn’t find anything conclusive. Can anyone confirm whether this motherboard supports the Secure Memory Encryption (SME) feature provided by the Ryzen PRO series processors? According to the manual, the option doesn’t seem to be offered, unless support was added after the manual was written.

If the motherboard doesn’t offer explicit support, has anybody tried using SME on Linux with this motherboard anyway? From my research, it seems like some people are able to get it working on motherboards that don’t offer explicit support. I am going to try to get it working either way, but I am curious if anyone else has barked up this tree.

@AbsolutelyFree Yes, SME works on Linux with this board, but there is a problem with it. Depending on the kernel arguments, the corruption described in these bugs may or may not cause issues, but it’s good to keep in mind:

- FS#65869 : [linux-hardened] Kernel panic caused by non zeroed-free pages

- 206963 – non-zeroed pages when using CONFIG_INIT_ON_FREE_DEFAULT_ON and AMD SME

If you can, please check whether you also experience those random panics; the 4000 series may have no issues. (Also, the problems only occur when booting in UEFI mode.)

The prerequisite is a kernel built with the following option:

CONFIG_AMD_MEM_ENCRYPT=y (check, for example, in /proc/config.gz)

Then you probably need to make sure you have the following kernel argument: mem_encrypt=on
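These checks can be scripted. This is a minimal sketch that assumes the usual Linux file locations. /proc/config.gz only exists if the kernel was built with CONFIG_IKCONFIG_PROC, and Debian-based kernels such as Proxmox's usually ship /boot/config-$(uname -r) instead, so the sketch tries both. The `sme` CPU flag check is only a rough extra hint; whether the flag is shown can depend on the kernel version and on whether the BIOS option is enabled.

```python
import gzip
import os


def kernel_config_has(config_text: str, option: str = "CONFIG_AMD_MEM_ENCRYPT") -> bool:
    """True if the kernel config sets the option to 'y'."""
    return f"{option}=y" in config_text.splitlines()


def cmdline_has(cmdline: str, arg: str = "mem_encrypt=on") -> bool:
    """True if the exact argument appears on the kernel command line."""
    return arg in cmdline.split()


def cpu_flag_present(cpuinfo: str, flag: str = "sme") -> bool:
    """True if the first 'flags' line of /proc/cpuinfo lists the flag."""
    for line in cpuinfo.splitlines():
        if line.startswith("flags"):
            return flag in line.split(":", 1)[1].split()
    return False


def read_kernel_config() -> str:
    """Try /proc/config.gz first, then the Debian-style /boot/config-<release>."""
    if os.path.exists("/proc/config.gz"):
        with gzip.open("/proc/config.gz", "rt") as f:
            return f.read()
    path = f"/boot/config-{os.uname().release}"
    if os.path.exists(path):
        with open(path) as f:
            return f.read()
    return ""


if __name__ == "__main__" and os.path.exists("/proc/cmdline"):
    print("CONFIG_AMD_MEM_ENCRYPT=y:", kernel_config_has(read_kernel_config()))
    with open("/proc/cmdline") as f:
        print("mem_encrypt=on:", cmdline_has(f.read()))
    with open("/proc/cpuinfo") as f:
        print("sme flag:", cpu_flag_present(f.read()))
```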

@Remisc Thanks for the confirmation! I have the motherboards and one of the processors so far. I did find the option to enable the feature in the latest BIOS. I will test further and report back once I am able to. I am planning on running Proxmox on both of them.

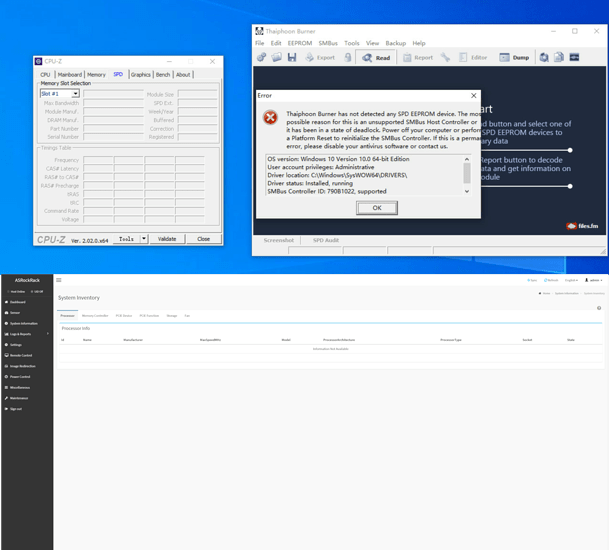

I keep having issues with the ECC RAM that I purchased for these systems throwing errors in MemTest86 and it’s really throwing a monkey wrench in all of my plans. The amount of cash I have tied up in returns right now is infuriating.

Anyone know if this thing is EOL or just really low stock?

I can only find a single shop here (for the last few days none at all) and they are asking absurd prices (~500€ when it was at 230 in the past and 270-ish just a couple months ago).

On the ASRock website it’s not marked as EOL so I wouldn’t think so but who knows…

Was talking a bit with my sister and got in the mood again to finally build this thing buuuuuutttttt not at that price

Same with the X570 variant by the way.

If it actually is EOL (or generally I guess) anyone got an alternative? I don’t necessarily need IPMI although it would be nice. The X570 variant doesn’t officially support Ryzen 2000 (which is what’s going in it), so there’s that.

Found the Asus Pro B550M-C/CSM, which looks promising. But it’s obviously a B550 chipset and I’m not sure what the impact on a NAS would be. Or maybe an ASRock X570M Pro4, which seems more similar to this one spec-wise, although it does have a chipset fan.

I had instability on some sticks when running the default JEDEC frequency.

Try increasing or decreasing it a bit. For example, if it defaults to 2133, I’d try setting it to 2400, 2666 or 1866.

Yes, OC may be more stable. At least, I saw this on my board with 2 dual-rank sticks defaulting to 2400, which showed some ECC errors. Going to either 2133 or 2666 solved it.

But be aware that the frequencies in the BIOS are not doubled (Double Data Rate), so if you want to set it to, for example, 2400, you need to select 1200.
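Put as a formula: DDR transfers data on both clock edges, so the "2400" in DDR4-2400 is really 2400 MT/s. The clock value this BIOS asks for is half of that:

```latex
f_{\text{BIOS}} = \frac{\text{data rate}}{2}
\quad\Rightarrow\quad
\frac{2400\ \text{MT/s}}{2} = 1200\ \text{MHz},
\qquad
\frac{2666\ \text{MT/s}}{2} \approx 1333\ \text{MHz}
```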

The cheapest I can find it locally (Poland) is ~380 EUR:

But yeah, according to the Geizhals EU price comparison for the ASRock Rack X470D4U (from €465.83 as of 2022), the price jumped from ~320 EUR just last week.

Yeah, that’s what I’m looking at as well. That’s usually just when most shops drop off the list, though, and only the most expensive remain, so the graph is skewed a bit.

Anyway, even 380 is wild.

I really hope it’s just a supply shortage and more stock is just stuck on a container ship somewhere. I’d be kicking myself if I had waited this long out of laziness and then missed out…

Good to know that I am not alone with RAM instability on these boards. What led me to believe that the issue was the RAM and not the board was that I could get a successful run of 4 passes of MemTest86 on 2x 16GB 3200 MHz non-ECC sticks, but not on the 2x 32GB 3200 MHz ECC sticks that I bought specifically for that board. The ECC sticks were Nemix brand, which, from various past experiences with their products, seem to be hit and miss. I found some equivalent Samsung sticks at the same price on eBay, which are en route now, but I won’t have them until next weekend at the earliest. If these give me issues as well, I will try lowering the frequency.

Oh hey look at that, it’s back to normal prices and in stock in like 20 different shops

I just checked earlier today and it wasn’t back yet, so must have been a couple hours ago.

edit:

Just checked the memory QVL, and only 2 of the sticks are even available, and those are 100€ a pop.


The smallest is 16 gigs and honestly I don’t think I need more? So… thoughts on single-stick? I know Ryzen is technically better with dual channel but considering the CPU isn’t gonna be maxed out anyway, does it really matter?
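For a rough sense of what single- vs dual-channel means here: each DDR4 channel has a 64-bit (8-byte) data bus, and the extra 8 bits on ECC DIMMs carry check bits, not data. Peak theoretical bandwidth is therefore the data rate times 8 bytes per channel. Using the 3200 MT/s figure mentioned earlier as an example:

```latex
B_{\text{peak}} = n_{\text{ch}} \times 3200 \times 10^{6}\ \tfrac{\text{transfers}}{\text{s}} \times 8\ \tfrac{\text{B}}{\text{transfer}}
= \begin{cases}
25.6\ \text{GB/s}, & n_{\text{ch}} = 1 \\
51.2\ \text{GB/s}, & n_{\text{ch}} = 2
\end{cases}
```

These are theoretical peaks. How much a lightly loaded box notices the difference depends on the workload.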

OK, well… after I don’t even know how long, I finally pulled the trigger on the regular variant, and it should arrive sometime next week. We’ll see how it goes.

Haven’t decided on the RAM yet. Feel like 16 gigs is enough but then it’s single channel so… yeah I don’t know.

Same here, have been looking at this board for ages, finally pulled the trigger yesterday.

I have a Supermicro M11SDV-8C-LN4F (Epyc 3251) board in the colo (excellent board: the Epyc 3251 is just amazing for its power draw, and Supermicro is of course an absolutely top server board), but for a home KVM + NAS server that is overkill and way too expensive.

The ASRock Ryzen-based “DIY server board” is the only deal in town for small money.

I have an ASUS Pro-WS-X570-ACE workstation board as my desktop, and it does it all (Ryzen CPUs… ECC… PCIe 4.0 at x8/x8/x8 [yes, the only mobo I am aware of that does x8/x8/x8]), but it has a totally broken quasi-IPMI, meaning it can’t be used as a server without a GPU or an APU… so, no, not a server board.

The CPU question is interesting. My requirement is ECC (always, in the servers and in the workstation; there’s no point in doing anything worth saving if you don’t use ECC) and low TDP for the server. Soooooo, you can mess with the PRO APUs, but they are funky, have fewer PCIe lanes and less cache, and they are just hard to find in stock.

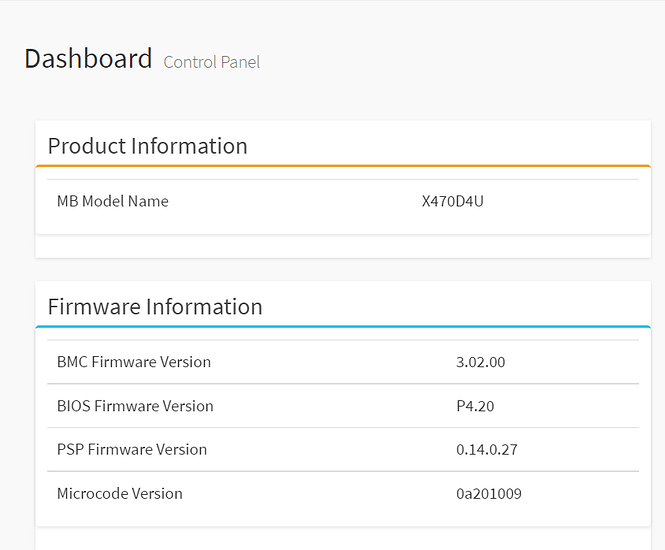

Since the 4.20 BIOS has AGESA ComboV2-AM4_1.2.0.0, the 5000-series APUs are possible, right? But that is just the same mess of finding a chip available at a reasonable price and hoping the X470D4U is happy with it.

I ended up just getting a Ryzen 5 3600 for cheap, and 2x Samsung 16GB ECC uDIMMs, and hopefully happiness ensues.

I have Intel X550T2 10GbE NICs and LSI SAS3008 controllers for the ZFS pools.

Yeah, I ended up getting two of the M391A2K43BB1-CTDQ from the QVL. I figured that if I drop like $500 on drives and another 300 on a board, the extra 100 for a second DIMM isn’t a huge deal.

And besides, this thing is supposed to last a while, and who knows what I’ll put on it container-wise in the future.