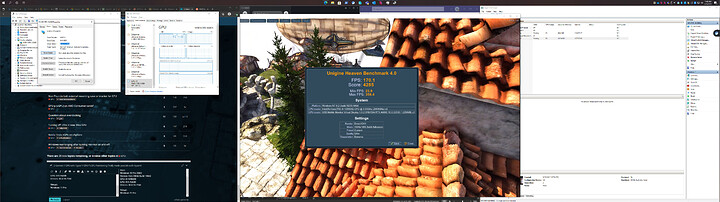

I delved into trying to get GeForce Experience working inside of Hyper-V. Initially I would get an error code when trying to load GeForce Experience. Then I tried installing drivers by spoofing the hardware and compatible IDs of the GPU into the VM through regedit (Computer\HKEY_LOCAL_MACHINE\SYSTEM\ControlSet001\Enum\PCI), having also copied the drivers into the HostDriverStore folder in the VM.

Sometimes GeForce Experience would load on the first driver installation, but without the NVIDIA SHIELD option showing.

After a reboot I would definitely get the error code when trying to launch GeForce Experience.

I also tried copying the registry key “Computer\HKEY_LOCAL_MACHINE\SOFTWARE\NVIDIA Corporation\NvStream” from the host into the VM.
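For anyone who wants to repeat that registry copy without clicking through regedit, a rough sketch with the built-in `reg` tool might look like the following. The file name `nvstream.reg` is only a placeholder, and this assumes both commands are run from an elevated prompt and that you move the file into the VM yourself.

```bat
rem On the host: export the NvStream key to a file (nvstream.reg is a placeholder name)
reg export "HKLM\SOFTWARE\NVIDIA Corporation\NvStream" nvstream.reg /y

rem Inside the VM, after copying nvstream.reg over: import the key
reg import nvstream.reg
```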

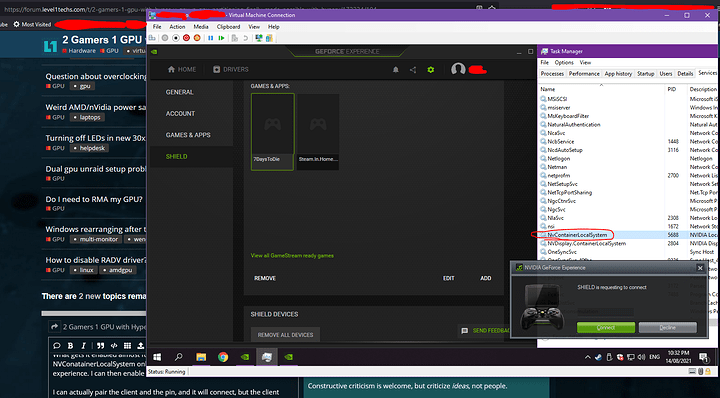

What seems to get GeForce Experience to open is to first disable the GPU in Device Manager inside the VM, then restart both the NvContainerLocalSystem and NVDisplay.ContainerLocalSystem services while the GPU is disabled.
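To check that both services actually came back up after the restart, something like this could be run from an elevated prompt. It is only a sketch, and the `findstr STATE` filter assumes an English Windows install where `sc query` labels the line `STATE`.

```bat
rem Show the current state of both NVIDIA container services
sc query NvContainerLocalSystem | findstr STATE
sc query NVDisplay.ContainerLocalSystem | findstr STATE
```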

From there GeForce Experience loads and states that drivers have to be downloaded.

Then, after enabling the GPU in the VM’s Device Manager and restarting GeForce Experience, it detects the installed driver, and the General section under Settings shows everything as detected and all features as supported except VR, but there is still no SHIELD option. I think re-enabling the GPU removes the spoofed hardware ID, though. It also gets removed on driver installation.

For whatever reason, going into Enhanced session shows the SHIELD option, but inside that menu it shows “information not available” and there is no option to enable GameStream.

Going back to basic session, the SHIELD option disappears.

What gets it almost fully enabled is to then restart NvContainerLocalSystem only; there is no need to close and re-open GeForce Experience. I can then enable NVIDIA GameStream.

I can actually pair the client with the PIN, and it will connect, but the client doesn’t list any games, or the Desktop option that I added as a game.

It shows nothing inside the client for me to connect and start a game streaming session.

Other things to add: I tried using the USB monitor thing, but that doesn’t seem to help.

Perhaps GeForce Experience needs an actual monitor connected to the VM GPU.

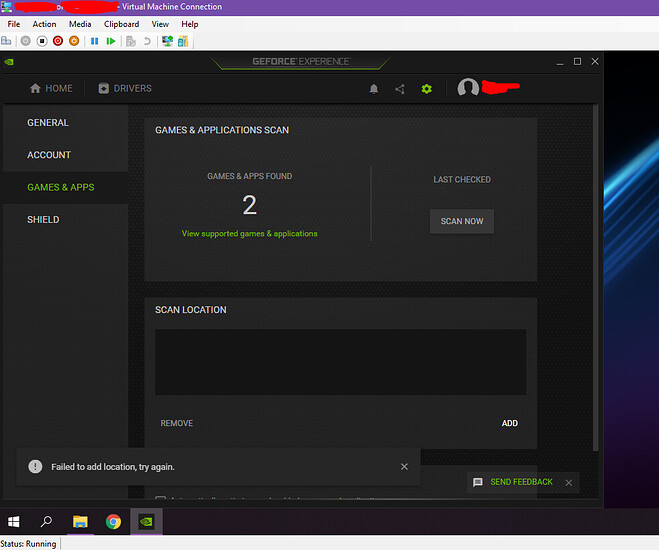

Forgot to write that GeForce Experience errors out and doesn’t list any game folders after I do the steps that get the SHIELD option showing. The folders were listed earlier in the process, but as soon as you do the step that makes SHIELD appear, the folder listing is lost. Trying to add folders gives an error.

I also messed around with trying Win_1337_Apply_Patch_v1.9_By_DFoX on the host and VM, but I have no idea what that would do lol, or whether it even affects the result.

I made a .cmd batch script to get GeForce Experience loading without SHIELD enabled:

pnputil /disable-device "PCI\VEN_XXXX&DEV_XXXX&SUBSYS_XXXXXXXXX&REV_XX\X&XXXXXXX&X&X"

net stop NvContainerLocalSystem

net stop NVDisplay.ContainerLocalSystem

net start NvContainerLocalSystem

net start NVDisplay.ContainerLocalSystem

pnputil /enable-device "PCI\VEN_XXXX&DEV_XXXX&SUBSYS_XXXXXXXXX&REV_XX\X&XXXXXXX&X&X"

…and one that has the extra steps to get SHIELD kind of half working:

pnputil /disable-device "PCI\VEN_XXXX&DEV_XXXX&SUBSYS_XXXXXXXXX&REV_XX\X&XXXXXXX&X&X"

net stop NvContainerLocalSystem

net stop NVDisplay.ContainerLocalSystem

net start NvContainerLocalSystem

net start NVDisplay.ContainerLocalSystem

pnputil /enable-device "PCI\VEN_XXXX&DEV_XXXX&SUBSYS_XXXXXXXXX&REV_XX\X&XXXXXXX&X&X"

start "" /D "%PROGRAMFILES%\NVIDIA Corporation\NVIDIA GeForce Experience" "NVIDIA GeForce Experience.exe"

timeout /t 6

net stop NvContainerLocalSystem

net start NvContainerLocalSystem

You will have to change the device instance path that pnputil uses, as it will be different for each GPU. You can get the path in Device Manager, under the device’s Properties, on the Details tab. Hopefully someone else can mess around and actually get SHIELD streaming working.
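If you would rather look up the device instance path from the command line, `pnputil` itself can list display devices on recent Windows 10/11 builds. This is just a sketch of that lookup: the "Instance ID" line for your GPU in the output is the string to paste into the scripts above in place of the XXXX placeholders.

```bat
rem List display-class devices; the "Instance ID" line is the path to use
pnputil /enum-devices /class Display
```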

Now if we could get something like Hyper-V on Linux that is this easy, it would be great.
