Hi all,

Apologies for the late update to this project log, although the positive side to this is that I’ve completed the entire process of setting up the backup FreeNAS server and it has been running quite well over the past couple months.

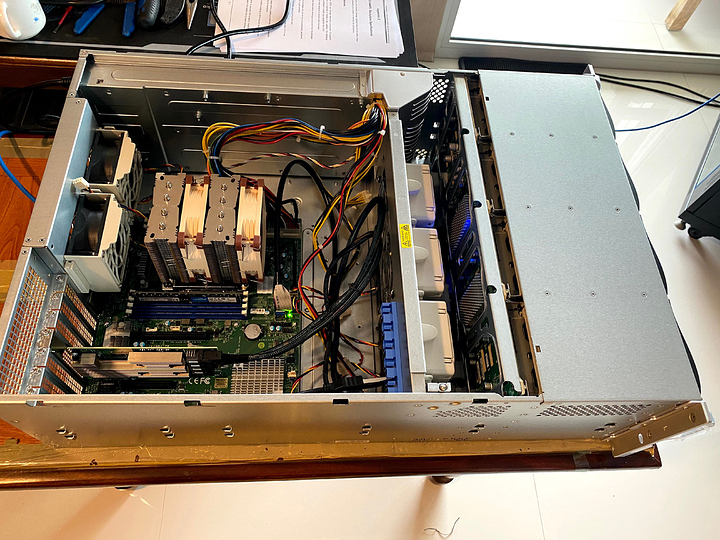

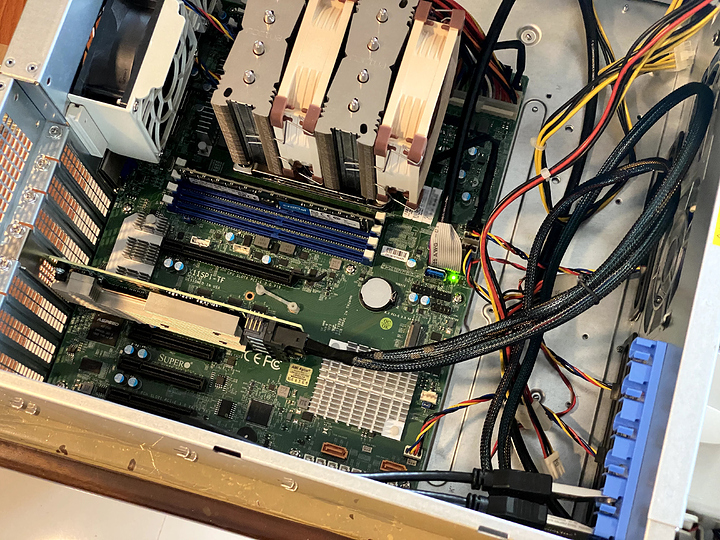

Here are some snaps during the initial build:

Right now the first 8-bays contain the primary backup pool consisting of 8x 12TB WD UltraStar disks.

I’ve filled the remaining 16-bays with an ‘ad-hoc’ collection of WD 4TB Red/Red Pro disks collected from my older Synology Diskstations (5 + 8-bay). I’ve called this the “dead-pool” as many of these disks are on their last legs. It’s a second pool that’s there as a “3rd” redundancy.

The primary pool performs a local-replication to “dead-pool” and interestingly enough, this feature was only just recently released in the new FreeNAS11.3