TL;DR: I'm creating a Storage Space from five 10 TB drives to act as a DAS with single-parity resiliency. The Storage Space will mostly hold large video files, and I want to set the allocation unit size (AUS) larger than 4K to speed up writes. The problem is that I'm getting abysmal write speeds from drives that can each sustain ~250 MB/s. Write performance quickly drops below 100 MB/s and then stalls completely, whether I'm transferring a folder of mixed files or just a few large files.

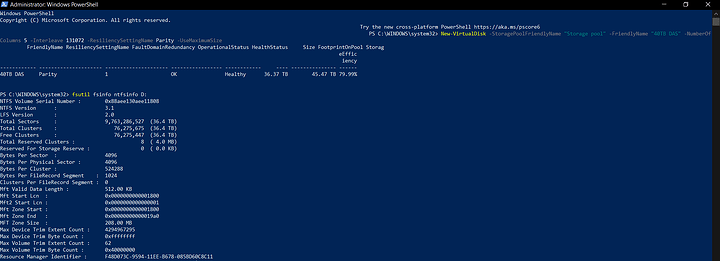

The basic process is as follows:

1: Create a 5-drive pool using the GUI.

2: Use PowerShell to create the Storage Space with an interleave of 128K and 5 columns, using Interleave = AUS / (Columns - 1).

3: Format the volume in Disk Management as NTFS with a 512K AUS (4x the interleave, i.e. one full stripe of data across the 4 data columns).
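As a sanity check on the numbers in these steps, here is a small Python sketch of the stripe arithmetic. It assumes single parity uses one column's worth of each stripe, so 5 columns leave 4 data columns. `stripe_geometry` and `interleave_for_aus` are hypothetical helper names for illustration, not part of any Storage Spaces API.

```python
# Sketch of single-parity stripe arithmetic (assumption: parity takes one column).
KIB = 1024


def stripe_geometry(columns: int, interleave: int, parity_columns: int = 1) -> dict:
    """Return the number of data columns and the data bytes in one full stripe."""
    data_columns = columns - parity_columns
    full_stripe_data = interleave * data_columns
    return {"data_columns": data_columns, "full_stripe_data": full_stripe_data}


def interleave_for_aus(aus: int, columns: int, parity_columns: int = 1) -> int:
    """Interleave = AUS / (columns - parity columns), as in step 2."""
    data_columns = columns - parity_columns
    if aus % data_columns:
        raise ValueError("AUS must divide evenly across the data columns")
    return aus // data_columns


geo = stripe_geometry(columns=5, interleave=128 * KIB)
print(geo["data_columns"], geo["full_stripe_data"] // KIB)  # 4 512
print(interleave_for_aus(512 * KIB, columns=5) // KIB)  # 128
```

With these inputs, a 512K AUS works out to 4x the 128K interleave, which is exactly one full stripe of data; that alignment is what the formula in step 2 is aiming for.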

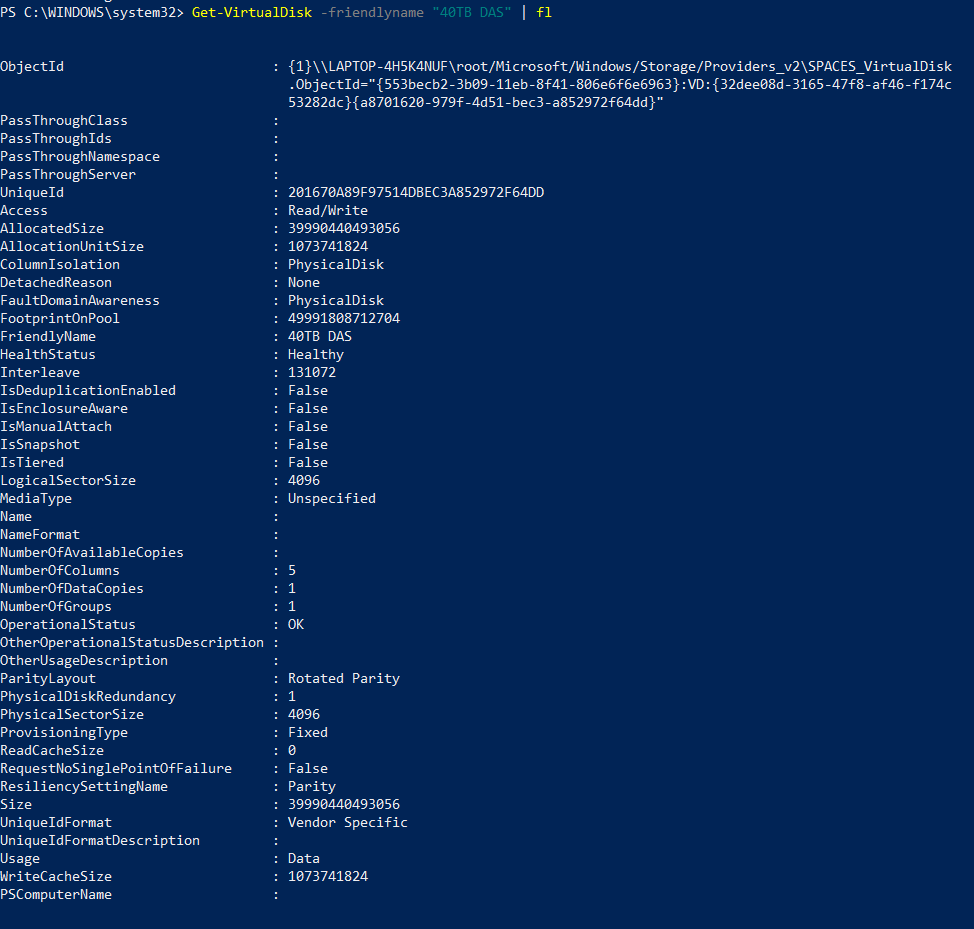

Running `Get-VirtualDisk … | fl`

shows "allocation unit size" as 1073741824, which I believe is 1 GiB (2^30 bytes). Why is this? I definitely didn't set that.
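To confirm the unit, here is a short Python sketch that converts a raw byte count into the largest binary unit that divides it evenly (`describe_bytes` is a hypothetical helper). It only does the arithmetic; it says nothing about what that field actually controls.

```python
def describe_bytes(n: int) -> str:
    """Express n in the largest binary unit (GiB, MiB, KiB) that divides it evenly."""
    for unit, size in (("GiB", 1024**3), ("MiB", 1024**2), ("KiB", 1024)):
        if n % size == 0:
            return f"{n // size} {unit}"
    return f"{n} B"


print(describe_bytes(1073741824))  # 1 GiB
print(describe_bytes(524288))  # 512 KiB
```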

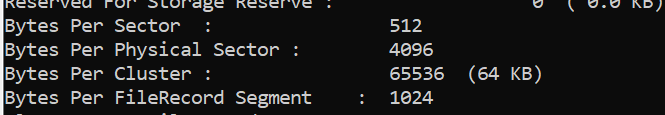

Running `fsutil fsinfo ntfsinfo`

shows "bytes per cluster" as 524288 (is this the 512K AUS I set?).

However, "bytes per sector" shows 4096.
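The two fsutil values can be related with a little arithmetic. A minimal Python sketch, using the values copied from the output quoted above; it simply shows that a 524288-byte cluster is 512 KiB and spans 128 sectors of 4096 bytes.

```python
BYTES_PER_CLUSTER = 524288  # "bytes per cluster" reported by fsutil
BYTES_PER_SECTOR = 4096  # "bytes per sector" reported by fsutil

# A cluster should be a whole number of sectors.
assert BYTES_PER_CLUSTER % BYTES_PER_SECTOR == 0

sectors_per_cluster = BYTES_PER_CLUSTER // BYTES_PER_SECTOR
cluster_kib = BYTES_PER_CLUSTER // 1024

print(cluster_kib)  # 512
print(sectors_per_cluster)  # 128
```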

I also tried deleting the volume in Disk Management and formatting it in PowerShell with the following:

```powershell
Get-VirtualDisk -FriendlyName … |
    Get-Disk |
    Initialize-Disk -PassThru |
    New-Partition -AssignDriveLetter -UseMaximumSize |
    Format-Volume -FileSystem NTFS -AllocationUnitSize 524288
```

Assume I'm a total idiot who doesn't know what I'm doing; this is my first storage pool and I'm still figuring it out. Should "bytes per sector" be reading 4096? Should "allocation unit size" be reading 1 GiB?

I'm hoping for somewhere in the ballpark of 1 GB/s writes on this storage pool. In theory the 4 data drives should be capable of it (4 x ~250 MB/s), as long as the parity calculations don't take me out to lunch. Any help is appreciated.
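The 1 GB/s target is the naive ceiling of 4 data drives at ~250 MB/s each. A hedged Python sketch of that upper bound (`ideal_sequential_write` is a hypothetical name); it deliberately ignores parity computation, write caching, and read-modify-write penalties, so real throughput will be lower.

```python
def ideal_sequential_write(drives: int, per_drive_mb_s: float, parity_drives: int = 1) -> float:
    """Upper bound in MB/s: only the data drives contribute useful write bandwidth."""
    return (drives - parity_drives) * per_drive_mb_s


ceiling = ideal_sequential_write(5, 250)
print(ceiling)  # 1000
print(100 / ceiling)  # 0.1
```

Against that ceiling, the observed sub-100 MB/s writes are under 10% of the theoretical figure.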