Wendell said he was able to ‘SATURATE’ 10GbE with spinning drives (using disk shelves). I’m not aware of a how-to thread here that explains how he achieved that performance, or that collects other people’s trial and error.

I can think of possible reasons his results differed so drastically.

Is the difference that purpose-built NAS/SAN hardware (NetApp) is ‘better’ at this than generic servers? If so, since it’s an HBA in the NetApp, that’s going to raise more questions than it answers.

I’ve seen quite a few people stuck in the same MB/s range with similar equipment, but there are plenty of people who get much better results, and when comparing the setups, nothing stands out as the ‘aha’ reason.

Was it the disk shelves? Or some other piece of hardware?

• NetApp DS4246

• IOM6 controller (is the IOM12 just SAS-3?)

• Was it the quantity of drives? ~21 HDs or so?

• Or maybe he was striping the shelves?

• If he’s striping shelves, the per-shelf speed would be good info.

Speaking of the IOM6 vs IOM12:

Are all HDs compatible with it when it’s used as an HBA?

And is it true that it ‘only requires NetApp-approved devices if using a NetApp RAID controller’?

Regarding performance that’s reasonable/plausible …

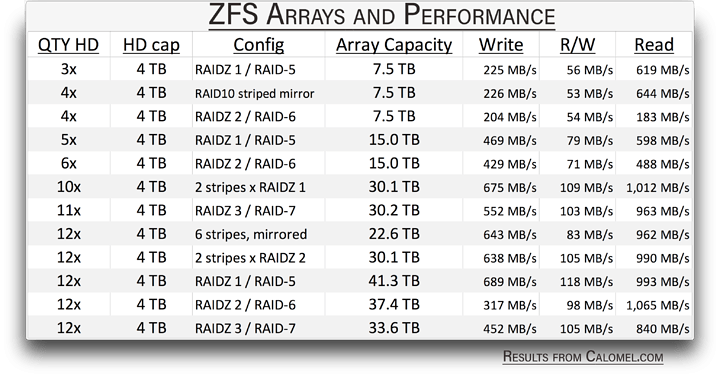

There’s an extensive list of REAL test results which I’d love to even get close to.

The Calomel website has a page (you can google it) called zfs_raid_speed_capacity.

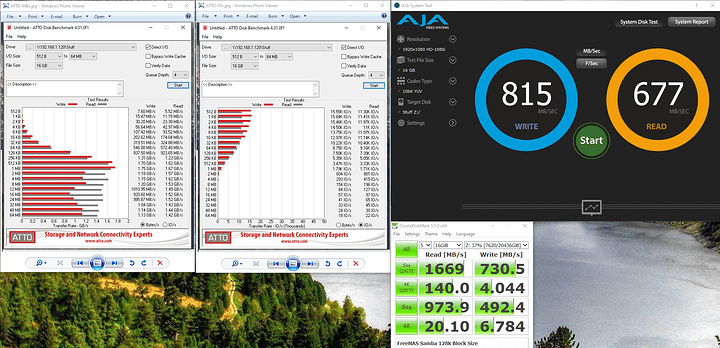

Consistent with the speeds in that list, Wendell says he gets 800-1,100 MB/s.

However, in a thread here, a member got a whole 400 MB/s using 4x 970 EVO SSDs.

And in threads everywhere, people get 150-200 MB/s using 4+ 7,200 RPM drives.

(Yes, I know rotational speed supposedly isn’t very important. I’m just not going to omit it.)

This is a huge range…
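For scale, here is the arithmetic behind ‘saturating’ 10GbE as a small Python sketch. It assumes the quoted MB/s figures are decimal megabytes (10^6 bytes) and ignores Ethernet/TCP/SMB/NFS overhead, so the 1,250 MB/s it uses is a raw ceiling, not an achievable number.

```python
# Raw 10GbE line rate in decimal megabytes per second (assumes 1 MB = 10**6 bytes).
# Protocol overhead (Ethernet, IP, TCP, SMB/NFS) is ignored, so real-world
# throughput will land somewhat below this ceiling.
LINE_RATE_MB_S = 10e9 / 8 / 1e6  # 1250.0

# Throughput figures quoted in this post (MB/s); labels are just descriptions.
reported = {
    "Wendell, low end": 800,
    "Wendell, high end": 1100,
    "4x 970 EVO SSDs": 400,
    "typical 4+ HDD pool, high end": 200,
    "typical 4+ HDD pool, low end": 150,
}


def fraction_of_line_rate(mb_s: float, line_rate: float = LINE_RATE_MB_S) -> float:
    """Return a throughput figure as a fraction of the raw link rate."""
    return mb_s / line_rate


for label, mb_s in reported.items():
    print(f"{label:32s} {mb_s:6.0f} MB/s = {fraction_of_line_rate(mb_s):6.1%} of 10GbE")
```

By this measure, Wendell’s 800-1,100 MB/s is roughly 64-88% of the raw link rate, while 150-200 MB/s is only 12-16%.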

If a customer asked for a storage solution and I quoted that range, they’d laugh.

In the last year I’ve considered just giving up and accepting that ~8 HDs in RAIDZ-2 is ~140 MB/s, depending on voodoo, file size, network speed & traffic.

Then I run into reports of blissful performance, and my optimism overrules my skepticism. So I’m (again) thinking of wasting another 20 hours trying to tune the performance.

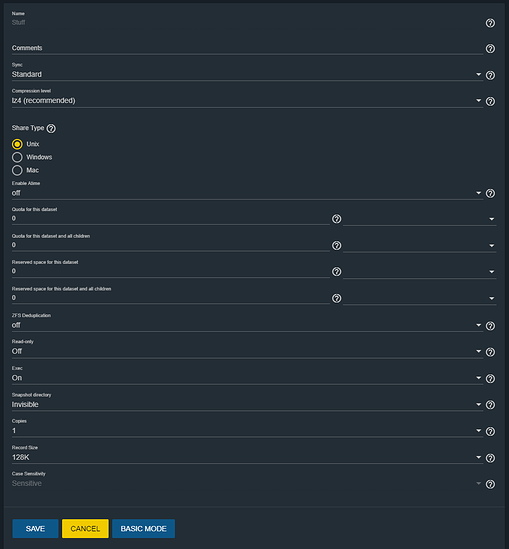

The performance results are attached as a picture to ensure formatting is legible.

One of those results is a RAIDZ-2 of only 6 drives, yet it gets:

• Write: 429 MB/s

• Mixed: 71 MB/s

• Read: 488 MB/s

Granted, the mixed speeds aren’t impressive, but I don’t need mixed.

I’d be VERY happy to approximate these 300 - 400 MB/s results.

Right now I get closer to 150 MB/s using 8 drives. That isn’t measured with Bonnie++; it’s over 10GbE (I’ve gotten up to 240 MB/s, ooooh). Either way, it’s not even CLOSE to what others are getting.
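One way to compare pools of different widths is to divide throughput by the number of data disks. The sketch below assumes the common simplification that large sequential transfers on a single RAIDZ2 vdev scale with its N - 2 non-parity disks. That is a rule of thumb, not a guarantee of how ZFS behaves, and the function names are my own.

```python
# Rule of thumb (a simplification): a single RAIDZ2 vdev of N disks has
# N - 2 non-parity "data" disks, and large sequential transfers scale
# roughly with that count. Dividing observed throughput by N - 2 gives an
# implied per-data-disk rate that makes pools of different widths comparable.


def raidz2_data_disks(n_disks: int) -> int:
    """Number of non-parity disks in a single RAIDZ2 vdev."""
    if n_disks <= 2:
        raise ValueError("a RAIDZ2 vdev needs more disks than its 2 parity disks")
    return n_disks - 2


def per_data_disk_mb_s(observed_mb_s: float, n_disks: int) -> float:
    """Implied throughput per data disk for a single RAIDZ2 vdev."""
    return observed_mb_s / raidz2_data_disks(n_disks)


# (label, observed MB/s, disks in the vdev), taken from the figures in this post.
cases = [
    ("6-drive RAIDZ2 result, write", 429, 6),
    ("6-drive RAIDZ2 result, read", 488, 6),
    ("my 8-drive RAIDZ2, typical", 150, 8),
    ("my 8-drive RAIDZ2, best", 240, 8),
]

for label, mb_s, n in cases:
    print(f"{label:30s} {per_data_disk_mb_s(mb_s, n):6.1f} MB/s per data disk")
```

With these numbers, the 6-drive result works out to roughly 107-122 MB/s per data disk, versus 25-40 MB/s for my pool. This mixes a local benchmark with my over-the-network figures, so on its own it doesn’t say whether the pool or the network is the bottleneck.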

Dell PowerEdge T320

Xeon E5-2403 v2 Quad 1.80GHz

32GB 1333MHz DDR3 ECC

LSI SAS 9205-8i

8x 7.2K IBM/HGST SAS

Configuration: RAIDZ2

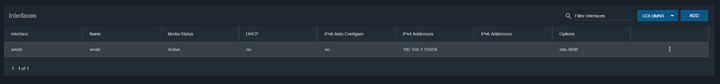

Network - SFP+ (10GbE)
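To tell whether the ~150 MB/s ceiling comes from the pool or from the network path, one option is to time a large sequential write locally on the server, without the 10GbE link involved. The sketch below is only a rough stand-in for a proper benchmark tool (it is not Bonnie++ and doesn’t try to replicate it); the function name, default size, and test path are my own assumptions.

```python
import os
import time


def sequential_write_mb_s(path: str, total_mb: int = 4096, block_mb: int = 1) -> float:
    """Write about total_mb of random data to `path`, fsync, and return MB/s.

    Assumptions: MB means 10**6 bytes; the default size is arbitrary.
    Random bytes are used so ZFS compression (if enabled) can't shrink them,
    but the same block is reused, so results would be inflated if dedup is on.
    The final fsync forces the data to stable storage before the timer stops.
    The test file is deleted afterwards.
    """
    block = os.urandom(block_mb * 1_000_000)
    n_blocks = max(1, total_mb // block_mb)
    start = time.perf_counter()
    try:
        with open(path, "wb") as f:
            for _ in range(n_blocks):
                f.write(block)
            f.flush()  # push Python's buffer to the OS
            os.fsync(f.fileno())  # wait until the OS reports data on disk
        elapsed = time.perf_counter() - start
    finally:
        if os.path.exists(path):
            os.remove(path)
    return (n_blocks * block_mb) / elapsed
```

For example, `sequential_write_mb_s("/mnt/tank/seqwrite.test")`, where `/mnt/tank` is a hypothetical mount point for a dataset on the pool. If the local number is well above 150-240 MB/s, the network path (or the sharing protocol) is the more likely suspect; if it’s similar, the pool itself probably is.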

Thanks