I am having trouble with IOMMU passthrough on Proxmox using a Threadripper Pro 3975WX on an Asus WRX80E-SAGE motherboard.

When I try to pass an Nvidia Quadro P400 to a virtual machine, the virtual machine sits and hangs, and the graphics card puts itself in a state where I cannot reset it. In my research online, it looks similar to the AMD Vega reset bugs.

I think this issue is likely due to a BIOS setting on the motherboard; there is a plethora of PCIe-related settings that I am not thoroughly versed in. I have performed IOMMU passthrough on other systems before, but have not encountered this issue.

First, I’ll show the configuration files and lspci output from before booting the VM. Then, I’ll show the error messages I get from lspci and dmesg after booting it.

Software Versions

BIOS: Version 0405, 2021/04/19 (Newest)

BMC: Version 1.11.0, 2021/05/06 (Newest)

    root@pve-01:~# uname -a
    Linux pve-01 5.4.119-1-pve #1 SMP PVE 5.4.119-1 (Tue, 01 Jun 2021 15:32:00 +0200) x86_64 GNU/Linux

    root@pve-01:~# pveversion
    pve-manager/6.4-8/185e14db (running kernel: 5.4.119-1-pve)

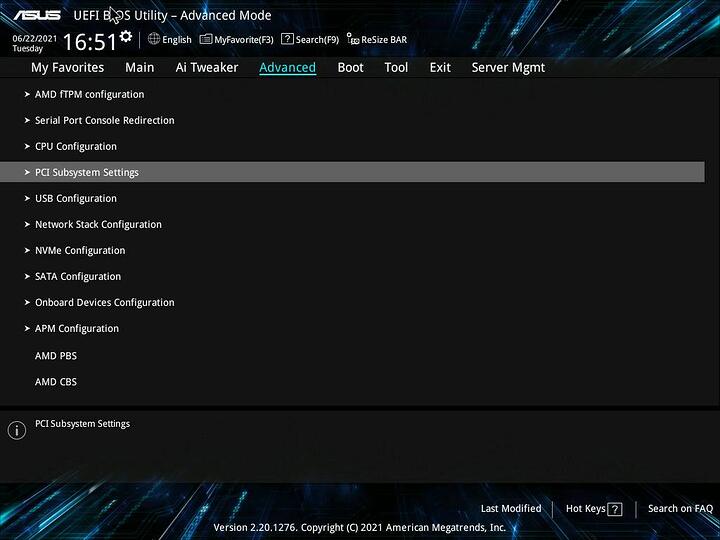

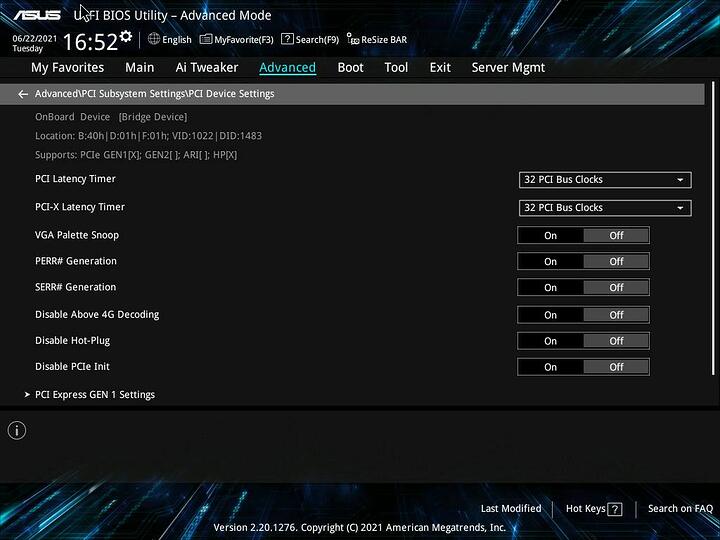

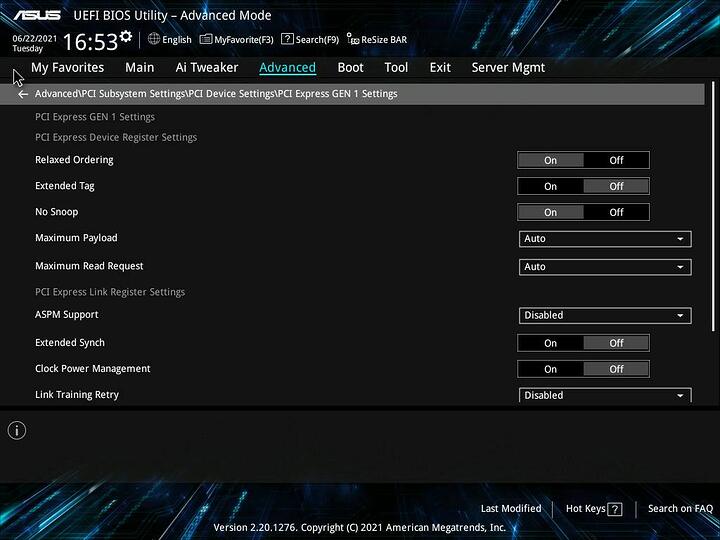

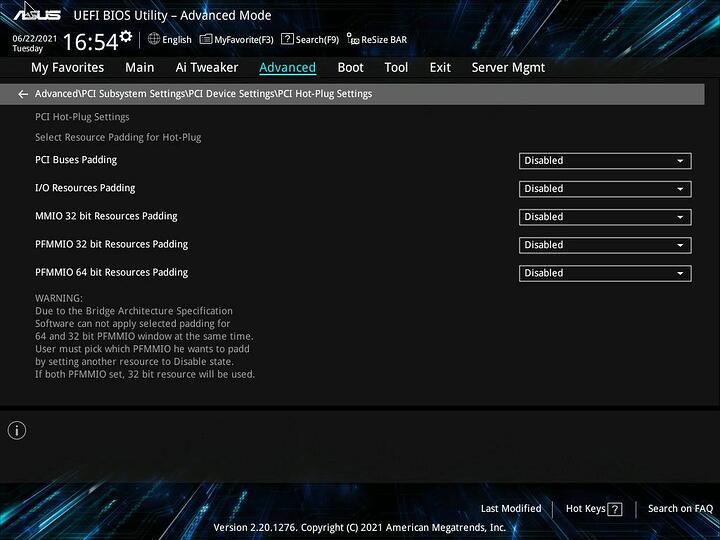

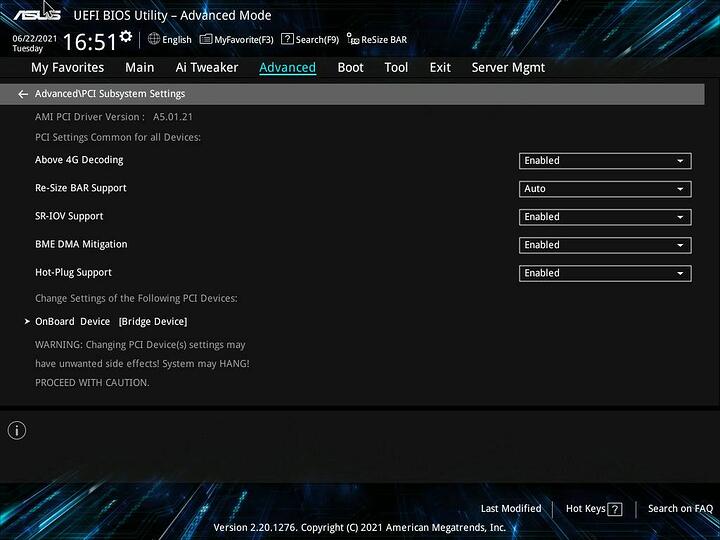

BIOS Settings

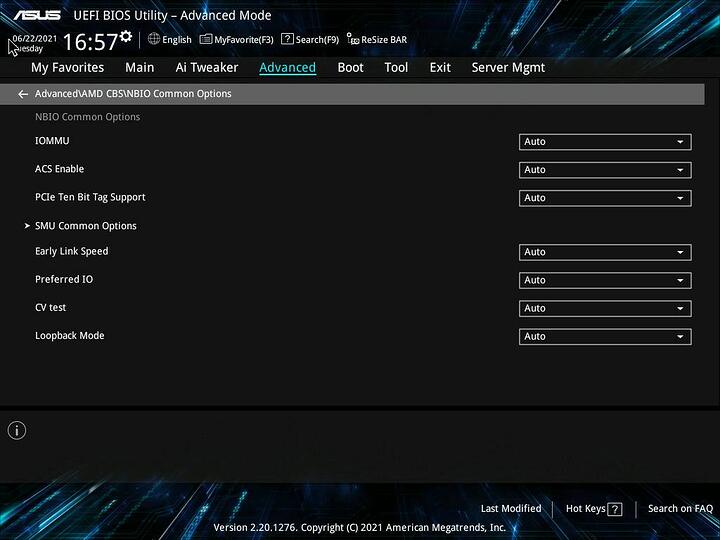

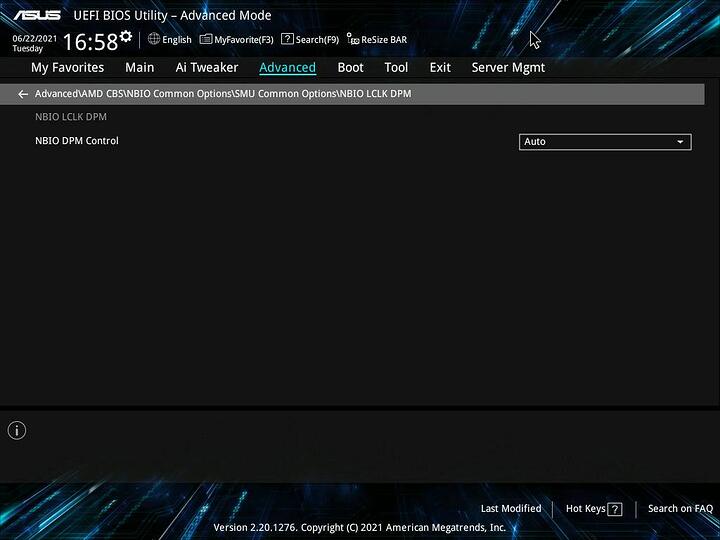

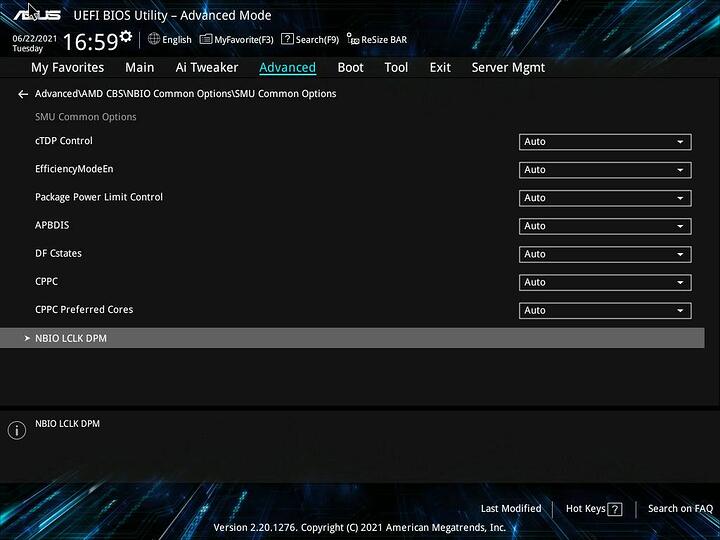

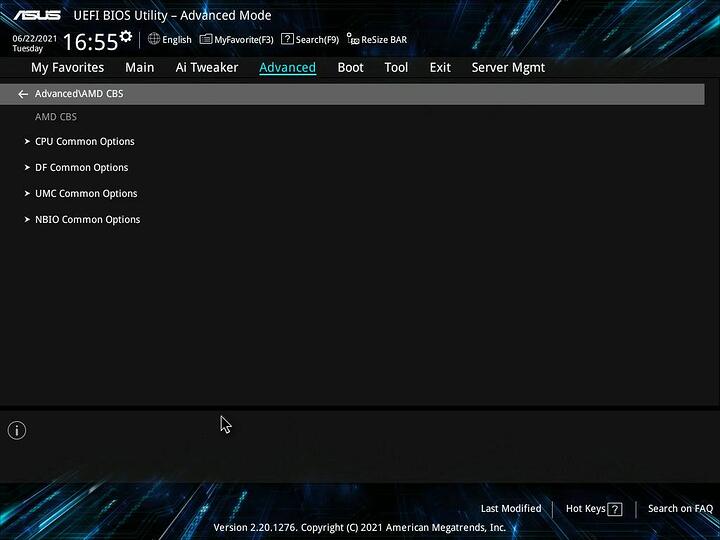

    # I've included ALL sub-menu settings from these sections below:
    Advanced - CPU Configuration
    Advanced - PCI Subsystem Settings
    Advanced - AMD CBS - NBIO Common options

    Advanced - CPU Configuration - SVM Mode = Enabled
    Advanced - PCI Subsystem Settings - Above 4G Decoding = Enabled
    Advanced - PCI Subsystem Settings - Re-Size BAR Support = Auto
    Advanced - PCI Subsystem Settings - SR-IOV Support = Enabled
    Advanced - PCI Subsystem Settings - BME DMA Mitigation = Enabled
    Advanced - PCI Subsystem Settings - Hot-Plug Support = Enabled
    Advanced - PCI Subsystem Settings - OnBoard Device - PCI Latency Timer = 32 PCI Bus Clocks
    Advanced - PCI Subsystem Settings - OnBoard Device - PCI-X Latency Timer = 32 PCI Bus Clocks
    Advanced - PCI Subsystem Settings - OnBoard Device - VGA Palette Snoop = Off
    Advanced - PCI Subsystem Settings - OnBoard Device - PERR# Generation = Off
    Advanced - PCI Subsystem Settings - OnBoard Device - SERR# Generation = Off
    Advanced - PCI Subsystem Settings - OnBoard Device - Disable Above 4G Decoding = Off
    Advanced - PCI Subsystem Settings - OnBoard Device - Disable Hot-Plug = Off
    Advanced - PCI Subsystem Settings - OnBoard Device - Disable PCIe Init = Off
    Advanced - PCI Subsystem Settings - OnBoard Device - PCI Express Gen 1 Settings - Relaxed Ordering = On
    Advanced - PCI Subsystem Settings - OnBoard Device - PCI Express Gen 1 Settings - Extended Tag = Off
    Advanced - PCI Subsystem Settings - OnBoard Device - PCI Express Gen 1 Settings - No Snoop = On
    Advanced - PCI Subsystem Settings - OnBoard Device - PCI Express Gen 1 Settings - Maximum Payload = Auto
    Advanced - PCI Subsystem Settings - OnBoard Device - PCI Express Gen 1 Settings - Maximum Read Request = Auto
    Advanced - PCI Subsystem Settings - OnBoard Device - PCI Express Gen 1 Settings - ASPM Support = Disabled
    Advanced - PCI Subsystem Settings - OnBoard Device - PCI Express Gen 1 Settings - Extended Synch = Off
    Advanced - PCI Subsystem Settings - OnBoard Device - PCI Express Gen 1 Settings - Clock Power Management = Off
    Advanced - PCI Subsystem Settings - OnBoard Device - PCI Express Gen 1 Settings - Link Training Retry = Disabled
    Advanced - PCI Subsystem Settings - OnBoard Device - PCI Express Gen 1 Settings - Link Training Timeout (uS) = 1000
    Advanced - PCI Subsystem Settings - OnBoard Device - PCI Express Gen 1 Settings - Disable Empty Links = Off
    Advanced - PCI Subsystem Settings - OnBoard Device - PCI Hot-Plug Settings - PCI Busses Padding = Disabled
    Advanced - PCI Subsystem Settings - OnBoard Device - PCI Hot-Plug Settings - I/O Resources Padding = Disabled
    Advanced - PCI Subsystem Settings - OnBoard Device - PCI Hot-Plug Settings - MIMO 32 bit Resources Padding = Disabled
    Advanced - PCI Subsystem Settings - OnBoard Device - PCI Hot-Plug Settings - PFMIMO 32 bit Resources Padding = Disabled
    Advanced - PCI Subsystem Settings - OnBoard Device - PCI Hot-Plug Settings - PFMIMO 64 bit Resources Padding = Disabled
    Advanced - AMD CBS - NBIO Common options - IOMMU = Auto
    Advanced - AMD CBS - NBIO Common options - ACS Enable = Auto
    Advanced - AMD CBS - NBIO Common options - PCIe Ten Bit Tag Support = Auto
    Advanced - AMD CBS - NBIO Common options - Early Link Speed = Auto
    Advanced - AMD CBS - NBIO Common options - Preferred IO = Auto
    Advanced - AMD CBS - NBIO Common options - CV test = Auto
    Advanced - AMD CBS - NBIO Common options - Loopback Mode = Auto
    Advanced - AMD CBS - NBIO Common options - SMU Common Options - cTDP Control = Auto
    Advanced - AMD CBS - NBIO Common options - SMU Common Options - EfficiencyModeEn = Auto
    Advanced - AMD CBS - NBIO Common options - SMU Common Options - Package Power Limit Control = Auto
    Advanced - AMD CBS - NBIO Common options - SMU Common Options - APBDIS = Auto
    Advanced - AMD CBS - NBIO Common options - SMU Common Options - DF Cstates = Auto
    Advanced - AMD CBS - NBIO Common options - SMU Common Options - CPPC = Auto
    Advanced - AMD CBS - NBIO Common options - SMU Common Options - CPPC Preferred Cores = Auto
    Advanced - AMD CBS - NBIO Common options - SMU Common Options - NBIO LCLK DPM - NBIO DPM Control = Auto

Before VM Boot

Modules:

    root@pve-01:~# egrep "options|blacklist" /etc/modprobe.d/*
    /etc/modprobe.d/blacklist.conf:blacklist radeon
    /etc/modprobe.d/blacklist.conf:blacklist nouveau
    /etc/modprobe.d/blacklist.conf:blacklist nvidia
    /etc/modprobe.d/kvm.conf:options kvm ignore_msrs=1
    # Tried with and without `options kvm ignore_msrs=1`
    /etc/modprobe.d/pve-blacklist.conf:blacklist nvidiafb
    /etc/modprobe.d/vfio_iommu_type1.conf:options vfio_iommu_type1 allow_unsafe_interrupts=1
    # Tried with and without `options vfio_iommu_type1 allow_unsafe_interrupts=1`
    /etc/modprobe.d/vfio-pci.conf:options vfio-pci ids=10de:1cb3,10de:0fb9 disable_vga=1 disable_idle_d3=1
    # Tried with and without `disable_idle_d3=1`
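The files above only show what was requested; whether a loaded module actually picked the options up can be read back from sysfs. A minimal sketch, assuming the modules expose their parameters under `/sys/module/<name>/parameters` (the function name `show_module_params` and its optional second argument are made up for illustration):

```shell
#!/bin/sh
# Print the live value of every readable parameter of a loaded kernel module.
# $1 = module name as it appears under /sys/module (dashes become underscores,
#      so vfio-pci is vfio_pci)
# $2 = sysfs module root; defaults to /sys/module (overridable for dry runs)
show_module_params() {
    mod=$1
    root=${2:-/sys/module}
    dir="$root/$mod/parameters"
    if [ ! -d "$dir" ]; then
        echo "$mod: not loaded or has no parameters"
        return 1
    fi
    for f in "$dir"/*; do
        [ -r "$f" ] || continue
        printf '%s.%s=%s\n' "$mod" "$(basename "$f")" "$(cat "$f")"
    done
}
```

For example, `show_module_params vfio_pci`, `show_module_params vfio_iommu_type1` and `show_module_params kvm` would cover the three `options` lines listed here. If a value does not match the file, the initramfs may not have been regenerated since the last change.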

Boot:

    # Using systemd-boot instead of grub. Needed for ZFS Raid 1 Boot Disk(s)
    root@pve-01:~# cat /etc/kernel/cmdline
    root=ZFS=rpool/ROOT/pve-1 boot=zfs amd_iommu=on iommu=pt
    # Tried with and without `rd.driver.pre=vfio-pci`
    root@pve-01:~# update-initramfs -u
    root@pve-01:~# pve-efiboot-tool refresh
    root@pve-01:~# reboot
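Since `/etc/kernel/cmdline` is only the input to the boot tool, it can be worth confirming after the reboot that the running kernel really received the flags. A minimal sketch that checks `/proc/cmdline` for exact tokens; the helper names `cmdline_has` and `report_iommu_flags` are hypothetical, and the optional argument lets the check run against a pasted command line instead of the live one:

```shell
#!/bin/sh
# Return 0 if the kernel command line contains the exact token $1.
# $2 = command line text; defaults to the running kernel's /proc/cmdline
cmdline_has() {
    token=$1
    line=${2-$(cat /proc/cmdline)}
    # Pad with spaces so that only whole, space-separated tokens match.
    case " $line " in
        *" $token "*) return 0 ;;
    esac
    return 1
}

# Report whether each IOMMU-related flag from this post is present.
# Optional $1 = command line text to inspect instead of /proc/cmdline
report_iommu_flags() {
    for flag in amd_iommu=on iommu=pt; do
        if cmdline_has "$flag" "$@"; then
            echo "$flag: present"
        else
            echo "$flag: MISSING"
        fi
    done
}
```

Running `report_iommu_flags` with no arguments on the host inspects the live command line.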

Virtual Machine:

    root@pve-01:~# cat /etc/pve/qemu-server/101.conf
    bios: ovmf
    boot: order=scsi0;ide2;net0
    cores: 64
    cpu: host
    efidisk0: local-zfs:vm-101-disk-1,size=1M
    hostpci0: 0000:41:00.0,pcie=1,x-vga=1
    hostpci1: 0000:41:00.1
    ide2: local:iso/manjaro-xfce-21.0.7-210614-linux510.iso,media=cdrom
    machine: q35
    memory: 2048
    name: workstation
    net0: virtio=6E:D8:D5:EF:27:98,bridge=vmbr0,firewall=1
    numa: 0
    ostype: l26
    scsi0: local-zfs:vm-101-disk-0,size=32G
    scsihw: virtio-scsi-pci
    smbios1: uuid=9ef0b069-4125-4a78-bca2-d1185c2fb4a2
    sockets: 1
    vga: none
    vmgenid: e6429e56-559f-4384-8a93-8fc51c3f6b9f

PCIE Device:

    root@pve-01:~# lspci -s 41:00 -v
    41:00.0 VGA compatible controller: NVIDIA Corporation GP107GL [Quadro P400] (rev a1) (prog-if 00 [VGA controller])
        Subsystem: Dell GP107GL [Quadro P400]
        Physical Slot: 6
        Flags: fast devsel, IRQ 255
        Memory at ce000000 (32-bit, non-prefetchable) [size=16M]
        Memory at 18030000000 (64-bit, prefetchable) [size=256M]
        Memory at 18040000000 (64-bit, prefetchable) [size=32M]
        I/O ports at 9000 [size=128]
        Expansion ROM at cf000000 [disabled] [size=512K]
        Capabilities: [60] Power Management version 3
        Capabilities: [68] MSI: Enable- Count=1/1 Maskable- 64bit+
        Capabilities: [78] Express Legacy Endpoint, MSI 00
        Capabilities: [100] Virtual Channel
        Capabilities: [250] Latency Tolerance Reporting
        Capabilities: [128] Power Budgeting <?>
        Capabilities: [420] Advanced Error Reporting
        Capabilities: [600] Vendor Specific Information: ID=0001 Rev=1 Len=024 <?>
        Capabilities: [900] #19
        Kernel driver in use: vfio-pci
        Kernel modules: nvidiafb, nouveau
    41:00.1 Audio device: NVIDIA Corporation GP107GL High Definition Audio Controller (rev a1)
        Subsystem: Dell GP107GL High Definition Audio Controller
        Physical Slot: 6
        Flags: fast devsel, IRQ 255
        Memory at cf080000 (32-bit, non-prefetchable) [disabled] [size=16K]
        Capabilities: [60] Power Management version 3
        Capabilities: [68] MSI: Enable- Count=1/1 Maskable- 64bit+
        Capabilities: [78] Express Endpoint, MSI 00
        Capabilities: [100] Advanced Error Reporting
        Kernel driver in use: vfio-pci
        Kernel modules: snd_hda_intel

Additional Dmesg Information:

    root@pve-01:~# dmesg | grep "41:00"
    [ 1.754274] pci 0000:41:00.0: [10de:1cb3] type 00 class 0x030000
    [ 1.754274] pci 0000:41:00.0: reg 0x10: [mem 0xce000000-0xceffffff]
    [ 1.754283] pci 0000:41:00.0: reg 0x14: [mem 0x18030000000-0x1803fffffff 64bit pref]
    [ 1.754297] pci 0000:41:00.0: reg 0x1c: [mem 0x18040000000-0x18041ffffff 64bit pref]
    [ 1.754306] pci 0000:41:00.0: reg 0x24: [io 0x9000-0x907f]
    [ 1.754315] pci 0000:41:00.0: reg 0x30: [mem 0xcf000000-0xcf07ffff pref]
    [ 1.754458] pci 0000:41:00.0: 32.000 Gb/s available PCIe bandwidth, limited by 2.5 GT/s x16 link at 0000:40:01.1 (capable of 126.016 Gb/s with 8 GT/s x16 link)
    [ 1.754490] pci 0000:41:00.1: [10de:0fb9] type 00 class 0x040300
    [ 1.754508] pci 0000:41:00.1: reg 0x10: [mem 0xcf080000-0xcf083fff]
    [ 1.781620] pci 0000:41:00.0: vgaarb: VGA device added: decodes=io+mem,owns=none,locks=none
    [ 1.781620] pci 0000:41:00.0: vgaarb: bridge control possible
    [ 1.811278] pci 0000:41:00.1: D0 power state depends on 0000:41:00.0
    [ 2.258616] pci 0000:41:00.0: Adding to iommu group 21
    [ 2.258653] pci 0000:41:00.1: Adding to iommu group 21
    [ 7.847822] vfio-pci 0000:41:00.0: vgaarb: changed VGA decodes: olddecodes=io+mem,decodes=none:owns=none
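The dmesg lines show both GPU functions landing in IOMMU group 21, but a grep for "41:00" cannot show whether any other device shares that group. A minimal sketch that lists every member of a device's group through sysfs; `iommu_group_members` and its optional root argument are illustrative names, not an existing tool:

```shell
#!/bin/sh
# List all PCI devices that share an IOMMU group with the given device.
# $1 = PCI address with domain, e.g. 0000:41:00.0
# $2 = sysfs root; defaults to /sys (overridable for dry runs)
iommu_group_members() {
    bdf=$1
    root=${2:-/sys}
    link="$root/bus/pci/devices/$bdf/iommu_group"
    if [ ! -e "$link" ]; then
        echo "$bdf: no IOMMU group found (is the IOMMU enabled?)" >&2
        return 1
    fi
    # The iommu_group entry is a symlink whose target directory is named
    # after the group number.
    group=$(basename "$(readlink -f "$link")")
    echo "group $group:"
    for dev in "$link"/devices/*; do
        echo "  $(basename "$dev")"
    done
}
```

Calling `iommu_group_members 0000:41:00.0` on the host should list only the two 41:00.x functions if the group is cleanly isolated.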

After VM Boot

PCIE Device:

    root@pve-01:~# lspci -s 41:00 -v
    41:00.0 VGA compatible controller: NVIDIA Corporation GP107GL [Quadro P400] (rev ff) (prog-if ff)
        !!! Unknown header type 7f
        Kernel driver in use: vfio-pci
        Kernel modules: nvidiafb, nouveau
    41:00.1 Audio device: NVIDIA Corporation GP107GL High Definition Audio Controller (rev ff) (prog-if ff)
        !!! Unknown header type 7f
        Kernel driver in use: vfio-pci
        Kernel modules: snd_hda_intel
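The `rev ff`, `prog-if ff` and `header type 7f` values are what lspci prints when every config-space read returns 0xFF bytes (the header type is shown with its top bit masked off), which usually means the device has stopped responding on the bus. A minimal sketch that tests for this directly, assuming the sysfs `config` file is readable; the function name `pci_config_is_dead` is made up for illustration:

```shell
#!/bin/sh
# Return 0 when the vendor/device ID bytes of a PCI function read back as
# all ones, 1 when they look valid, 2 when the config file cannot be read.
# $1 = PCI address with domain, e.g. 0000:41:00.0
# $2 = path to the config file; defaults to the sysfs one for $1
pci_config_is_dead() {
    cfg=${2:-/sys/bus/pci/devices/$1/config}
    if [ ! -r "$cfg" ]; then
        echo "$cfg: not readable" >&2
        return 2
    fi
    # The first four bytes of config space hold the vendor and device IDs.
    id=$(od -An -tx1 -N4 "$cfg" | tr -d ' \n')
    [ "$id" = "ffffffff" ]
}
```

For example, `pci_config_is_dead 0000:41:00.0 && echo "41:00.0 is not responding"` could be run before and after starting the VM to pin down when the card drops off.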

Dmesg:

    root@pve-01:~# dmesg
    ...
    [ 3062.262871] vfio-pci 0000:41:00.0: vfio_ecap_init: hiding ecap 0x19@0x900
    [ 3063.702406] vfio-pci 0000:41:00.0: vfio_bar_restore: reset recovery - restoring BARs
    [ 3063.733764] vfio-pci 0000:41:00.0: vfio_bar_restore: reset recovery - restoring BARs
    [ 3063.759983] vfio-pci 0000:41:00.0: vfio_bar_restore: reset recovery - restoring BARs
    [ 3063.789152] vfio-pci 0000:41:00.0: vfio_bar_restore: reset recovery - restoring BARs
    [ 3063.800319] vfio-pci 0000:41:00.0: Invalid PCI ROM header signature: expecting 0xaa55, got 0xffff
    [ 3063.810980] vfio-pci 0000:41:00.0: vfio_bar_restore: reset recovery - restoring BARs
    [ 3063.825283] vfio-pci 0000:41:00.0: vfio_bar_restore: reset recovery - restoring BARs
    [ 3063.829676] vfio-pci 0000:41:00.0: vfio_bar_restore: reset recovery - restoring BARs
    [ 3063.837817] vfio-pci 0000:41:00.0: vfio_bar_restore: reset recovery - restoring BARs
    [ 3063.853392] vfio-pci 0000:41:00.0: vfio_bar_restore: reset recovery - restoring BARs
    [ 3063.894470] vfio-pci 0000:41:00.0: vfio_bar_restore: reset recovery - restoring BARs
    [ 3063.916454] vfio-pci 0000:41:00.0: vfio_bar_restore: reset recovery - restoring BARs
    [ 3063.925763] vfio-pci 0000:41:00.0: vfio_bar_restore: reset recovery - restoring BARs
    [ 3063.945511] vfio-pci 0000:41:00.0: vfio_bar_restore: reset recovery - restoring BARs
    [ 3082.748642] kvm [43517]: vcpu0, guest rIP: 0xffffffff8106b364 ignored wrmsr: 0xda0 data 0x0
    [ 3082.790655] kvm [43517]: vcpu0, guest rIP: 0xffffffff8106b364 ignored wrmsr: 0xda0 data 0x0
    [ 3082.851592] kvm [43517]: vcpu1, guest rIP: 0xffffffff8106b364 ignored wrmsr: 0xda0 data 0x0
    [ 3082.938058] kvm [43517]: vcpu2, guest rIP: 0xffffffff8106b364 ignored wrmsr: 0xda0 data 0x0
    [ 3083.021439] kvm [43517]: vcpu3, guest rIP: 0xffffffff8106b364 ignored wrmsr: 0xda0 data 0x0
    [ 3083.108127] kvm [43517]: vcpu4, guest rIP: 0xffffffff8106b364 ignored wrmsr: 0xda0 data 0x0
    [ 3083.191588] kvm [43517]: vcpu5, guest rIP: 0xffffffff8106b364 ignored wrmsr: 0xda0 data 0x0
    [ 3083.278314] kvm [43517]: vcpu6, guest rIP: 0xffffffff8106b364 ignored wrmsr: 0xda0 data 0x0
    [ 3083.365051] kvm [43517]: vcpu7, guest rIP: 0xffffffff8106b364 ignored wrmsr: 0xda0 data 0x0
    [ 3083.448406] kvm [43517]: vcpu8, guest rIP: 0xffffffff8106b364 ignored wrmsr: 0xda0 data 0x0
    [ 3087.788173] kvm_set_msr_common: 51 callbacks suppressed
    [ 3087.788174] kvm [43517]: vcpu60, guest rIP: 0xffffffff8106b364 ignored wrmsr: 0xda0 data 0x0
    [ 3087.871836] kvm [43517]: vcpu61, guest rIP: 0xffffffff8106b364 ignored wrmsr: 0xda0 data 0x0
    [ 3087.955876] kvm [43517]: vcpu62, guest rIP: 0xffffffff8106b364 ignored wrmsr: 0xda0 data 0x0
    [ 3088.040166] kvm [43517]: vcpu63, guest rIP: 0xffffffff8106b364 ignored wrmsr: 0xda0 data 0x0
    [ 3088.215955] vfio-pci 0000:41:00.0: vfio_bar_restore: reset recovery - restoring BARs
    [ 3088.219107] vfio-pci 0000:41:00.0: vfio_bar_restore: reset recovery - restoring BARs
    [ 3100.100816] kvm [43517]: vcpu6, guest rIP: 0xffffffff8106b284 ignored rdmsr: 0xc001029b
    [ 3100.103434] kvm [43517]: vcpu6, guest rIP: 0xffffffff8106b284 ignored rdmsr: 0xc0010299
    [ 3101.622908] kvm [43517]: vcpu49, guest rIP: 0xffffffff8106b2c4 ignored rdmsr: 0xda0
    [ 3101.624318] kvm [43517]: vcpu49, guest rIP: 0xffffffff8106b284 ignored rdmsr: 0x3a
    [ 3101.624689] kvm [43517]: vcpu49, guest rIP: 0xffffffff8106b284 ignored rdmsr: 0xd90
    [ 3101.624943] kvm [43517]: vcpu49, guest rIP: 0xffffffff8106b284 ignored rdmsr: 0x570
    [ 3101.625181] kvm [43517]: vcpu49, guest rIP: 0xffffffff8106b284 ignored rdmsr: 0x571
    [ 3101.625401] kvm [43517]: vcpu49, guest rIP: 0xffffffff8106b284 ignored rdmsr: 0x572
    [ 3101.625628] kvm [43517]: vcpu49, guest rIP: 0xffffffff8106b284 ignored rdmsr: 0x560
    [ 3101.625885] kvm [43517]: vcpu49, guest rIP: 0xffffffff8106b284 ignored rdmsr: 0x561

D3 Error:

Before I added disable_idle_d3=1 to /etc/modprobe.d/vfio-pci.conf, Proxmox would give the following errors:

    kvm: vfio: Unable to power on device, stuck in D3
    kvm: vfio: Unable to power on device, stuck in D3

After adding disable_idle_d3=1, the message goes away and Proxmox does not report any issue. The VM ‘starts’ but doesn’t have any display output. The change had little to no effect on the dmesg or lspci output.

PCIe Gen 1 Correlation

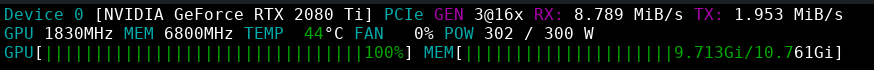

What I found in the additional dmesg output is that the card's x16 link is running at PCIe Gen 1 speed:

[ 1.754458] pci 0000:41:00.0: 32.000 Gb/s available PCIe bandwidth, limited by 2.5 GT/s x16 link at 0000:40:01.1 (capable of 126.016 Gb/s with 8 GT/s x16 link)

The link is listed as capable of Gen 3 x16 (8 GT/s), but is presumably operating at Gen 1 (2.5 GT/s) at this moment.

This would make the BIOS settings that specifically modify PCIe Gen 1 an interesting place to dig.
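The dmesg line is a boot-time snapshot; the negotiated link speed and width can also be read at any time from sysfs, which makes it possible to compare them before and after changing a BIOS setting. A minimal sketch, assuming the kernel exposes the `current_link_speed`, `max_link_speed`, `current_link_width` and `max_link_width` attributes for the device (the function name `pci_link_status` is hypothetical). One caveat, stated as an assumption rather than a finding: many GPUs reportedly sit at 2.5 GT/s while idle or before a driver has initialized them, so a Gen 1 reading at boot is not necessarily abnormal by itself.

```shell
#!/bin/sh
# Print the negotiated and maximum PCIe link speed/width of a device.
# $1 = PCI address with domain, e.g. 0000:41:00.0
# $2 = devices directory; defaults to /sys/bus/pci/devices
pci_link_status() {
    dev=${2:-/sys/bus/pci/devices}/$1
    for attr in current_link_speed max_link_speed \
                current_link_width max_link_width; do
        if [ -r "$dev/$attr" ]; then
            printf '%s: %s\n' "$attr" "$(cat "$dev/$attr")"
        else
            # Attribute missing: device gone, or kernel does not expose it.
            printf '%s: unavailable\n' "$attr"
        fi
    done
}
```

Running `pci_link_status 0000:41:00.0` for the GPU and `pci_link_status 0000:40:01.1` for the upstream port named in the dmesg line shows both ends of the link.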

Shutdown the VM and Retry

When the graphics card is reporting “Unknown header type 7f” in lspci, I have tried restarting the VM in Proxmox, and I get the following dmesg output. To test again, I have to reboot the host.

    [ 78.473349] vfio-pci 0000:41:00.0: timed out waiting for pending transaction; performing function level reset anyway
    [ 79.721260] vfio-pci 0000:41:00.0: not ready 1023ms after FLR; waiting
    [ 80.777226] vfio-pci 0000:41:00.0: not ready 2047ms after FLR; waiting
    [ 82.985161] vfio-pci 0000:41:00.0: not ready 4095ms after FLR; waiting
    [ 87.337063] vfio-pci 0000:41:00.0: not ready 8191ms after FLR; waiting
    [ 95.784989] vfio-pci 0000:41:00.0: not ready 16383ms after FLR; waiting
    [ 112.936730] vfio-pci 0000:41:00.0: not ready 32767ms after FLR; waiting
    [ 147.752952] vfio-pci 0000:41:00.0: not ready 65535ms after FLR; giving up
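One thing that is sometimes tried before resorting to a host reboot is removing the stuck functions from the kernel's view and rescanning the bus. A minimal sketch using the sysfs `remove` and `rescan` files; the function name and the `PCI_SYSFS_ROOT` override are made up for illustration. It is an assumption that this helps at all here: a card that no longer answers config reads may well stay dead until the slot is power-cycled, and the VM must be stopped first.

```shell
#!/bin/sh
# Remove the given PCI functions from the kernel, then rescan the bus so
# they are re-enumerated. Must be run as root with the VM shut down.
# $@ = PCI addresses with domain, e.g. 0000:41:00.0 0000:41:00.1
# PCI_SYSFS_ROOT overrides /sys/bus/pci (useful for a dry run).
pci_remove_and_rescan() {
    root=${PCI_SYSFS_ROOT:-/sys/bus/pci}
    for bdf in "$@"; do
        if [ -w "$root/devices/$bdf/remove" ]; then
            echo 1 > "$root/devices/$bdf/remove"
        else
            echo "$bdf: cannot remove (not present, or not root?)" >&2
        fi
    done
    # Give the kernel a moment to tear the devices down before rescanning.
    sleep 1
    echo 1 > "$root/rescan"
}
```

For this card that would be `pci_remove_and_rescan 0000:41:00.0 0000:41:00.1`, followed by another look at `lspci -s 41:00 -v` to see whether the header reads back correctly.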

Further Discussion

At this point, I’m somewhat stumped. I’ve looked around on the internet trying to find issues where people report these errors:

“Invalid PCI ROM header signature: expecting”

“!!! Unknown header type 7f”

“Unable to power on device, stuck in D3”

And I have found less than I had hoped. I suspect this is not an issue with my Proxmox configuration, but rather with my BIOS settings.

Does anyone have any ideas?

Edit 1: Fixed Images

Edit 2: Added Additional Dmesg Information

Edit 3: Added PCIe Gen 1 Correlation

Edit 4: Added Shutdown the VM and Retry