I love VFIO. The only two things that make it less than pleasant are the BattlEye anti-cheat in Rainbow Six Siege (I have to dual boot an OS just for that game) and evdev not being resettable until the VM is restarted.

For the anti-cheat, there might be little we can do; would these systems be okay if the guest’s memory were encrypted?

For evdev, it’s annoying when a USB hub resets in the middle of a game: I have to reboot the whole system to get my input back. I like evdev because I can switch my keyboard and mouse between host and guest with a simple key combo, then just switch display inputs and use Scream for audio. I don’t use Looking Glass, even though it’s a great tool.
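For the evdev setup described above, libvirt/QEMU is pointed at the stable `/dev/input/by-id/*-event-kbd` and `*-event-mouse` paths. The helper below is only a sketch that lists those candidates. The function name and the `INPUT_BY_ID` override are hypothetical and are there for testing. The paths it prints go into an `<input type='evdev'>` element, roughly `<source dev='/dev/input/by-id/YOUR-KEYBOARD-event-kbd' grab='all' repeat='on' grabToggle='ctrl-ctrl'/>`, assuming a libvirt version recent enough to support evdev inputs.

```sh
#!/bin/sh
# List the stable /dev/input/by-id paths for keyboards and mice, which are the
# paths an evdev input in libvirt/QEMU is usually pointed at.
# INPUT_BY_ID is a hypothetical override for testing; it defaults to the real directory.
list_evdev_candidates() (
    dir="${INPUT_BY_ID:-/dev/input/by-id}"
    found=0
    for f in "$dir"/*-event-kbd "$dir"/*-event-mouse; do
        # An unmatched glob stays literal, so skip anything that does not exist.
        [ -e "$f" ] || continue
        echo "$f"
        found=1
    done
    # Non-zero exit status when no keyboard or mouse event device was found.
    [ "$found" -eq 1 ]
)
```

Run `list_evdev_candidates` on the host and copy the path you want into the domain XML. A device usually exposes several `-event-*` interfaces, so you may need to try more than one.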

I got bored of all the current and next-gen GPU news and bought a second-hand 7900 XTX reference card for $800.

I may be wrong, but I’d say I now have a GPU for the next two generations.

So, without further ado: I passed it through successfully without doing anything special. I just added the card’s PCI IDs to the vfio kernel args and added a small requirement to load the vfio-pci driver before the amdgpu driver, as found in a Proxmox forum post. That step is needed because I use the iGPU of a Ryzen 7 PRO 4750G (Renoir desktop APU) for the host, on an MSI X570 PRO motherboard.
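For anyone reproducing the “load vfio-pci before amdgpu” step, a common way to express it is a modprobe.d file. It has an `options vfio-pci ids=...` line, so vfio-pci claims the passthrough card, and a `softdep amdgpu pre: vfio-pci` line, so amdgpu (which the host iGPU also needs) loads only after vfio-pci. The sketch below just prints such a file. The function name is hypothetical, and you would substitute the vendor:device pairs that `lspci -nn` shows for your own GPU and its HDMI audio function.

```sh
#!/bin/sh
# Print a modprobe.d snippet that hands the given PCI IDs to vfio-pci and makes
# amdgpu wait until vfio-pci has loaded. Arguments are vendor:device pairs as
# printed by `lspci -nn`. The function name is hypothetical.
gen_vfio_modprobe_conf() (
    if [ "$#" -eq 0 ]; then
        echo "usage: gen_vfio_modprobe_conf VENDOR:DEVICE..." >&2
        exit 1
    fi
    ids=""
    for id in "$@"; do
        # Basic sanity check: four hex digits, a colon, four hex digits.
        case "$id" in
            [0-9a-fA-F][0-9a-fA-F][0-9a-fA-F][0-9a-fA-F]:[0-9a-fA-F][0-9a-fA-F][0-9a-fA-F][0-9a-fA-F]) ;;
            *) echo "invalid PCI ID: $id" >&2; exit 1 ;;
        esac
        # Join the IDs with commas.
        ids="${ids:+$ids,}$id"
    done
    printf 'options vfio-pci ids=%s\n' "$ids"
    printf 'softdep amdgpu pre: vfio-pci\n'
)
```

Usage would be along the lines of `gen_vfio_modprobe_conf <gpu-id> <audio-id> | sudo tee /etc/modprobe.d/vfio.conf`. On Manjaro the initramfs usually has to be regenerated afterwards (`sudo mkinitcpio -P`) for the change to apply at early boot.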

The only tricky thing I had to do was start the VM without the HDMI cable plugged in. Same symptom as the Intel Arc cards had when they first launched.

No need for ReBAR or anything else. Performance is pretty good overall. I’ve only noticed some rendering delays, depending on the game and/or Chromium-based apps/GUIs. FH5 (Forza Horizon 5), a AAA game, at 4K60 with Extreme settings uses about 50% of the GPU and about 12 GB of VRAM, although in some scenarios it goes up to 80% GPU utilization. I know I could squeeze out extra performance if I set things up properly. The catch is that with ReBAR enabled I lose the ability to run a two-way GPU passthrough alongside the other card, an Intel A770 16GB LE. I use that card with a Win11 Insider Dev preview to test WSL2 (custom WSL2 distros, such as endeavourOSWSL2, don’t get working GPU acceleration) plus WSA with nested virtualization enabled (and now Stable Diffusion too: tomshardware/news/stable-diffusion-for-intel-optimizations).

Background: the VM I assigned the 7900 XTX to was already set up with a Win10 ameliorated.io 21H1 image and previously had a 6750 XT Red Devil assigned to it. Its drivers are Adrenalin 23.4.2, installed through SDIO. I haven’t updated the drivers yet and don’t plan to, since performance is good; maybe I’ll consider it when SDIO releases another stable driver. That may also have done the trick.

I haven’t tested yet whether there’s any reset bug with this setup. The Intel card surely has one. Rebooting the VM is more harmful than shutting it down, because after a reboot it can’t start again. Shutting it down lets me recover control of my evdev USB input devices, but Virt-manager freezes and Cinnamon shows a force-close warning dialog, so I force it closed.

Then I open it again, and it sits for about 30 seconds until it connects to the libvirtd service. At the end the service apparently gets restarted automatically (forcefully?) and all memory is freed.

I then reboot the entire host, and the only thing left is the hung qemu-system-x86_64 emulator process, which gets killed by the reboot after a while (30 seconds max).

On the next boot the Intel card isn’t available; it doesn’t even show up as a PCI device. Rebooting again solves that.

After two reboots it seems able to recover to a normal state by itself for the next passthrough.

Neither card can display the OVMF UEFI screens (the 6750 XT could). For updates and other pre-boot screens I’ve assigned SPICE graphics with QXL video, and I disabled that QXL adapter in Device Manager once the system and drivers were set up (this is about the Intel Arc VM).

So to update between Win11 Insider Dev builds, I don’t assign the PCIe card and work with the QXL display alone to see what the VM is doing. The thing is, I’m now stuck on build 23493.1000, because newer builds fail while initializing the VirtIO drivers. There hasn’t been a new release of those drivers from the Fedora group since January, so I’m guessing that’s the cause.

I have two monitors: a 4K60 BenQ for gaming and a 1080p BenQ for work. The setup is pretty rock solid except for the Intel reset bug right now. Two keyboards, two mice, two audio interfaces (HDMI/DisplayPort through the monitor speakers).

The host kernel is Manjaro 6.3 mainline (which is EOL now, BTW). It’s not a VFIO-patched variant, and the motherboard has good IOMMU groupings. 6.4 fucked up some of my peripherals, so I’m sticking with 6.3 until it gets better.
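For anyone checking whether their own board has similarly good IOMMU groupings, the usual approach (the Arch Wiki has a well-known loop for this) is to walk `/sys/kernel/iommu_groups`. Below is a minimal sketch. The function name is hypothetical, and the `SYSFS_ROOT` override exists only so it can be pointed at a test tree.

```sh
#!/bin/sh
# List every IOMMU group and the PCI functions inside it, to check whether the
# GPU and its audio function sit in a group of their own. SYSFS_ROOT is a
# hypothetical override for testing; it defaults to the real /sys.
list_iommu_groups() (
    root="${SYSFS_ROOT:-/sys}/kernel/iommu_groups"
    if [ ! -d "$root" ]; then
        echo "no IOMMU groups found (is the IOMMU enabled in firmware and kernel?)" >&2
        exit 1
    fi
    for group in "$root"/*; do
        for dev in "$group"/devices/*; do
            # Skip the literal pattern left behind by an empty or missing group.
            [ -e "$dev" ] || continue
            printf 'group %s: %s\n' "${group##*/}" "${dev##*/}"
        done
    done | LC_ALL=C sort -k2,2n -k3,3   # numeric group order, then by address
)
```

To see what each address is, feed it to `lspci -nns`, for example `lspci -nns 0000:09:00.0`.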

I’ll note which AGESA version this board is running once I get a chance to reboot and check. I updated it back in January 2021 when switching from the 2700X to the 4750G, and since everything was working OK I never updated it again.

I also have an Ansible role I wrote to automate setting up VFIO passthrough. I don’t have it uploaded anywhere, though. The code isn’t clean and I’m somewhat too lazy to clean it up. It’s simple, it works, but it’s not perfect. I don’t feel it’s worth showing in public, but if someone asks for it, I’m willing to share it, np.

Written from my Cromite web browser through Tor (root iptables, InviZible Pro app) under WSA, under W11, under Manjaro.

P.S.: BTW Wendell, why do you have your website/forum behind Cloudflare? github/zidansec/CloudPeler / crimeflare.eu.org

P.S. 2: Use this against DDoS instead of Crimeflare: github/SpiderLabs/ModSecurity

I don’t have anything to actively contribute to this, but I want to add to the pool of people interested in setting up VFIO gaming (’nix host, Windows guest). First, it’s something really cool. Second, I have enough to deal with from Windows 10 data gathering as it is, and I don’t want to deal with whatever Windows 11 has baked in.

Here is my setup.

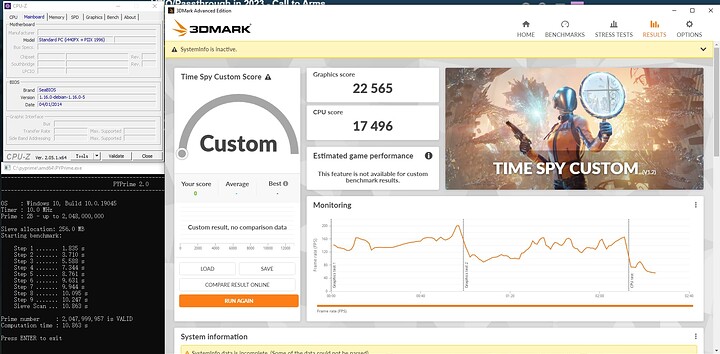

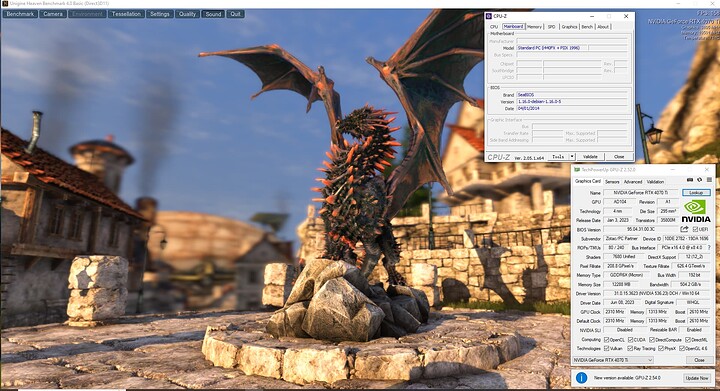

CPU: AMD Ryzen 9 7950X 16-Core Processor

RAM: 128GB@6000MT/s (Kingston FURY Beast DDR5-5600 EXPO 2x32GB, Kingston FURY Beast DDR5-6000 EXPO 2x32GB)

GPU: ZOTAC GAMING GeForce RTX 4070 Ti Trinity (12GB)

Main Board: MSI MPG X670E CARBON WIFI (MS-7D70)

Storage: 2x Intel SSD 670p M.2 NVMe 1TB, WD 8TB SATA(WD80EDAZ), WD 14TB Ultrastar DC HC530 SATA

Network: 10Gb Intel x540-T2 (SR-IOV Enabled)

PSU: Corsair RM750x

The host is mainly used as a NAS server backed by ZFS. On top of the ZFS storage, it hosts a couple of LXD containers and VMs. The RTX 4070 Ti is passed through to one of the VMs. Everything is backed up by ZFS. I don’t use mirrors, but I do have backups: if anything fails, I just restore from backup. Not a big deal for me.

Host: Ubuntu Server 23.04

Guest:

- Windows 10 Pro for gaming and working.

- Ubuntu container, as Plex Server.

- Ubuntu container, as Unifi Controller.

- Ubuntu container, as Web Server.

VM performance.

I appended my scripts to this post for anyone interested in VFIO with Radeon 7000 series cards or virtualization with the 7950X3D.

You would get better PCIe throughput to the GPU if you were running a Q35 system. NVIDIA drivers change some parameters based on the reported PCIe link width and speed. i440FX never had PCIe, though, so it presents the GPU as a plain PCI device, and PCI has no concept of link width or speed.

There is an entire discovery thread on here about it from a few years ago, where this was proven and the feature to pass the link parameters to the guest was added to QEMU.
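To check which chipset an existing libvirt guest uses, look at the `machine=` attribute of the `<type>` element in its domain XML. `pc-q35-*` means Q35, and `pc-i440fx-*` means the older i440FX. The helper below is a hypothetical sketch that reads domain XML on stdin and reports the chipset family. It does a text match rather than a real XML parse, so treat it as a quick check only.

```sh
#!/bin/sh
# Report whether a libvirt domain uses the Q35 or the i440FX machine type.
# Reads domain XML on stdin. The function name is hypothetical, and the match
# is a plain text search on the <type ... machine='...'> element.
machine_family() (
    machine="$(sed -n "s/.*<type[^>]*machine=['\"]\([^'\"]*\)['\"].*/\1/p" | head -n 1)"
    case "$machine" in
        q35|pc-q35-*)   echo "q35 ($machine)" ;;
        pc|pc-i440fx-*) echo "i440fx ($machine)" ;;
        "")             echo "no machine attribute found" >&2; exit 1 ;;
        *)              echo "other ($machine)" ;;
    esac
)
```

Usage would be something like `virsh dumpxml win10 | machine_family`, where `win10` stands in for your domain name. Switching an existing Windows guest to Q35 usually takes more than editing that one attribute, because the controller and PCI address layout differ between the two machine types.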

Good point. The software stack has a long history, going all the way back to my Z87 Haswell 4770K system, and I’ve upgraded the hardware over the years. The Windows VM was created a long time ago, too. At that time I just passed through the Intel iGPU so that I could get display output directly. Yes, i440FX is outdated now. If I were doing it today, I would choose Q35.

Even so, I don’t notice any PCIe bandwidth issue, even with Resizable BAR enabled.

This is awesome. I just got into this after being a long-time VMware user. I have VFIO mostly working on an i9, with an NVIDIA 2080 as the main card and an NVIDIA 1050 for GPU passthrough. I’m building it basically for Adobe products and the Office 365 suite of apps, including Microsoft Teams. My only issue to work out at the moment is that once I shut down the VM, I can’t start it again and have the GPU come up; it requires a full reboot of host/guest. It works once, but I don’t dare stop the VM and add a device, because then a reboot is needed. Other than this “use it once” issue, it seems to work well on Garuda Linux. I just wish I could shut down the VM when I don’t need it and start it again without a reboot.

I had the same issue when doing passthrough with a GTX 1070. My workaround was removing the device from the PCI bus through sysfs, then triggering a rescan to reattach it. I had to do this before every boot of the VM. I think I found this method somewhere on the Arch Wiki. Maybe it will work for you?

The script I used:

reattach-vfio-devices

```sh
#!/bin/sh

# Edit these to point to the appropriate addresses on your system
gpuAddress='0000:09:00.0'
gpuAudioAddress='0000:09:00.1'

devices="$gpuAddress $gpuAudioAddress"

for dev in $devices; do
    devpath="/sys/bus/pci/devices/$dev"
    if test -d "$devpath"; then
        # printf instead of "echo -n": dash (/bin/sh on many distros) prints "-n" literally
        printf 'Removing device: %s ... ' "$dev"
        echo 1 | sudo tee "$devpath/remove" >/dev/null
        sleep 2
        echo "done"
    else
        echo "error: $dev not found in device tree" >&2
    fi
done

echo 'Rescanning PCI bus'
echo 1 | sudo tee /sys/bus/pci/rescan >/dev/null
```

If removing the “GPU audio device” stalls and throws errors in the kernel log, try reattaching just the main GPU device.

Awesome, thank you, I will try this. I’m not passing through the audio part of the NVIDIA 1050. Even though it shows the VFIO driver bound to it, putting the card’s audio function into the VM breaks Looking Glass. I’m fine without it, as long as I can stop having to reboot the whole setup. I will try this tonight if possible. Thank you so much.

Care to share how it breaks it? It should not be possible.

There might be a bug involving vfio-pci, Linux kernel 6.x, and the Ryzen 7000 iGPU.

The issue is that when you load the vfio-pci module for the dGPU, it trashes the framebuffer of the iGPU, so there is no more video output from the iGPU once vfio-pci is loaded.

It doesn’t happen with Linux kernel 5.x, and it doesn’t happen with other GPUs either. The simple fix is to put in another GPU for the host (e.g. a cheap R7 240) instead of using the Ryzen 7000 iGPU.

I’ve been rocking a Ryzen 9 5900X with 64 GB of RAM, a 6800 XT for the host, and a 3090 for passthrough. I wanted to say thank you to everyone who has contributed and helped make this possible for mere mortals. Huge shoutout to developers like gnif. Looking Glass is amazing; I would happily pay for it and look forward to supporting the project further.

I recently fell into the k8s rabbit hole again and found KubeVirt. Then I said, “Hmm, this seems complex but also cool, let’s mess around.”

I took a look at Rancher Labs’ Harvester a year or so ago and found it interesting, but I decided to stick with tried-and-true Proxmox, and I couldn’t be happier with my two Proxmox nodes. Still, I wanted to try Harvester to see if I could really manage VMs with Kubernetes and do things like VFIO with it. So, for science, I bought a Minisforum HX99G and got tinkering.

It’s pretty amazing, especially if you use Rancher to deploy k8s clusters onto Harvester as a “cloud target”. As far as VFIO goes, they make adding PCIe devices incredibly easy. What I haven’t figured out is why my VM fails to boot when I attach the 6600M.

I’m sure it’s user error, and putting k8s on top of everything adds another layer of complexity. All that being said, VFIO is awesome. I’ve enjoyed the past couple of years of learning and tinkering and look forward to more! Thanks, everyone!!

Sure, if I can. Basically, with just the GPU passed through, life is fine and everything works as it should for the most part. Once I add the NVIDIA audio function of the card and start the VM, I get a broken glass icon in the upper right of the screen. Looking Glass still shows the login window, but the keyboard and mouse no longer work. If I remove the audio function of the card, it works again.

Both the GPU and audio functions are excluded from the host and show VFIO as their driver when I list them with `lspci -nnv`. I think that was the command I used; I’m at work right now and can’t access my home PC. As long as I don’t add the NVIDIA audio, life is pretty good. Again, though, once I add it, I get what looks like a broken glass icon in the upper right of the screen and can’t log in through Looking Glass.
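You can also confirm which kernel driver each function of the card is bound to by following its `driver` symlink in sysfs. `lspci -nnk` reports the same information as “Kernel driver in use”. Below is a minimal sketch. The function name and the `SYSFS_ROOT` override are hypothetical, and the example addresses are placeholders for your own.

```sh
#!/bin/sh
# Print the kernel driver bound to each PCI function given as an argument,
# e.g. 0000:09:00.0 for the GPU and 0000:09:00.1 for its HDMI audio.
# SYSFS_ROOT is a hypothetical override for testing; it defaults to the real /sys.
show_pci_drivers() (
    status=0
    for dev in "$@"; do
        devpath="${SYSFS_ROOT:-/sys}/bus/pci/devices/$dev"
        if [ ! -e "$devpath" ]; then
            echo "$dev: not present" >&2
            status=1
        elif [ -L "$devpath/driver" ]; then
            # The symlink points at .../drivers/<name>; keep only <name>.
            driver="$(readlink "$devpath/driver")"
            echo "$dev: ${driver##*/}"
        else
            echo "$dev: no driver bound"
        fi
    done
    exit "$status"
)
```

For example, `show_pci_drivers 0000:09:00.0 0000:09:00.1` should print `vfio-pci` for both functions before the VM starts if the host has left them alone.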

That icon means the LG host application can’t start and/or can’t capture anything, and you have fallen back to SPICE video. In this mode, input only works if you press Scroll Lock to capture it; this is a failure-recovery feature.

You need to check why the GPU is not working, have a look in device manager.

Thank you, I will check that tonight and see what’s up. Very odd. For now I just don’t add the audio from the NVIDIA card; I haven’t really needed it anyway ;-). Thanks again, I will check this. I got Microsoft Teams working; the audio is about a second off from the video, but tolerable. Works really well other than that.

When I add the audio function of the 1050, I get the dreaded Code 43 on the video side, but the audio device installs fine. Once I remove the audio function, the card goes back to loading fine.

Well, I have a new desktop… and so far the VFIO passthrough is a fail…

AMD R7 7700

B650E Taichi motherboard

RX 6900 XT (host)

I’m trying to pass through the APU’s iGPU (integrated graphics passthrough), but I get error Code 43. This is an all-AMD system. I’m sure discrete GPU passthrough would be fine, but I don’t want to add another GPU when I already have one built into the CPU.

I haven’t tried anything like GVT-d, and I don’t even know if that’s possible on an AMD APU. It works on my Intel laptop.

No glory here darn it. But thank you for the script and suggestion.

I went further down this rabbit hole in recent days and found a couple of surprises, so here’s a quick update for folks interested in this topic.

AVIC has two parts: the so-called “SVM AVIC” (related to the CPU/software interrupts) and “IOMMU AVIC” (interrupts from devices).

Now the bizarre part. Zen 2 and Zen 3 do not have “IOMMU AVIC” (!!) support in hardware, and AMD seems to have stayed silent about it for years. Zen 1 and Zen+ have both “SVM AVIC” and “IOMMU AVIC” (!!) support in hardware.

I’m not sure about Zen 4 and am curious about it. I wonder what people get from `dmesg` on Linux. Here is an example output from Zen 2:

```
$ dmesg|grep AMD-Vi
[ 0.151457] AMD-Vi: Using global IVHD EFR:0x0, EFR2:0x0
[ 0.730724] pci 0000:00:00.2: AMD-Vi: IOMMU performance counters supported
[ 0.731501] pci 0000:00:00.2: AMD-Vi: Found IOMMU cap 0x40
[ 0.731502] AMD-Vi: Extended features (0x58f77ef22294a5a, 0x0): PPR NX GT IA PC GA_vAPIC
[ 0.731505] AMD-Vi: Interrupt remapping enabled
```

It’s from a Ryzen, but I expect EPYC to be the same (looking forward to hearing about more surprises).
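For anyone comparing their own Zen 4 output, the interesting part seems to be the flag list on the “Extended features” line. As far as I can tell from the kernel’s AMD IOMMU driver, the standalone `GA` token is printed for the guest virtual APIC feature bit, while `GA_vAPIC` reflects a separate capability field. The driver appears to need both before it enables the IOMMU side of AVIC, and it logs “Virtual APIC enabled” when it does. The Zen 2 sample above shows `GA_vAPIC` but no standalone `GA`, which fits the finding. The helper below is a hypothetical sketch that pulls those two flags out of `dmesg` text on stdin.

```sh
#!/bin/sh
# Read dmesg text on stdin and report whether the AMD-Vi "Extended features"
# line lists the GA and GA_vAPIC flags. The function name is hypothetical.
iommu_avic_flags() (
    set -f   # the flag list can contain tokens like "[5]"; don't glob them
    line="$(grep 'AMD-Vi: Extended features' | head -n 1)"
    if [ -z "$line" ]; then
        echo "no AMD-Vi extended features line found" >&2
        exit 1
    fi
    # Keep only the flags after the "(...): " part of the line.
    flags="$(printf '%s\n' "$line" | sed 's/.*): //')"
    ga=no
    gavapic=no
    for f in $flags; do
        case "$f" in
            GA) ga=yes ;;
            GA_vAPIC) gavapic=yes ;;
        esac
    done
    echo "GA=$ga GA_vAPIC=$gavapic"
)
```

Usage would be `sudo dmesg | iommu_avic_flags`; the Zen 2 line above yields `GA=no GA_vAPIC=yes`. The CPU side (“SVM AVIC”) is a separate question. There, `cat /sys/module/kvm_amd/parameters/avic` shows whether KVM has it turned on.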

Software-wise, it also requires support from the guest OS. Windows 10/11 seems fine with “SVM AVIC”, but it’s not clear about “IOMMU AVIC”. Linux seems fine with both. macOS, unsurprisingly, is not okay with either.

My machine spends most of its uptime in a macOS VM, so it seems I’ve been chasing thin air. LOL