Good day, everybody.

For the last couple of days I’ve been trying to consolidate my servers and make my whole self-hosted infrastructure more portable. I would love to accomplish this using just Docker and Docker Compose.

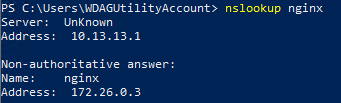

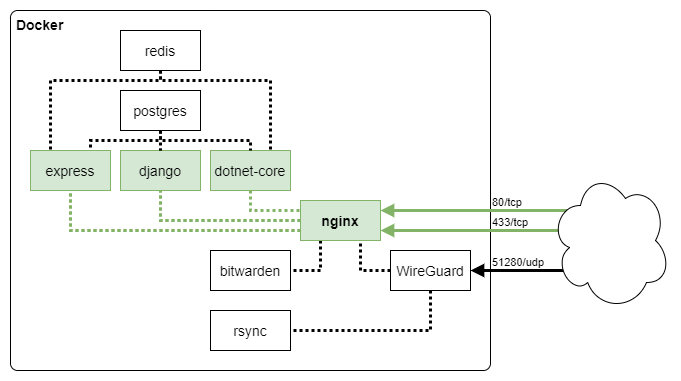

I’ve already put all of my services into one big docker-compose file, with all services behind an NGINX reverse proxy. To reduce the potential attack surface, I would like to make my more sensitive services (password manager, webmail, media server, etc.) reachable only through a WireGuard VPN.

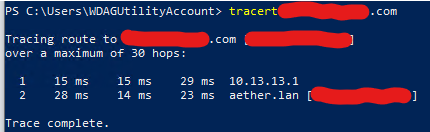

I’ve gotten as far as configuring a WireGuard container (linuxserver/docker-wireguard) and connecting to it. Where I’m stuck is getting the NGINX container to listen on both the Ethernet and the WireGuard interfaces.

This is a heavily redacted version of the docker-compose.yml:

```yaml
services:

  nginx:
    image: nginx:mainline-alpine
    ports:
      - "80:80"
      - "443:443"
    volumes:
      - "/certs:/etc/ssl/cert"
      - "/static:/usr/share/nginx/html"
      - "/log:/var/log/nginx"
    networks:
      - public
      - private
      - wireguard

  wireguard:
    image: linuxserver/wireguard
    cap_add:
      - NET_ADMIN
      - SYS_MODULE
    volumes:
      - "/config:/config"
      - "/lib/modules:/lib/modules"
    ports:
      - "51820:51820/udp"
    sysctls:
      - net.ipv4.conf.all.src_valid_mark=1
    networks:
      - wireguard

  public-container:
    # ...
    networks:
      - public

  private-container:
    # ...
    networks:
      - private

# Every non-default network referenced by a service must be declared here.
networks:
  public:
  private:
  wireguard:
```

And this is what I tried in the NGINX config:

```nginx
server {
    # Public vhost: bound to the addresses of the Ethernet interface
    listen <eth0-ipv4-address>:443 ssl;
    listen [<eth0-ipv6-address>]:443 ssl;
    server_name public.com;

    include /etc/nginx/public-proxy.conf;
}

server {
    # Private vhost: meant to be reachable only via the WireGuard interface
    listen <wg0-ipv4-address>:443 ssl;
    listen [<wg0-ipv6-address>]:443 ssl;
    server_name private.com;

    include /etc/nginx/public-proxy.conf;
    include /etc/nginx/private-proxy.conf;
}
```

But it doesn’t seem to be that easy. I’ve also tried setting `network_mode: "service:wireguard"` on the NGINX container, but then the proxy is no longer reachable on the eth0 interface.
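For comparison, here is a minimal sketch of that `network_mode` variant, reusing the service, volume, and network names from the file above (the remaining WireGuard settings, such as its environment variables, are left out as in the redacted original). The relevant point is general Docker behavior, not something specific to this setup: a container with `network_mode: "service:wireguard"` has no network stack of its own, so Compose rejects `ports:` and `networks:` on it, and any host ports NGINX should answer on have to be published on the `wireguard` service instead. Whether this alone restores access on eth0 here is an untested assumption.

```yaml
services:
  wireguard:
    image: linuxserver/wireguard
    cap_add:
      - NET_ADMIN
      - SYS_MODULE
    volumes:
      - "/config:/config"
      - "/lib/modules:/lib/modules"
    sysctls:
      - net.ipv4.conf.all.src_valid_mark=1
    ports:
      - "51820:51820/udp"
      # NGINX shares this container's network namespace, so its ports are published here.
      - "80:80"
      - "443:443"
    networks:
      - public
      - private

  nginx:
    image: nginx:mainline-alpine
    # NGINX sees the same interfaces as the wireguard container:
    # its Docker-side interfaces plus wg0.
    network_mode: "service:wireguard"
    depends_on:
      - wireguard
    volumes:
      - "/certs:/etc/ssl/cert"
      - "/static:/usr/share/nginx/html"
      - "/log:/var/log/nginx"
    # No "ports:" or "networks:" here; they cannot be combined with network_mode.

networks:
  public:
  private:
```

One caveat, also unverified here: `depends_on` only orders container startup, so if NGINX starts before `wg0` has its address, a `listen <wg0-ipv4-address>:443` directive can fail to bind.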

Any suggestions or pointers would be greatly appreciated. If there is a way to do this, I will probably also need some help with the NGINX configuration and with sorting out DNS.

I thank you in advance!
