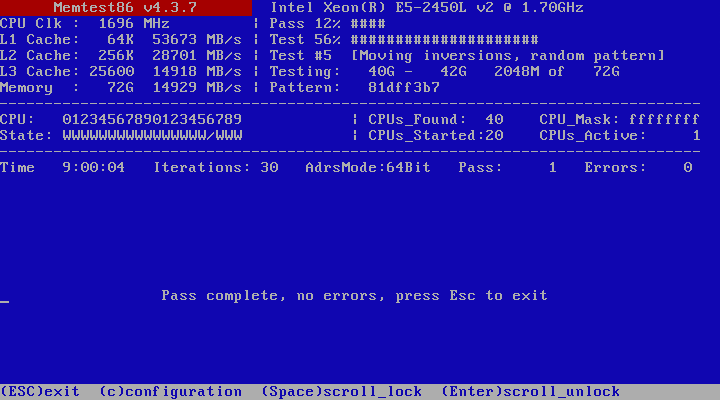

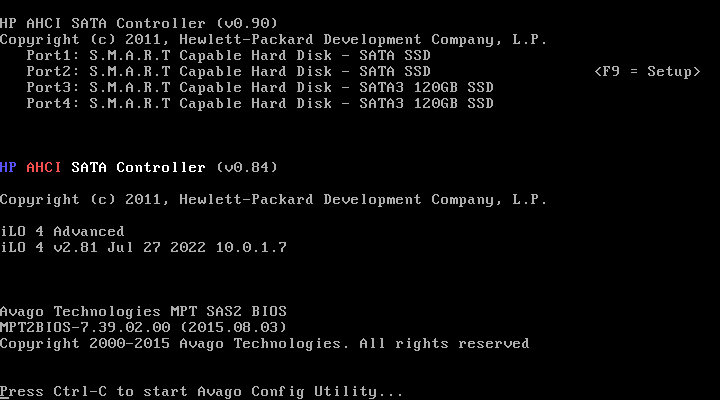

Memtest ran through without any errors, but it appears I didn’t configure it to use both CPUs, so I’m going to run it again.

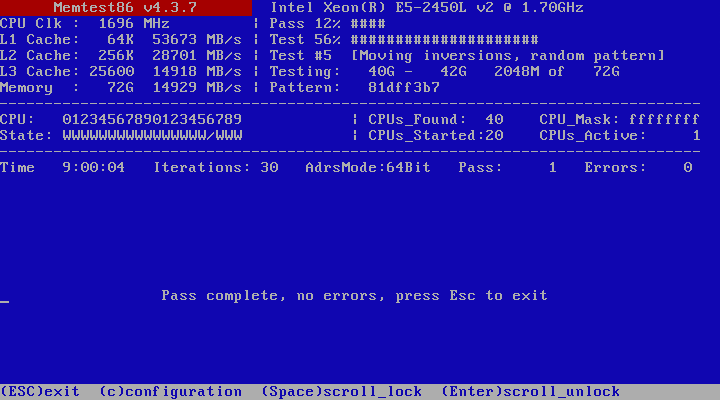

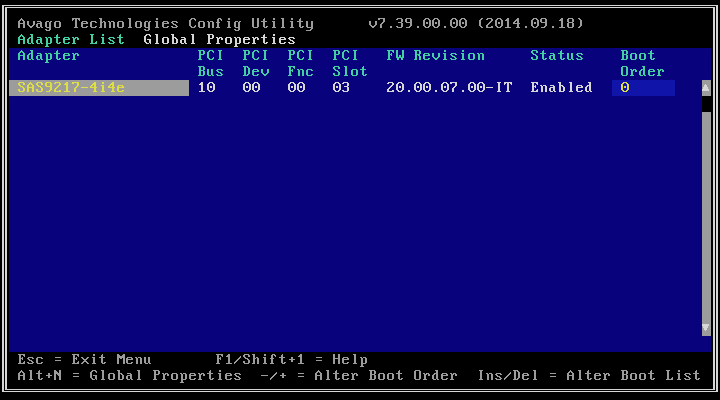

Rebooted and tried to start the Avago Config Utility with Ctrl + C.

This produced a red screen like this:

This shows up like this in the logs.

Anyone know if this could be related to the ZFS issues?

When searching online for “NMI Detected HP Proliant” I found a few suggestions, like not using HP memory, re-applying thermal paste to the southbridge, and just not using an HPE certified card.

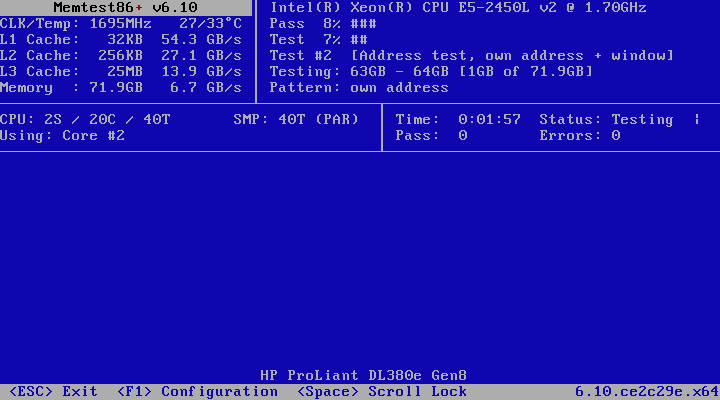

Couldn’t figure out how to get Memtest86 v4 to run on multiple CPUs so now running Memtest86+.

Memtest86+ completed real quick in the default parallel mode. No issues.

Booted into FreeDOS and flashed latest BIOS rom and IT mode firmware on the 9207-4i4e.

Still crashes when trying to enter into the config utility during POST.

Booted into TrueNAS to see if the issue is resolved despite still crashing. Ran zpool clear shelf and same thing happened.

      pool: shelf
     state: UNAVAIL
    status: One or more devices are faulted in response to IO failures.
    action: Make sure the affected devices are connected, then run 'zpool clear'.
       see: https://openzfs.github.io/openzfs-docs/msg/ZFS-8000-JQ
      scan: resilvered 840K in 00:00:01 with 0 errors on Wed Feb 22 23:38:32 2023
    config:

        NAME                                      STATE     READ WRITE CKSUM
        shelf                                     UNAVAIL      0     0     0  insufficient replicas
          raidz2-0                                DEGRADED     0     0     0
            843c78cb-e4f4-49c3-94ed-6df39813d0ef  ONLINE       0     0     0
            ab837ac4-b2f8-4e90-be23-f3eba9270a6a  ONLINE       0     0     0
            c9dc3a80-dba2-43d7-ae07-e4c795fe5725  ONLINE       0     0     0
            f6ce1fbe-9b24-4f77-bb71-a1dcb917be7a  ONLINE       0     0     0
            01448d17-2e96-4a23-ae24-784205b5baac  REMOVED      0     0     0
          raidz2-1                                UNAVAIL      0     0     0  insufficient replicas
            ff427fbc-4378-4704-96b0-c5bafd733ac9  REMOVED      0     0     0
            e4db13f0-9f23-40e5-89c3-1c80109b5be8  REMOVED      0     0     0
            9d3fa290-bd66-4237-9d62-4cbfca338006  REMOVED      0     0     0
            f46fd6a3-04f8-4f6d-8c4b-2a6af5d57afa  REMOVED      0     0     0
            da0f430b-c41e-4c73-a6cf-2cb796196e0a  REMOVED      0     0     0
          raidz2-2                                UNAVAIL      0     0     0  insufficient replicas
            b2d582ab-40aa-4308-993c-fa19da583b53  REMOVED      0     0     0
            0fca336a-3ba0-43ba-a7db-1c25f5c53b1d  REMOVED      0     0     0
            08b6f785-af46-444b-9bfb-7f328781c1e9  REMOVED      0     0     0
            1b1edf4a-ec8b-416d-86ef-160c511717ac  REMOVED      0     0     0
            c40684a5-ba2b-4acf-b14f-754579413e19  REMOVED      0     0     0
          raidz2-3                                UNAVAIL      0     0     0  insufficient replicas
            9391acdb-fa3c-4e70-a372-63437236f630  REMOVED      0     0     0
            04197bd5-6ceb-4155-972d-d0b6413b36d7  REMOVED      0     0     0
            34d68ce2-d06e-4cd4-93b5-d6e13e184cb9  REMOVED      0     0     0
            629e798e-3783-4132-bd3f-b0b0e59a909f  REMOVED      0     0     0
            b37cf4bd-02e4-4046-a70f-643333701aa6  REMOVED      0     0     0
        special
          mirror-4                                ONLINE       0     0     0
            9efed506-136d-482e-8bf4-298baf9b7577  ONLINE       0     0     0
            cce016bb-169b-49a5-898b-a3b0081d3b7d  ONLINE       0     0     0
        logs
          mirror-5                                ONLINE       0     0     0
            7e4395d2-a6da-4073-a3b1-69b6559571dd  ONLINE       0     0     0
            bbb6919a-c4ad-4628-a16b-a211da06dc05  ONLINE       0     0     0
        cache
          9fa1042f-573a-4498-b98d-f503666eb500    ONLINE       0     0     0
          036aaa42-e7d7-4dcd-8b66-f9fa87e6ed8c    ONLINE       0     0     0
        spares
          de525a83-906f-49e0-89d1-1a95fabdac5f    AVAIL
          e0a461ee-1099-455e-a79f-f57e7208a23f    AVAIL

    errors: List of errors unavailable: pool I/O is currently suspended
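For anyone who wants to see at a glance which vdevs dropped out, here is a minimal Python sketch that tallies leaf-device states per vdev from `zpool status` text. It runs on an abbreviated, made-up sample (the `disk-*` names are placeholders, not the real partition UUIDs), and `summarize` and `over_parity` are hypothetical helper names, not part of any ZFS tooling. It assumes the data vdevs are raidz2, so a vdev becomes unavailable once more than two of its leaves are missing.

```python
import re
from collections import Counter

# Abbreviated sample shaped like `zpool status` output.
# The disk-* names are hypothetical placeholders.
SAMPLE = """\
  pool: shelf
 state: UNAVAIL
config:

    NAME          STATE     READ WRITE CKSUM
    shelf         UNAVAIL      0     0     0  insufficient replicas
      raidz2-0    DEGRADED     0     0     0
        disk-a    ONLINE       0     0     0
        disk-b    REMOVED      0     0     0
      raidz2-1    UNAVAIL      0     0     0  insufficient replicas
        disk-c    REMOVED      0     0     0
        disk-d    REMOVED      0     0     0
        disk-e    REMOVED      0     0     0
errors: List of errors unavailable: pool I/O is currently suspended
"""

STATE_RE = re.compile(
    r"^\s*(\S+)\s+(ONLINE|DEGRADED|FAULTED|OFFLINE|REMOVED|UNAVAIL|AVAIL|INUSE)\b"
)
VDEV_RE = re.compile(r"^(raidz[123]?|mirror|draid\S*)-\d+$")


def summarize(status_text):
    """Return {vdev_name: Counter of leaf-device states}."""
    summary = {}
    current = None
    in_config = False
    for line in status_text.splitlines():
        stripped = line.strip()
        if stripped.startswith("NAME"):
            in_config = True          # device table starts after the header row
            continue
        if stripped.startswith("errors:"):
            break
        if not in_config:
            continue
        if len(line.split()) == 1:    # section header: special, logs, cache, spares
            current = None
            continue
        match = STATE_RE.match(line)
        if not match:
            continue
        name, state = match.groups()
        if VDEV_RE.match(name):
            current = name
            summary[current] = Counter()
        elif current is not None:     # leaf device under the most recent vdev
            summary[current][state] += 1
    return summary


def over_parity(summary, parity=2):
    """Names of raidz2 vdevs that lost more leaves than parity can cover."""
    lost = []
    for name, states in summary.items():
        missing = sum(n for state, n in states.items() if state != "ONLINE")
        if name.startswith("raidz2") and missing > parity:
            lost.append(name)
    return lost


for vdev, states in summarize(SAMPLE).items():
    print(vdev, dict(states))
print("beyond parity:", over_parity(summarize(SAMPLE)))
```

Devices listed directly under `cache` and `spares` are skipped on purpose, since they do not affect whether the pool can be imported.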

Attaching the dmesg too. Seeing very similar things so I’m doubting this fixed anything.

after_update_dmesg.txt (229.9 KB)

The temps on the chipset are certainly toasty compared to the rest of the system, but nowhere near the critical temp.

Gonna try resetting the server to factory settings tomorrow.

Google found me this suggestion.

Did you upgrade fw on your HBA recently?

If not, do you have another PCIe slot to move the HBA to? Maybe reseat?

Great find!

I only just upgraded the fw, but it looks like the BIOS is the same version as before.

Installed the known good BIOS and now I can enter the config utility!

Before I start messing around with these controller settings, I’m going to see if the updated HBA BIOS resolves the issue.

Edit: Well… that didn’t fix anything in TrueNAS.

I will need to do a few more reboots, but running a scrub right now to be sure everything is alright!

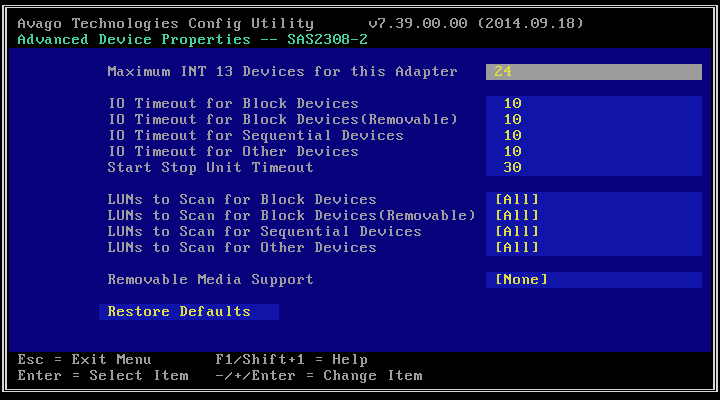

It appears that these settings fixed it in my case:

I have no idea if these settings are bad yet, I would assume ZFS would catch any issues if things aren’t written to disk. Some of these may also be much higher than needed.

Edit: Scrub was clean, but on reboot I’m running into a similar issue again… Must have just been one of the good reboots.

dmesg.txt (242.7 KB)

Since the server isn’t at my home I made a visit.

Here’s a list of things I did in no particular order:

Still no luck. Ordered a new DAC-type cable which should arrive tomorrow. Going to reset the BIOS and iLO at that time too.

I would suggest a totally different route. For this you’ll need a little bit of Linux knowledge and a spare (empty) SSD.

Take the SSD and install Ubuntu on it, and then install the required ZFS tools and libraries. See here for instructions (follow those instructions but do not try to create any pool or anything).

Boot the SSD with Ubuntu on your system, log in, become root (sudo) and try to import the pool (in your case the command should be something like: zpool import tank ).

If it works, then great: power off the system, power on, and try to import again. Works? Then it looks like the hardware and firmware are OK and it might be an issue with TrueNAS. If not, it might be an issue with your HBA card.

After doing those tests, you can always reboot to Truenas, just try not to write anything to the pool when doing those tests.
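The import test described above could be scripted roughly like this. It is only a sketch under a few assumptions: `import_check` and `run` are hypothetical helper names, the pool name is passed in by the caller, and the read-only import (`-o readonly=on`) is an addition that is not part of the suggestion itself, included only to honour "try not to write anything to the pool". It has to run as root on a system with the ZFS tools installed.

```python
import subprocess


def run(cmd):
    """Run a command and return (exit_code, combined stdout and stderr)."""
    proc = subprocess.run(cmd, capture_output=True, text=True)
    return proc.returncode, proc.stdout + proc.stderr


def import_check(pool, runner=run):
    """Import the pool read-only, report its health, then export it again.

    Stops at the first failing step and returns a list of
    (command, exit_code, output) tuples for the steps that were attempted.
    """
    steps = [
        ["zpool", "import", "-o", "readonly=on", pool],  # read-only is an assumption
        ["zpool", "status", "-x", pool],                 # -x: only report problems
        ["zpool", "export", pool],                       # leave the pool as found
    ]
    results = []
    for cmd in steps:
        code, out = runner(cmd)
        results.append((" ".join(cmd), code, out.strip()))
        if code != 0:
            break
    return results
```

Calling `import_check("tank")` once after a cold boot and once after a reboot, then comparing the two result lists, gives the same comparison as doing it by hand. The `runner` parameter exists only so the steps can be dry-run with a stand-in function before touching a real pool.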

Swapped the DAC cable and was very excited when it worked on first boot. Rebooted to see if it was the problem and no dice… same issue. Tried the iLO reset, no dice. Reset BIOS settings, same thing.

I left the server off all night so thought cold boots may be helping, but tried leaving it unplugged for 15 minutes and booting. No dice.

The server takes an incredibly long time to boot so it took a while to get Ubuntu Server installed because it kernel panicked once while booting from the live USB. I installed zfsutils-linux and mounted the pool with zpool import shelf -f with no issues. Rebooted and it ran into the same issues that I’ve been seeing on TrueNAS.

This is leading me to believe it has something to do with mounting the drive while the system is booting or some other timing issue…

Edit: Gonna play around and see how TrueNAS mounts these ZFS pools; fstab, systemd, some ZFS thing, etc.

Well, there’s only /etc/fstab (no mstab, vfstab, etc.) and it doesn’t have anything other than the boot volume. Systemd mounts don’t seem to be initiating anything related. It appears to be something iXsystems or ZFS is doing, and the services I found for mounting ZFS with systemd weren’t enabled.
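A quick way to repeat that systemd check is sketched below. The unit names are the ones OpenZFS packages normally ship on Linux; whether TrueNAS SCALE actually relies on them (rather than its own middleware) is an assumption this sketch does not settle. `zfs_unit_states` and `boot_time_importers` are hypothetical helper names.

```python
import subprocess

# Units normally installed by OpenZFS on Linux. Treat the list as an
# assumption for any particular distribution or appliance.
ZFS_UNITS = [
    "zfs-import-cache.service",
    "zfs-import-scan.service",
    "zfs-mount.service",
    "zfs-import.target",
    "zfs.target",
]


def systemctl_is_enabled(unit):
    """Return the state word printed by `systemctl is-enabled` for one unit."""
    proc = subprocess.run(
        ["systemctl", "is-enabled", unit], capture_output=True, text=True
    )
    return proc.stdout.strip() or proc.stderr.strip() or "unknown"


def zfs_unit_states(query=systemctl_is_enabled):
    """Map each known ZFS unit to its enablement state."""
    return {unit: query(unit) for unit in ZFS_UNITS}


def boot_time_importers(states):
    """Units that systemd would start at boot to import or mount pools."""
    return sorted(unit for unit, state in states.items() if state == "enabled")
```

If `boot_time_importers(zfs_unit_states())` comes back empty, systemd is not what imports the pool at boot, which matches what was observed here and points at some other component doing it.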

I’ve decided as a stop-gap solution to just boot it up, reinstall TrueNAS, import the pools, and hope I don’t have to reboot until I can save up for a replacement system. If anyone has some good ideas I’m open to giving them a shot. Thank you to everyone who’s helped and/or suggested things to try.

As for the replacement, I’m looking at a TrueNAS Mini R and loading it up with six 20TB drives in a single RAIDZ2. At a later time I can throw in another six drives to increase total capacity to 160TB. I was leaning towards a Synology RS2421+ largely due to the flexibility of adding drives as required, but their recent restrictions on 3rd party hard drives make it more expensive than the Mini R.
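The capacity figures work out as follows. This is back-of-the-envelope arithmetic only: it assumes each RAIDZ2 vdev gives up two drives' worth of space to parity and ignores ZFS metadata overhead, padding, and the TB versus TiB difference. `raidz_usable_tb` is a hypothetical helper name.

```python
def raidz_usable_tb(drives, drive_tb, parity=2):
    """Rough usable capacity of one RAIDZ vdev: data drives times drive size."""
    if drives <= parity:
        raise ValueError("a RAIDZ vdev needs more drives than parity devices")
    return (drives - parity) * drive_tb


one_vdev = raidz_usable_tb(6, 20)   # six 20TB drives in RAIDZ2
two_vdevs = 2 * one_vdev            # after adding a second six-drive vdev

print(one_vdev, two_vdevs)          # 80 160
```

So the 160TB figure is the approximate usable space once both six-drive vdevs are installed; the raw total at that point would be 240TB.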

This current system was designed at a time when it was the primary SAN/NAS for my homelab and so performance for VMs and similar workloads was paramount. It has since become a media server along with backups with the homelab stuff moving to a Turing Pi v2 due to power consumption, better resiliency, and quieter operation.

Update: I completely reinstalled TrueNAS SCALE. Pools imported just fine, but learned SCALE doesn’t support multipathing yet so I shut it down to remove the other HBA. Booted back up without errors. No idea if it was a lucky boot, some configuration issue (which wouldn’t make sense with the issues I had with Ubuntu), something related to the shares or services relying on the pools (same thing as above), or something else entirely.

Once I have something else to transfer all the data off to I may try a few more things, but things appear to be running well for now.

Hello, I think I am having a similar issue. I tried adding a LSI00300 IT Mode LSI 9207-8E 6Gb/s External PCI-E 3.0x8 Host Controller Card to our Dell R720XD server running Proxmox VE 8 with TrueNAS to add a NetApp DS2446 with drives in it. This card ended up taking down our whole server. I tried rebuilding the Proxmox host and then restoring backups, but the problem came back. I figured out it was this card that was causing the whole system to go down. I’m not sure if this card was not flashed for IT mode correctly, or if it is just not compatible with my system. Could anyone recommend an HBA controller card that does not have issues like this? Or a solution to make this card work?