This is a cross-post from Reddit.

Okay. So I love TMM. I’ve purchased it more to support the development than to actually gain access to the paid features. But I find it remarkable that the most obvious feature I would want is missing. When TMM (or literally every other media software…) queries a file, it takes the file’s embedded metadata into consideration; things like the title are pulled so we can get clues for matching/scraping. You would be AMAZED at what is stale and lurking in your files, potentially outing you for where that totally-legally-acquired movie came from!
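If you want to see what is already sitting in a file before touching anything, a minimal sketch like the one below dumps the container-level tags. It assumes `ffprobe` is reachable on your PATH (the script further down looks in a `bin` folder instead); the function names and the sample JSON are made up for illustration.

```python
import json
import subprocess


def parse_format_tags(ffprobe_json):
    """Return the container-level tags from ffprobe's JSON output."""
    info = json.loads(ffprobe_json)
    return info.get("format", {}).get("tags", {})


def read_format_tags(path, ffprobe="ffprobe"):
    """Run ffprobe on a file and return its container-level tags.

    Assumption: 'ffprobe' resolves on PATH; pass a full path otherwise.
    """
    result = subprocess.run(
        [ffprobe, "-v", "quiet", "-print_format", "json", "-show_format", path],
        capture_output=True,
        text=True,
        check=True,
    )
    return parse_format_tags(result.stdout)


if __name__ == "__main__":
    # Hypothetical ffprobe output, used here so the demo runs without a media file.
    sample = (
        '{"format": {"filename": "movie.mp4", '
        '"tags": {"title": "Some.Release-GROUP", "comment": "leftover note"}}}'
    )
    for key, value in parse_format_tags(sample).items():
        print(f"{key}: {value}")
```

Calling `read_format_tags(r"Z:\movies\some.movie.mp4")` on a real file would return the same kind of dictionary.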

We have software that nicely helps us organize our files and standardize naming conventions, subtitles, trailers, everything, in an attempt to create the: ULTIMATE SOURCE OF MEDIA TRUTH

But for some odd reason, we never write any of that harnessed metadata back into the files on the NAS. WHY? Well, the sinister truth is that we just need to do it ourselves. So I wrote a script, with /r/chatgpt’s help. FWIW I am not a coder, but feel free to fork this and do whatever you guys want. I am just putting it under the GPL so people don’t do something horrible to their files and then try to blame me. I may fix some bugs, but I may or may not find or get to them all.

Some assumptions are made here:

- ffmpeg must be placed in a folder called bin in the same directory as the script (the script also calls ffprobe from that folder).
- ‘Z:\movies\’ is the monitor directory by default.
- .mp4 files are the only format it’s looking for by default.
- I am expressly wiping out the HDR type metadata field, as I have re-encoded all of my HDR content to SDR for my workload. Feel free to comment out those sections.
- My testing has only been on Windows; I’m unsure about compatibility elsewhere.
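The assumptions above are hard-coded in the script. If you plan to change them, one option is to gather them in a single place. The sketch below is only an illustration: the `Settings` class and the helper names are hypothetical and do not appear in the script, and unlike the script it matches extensions case-insensitively.

```python
import os
from dataclasses import dataclass


@dataclass
class Settings:
    """Hypothetical container for the defaults listed above."""
    monitor_dir: str = "Z:\\movies\\"   # directory that gets crawled
    extensions: tuple = (".mp4",)       # add ".m4v" etc. to widen the search
    bin_dir: str = "bin"                # folder next to the script holding ffmpeg/ffprobe


def tool_path(settings, script_dir, name):
    """Build the path to a bundled tool such as ffmpeg or ffprobe."""
    return os.path.join(script_dir, settings.bin_dir, name)


def wanted(settings, filename):
    """True if the filename has one of the configured extensions (case-insensitive)."""
    return filename.lower().endswith(settings.extensions)


if __name__ == "__main__":
    settings = Settings()
    print(tool_path(settings, os.getcwd(), "ffmpeg"))
    print(wanted(settings, "Big.Buck.Bunny.(2008).mp4"))
```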

FEATURES

-

We crawl the defined directory to find files which match the format we are looking for.

-

We extract the metadata from the NFO file generated by TMM for that file if it exists.

-

We write that metadata back to the file, with some added cleanup and assumptions.

-

Now our file is immediately recognizable by things like Plex, so no more false positives.

-

If you lose your TMM database, you wont have to match all of your files again!

-

Basic progress tracking

-

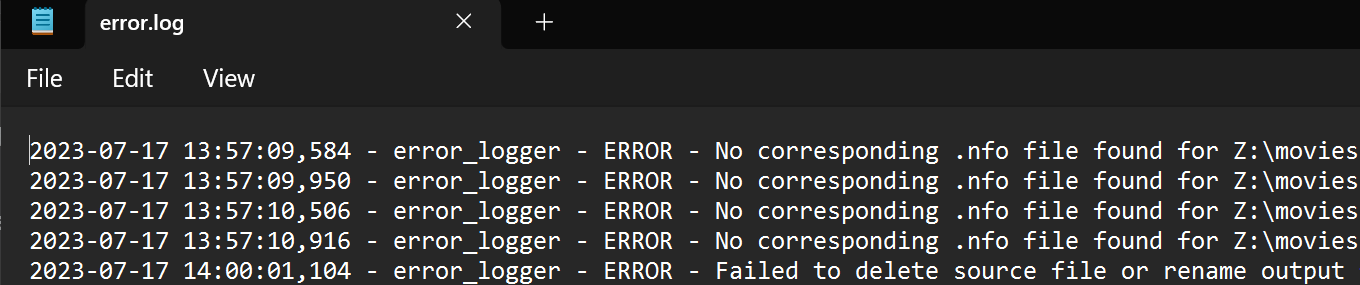

Everything is logged to the directory from which the script was run. There is an error log and an informational one (which really doesn’t tell you much information lol).

-

The system creates a JSON file to track previously proced files

-

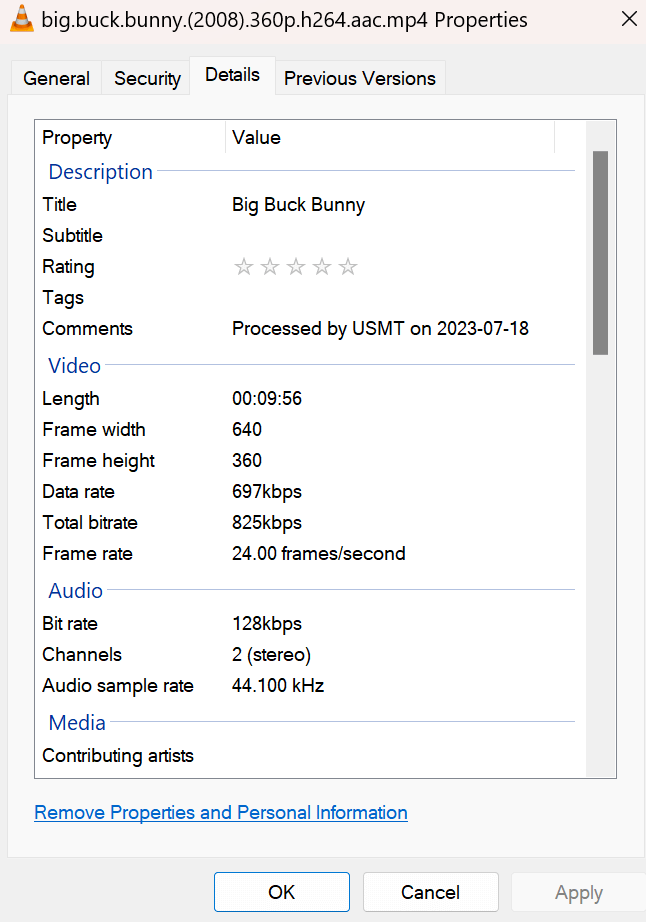

There is also a tagging process which occurs and includes ‘Processed by USMT on DATE’ in order to ensure that if there is database corruption we still aren’t doing extra work.

-

There is a secondary python script which you can use to track progress between (and during) runs.

-

A file called 'YOURFILENAME_output.MP4 is created, so we are never modifying anything directly. The script will delete the original file and replace it with the new one once completed.Before Example…and lets just say I’ve seen alot weirder and more colorfully labeled things.

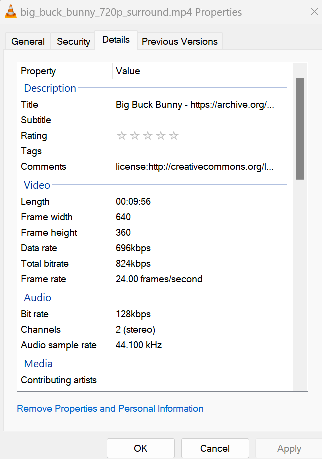

Before Windows

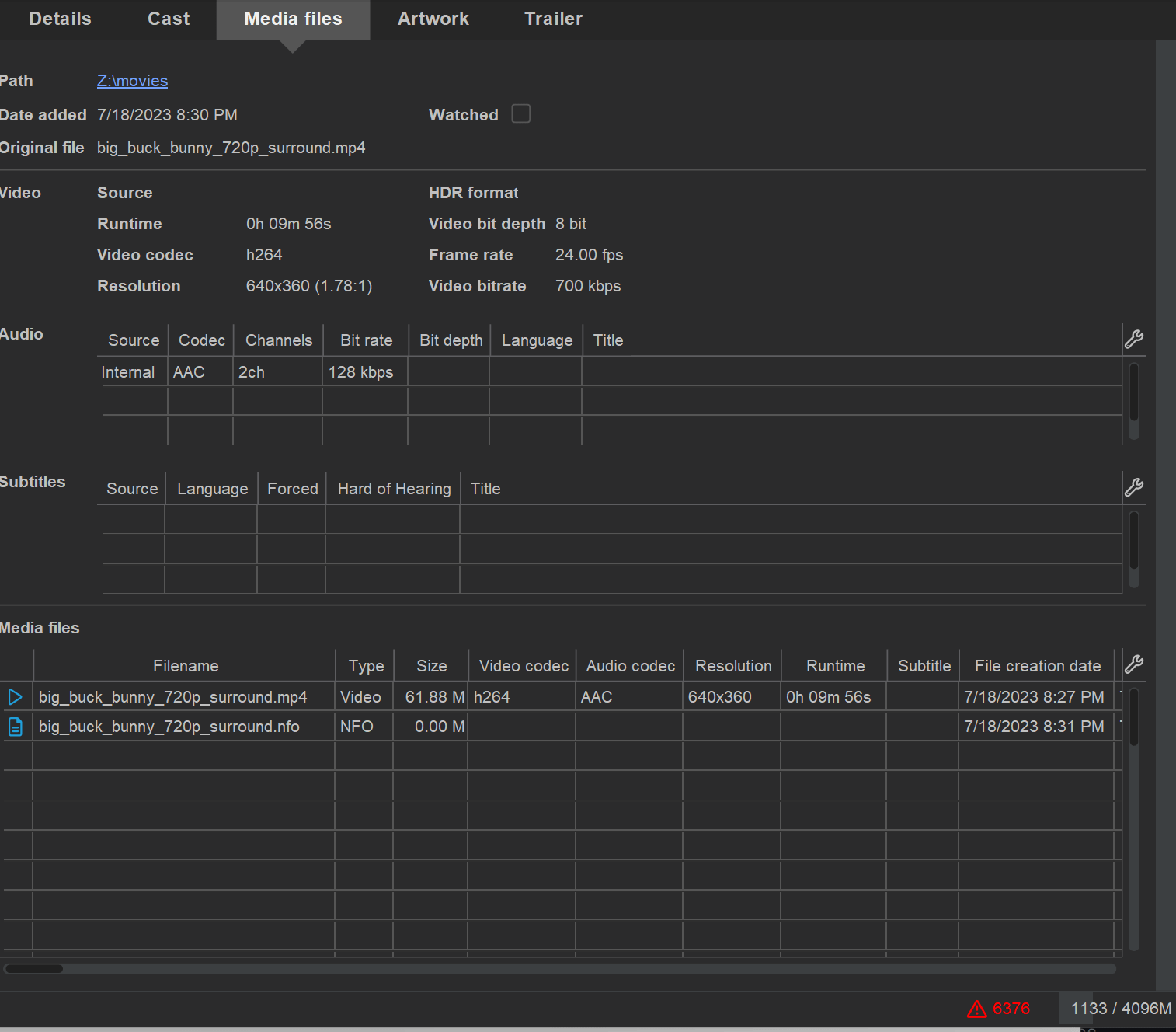

TMM Before

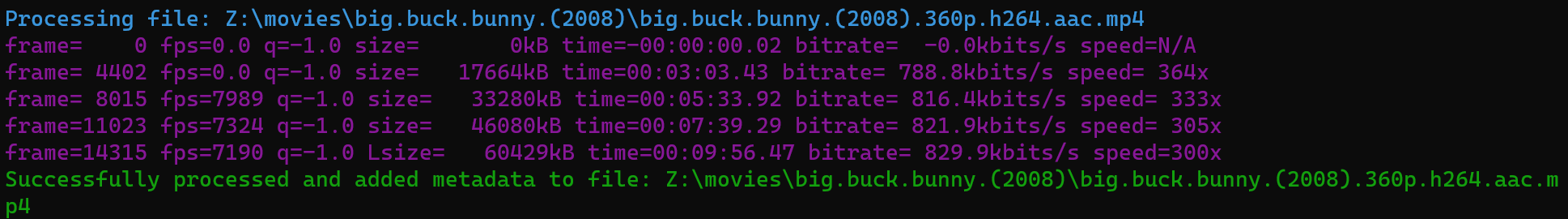

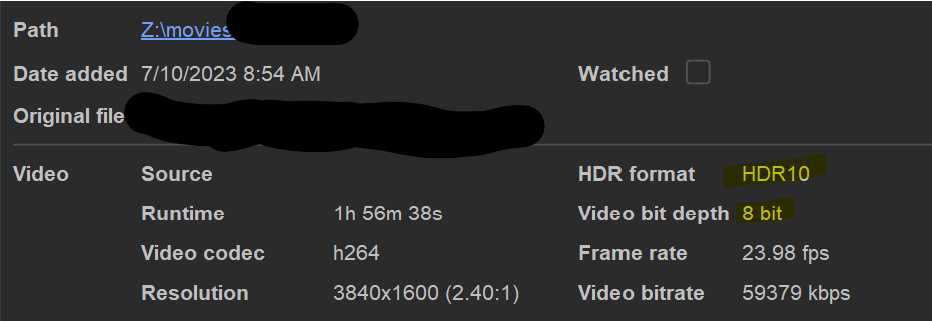

Script running

TMM Before Name Match on Detection

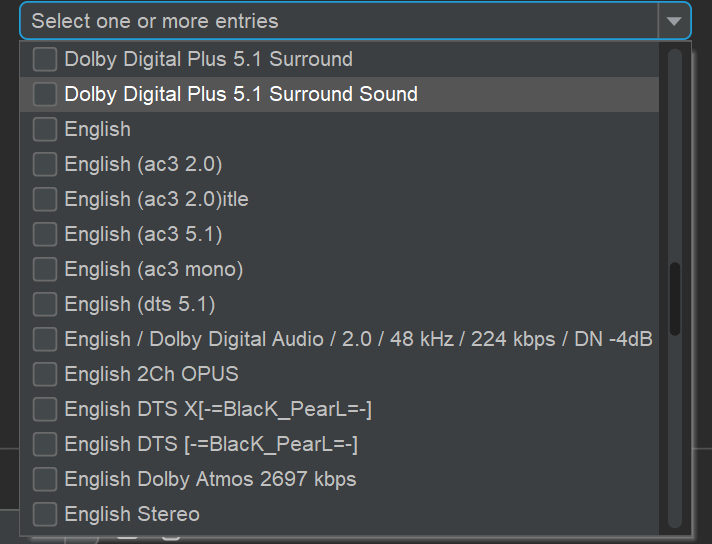

Non Standardized Audio Channel Titles Before Script.

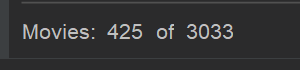

425 Movies that don’t have any value assigned to audio track languages!

One of 43 movies which were 8 bit videos that were labeled as having HDR

Progress Tracking

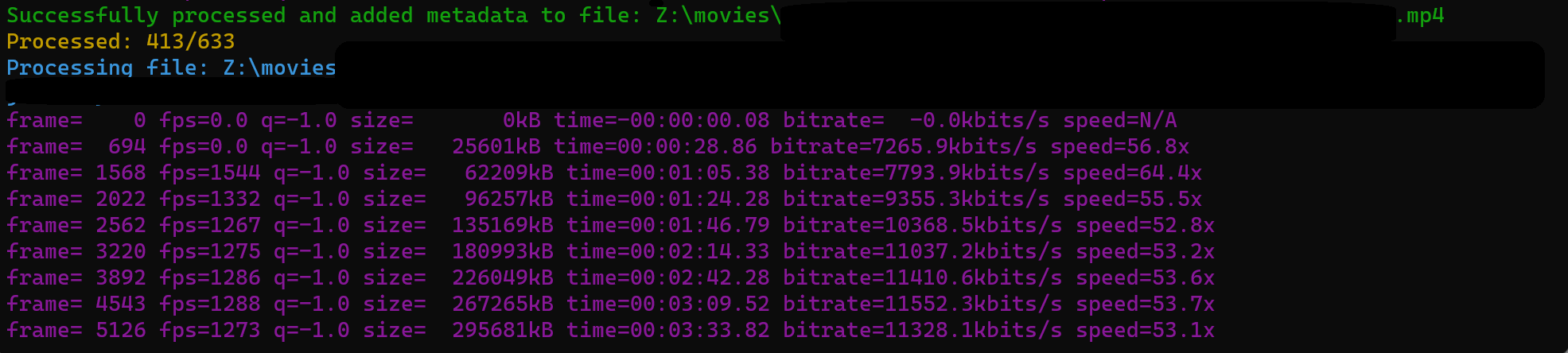

Reports

Logging

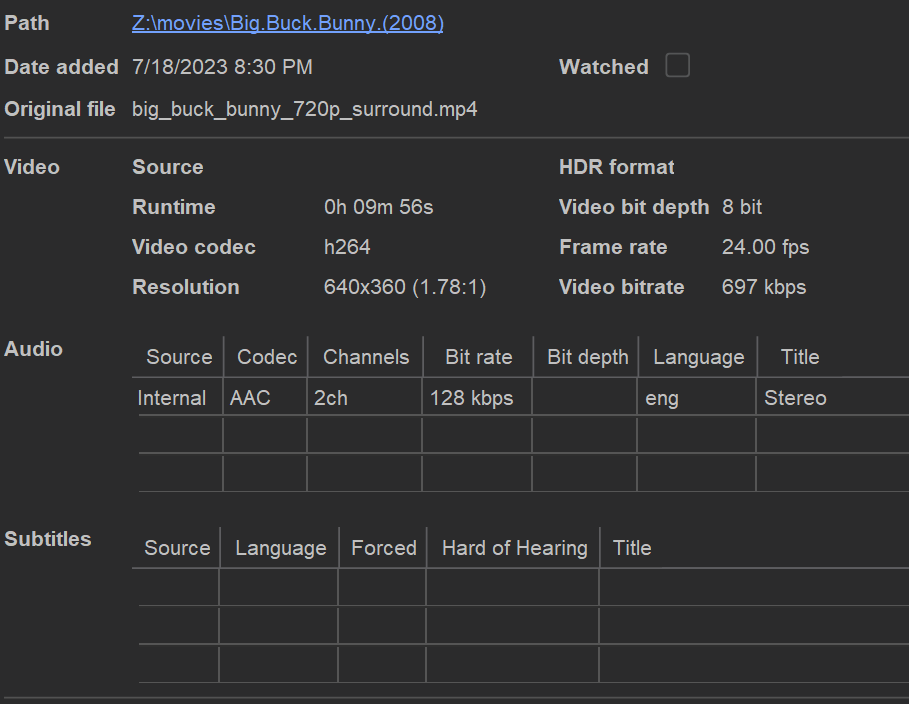

After cleanup TMM

After Cleanup Windows
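The core of the write-back step described in the feature list, reading TMM's NFO and turning it into ffmpeg `-metadata` options, can be sketched roughly as below. This is an illustration rather than the script's exact code: the sample NFO and the function names are invented, and the options are built as a list (suitable for `subprocess.run` without `shell=True`) instead of one command string.

```python
import xml.etree.ElementTree as ET

# Invented sample; a real TMM NFO contains many more elements.
SAMPLE_NFO = """<movie>
  <title>Big Buck Bunny</title>
  <year>2008</year>
  <plot>A giant rabbit
takes on three bullies.</plot>
  <ratings><rating><value>6.4</value></rating></ratings>
</movie>"""


def nfo_to_tags(nfo_xml):
    """Collect the text of each top-level NFO element, flattened to one line."""
    root = ET.fromstring(nfo_xml)
    tags = {}
    for child in root:
        text = (child.text or "").replace("\n", " ").strip()
        if text:  # skip empty elements and pure containers such as <ratings>
            tags[child.tag] = text
    return tags


def metadata_args(tags):
    """Turn a tag dictionary into ffmpeg '-metadata key=value' arguments."""
    args = []
    for key, value in tags.items():
        args += ["-metadata", f"{key}={value}"]
    return args


if __name__ == "__main__":
    print(metadata_args(nfo_to_tags(SAMPLE_NFO)))
```

Passing the values as separate list items avoids having to strip or escape quotes in plots and titles, which the command-string approach needs.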

# ffmpeg must be placed in a folder called bin in the same directory from which the script is run
# ULTIMATE SOURCE OF MEDIA TRUTH - nickf v2.1
# Copyright (C) 2023 Nick Fusco
#
# This program is free software: you can redistribute it and/or modify
# it under the terms of the GNU General Public License as published by
# the Free Software Foundation, either version 3 of the License, or
# (at your option) any later version.
#
# This program is distributed in the hope that it will be useful,
# but WITHOUT ANY WARRANTY; without even the implied warranty of
# MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the
# GNU General Public License for more details.
#
# You should have received a copy of the GNU General Public License
# along with this program. If not, see <https://www.gnu.org/licenses/>.
# ffmpeg must be placed in a folder called bin in the same directory from which the script is run
########################################################################################################

import os
import json
import subprocess
import time  # needed for time.sleep() in the monitoring loop at the bottom
import xml.etree.ElementTree as ET
from datetime import datetime
import logging
from colorama import init, Fore, Style

# Initialize colorama
init()

# Header titles
HEADER_TITLE = "ULTIMATE SOURCE OF MEDIA TRUTH - nickf v2.1"
PROGRESS_TITLE = "Monitoring Progress"

# Print the header
print(Fore.GREEN + HEADER_TITLE)
print(Fore.YELLOW + PROGRESS_TITLE)

# Set up logging
info_logger = logging.getLogger('info_logger')
error_logger = logging.getLogger('error_logger')
info_logger.setLevel(logging.INFO)
error_logger.setLevel(logging.ERROR)
info_handler = logging.FileHandler('info.log')
error_handler = logging.FileHandler('error.log')
info_handler.setLevel(logging.INFO)
error_handler.setLevel(logging.ERROR)
formatter = logging.Formatter('%(asctime)s - %(name)s - %(levelname)s - %(message)s')
info_handler.setFormatter(formatter)
error_handler.setFormatter(formatter)
info_logger.addHandler(info_handler)
error_logger.addHandler(error_handler)

# JSON File that stores processed and skipped files information
json_file = 'processed_files.json'

# Function to load the json file
def load_json():
    try:
        with open(json_file, 'r') as file:
            return json.load(file)
    except FileNotFoundError:
        info_logger.info(f'JSON file {json_file} not found. A new one will be created.')
        return {}
    except json.JSONDecodeError as e:
        error_logger.error(f'Error decoding JSON file {json_file}. Error: {e}')
        return {}

# Function to write to the json file
def write_to_json(data):
    try:
        with open(json_file, 'w') as file:
            json.dump(data, file)
    except Exception as e:
        error_logger.error(f'Failed to write to JSON file {json_file}. Error: {e}')

# Function to construct the path to the FFmpeg and FFprobe executables
# ffmpeg must be placed in a folder called bin in the same directory from which the script is run
def get_bin_path(bin_name):
    return os.path.join(os.path.dirname(__file__), 'bin', bin_name)

# Function to clean up metadata strings
def clean_metadata_string(s):
    return s.replace("\"", "")

# Function to parse the .nfo file and extract the required metadata
def extract_info_from_nfo(nfo_path):
    try:
        tree = ET.parse(nfo_path)
        root = tree.getroot()
        return {child.tag: clean_metadata_string(child.text.replace('\n', ' ')) if child.text else '' for child in root}
    except ET.ParseError as e:
        error_logger.error(f'Error parsing {nfo_path}. Skipping metadata extraction. Error: {e}')
        return None

# Function to check if the 'comment' metadata exists in the .mp4 file
# You can change this marker to track something else; it is meant to match the comment written in add_metadata_to_mp4, though your use case may differ.
def check_metadata_comment(file_path):
    command = f'{get_bin_path("ffprobe")} -show_entries format_tags=comment -v quiet -of csv="p=0" "{file_path}"'
    comment_line = subprocess.getoutput(command)
    return comment_line.startswith('Processed by USMT on')

# Function to add metadata to the .mp4 file
def add_metadata_to_mp4(file_path, metadata):
    output_file_path = os.path.splitext(file_path)[0] + '_output.mp4'
    # You can change this marker to track something else; it is meant to match the check in check_metadata_comment, though your use case may differ.
    comment = f'Processed by USMT on {datetime.now().strftime("%Y-%m-%d")}'

    # Ask ffprobe for the audio streams so languages and titles can be normalized
    command = f'{get_bin_path("ffprobe")} -v quiet -print_format json -show_streams -select_streams a "{file_path}"'
    result = subprocess.run(command, stdout=subprocess.PIPE, stderr=subprocess.PIPE, shell=True, text=True, encoding='utf8')
    try:
        audio_info = json.loads(result.stdout)
    except json.JSONDecodeError as e:
        error_logger.error(f'Failed to decode JSON output from ffprobe for {file_path}. Error: {e}')
        return

    language_cmd = ''
    audio_metadata_cmd = ''
    if 'streams' in audio_info:
        for i, track in enumerate(audio_info['streams']):
            lang = track.get('tags', {}).get('language', '')
            channels = track.get('channels', 0)
            if lang == 'und': # und for undefined in ISO 639
                language_cmd += f' -metadata:s:a:{i} language=eng' # eng for English in ISO 639
            if channels == 1:
                error_logger.error(f'Mono audio detected in {file_path}. Skipping.')
                return
            elif channels == 2:
                # I am assuming a value of Stereo here, since that is standardized on ingest for me. You can modify this behavior.
                audio_title = "English Stereo" if lang == 'eng' else "Stereo"
                audio_metadata_cmd += f' -metadata:s:a:{i} title="{audio_title}"'
            elif channels > 2:
                # I am assuming a value of Dolby Digital 5.1 here, since that is standardized on ingest for me. You can modify this behavior.
                audio_metadata_cmd += f' -metadata:s:a:{i} title="Dolby Digital 5.1"'
    else:
        # Logged at ERROR level because error_logger drops anything below ERROR
        error_logger.error(f'No audio streams found in {file_path}.')

    # DANGER WILL ROBINSON Clear the HDR Format field if it exists. I am expressly removing this tag from media files here!
    if 'HDR Format' in metadata:
        metadata['HDR Format'] = ''

    # Check if metadata value is None or contains newline characters and if so, skip adding it or replace newline characters
    metadata_cmd = ' '.join(f'-metadata {k}="{v}"' for k, v in ((k, v.replace("\n", " ")) for k, v in metadata.items()) if v is not None and v != 'None' and '\n' not in v)

    # Set the description (VLC "comments") to the "plot" from metadata, and the encoded_by (VLC "Encoded by") to "Handbrake and ffmpeg" with a timestamp
    description = metadata.get('plot', '')
    encoded_by = f'Handbrake and ffmpeg on {datetime.now().strftime("%Y-%m-%d")}'
    command = f'{get_bin_path("ffmpeg")} -y -i "{file_path}" {metadata_cmd}{language_cmd}{audio_metadata_cmd} -metadata comment="{comment}" -metadata description="{description}" -metadata encoded_by="{encoded_by}" -codec copy "{output_file_path}"'
    print(Fore.CYAN + f'Processing file: {file_path}') # Print the name of the file being processed
    try:
        process = subprocess.Popen(command, stdout=subprocess.PIPE, stderr=subprocess.PIPE, shell=True, encoding='utf8')
        while True:
            output = process.stderr.readline()
            if output == '' and process.poll() is not None:
                break
            if output:
                line = output.strip()
                if 'frame=' in line and 'fps=' in line and 'size=' in line:
                    print(Fore.MAGENTA + line) # Print ffmpeg output
        rc = process.poll()
        if rc != 0:
            raise subprocess.CalledProcessError(rc, command)
        # Only swap the files once ffmpeg has exited cleanly
        os.remove(file_path)
        os.rename(output_file_path, file_path)
        print(Fore.GREEN + f'Successfully processed and added metadata to file: {file_path}') # Print success message
    except subprocess.CalledProcessError as e:
        error_logger.error(f'Failed to add metadata to {file_path}. Error: {e}')
        print(Fore.RED + f'Error: Failed to add metadata to {file_path}. See error.log for more details.') # Print error message to console
    except OSError as e:
        error_logger.error(f'Failed to delete source file or rename output file. Error: {e}')
        print(Fore.RED + f'Error: Failed to delete source file or rename output file. See error.log for more details.') # Print error message to console

def process_directory(directory):
    processed_files = load_json()
    total_files = 0
    processed_count = 0
    for dirpath, dirnames, filenames in os.walk(directory):
        for filename in filenames:
            # I am assuming only .mp4 files here. You would have to widen the parameters below
            if filename.endswith('.mp4'):
                if "trailer" in filename.lower():
                    continue
                total_files += 1 # Count the file for total files
                mp4_file_path = os.path.abspath(os.path.join(dirpath, filename)) # Get the absolute file path
                if mp4_file_path in processed_files:
                    continue
                if check_metadata_comment(mp4_file_path):
                    processed_files[mp4_file_path] = "Skipped"
                    continue
                nfo_file_path = os.path.splitext(mp4_file_path)[0] + '.nfo'
                if os.path.exists(nfo_file_path):
                    metadata = extract_info_from_nfo(nfo_file_path)
                    if metadata is not None:
                        add_metadata_to_mp4(mp4_file_path, metadata)
                        processed_files[mp4_file_path] = "Processed"
                        processed_count += 1 # Increment the processed count
                else:
                    error_logger.error(f'No corresponding .nfo file found for {mp4_file_path}')
                    processed_files[mp4_file_path] = "Failed"
                # Write to json after each file is processed
                write_to_json(processed_files)
                print(Fore.YELLOW + f"Processed: {processed_count}/{total_files}")
    return total_files, processed_count

# Usage
# Define the monitor directory where your files are
while True: # Start an infinite loop
    total_files, processed_files = process_directory("Z:\\movies\\")
    print(Fore.GREEN + f"All files processed: {processed_files}/{total_files}")
    # Print that the script is sleeping for an hour
    print(Fore.BLUE + "Sleeping for 1 hour...")
    # Sleep for 1 hour
    time.sleep(3600)

#################################################
# The call below is never reached while the loop above is running.
# To process a single folder once, use it in place of the loop:
# process_directory("Z:\\movies\\big.buck.bunny.(2008)")
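The script swaps files with `os.remove` followed by `os.rename`, which leaves a short window where only the `_output.mp4` copy exists. A possible alternative, sketched below under the assumption that both files sit on the same volume, is `os.replace`, which overwrites the destination in one call. The function name `swap_in_output` is hypothetical and not part of the script.

```python
import os
import tempfile


def swap_in_output(original_path, output_path):
    """Replace original_path with output_path in a single call.

    Refuses to act if the output file is missing or empty, so a failed
    ffmpeg run cannot wipe out the original.
    """
    if not os.path.isfile(output_path) or os.path.getsize(output_path) == 0:
        raise RuntimeError(f"No usable output file at {output_path}")
    os.replace(output_path, original_path)


if __name__ == "__main__":
    # Demo with throwaway text files standing in for the movie and ffmpeg's output.
    with tempfile.TemporaryDirectory() as tmp:
        original = os.path.join(tmp, "movie.mp4")
        output = os.path.join(tmp, "movie_output.mp4")
        with open(original, "w") as f:
            f.write("old")
        with open(output, "w") as f:
            f.write("new")
        swap_in_output(original, output)
        print(os.listdir(tmp))
```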

# ffmpeg must be placed in a folder called bin in the same directory from which the script is run
# ULTIMATE SOURCE OF MEDIA TRUTH - nickf v2.1
# Copyright (C) 2023 Nick Fusco
#
# This program is free software: you can redistribute it and/or modify
# it under the terms of the GNU General Public License as published by
# the Free Software Foundation, either version 3 of the License, or
# (at your option) any later version.
#
# This program is distributed in the hope that it will be useful,
# but WITHOUT ANY WARRANTY; without even the implied warranty of
# MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the
# GNU General Public License for more details.
#
# You should have received a copy of the GNU General Public License
# along with this program. If not, see <https://www.gnu.org/licenses/>.

import json
import os
import csv
from collections import defaultdict
from termcolor import colored
from tabulate import tabulate

def analyze_data(data):
    file_count = len(data)
    status_count = defaultdict(int)
    for status in data.values():
        status_count[status] += 1
    failed_once_count = status_count["Failed"]
    processed_count = status_count["Processed"]
    failed_files = defaultdict(int)
    for status in data.values():
        if status == "Failed":
            for file, file_status in data.items():
                if file_status == "Failed":
                    failed_files[file] += 1
    failed_multiple_count = sum(count > 1 for count in failed_files.values())
    skipped_count = status_count["Skipped"]
    failed_finished_count = sum(1 for file, count in failed_files.items() if count > 1 and data[file] == "Processed")
    total_count = file_count + failed_once_count + failed_multiple_count + skipped_count
    success_count = processed_count + failed_finished_count
    success_percentage = (success_count / total_count) * 100
    failed_once_percentage = (failed_once_count / total_count) * 100
    failed_multiple_percentage = (failed_multiple_count / total_count) * 100
    skipped_percentage = (skipped_count / total_count) * 100
    table = [
        [colored("Total Files Processed", "cyan"), file_count],
        [colored("Failed Files (Once)", "red"), failed_once_count],
        [colored("Failed Files (Multiple)", "red"), failed_multiple_count],
        [colored("Finished Files (Previously Failed)", "green"), failed_finished_count],
        [colored("Processed Files", "green"), processed_count],
        [colored("Skipped Files", "yellow"), skipped_count],
        [colored("Success Percentage", "cyan"), f"{success_percentage:.2f}%"],
        [colored("Failed Once Percentage", "red"), f"{failed_once_percentage:.2f}%"],
        [colored("Failed Multiple Percentage", "red"), f"{failed_multiple_percentage:.2f}%"],
        [colored("Skipped Percentage", "yellow"), f"{skipped_percentage:.2f}%"]
    ]
    headers = ["Metric", "Value"]
    print(colored("Data Analysis Report", "cyan"))
    print(tabulate(table, headers, tablefmt="fancy_grid"))

    # Write failed multiple files to a CSV
    csv_file_path = os.path.join(os.path.dirname(os.path.abspath(__file__)), "failed_multiple_files.csv")
    with open(csv_file_path, "w", newline="") as csv_file:
        writer = csv.writer(csv_file)
        # Each row holds the file's path (the JSON key) and its recorded status
        writer.writerow(["File Path", "Status"])
        for file, count in failed_files.items():
            if count > 1:
                writer.writerow([file, data[file]])
    print(f"\nList of Files Failed Multiple Times saved to {csv_file_path}")
    input("Press Enter to exit...") # Wait for user input

# Get the path of the script's directory
script_dir = os.path.dirname(os.path.abspath(__file__))

# Construct the absolute path of the JSON file
json_file_path = os.path.join(script_dir, "processed_files.json")

# Load the JSON data from the file
with open(json_file_path) as file:
    data = json.load(file)

analyze_data(data)
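Since processed_files.json stores exactly one status per file path, a simpler tally is possible. The sketch below is not the report script above, just an assumed alternative: it counts each status once with `collections.Counter` and guards against an empty file, where the percentages would otherwise divide by zero. The function name and sample data are made up.

```python
from collections import Counter


def summarize(data):
    """Return {status: (count, percent)} for the three statuses the script writes."""
    counts = Counter(data.values())
    total = len(data)
    summary = {}
    for status in ("Processed", "Skipped", "Failed"):
        n = counts.get(status, 0)
        pct = (n / total * 100) if total else 0.0  # avoid dividing by zero
        summary[status] = (n, round(pct, 2))
    return summary


if __name__ == "__main__":
    # Hypothetical contents of processed_files.json
    sample = {
        "Z:\\movies\\a\\a.mp4": "Processed",
        "Z:\\movies\\b\\b.mp4": "Processed",
        "Z:\\movies\\c\\c.mp4": "Failed",
        "Z:\\movies\\d\\d.mp4": "Skipped",
    }
    for status, (n, pct) in summarize(sample).items():
        print(f"{status}: {n} ({pct}%)")
```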