Recent is ordered by frequency of use and All is ordered by application name, alphabetically.

You can apparently do things with application directories as seen in /usr/share/desktop-directories/. Probably need to read up on the XDG specs.
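For reference, each file in /usr/share/desktop-directories/ is a small Desktop Entry file with `Type=Directory` that gives a menu category its display name and icon. Which applications end up in that category is decided separately, by the `.menu` files described in the XDG Menu Specification. Here is a minimal sketch of a per-user one; the file name and values are hypothetical:

```
# ~/.local/share/desktop-directories/my-tools.directory (hypothetical)
[Desktop Entry]
Type=Directory
Name=My Tools
Icon=applications-utilities
```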

This seems to be working so far. Thanks!

I’m trying to get more throughput out of my NFS server. Right now I can transfer about 1 GB per minute, and some of my file transfers are hundreds of GB in size.

I’m using CentOS 7 as the NFS server. It’s a VM, and I have moved it to faster datastores, which helped a bit, but not nearly enough.

At this point I’m thinking about teaming NICs to see if that increases throughput. I mount the NFS share with the “defaults” option plus NFSv4.

Can anyone think of some things to check before assuming I need more NICs?

What kind of workload is the NFS traffic? Large sequential, small random or mixed?

I’m not sure what sequential/mixed means technically in this context, but the case I’m trying to solve is transferring single files of 30 GB to 700 GB. I’m assuming that counts as sequential.

Also, it’s already compressed, so I can’t just compress the data before the transfer.

I noticed this during the transfer using:

```
watch -n1 ls -l   # run from the exported directory on the NFS server
```

The only file in that directory is the one being uploaded.

The “total” field is sometimes 3-15 GB “ahead” of the file size.

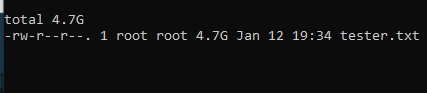

In this image you can see the total field and the file size. They are equal here because I added “no_wdelay” to try to create synchronous writes. However, before adding this there was a significant gap between “total” and filesize.

I’m assuming there is some degree of windowing going on, where the total shows the amount loaded into memory and the file size is what has actually been written to disk. When the file size catches up to the total, the total jumps up again and the file size slowly climbs.

This led me to think there was an issue with memory-to-disk writes on the server. However, I can write a 30 GB file locally on the NFS server to that directory with dd in about 7 minutes, so I’m not certain that is the issue. But given the windowing, maybe that is actually slow.
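Putting the two rates from this post side by side makes the gap easier to see. This is a minimal shell sketch that uses awk for the floating-point math. It assumes decimal units (1 GB = 1000 MB) and takes “about 1 GB per minute” and “30 GB in about 7 minutes” at face value. The helper names are made up for illustration:

```shell
#!/usr/bin/env bash
# Convert "<gigabytes> transferred in <seconds>" into throughput figures.
# Decimal units assumed: 1 GB = 1000 MB, 1 byte = 8 bits.
rate_mb_s() {     # usage: rate_mb_s <gigabytes> <seconds>   -> MB/s
  awk -v gb="$1" -v s="$2" 'BEGIN { printf "%.1f\n", gb * 1000 / s }'
}
rate_mbit_s() {   # usage: rate_mbit_s <gigabytes> <seconds> -> Mbit/s
  awk -v gb="$1" -v s="$2" 'BEGIN { printf "%.0f\n", gb * 8000 / s }'
}

# Figures quoted in the thread.
echo "NFS transfer (1 GB / 1 min):  $(rate_mb_s 1 60) MB/s, $(rate_mbit_s 1 60) Mbit/s"
echo "Local dd     (30 GB / 7 min): $(rate_mb_s 30 420) MB/s, $(rate_mbit_s 30 420) Mbit/s"
```

This works out to roughly 17 MB/s over NFS versus about 71 MB/s for the local dd write. A 10GbE link (confirmed later in the thread) can carry on the order of 1250 MB/s before protocol overhead, so at these rates the wire itself does not appear to be close to saturated.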

Using:

```
free -h   # on the NFS server
```

I noticed the “free” memory being consumed during the transfer while buffer/cache grew, until free memory was down to about 100 MB. At that point I added more memory, and further tests showed it shortened the transfer time. This may be a coincidence, but my guess is that adding more will have diminishing returns, especially as the transferred files get larger.

The next thing I did was create an NFS server on a datastore with better performance. This also cut down on the time. It’s possible that increasing RAM on this machine might cut the time down further.

To me the equation for this operation looks something like this:

totalTime = processor{client} + RAM{client} + (numberOfNetworkTransfers * networkLatency) + processor{server} + RAM{server} + disk{server}

The numberOfNetworkTransfers term could be cut down by increasing the amount of data sent in each transfer. I can’t reduce the RAM-to-disk write time any further, so I’m assuming I need to increase transfer speed or reduce network latency. That’s why I’m thinking about NIC teaming.
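The numberOfNetworkTransfers term can be roughly estimated as the file size divided by the NFS write size (`wsize`). Here is a rough sketch that assumes every WRITE carries a full `wsize` payload and ignores COMMITs and metadata operations. `write_rpcs` is a hypothetical helper name:

```shell
#!/usr/bin/env bash
# Rough count of NFS WRITE RPCs for one file, assuming every RPC carries a
# full wsize payload (ignores COMMIT, GETATTR and other metadata traffic).
write_rpcs() {   # usage: write_rpcs <file_bytes> <wsize_bytes>
  local bytes="$1" wsize="$2"
  echo $(( (bytes + wsize - 1) / wsize ))   # ceiling division
}

GiB=$(( 1024 * 1024 * 1024 ))
for wsize in 65536 1048576; do
  echo "30 GiB file, wsize=$wsize: $(write_rpcs $(( 30 * GiB )) "$wsize") WRITE RPCs"
done
```

For a 30 GiB file, this gives 491520 WRITEs at 64 KiB versus 30720 at 1 MiB. Keep in mind that the Linux NFS client keeps multiple RPCs in flight at once. Treating the latency term in the equation above as purely serial therefore overstates its cost.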

But, I could be missing something.

What’s the storage stack? Md/lvm/ext4?

Is this 10GBE?

Try jumbo frames and NFSv3 with tcp,async,rsize=65536,wsize=65536 (on the client).
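Written as an /etc/fstab line on the client, that suggestion might look like the following. The read and write sizes are separate `rsize` and `wsize` options. `nfs-server`, `/export/share`, and `/mnt/share` are placeholders. The options themselves are standard ones documented in nfs(5) and mount(8):

```
# /etc/fstab on the client (placeholder server, export path, and mount point)
nfs-server:/export/share  /mnt/share  nfs  vers=3,proto=tcp,rsize=65536,wsize=65536,async  0  0
```

After remounting, run `nfsstat -m` on the client to list the options actually in effect. This is worth checking because the server can negotiate rsize/wsize down.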

Yeah, 10GbE. Client is Btrfs, server is XFS.

We should be able to use jumbo frames on the network, but don’t I have to change the MTU on the client and server NICs to handle that?

Yes, set client and server to 9000. Switches usually count the header too, so theirs is 9200-ish. Just set it as high as it will go; on the switch it’s only the maximum it will tolerate, since the switch isn’t generating traffic itself. I’d recommend a dedicated VLAN, though, to avoid mixing MTUs. I assume this is a dedicated physical interface separate from the default gateway (on both server and client)?
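One way to confirm that 9000 actually works end to end is a ping sized to fill the MTU with fragmentation prohibited. The path includes the client NIC, any virtual switch, the physical switch, and the server NIC. Below is a minimal sketch. `check_jumbo_path` and `icmp_payload_for_mtu` are hypothetical helper names. The `-M do` flag is Linux iputils ping syntax, and other ping implementations differ:

```shell
#!/usr/bin/env bash
# ICMP payload size that exactly fills a given MTU:
# MTU - 20 bytes (IPv4 header, no options) - 8 bytes (ICMP echo header).
icmp_payload_for_mtu() {   # usage: icmp_payload_for_mtu <mtu>
  echo $(( $1 - 28 ))
}

# Ping <host> with full-MTU packets and the Don't Fragment bit set
# (Linux iputils syntax: -M do). If any hop on the path has a smaller MTU,
# these pings fail or time out instead of being fragmented.
check_jumbo_path() {       # usage: check_jumbo_path <host> [mtu]
  local host="$1" mtu="${2:-9000}"
  ping -c 3 -M do -s "$(icmp_payload_for_mtu "$mtu")" "$host"
}
```

For example, run `check_jumbo_path nfs-server` from the client (the hostname is a placeholder). If it fails while `check_jumbo_path nfs-server 1500` succeeds, something between the two machines is still at the default MTU.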

Client and Server have single NIC. Switch is set to 9000.


So there’s only a single NIC on each machine. And just a heads-up: these are VMs.

Ah, OK. Go ahead and set client and server to 9000. It will create some load somewhere on your network when normal traffic hits a standard MTU, but you can figure out whether that’s a reasonable compromise or whether it matters at all.

Thanks for the advice, I’m going to run these tests in the morning. I’ll reply to let you know how they turn out.

Thanks @oO.o


That helps tremendously. The main difficulty is that I have a really old /home directory with a ton of obsolete icons, and organizing it is a nightmare.

I’ve found that like iOS, GNOME lets you drop one icon on top of another to create a “group”, for lack of a better word. That helps too…

Does anyone know off-hand how portable the binaries produced by tic are? Wondering if I can dump them into my dot file repo or if they need to be compiled for each machine.

Not a big deal either way, I just want to have italics inside of tmux/vim and it requires adding some things to the screen-256color terminfo.

Shadowbane back with another Linux problem. I’m trying to SSH into an Ubuntu Server virtual machine from my Kubuntu 20.04.1 desktop. I’m setting up Pi-hole, and I’m stuck because I can’t log into the Ubuntu Server VM over SSH. I did install the SSH server on Ubuntu Server. Does anyone have any ideas why the Ubuntu Server is refusing the connection from my Kubuntu desktop?

VirtualBox: Kernel driver not installed (rc=-1908)

It gets me every time. I have removed and reinstalled the DKMS packages and booted into a 5.8 kernel, to no avail.

How do I fix this without rebooting?


I’m not certain but I don’t believe the format has changed in ages. However, I wouldn’t be too surprised if there were problems with endian order, or word sizes.

man 5 terminfo has this:

It is not wise to count on portability of binary terminfo entries between commercial UNIX versions. The problem is that there are at least two versions of terminfo (under HP-UX and AIX) which diverged from System V terminfo after SVr1, and have added extension capabilities to the string table that (in the binary format) collide with System V and XSI Curses extensions.

So it seems that they only worried about differences between vendors, not individual OS versions.

Yeah. I’d like this to be compatible between macOS and Linux so I think I’ll play it safe and compile it per machine. Might just put it in the zshrc and compile it if it’s missing.
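Compiling only when missing could be sketched as a small function in ~/.zshrc. This is a minimal sketch, and `ensure_terminfo` is a hypothetical name. It relies on `infocmp` exiting non-zero when it cannot find an entry, and on ncurses searching ~/.terminfo by default:

```shell
# Hypothetical ~/.zshrc helper: compile a terminfo entry from source into
# ~/.terminfo only if this machine can't already find an entry by that name.
ensure_terminfo() {   # usage: ensure_terminfo <entry-name> <source-file>
  if ! infocmp "$1" >/dev/null 2>&1; then
    tic -o "$HOME/.terminfo" "$2"
  fi
}
```

For example, you could call `ensure_terminfo screen-256color-italic ~/dotfiles/screen-256color-italic.terminfo`, where both the entry name and the path are made up for illustration. Giving the modified entry its own name matters here. Otherwise `infocmp screen-256color` would succeed against the stock system entry, and `tic` would never run.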

I assume it’s running? Check the firewall?

I think I found the answer to my problem. The Ubuntu Server install sets up keys by default, and of course I don’t have a key. I’m going to try an earlier version of Ubuntu Server to see if that works.

As far as I know, you can’t fix it. It would help if you mentioned which version of Linux you are using. I have found VirtualBox’s manual very helpful. Here is the link to VirtualBox’s user manual