Hey everyone!

I’ve recently bought some second-hand components in order to build a new workstation for myself.

I’ve been running into seemingly random crashes.

When it happens, the entire host OS hangs and all the fans ramp up.

I’m at the end of my troubleshooting knowledge, as I can find absolutely no diagnostic logs or errors.

SPECS:

- Windows Hyper-V Server (Core) 2019 (10.0.17763 N/A Build 17763)

- EPYC 7532

- Supermicro H12SSL-i (Rev1.02)

- 7x 32GB DDR4 2133 ECC Samsung (2Rx4 PC4-2133P-RA0-10-DC0)

- 1x 32GB DDR4 2133 ECC Samsung (2Rx4 PC4-2133P-RA0-10-MC0) (a bit annoying, but from what I’ve read the last code is a revision number?)

- Crucial P5 Plus 2TB

- Corsair RM1000e

- LSI SAS9211-8i flashed IT mode

This machine is running Hyper-V to virtualize and consolidate some machines into a single box.

Hyper-V is installed across the whole SSD, and the VM disks are stored on the same SSD.

Initially, I could barely get Hyper-V (or any other Windows OS) installed at all without hitting the crash described above.

With some BIOS adjustments I’ve managed to get it relatively stable: the OS is installed, and it boots and runs a couple of VMs fairly reliably.

Video: BIOS Settings on the H12SSL-i

I’m not across exactly what each of these does, but googling similar issues led me to change:

- NUMA nodes per socket (NPS) - 4

- Every “C-State”-related setting - Disabled

- Local APIC Mode - x2APIC

- NVMe / M.2 Firmware Source - AMI Native Support

- Watchdog - Disabled

Hyper-V currently has the latest SP3_IO, SP3_PSHED, ASPEED and Broadcom drivers from the Supermicro website.

Current VMs:

- TrueNAS Core: 32768MB / 12 processors, with the HBA passed through via DDA (Discrete Device Assignment)

- W11Pro: 65536MB / 32 processors

- WServer2022: 8096MB / 8 processors
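For reference, here is a rough sketch of how those VM allocations line up against the host. It assumes the NUMA setting of 4 corresponds to four NUMA nodes per socket (NPS4), and that this splits the EPYC 7532’s 32 cores / 64 threads and the 8 DIMMs evenly, giving nodes of 16 logical processors and 64GB each. It ignores the memory Hyper-V reserves for the host, so real per-node limits will be a little lower.

```python
import math
from dataclasses import dataclass

# Host figures from the spec list. The even NPS4 split is an assumption:
# 4 NUMA nodes, each getting a quarter of the threads and of the DIMMs.
HOST_LOGICAL_PROCESSORS = 64      # EPYC 7532: 32 cores with SMT enabled
HOST_MEMORY_MB = 8 * 32 * 1024    # 8x 32GB DIMMs
NUMA_NODES = 4                    # assumed NPS4

NODE_LPS = HOST_LOGICAL_PROCESSORS // NUMA_NODES
NODE_MEMORY_MB = HOST_MEMORY_MB // NUMA_NODES


@dataclass
class VM:
    name: str
    memory_mb: int
    vcpus: int


# VM sizes as listed above (8096MB is the figure as given).
VMS = [
    VM("TrueNAS Core", 32768, 12),
    VM("W11Pro", 65536, 32),
    VM("WServer2022", 8096, 8),
]


def nodes_spanned(vm: VM) -> int:
    """Minimum host NUMA nodes the VM needs, whichever of CPU or memory is larger."""
    by_cpu = math.ceil(vm.vcpus / NODE_LPS)
    by_mem = math.ceil(vm.memory_mb / NODE_MEMORY_MB)
    return max(by_cpu, by_mem)


def summary(vms: list[VM]) -> dict:
    """Total VM memory and vCPUs, plus the node count for each VM."""
    return {
        "total_vm_memory_mb": sum(vm.memory_mb for vm in vms),
        "total_vcpus": sum(vm.vcpus for vm in vms),
        "nodes_spanned": {vm.name: nodes_spanned(vm) for vm in vms},
    }


if __name__ == "__main__":
    s = summary(VMS)
    print(f"Per node: {NODE_LPS} LPs, {NODE_MEMORY_MB} MB")
    print(f"VM memory: {s['total_vm_memory_mb']} / {HOST_MEMORY_MB} MB")
    print(f"vCPUs: {s['total_vcpus']} / {HOST_LOGICAL_PROCESSORS} LPs")
    for name, n in s["nodes_spanned"].items():
        print(f"{name}: spans at least {n} NUMA node(s)")
```

Under those assumptions the VMs use 106400MB of 262144MB and 52 of 64 logical processors, and only the 32-processor W11 VM has to span more than one NUMA node.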

I’ve experienced the crashes even without the HBA installed or TrueNAS set up at all.

I’ve tried installing Hyper-V on a SATA SSD instead of the M.2 drive, with the same results.

I’ve tried disabling the onboard VGA, as I read that Windows doesn’t always play nicely with the ASPEED controller.

The CPU has been reseated, and torqued correctly.

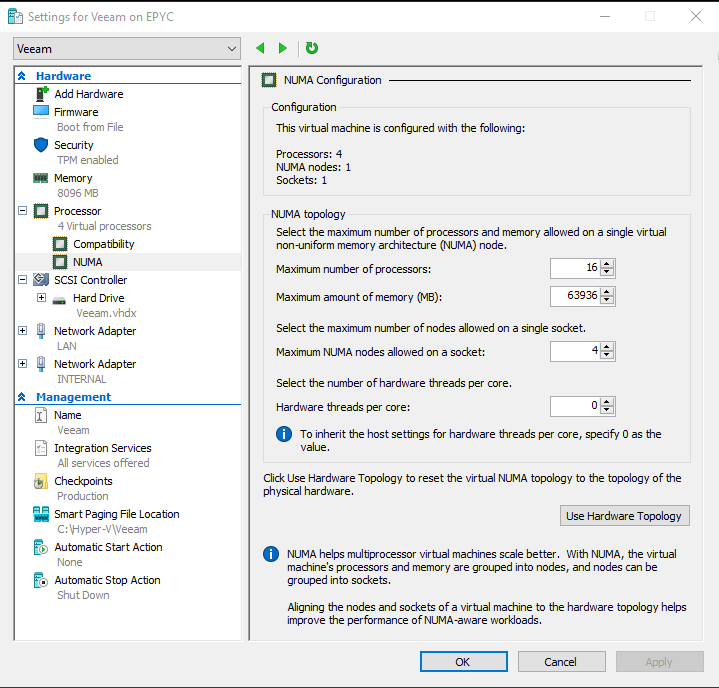

Based on my extensive googling, I also updated the NUMA configuration of every VM’s processors to match the hardware topology (using the “Use Hardware Topology” button).

After the BIOS changes I thought I was in the clear, and had a few days of uptime, but sadly I wasn’t that lucky.

TrueNAS and W11 were running at the time, and I was in the WServer2022 installer.

Everything hung (Remote Desktop sessions, Hyper-V Manager) and the fans ramped up.

I had another crash today, with the W11 VM and an identical-spec copy of it running at the same time.

During these crashes, the BMC stays online, but all the sensor data goes blank.

Watching the BMC as it crashes, all temperatures and voltages are in the green, mostly in the mid-40s °C.

I’ve tried capturing a couple of “System Crash Dumps” through the BMC, but they don’t seem to contain much useful information.

After Hyper-V comes back online, Windows Event Viewer is next to useless; it only states that the previous shutdown was unexpected!

I would really appreciate your expertise and any tips on how to troubleshoot this further, or hopefully there’s a glaringly obvious setting/config I’ve stuffed up!

Thank you for your time

Cheers,