Hi Guys,

Long story short: I got a brand-new Supermicro H13SAE-MF board last week, plugged everything in, and it booted right up. Memtest and the stress test passed, so I went to check on my two Samsung PM9A3 U.2 3.84TB drives, but they did not show up.

I tried changing the lane settings for bifurcation support in the BIOS, but that didn't help.

I called my technician at the distributor, and he told me it's blocked by AMD because this is a consumer platform.

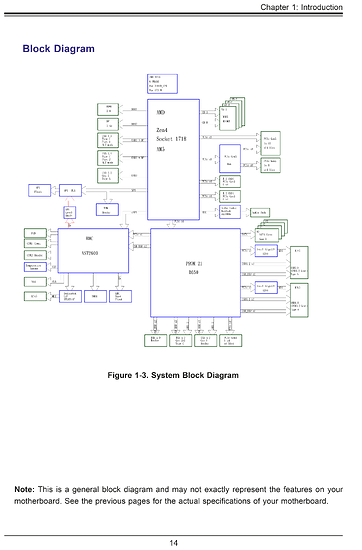

I talked to Supermicro, and they were like “lol, rtfm, fk newbs, k, bye” and sent me a schematic from their manual. Turns out they mixed some lanes with a PCIe switch, and the board doesn't support 4x/4x/4x/4x bifurcation.

//Clarification from me: they are not using a PCIe switch, they are muxing!

My bad. So I changed plans: I installed two Samsung 980 Pro 1TB drives in the M.2 slots for booting the OS, ditched my 4x bifurcation card, and bought two breakout cards (10Gtek) that adapt from PCIe x4 to SFF-8643, which I then connected to my SSDs.

The SSDs are now connected to the PCIe 5.0 slots that are routed directly to the CPU; the one x4 slot from the chipset is now occupied by my X520 network card.

Now the weird part: the SSDs randomly show up or are gone, and I can't figure out why.

I tried stress-testing the connection with benchmark tools and had no problems.

Both disks were detected at some point and showed the correct link (PCIe 4.0 x4).

So I swapped cables (two different manufacturers) but to no avail, the problem stayed.

So I switched the PCIe Slots, nothing changed.

I tested the disks with the 4x bifurcation card on my previous ASRock Rack board (B650) and had zero problems.

What changed from then to now?

I swapped out the ASRock Rack board for a Supermicro H13SAE-MF, switched the base OS from Win 10 (for testing) to Windows Server 2022 (for production use), and replaced the 10G 4x bifurcation card with single breakout cards.

Has anyone experienced similar issues? IPMI and BIOS are already updated, Windows is patched, and all drivers (Win 11 base) from the AMD site were installed successfully.

When available, both disks (for software RAID 1) show good R/W speeds and don't have any problems. But after a reboot or some other random event they seem to time out and don't come back until the next reboot.
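For anyone who wants to pin down when exactly a drive drops, here is a rough sketch of a watcher that could run on the server and log every change in the number of visible drives. It is only an illustration, not something I have validated on this board. It assumes PowerShell's `Get-PhysicalDisk` cmdlet is available (it ships with Windows Server 2022) and that the drives can be recognised by a substring of their `FriendlyName`. The pattern `YOUR-DRIVE-MODEL`, the log file name, and the function names are all placeholders I made up; the pattern has to be replaced with whatever model string Windows actually reports for the drives.

```python
import json
import subprocess
import time
from datetime import datetime

# Placeholder (assumption): replace with a substring of the model name
# exactly as Windows reports it in the FriendlyName column.
DRIVE_PATTERN = "YOUR-DRIVE-MODEL"
EXPECTED_COUNT = 2  # two U.2 drives in this setup

# Get-PhysicalDisk, Select-Object and ConvertTo-Json are standard cmdlets.
PS_COMMAND = (
    "Get-PhysicalDisk | Select-Object FriendlyName, SerialNumber | ConvertTo-Json"
)


def parse_disks(json_text):
    """Turn ConvertTo-Json output into a list of dicts.

    ConvertTo-Json emits a single object (not an array) when only one
    disk is present, and nothing at all when there are none.
    """
    text = json_text.strip()
    if not text:
        return []
    data = json.loads(text)
    return [data] if isinstance(data, dict) else list(data)


def count_matching(disks, pattern=DRIVE_PATTERN):
    """Count disks whose FriendlyName contains the pattern (case-insensitive)."""
    needle = pattern.lower()
    return sum(
        1 for d in disks if needle in str(d.get("FriendlyName") or "").lower()
    )


def query_disks():
    """Ask Windows for the current list of physical disks."""
    result = subprocess.run(
        ["powershell", "-NoProfile", "-Command", PS_COMMAND],
        capture_output=True,
        text=True,
        check=True,
    )
    return parse_disks(result.stdout)


def watch(interval_s=30, log_path="nvme_watch.log"):
    """Poll forever and append a log line whenever the visible count changes."""
    last_seen = None
    while True:
        seen = count_matching(query_disks())
        if seen != last_seen:
            stamp = datetime.now().isoformat(timespec="seconds")
            with open(log_path, "a", encoding="utf-8") as log:
                log.write(f"{stamp} {seen}/{EXPECTED_COUNT} expected drives visible\n")
            last_seen = seen
        time.sleep(interval_s)
```

Calling `watch()` from a scheduled task at startup would give timestamps that can be lined up against the Windows event log, which might help tell a drive that never enumerated at boot from one that dropped later.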

Thanks for reading.