Let me start by saying I am a potato and my understanding of things may be wrong, so I apologize for that, but I’m doing my best here.

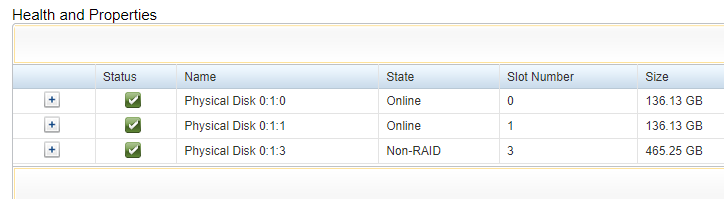

I am running Ubuntu 22.04 on a Dell PowerEdge R620. The OS runs off an ESXi-style dual SD card add-in board thingie. The server also currently has three disks: two 136 GB HDDs in a RAID 1 array, presented as a single virtual disk, and a 500 GB HDD. These show up as, and are mounted at boot as, /media/<username>/RAID and /media/<username>/DATA. This happens automatically; I haven’t edited /etc/fstab or anything else, apart from adding my SMB share from my NAS.

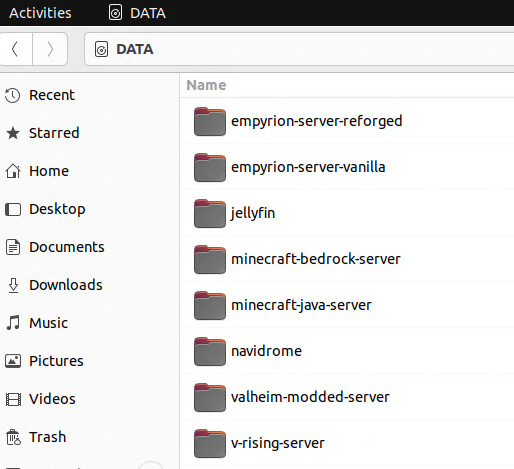

Among other things, I am running a number of game servers on Docker, managed with Portainer, and these are configured to use bind mounts to dirs on the DATA disk. (I know volumes are better, but since I needed to add a lot of files and want to do backups and such, bind mounts seemed easier.)

Additionally, because the SD cards the OS runs on are fairly small, I configured Docker to use the RAID virtual disk as its storage location for things like volumes and images, via a /etc/docker/daemon.json file containing { "data-root": "/media/<username>/RAID/docker" }.

Things were going okay, but gradually the system slowed down, especially Docker, and Portainer got really, really slow. It would hang, I’d get weird errors, containers would hang and refuse to start or stop and couldn’t even be killed, and I struggled to even get the Docker service to restart.

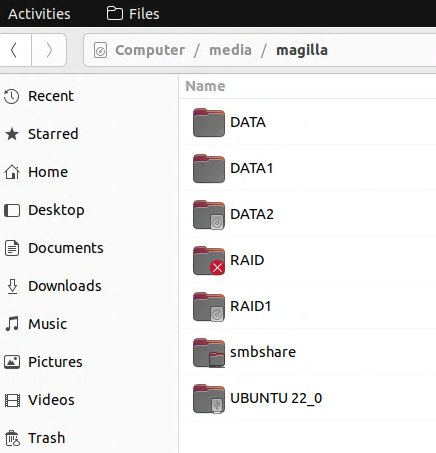

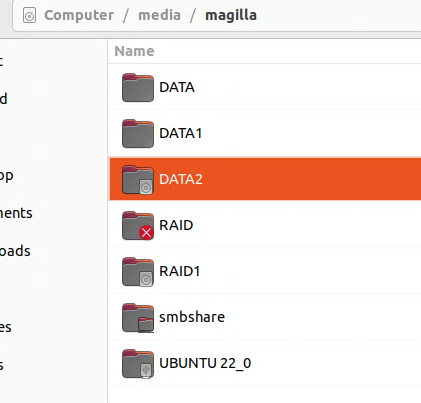

But the main issue was that after a reboot I would see apparent duplicates of the RAID and DATA disks in the /media/<username> folder, e.g. RAID and RAID1, DATA and DATA1, and so on. These folders sometimes appeared to contain a copy of at least some of the files, but the game servers would be running fresh worlds and such.

However, if I click into one of the numbered copies, it shows up as the original, unnumbered disk.

Gradually, I realized (or at least came to suspect) that these issues are all related, and that the root cause is that the disks are not yet mounted when the containers in question start. The disks do take a few minutes to become available: it’s an older machine with older disks, they’re spinning rust, they’re in RAID, etc. But since the containers use bind mounts, new dirs called RAID or DATA get created, and then when the disks mount, they get pushed to RAID1 or DATA1 (or even DATA2 sometimes). This has all kinds of bad knock-on effects.

So I need Docker not to start until the disks are mounted. I looked online and saw that you can run sudo systemctl edit docker.service and add something like:

    [Unit]
    Requires=fs.target
    After=local-fs.target

But will fs.target work? Do I need to use something else instead, or use this plus something else? I ask because the filesystem on the SD card is often ready and usable well before the disks are mounted and available. I’ve found analogues online (the same issue with an external HDD, a USB drive, or an SMB share) but nothing exactly like my situation, and I don’t feel I have a good enough grasp of what’s going on to make changes without fully understanding them.
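For reference, sudo systemctl edit docker.service saves whatever you type as a drop-in file (normally /etc/systemd/system/docker.service.d/override.conf) that is merged into the stock unit. A sketch of what the snippet above was probably meant to look like, assuming "fs.target" is a typo for local-fs.target (as far as I know, systemd ships local-fs.target and remote-fs.target but no plain fs.target):

```ini
# Drop-in for docker.service, as created by "sudo systemctl edit docker.service".
# Assumption: "fs.target" in the snippet found online was meant to be local-fs.target.
[Unit]
# Pull in local-fs.target and only start Docker once it has been reached.
Requires=local-fs.target
After=local-fs.target
```

One caveat, if I understand it right: local-fs.target only covers filesystems that systemd itself manages (for example, entries in /etc/fstab), and ordinary services are already ordered after it by default. Disks that get automounted under /media/<username> are not part of it, which would fit the observation that the SD card is ready long before the disks.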

I also saw that you could add a dependency on the specific mount unit listed by systemctl list-unit-files, but I searched through that list and nothing jumped out.
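The mount units in that list are named after their mount path (roughly, slashes become dashes, so /media/<username>/DATA would be media-<username>-DATA.mount), and as far as I know they only exist for mounts systemd manages, such as /etc/fstab entries or hand-written .mount files. If, as the /media/<username> paths suggest, the disks are currently mounted by the desktop’s automounter, they generally won’t appear there, which may be why nothing jumped out. A sketch using the RequiresMountsFor= directive, assuming the two disks were given their own /etc/fstab entries at these same paths:

```ini
# Hypothetical drop-in for docker.service (via "sudo systemctl edit docker.service").
# Assumes /media/<username>/RAID and /media/<username>/DATA are defined in /etc/fstab,
# so that systemd creates mount units for them. Replace <username> with the real name.
[Unit]
# Adds Requires= and After= on the mount units needed to access these paths:
# RAID holds Docker's data-root, DATA holds the bind-mounted game server files.
RequiresMountsFor=/media/<username>/RAID /media/<username>/DATA
```

The appeal of this over a fixed target is that it names exactly the paths Docker depends on. Afterwards, systemctl cat docker.service should show the drop-in alongside the stock unit.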

One final solution I saw suggested was:

    [Service]
    ExecStartPre=/bin/sleep 10

However, this seems kind of janky and could be unreliable. I guess I could just set it to 30 or 60 seconds or something and live with it, but I’d rather have a more intentional solution.
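A less timing-dependent variant of the sleep idea would be to poll until the paths are actually mount points, using the mountpoint tool from util-linux (present on a stock Ubuntu install). A sketch, assuming the same two paths; the 2-second poll interval is an arbitrary choice:

```ini
# Hypothetical drop-in for docker.service: wait until both disks are really mounted.
# Replace <username> with the real name; inside sh, "<" and ">" would otherwise be
# parsed as redirections.
[Service]
# Loop until both paths are mount points ("mountpoint -q" exits 0 only for a mount point).
ExecStartPre=/bin/sh -c 'until mountpoint -q /media/<username>/RAID && mountpoint -q /media/<username>/DATA; do sleep 2; done'
```

Unlike a fixed sleep, this waits exactly as long as needed, but it has its own trade-off: depending on the unit’s TimeoutStartSec= setting (visible via systemctl cat docker.service), a slow mount could cut the wait short, or, if the timeout is disabled, Docker could wait indefinitely if a disk never shows up.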

I’m also wondering whether using volumes would fix this, but when I tried, I couldn’t get it working in a way that let me easily copy files (in some cases, A LOT of them) into or out of the volume, which is basically required to use an existing world, or mods, with most game servers. Also, since the volume would be on RAID, I think it might just cause the same issue.

Any suggestions are welcome. Pls remember I am classified as a meat popsicle and don’t roast me too hard.

Thanks!