So I installed a Mellanox 10G network card in my Proxmox server, and it shows up as installed. I then switched my bridge over to the 10G NIC. I can access the server and transfer files between machines, but still only at 115 MB/s max.

Here's the info I've pulled so far:

```
# lspci -nn | grep Ethernet
01:00.0 Ethernet controller: Mellanox Technologies MT27500 Family [ConnectX-3]

# ip addr
4: enp1s0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq master vmbr0 state UP group default qlen 1000
    link/ether f4:52:14:55:5f:70 brd ff:ff:ff:ff:ff:ff

# cat /sys/class/net/enp1s0/speed
10000
```

If I'm reading the output correctly, the `cat` command shows it's operating at 10G (10000). However, `ip addr` shows 1000?

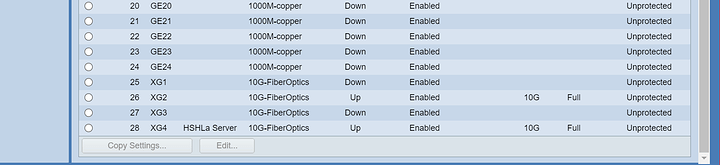

I checked the switch side, and the Cisco router is showing a full 10G connection (I already had fun searching for how to take it out of “Stacking Mode” and into standalone mode to open up the 10G ports, lol). The XG28 port is shown below.

Do I have to manually tell Debian/Proxmox to use 10,000 vs 1,000 speed? If so, where would I do this?

Any help appreciated.
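A note on the two numbers: in the `ip addr` line, `qlen 1000` is the interface's transmit queue length (in packets), not its link speed. `ip addr` doesn't report the negotiated speed at all, whereas `/sys/class/net/enp1s0/speed` does, in Mb/s. Below is a minimal sketch that prints both side by side from sysfs, assuming a Linux host. `link_info` and the `SYSFS_NET` override are hypothetical names; the override exists only so the function can be pointed at a test directory.

```shell
#!/usr/bin/env bash
# link_info (hypothetical helper): print the negotiated speed (Mb/s), duplex,
# MTU and transmit queue length (what `ip addr` shows as "qlen") of an
# interface, read straight from sysfs.
# SYSFS_NET is a hypothetical override; it defaults to the real /sys/class/net.
link_info() {
  local iface="$1"
  local base="${SYSFS_NET:-/sys/class/net}/$iface"
  local attr
  for attr in speed duplex mtu tx_queue_len; do
    # Some drivers return an error for "speed" when the link is down;
    # print "n/a" for any attribute that can't be read instead of aborting.
    printf '%s=%s\n' "$attr" "$(cat "$base/$attr" 2>/dev/null || echo n/a)"
  done
}
```

On this box, `link_info enp1s0` would print `speed=10000` (the same file the `cat` above read) alongside `tx_queue_len=1000`, the `qlen` from `ip addr`. `ethtool enp1s0` reports the same speed as `Speed: 10000Mb/s`.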

115 MB/s is about the speed of a single hard drive.

Can you run iperf across the link to verify that your storage isn't what's slow?
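For context on why iperf is the right next check: 115 MB/s is also right at the practical ceiling of gigabit Ethernet, so a file copy alone can't distinguish a slow array from a link that is effectively running at 1G. The conversion is just megabytes × 8 bits ÷ 1000. The sketch below assumes decimal MB/s and ignores protocol overhead; `mbs_to_gbits` and `link_pct` are hypothetical helper names.

```shell
#!/usr/bin/env bash
# mbs_to_gbits (hypothetical): convert a transfer rate in MB/s (decimal
# megabytes) to Gbit/s: MB/s * 8 bits per byte / 1000 Mbit per Gbit.
mbs_to_gbits() {
  awk -v mbs="$1" 'BEGIN { printf "%.2f\n", mbs * 8 / 1000 }'
}

# link_pct (hypothetical): rounded percentage of a link's nominal rate
# (given in Gbit/s) that a transfer rate in MB/s would occupy.
# Protocol overhead is ignored.
link_pct() {
  awk -v mbs="$1" -v gbit="$2" 'BEGIN { printf "%.0f\n", mbs * 8 / 1000 / gbit * 100 }'
}
```

For example, `mbs_to_gbits 115` prints `0.92`. `link_pct 115 1` prints `92`, while `link_pct 115 10` prints `9`: the observed rate fills most of a 1G link but under a tenth of a 10G one.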

Sure, it's a RAID 10 array with 6 disks.

Any help with the command format? I'm still learning… Never mind, I got it: I need to install it on both machines… lol

`iperf -s` on the server

`iperf -c 198.51.100.5 -d` from the client (`-d` runs the test in both directions at once)

Perfect, that's the guide I'm using. I have to reboot the other machine.

```
[ ID] Interval       Transfer     Bandwidth
[  3]  0.0-10.0 sec  2.57 GBytes  2.21 Gbits/sec
```

Seems it's connected. My second machine only has a 2.5G connection… I guess that's as fast as my RAID array is… hmm

ZFS, mdadm, or hardware RAID?

ZFS RAID 10, six 7200 rpm IronWolfs in total. Samba shares are still running at half speed between Linux clients, so that's next on the list.

I guess I was hoping for a little more oommpphhh… Guess SSD storage is needed lol.
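For a rough sense of where a pool like this tops out: in a ZFS "RAID 10" (striped mirror vdevs), every write must land on each disk of a mirror, so sequential writes scale with the number of vdevs. Reads, by contrast, can in principle be spread across all mirror members. The sketch below turns that into idealised upper bounds. `pool_ceiling` is a hypothetical helper, and the per-disk rate you pass in is an assumption you would need to measure or take from the drive's datasheet. Real throughput, especially random I/O and over Samba, will sit well below these figures.

```shell
#!/usr/bin/env bash
# pool_ceiling (hypothetical): idealised sequential ceilings for a ZFS pool
# made of striped n-way mirror vdevs.
#   $1 = total disks, $2 = disks per mirror, $3 = assumed per-disk MB/s
pool_ceiling() {
  awk -v disks="$1" -v width="$2" -v per="$3" 'BEGIN {
    vdevs = int(disks / width)
    # Every member of a mirror stores a full copy, so each vdev writes at
    # roughly one disk speed; data is striped across the vdevs.
    seq_write = vdevs * per
    # Reads can be served by any member, so in the best case every disk helps.
    seq_read = vdevs * width * per
    printf "vdevs=%d write<=%.0fMB/s (%.1fGbit/s) read<=%.0fMB/s (%.1fGbit/s)\n",
      vdevs, seq_write, seq_write * 8 / 1000, seq_read, seq_read * 8 / 1000
  }'
}
```

For example, `pool_ceiling 6 2 200` (200 MB/s per disk is a placeholder, not a measured figure) prints `vdevs=3 write<=600MB/s (4.8Gbit/s) read<=1200MB/s (9.6Gbit/s)`. Comparing it with `pool_ceiling 6 3 200` (two three-way mirrors, `write<=400MB/s`) illustrates why the same six disks write faster as three two-way mirrors.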

The iperf command transfers data between the server instance and the client instance.

It's not tied to disk/array speed; the test data is generated in memory and never touches the disks.

Any slowdown would more likely be network config.

Technically it could be bottlenecked by RAM or CPU, but you're unlikely to be testing it on a machine whose network is faster than its processor/RAM…

Yeah, it's the Ryzen 7 2700 / 64GB ASRock Rack server I built, and a Ryzen 7 3700X / 32GB machine.

Does it run 10 tests and give an average (median/mean/highest)?

No, that was the only output I got.

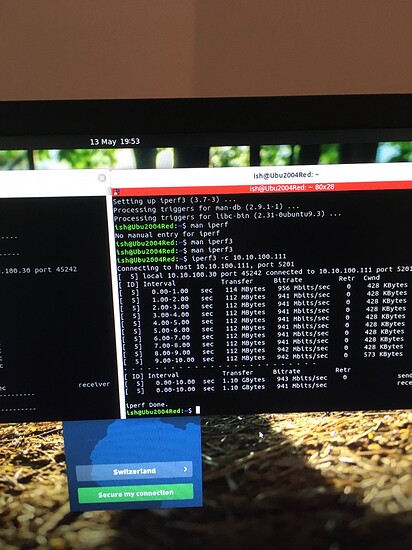

Linux? I get a bunch of tests, but it seems I have iperf3.

(And yes, I Did have to read the manual…)
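On the "10 tests" question: classic iperf (iperf2) only prints the final summary unless you pass an interval, for example `-i 1` for a report every second. iperf3 prints one-second intervals by default. A small filter is enough to get the mean/median/highest asked about above from those interval lines. This is a sketch: `iperf_rates` and `rate_stats` are hypothetical helpers, and the parsing assumes the usual `<number> Gbits/sec` (or `Mbits/sec` / `Kbits/sec`) text output.

```shell
#!/usr/bin/env bash
# iperf_rates (hypothetical): print every bandwidth figure found in iperf or
# iperf3 text output, converted to Gbit/s, one per line.
iperf_rates() {
  awk '{
    for (i = 2; i <= NF; i++) {
      if ($i == "Gbits/sec") print $(i - 1) + 0
      else if ($i == "Mbits/sec") print $(i - 1) / 1000
      else if ($i == "Kbits/sec") print $(i - 1) / 1000000
    }
  }'
}

# rate_stats (hypothetical): mean, median and maximum of numbers read one per
# line on stdin. Exits non-zero if there is no input.
rate_stats() {
  LC_ALL=C sort -n | awk '
    { v[NR] = $1; sum += $1 }
    END {
      if (NR == 0) exit 1
      if (NR % 2) mid = v[(NR + 1) / 2]
      else mid = (v[NR / 2] + v[NR / 2 + 1]) / 2
      printf "mean=%.2f median=%.2f max=%.2f\n", sum / NR, mid, v[NR]
    }'
}
```

With iperf3, drop the end-of-test `sender`/`receiver` summary lines first so they aren't counted as intervals, for example `iperf3 -c 198.51.100.5 -t 10 | grep -v -e sender -e receiver | iperf_rates | rate_stats`.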

This is between two Proxmox servers with 10Gb NICs in them:

```
[  3]  0.0-10.0 sec  10.9 GBytes  9.35 Gbits/sec
```

(which seems plausible)

Yeah, my iperf says it's doing about 2.3 Gbits/sec, so that's close to the max of my second machine with its 2.5G NIC. I'm waiting to install the second NIC in the other server. I may have to try iperf3 instead of regular iperf to get all those tests. So it seems the connection is there and working right, which means it must be a disk max-speed issue.

It's your disks, 99% sure. I'm doing a few test benches on 6x SAS SSDs, and while I'm no ZFS expert (so these are not tuned), the results are telling.

```
config:

NAME          STATE     READ WRITE CKSUM
raid10        ONLINE       0     0     0
  mirror-0    ONLINE       0     0     0
    sda       ONLINE       0     0     0
    sdb       ONLINE       0     0     0
    sdc       ONLINE       0     0     0
  mirror-1    ONLINE       0     0     0
    sdd       ONLINE       0     0     0
    sde       ONLINE       0     0     0
    sdf       ONLINE       0     0     0
```

```
RANDREAD:  bw=81.7MiB/s (85.7MB/s)
RANDWRITE: bw=15.1MiB/s (15.9MB/s)
SEQWRITE:  bw=84.9MiB/s (89.1MB/s)
SEQREAD:   bw=218MiB/s (229MB/s)
```

```
NAME     SIZE  ALLOC   FREE  CKPOINT  EXPANDSZ   FRAG    CAP  DEDUP  HEALTH  ALTROOT
raid10  7.00T  4.00G  6.99T        -         -     0%     0%  1.00x  ONLINE  -
```

```
config:

NAME          STATE     READ WRITE CKSUM
raid10        ONLINE       0     0     0
  mirror-0    ONLINE       0     0     0
    sda       ONLINE       0     0     0
    sdb       ONLINE       0     0     0
  mirror-1    ONLINE       0     0     0
    sdc       ONLINE       0     0     0
    sdd       ONLINE       0     0     0
  mirror-2    ONLINE       0     0     0
    sde       ONLINE       0     0     0
    sdf       ONLINE       0     0     0
```

```
RANDWRITE: bw=24.3MiB/s (25.4MB/s)
RANDREAD:  bw=94.4MiB/s (99.0MB/s)
SEQWRITE:  bw=121MiB/s (127MB/s)
SEQREAD:   bw=221MiB/s (232MB/s)
```
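These look like fio's `bw=` summary lines, where the figure in parentheses is the decimal (MB/s) rate. Converting it to Gbit/s puts the disks and the network on the same scale. Even the best sequential read here, 232 MB/s, is under 2 Gbit/s, which is a small fraction of a 10G link. Below is a sketch. `fio_gbits` is a hypothetical helper that only assumes the `(<number>kB/s)`, `(<number>MB/s)` or `(<number>GB/s)` format shown in these results.

```shell
#!/usr/bin/env bash
# fio_gbits (hypothetical): for each line containing a decimal rate in
# parentheses, e.g. "SEQREAD: bw=221MiB/s (232MB/s)", print the line's first
# field and that rate converted to Gbit/s.
fio_gbits() {
  awk '{
    if (match($0, /\([0-9.]+[kMG]B\/s\)/)) {
      # Strip the leading "(" and the trailing "B/s)" to leave e.g. "232M".
      s = substr($0, RSTART + 1, RLENGTH - 5)
      unit = substr(s, length(s), 1)
      mbs = substr(s, 1, length(s) - 1) + 0
      # Normalise kB/s and GB/s to MB/s (decimal units).
      if (unit == "k") mbs /= 1000
      else if (unit == "G") mbs *= 1000
      printf "%s %.2f Gbit/s\n", $1, mbs * 8 / 1000
    }
  }'
}
```

Piping the second set of results through `fio_gbits` prints, for instance, `SEQREAD: 1.86 Gbit/s` and `RANDWRITE: 0.20 Gbit/s`.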

Yeah, I was fairly sure. Thank you though, it's a good tool to have in the toolbox. iperf, that is.

If I transfer a 1GB file between these servers, I get…

```
100% 975MB 330.8MB/s 00:02
```

(Using scp.) This is most likely a drive limitation…

sam

What category of cable are you using and what’s the run length?
