The new video about Solidigm was released today. Very interesting… I want to know how well their drives work under Linux. Here is what I found when I searched:

-

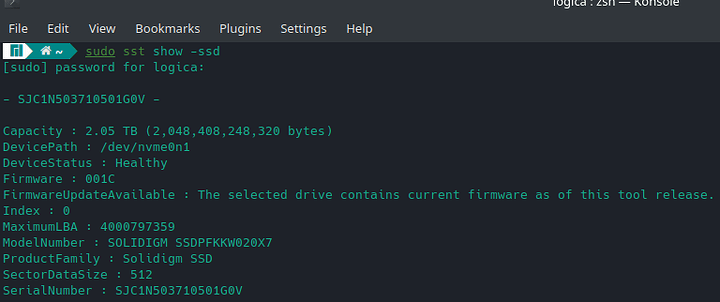

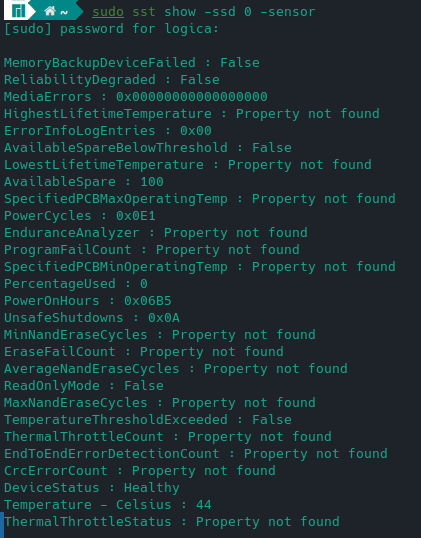

The Solidigm Storage Tool is supported under Linux, latest is 1.7 March 2023

- The Arch wiki page has some good info on Intel/Solidigm.

- The nvme-cli tool has a solidigm plugin, which seems to be mostly about SMART stats and product-specific logs. See commits. It also has commands and documentation specific to Intel, some of which might actually be for this class of drives.

- fwupd seems to have some support for Solidigm, maybe because of Dell, and maybe just for the Intel 670P and p421plus?

Here are the questions I still have:

- Do these devices have Power Loss Protection?

- How well do they work when using LUKS or other encryption types? I remember some very early SSD controllers (SandForce maybe?) did some tricks to improve performance and reduce wear, like block deduplication, Windows-specific things, etc. (this was all pre-TRIM, I think), but those fell down in the face of writing essentially random data. Still, the firmware could probably optimize around which blocks seem to get used the most…

- Is there any plan for an upstreamed Linux driver?
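
To illustrate the encryption question above, here is a rough Python sketch of why compression or deduplication tricks in a controller would get little traction on encrypted data. It is only an analogy, not a model of any real firmware: `zlib` stands in for whatever the controller might do, `os.urandom` stands in for LUKS ciphertext (assuming good ciphertext looks like random bytes), and the `compression_ratio` helper and sample data are made up for the example.

```python
import os
import zlib


def compression_ratio(data: bytes) -> float:
    """Return compressed size divided by original size (lower = more compressible)."""
    return len(zlib.compress(data, 6)) / len(data)


SIZE = 1 << 20  # 1 MiB per sample

# Plaintext-like data: one 4 KiB block repeated, easy to compress or deduplicate.
block = (b"log line: status=ok\n" * 205)[:4096]
repetitive = block * (SIZE // 4096)

# Stand-in for ciphertext (assumption: encrypted blocks look like random bytes).
ciphertext_like = os.urandom(SIZE)

print(f"repetitive data ratio: {compression_ratio(repetitive):.4f}")
print(f"random data ratio:     {compression_ratio(ciphertext_like):.4f}")
```

The repetitive sample should shrink to a tiny fraction of its size, while the random sample should not shrink at all. That is the situation a controller relying on those tricks would be in with an encrypted volume.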