OK, so I am SUPER confused. I have an “Ubuntu” 20.04 system that I cannot, for the life of me, get to do SMB transfers faster than ~200 MB/s no matter what I change, and I am starting to think I am taking crazy pills or SSHed into /dev/null.

Test Setup

Windows 10 VM on latest VMware Tools, VMXNET3 NIC

TrueNAS box with a ConnectX-4 card; both 25G ports are in a LAGG/port-group/VPC, whatever you wanna call it. 4-vdev ZFS pool.

“Ubuntu 20.04” box also has a ConnectX-4 card, with both 25G ports in a LAGG/port-group/VPC. 3-vdev ZFS pool.

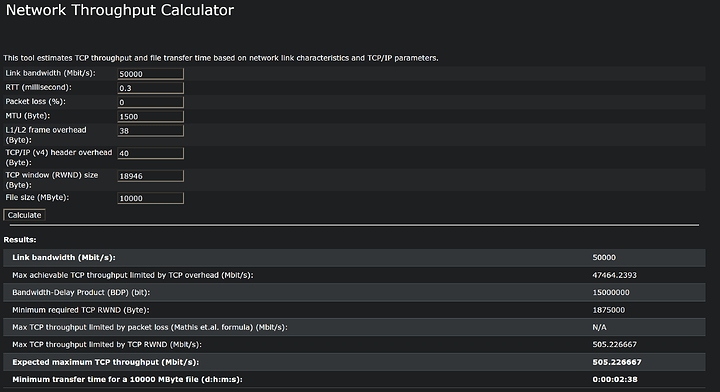

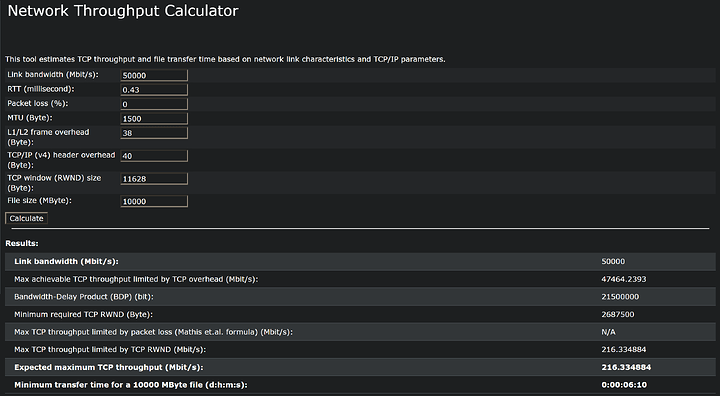

End-to-end latency with a TCP ping is ~0.63 ms for TrueNAS.

End-to-end latency with a TCP ping is ~0.54 ms for “Ubuntu”.

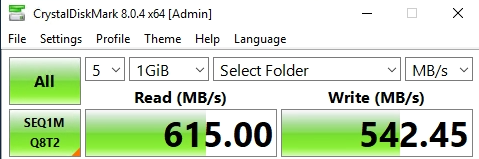
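
For anyone who wants to reproduce a latency check like this, a TCP ping can be approximated by timing the TCP handshake. This is only a rough Python sketch, not necessarily the tool behind the numbers above. It assumes the SMB port (445) is reachable, and the function names are made up for illustration. Python's own overhead will inflate sub-millisecond readings a bit.

```python
import socket
import statistics
import sys
import time


def tcp_connect_latency_ms(host, port=445, samples=10, timeout=2.0):
    """Time the TCP handshake to host:port and return each sample in ms.

    Port 445 (SMB) is an assumed default; any open TCP port works.
    """
    results = []
    for _ in range(samples):
        start = time.perf_counter()
        # create_connection returns once the three-way handshake completes
        with socket.create_connection((host, port), timeout=timeout):
            elapsed = time.perf_counter() - start
        results.append(elapsed * 1000.0)
    return results


def summarize(samples_ms):
    """Reduce a list of samples to min / median / max."""
    return {
        "min": min(samples_ms),
        "median": statistics.median(samples_ms),
        "max": max(samples_ms),
    }


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: python tcp_ping.py <host> [port]
    target_port = int(sys.argv[2]) if len(sys.argv) > 2 else 445
    stats = summarize(tcp_connect_latency_ms(sys.argv[1], target_port))
    print({name: round(value, 3) for name, value in stats.items()})
```

Run it from the Windows VM against each server in turn so both numbers come from the same client and the same path.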

TrueNAS gets speeds I would expect with a single thread/stream.

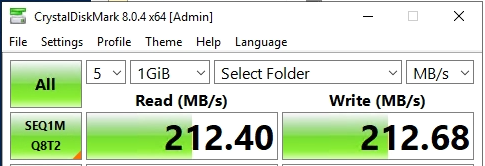

“Ubuntu” gets way less.

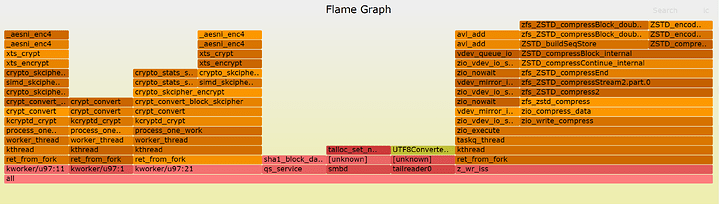

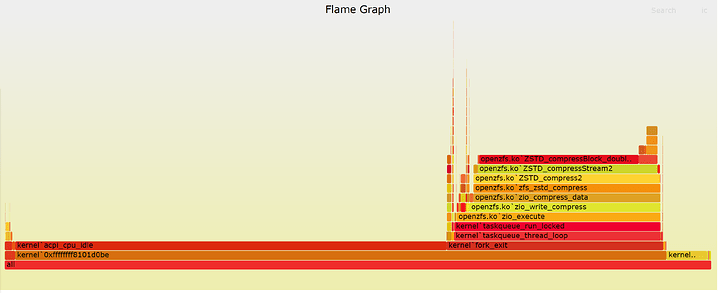

I have tried every Samba tuning option I have seen thrown around; most of them are old and seem to be compiled into the defaults now.

I have tried SMB Multichannel; all it does is spread the load across the NICs, it does not make anything faster. RSS or no RSS, same thing. What am I missing?