Hello Everyone,

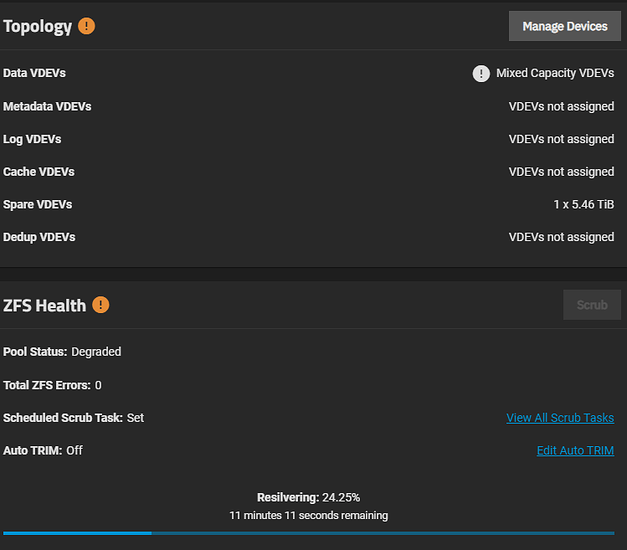

So I recently started dumping all of my DVDs and Blu-rays onto my server. By and large this had been going swimmingly. A few nights ago I was streaming via Jellyfin while more DVDs were being ripped, and the video quality and FPS started to tank. Wondering what was going on, I went to my desktop and pulled up the GUI. The Data VDEVs were listed as mixed capacity (they’re identical 4-disk RAIDZ1 VDEVs with a hot spare) and the Pool Status was Degraded with over 500 ZFS errors. When I looked at the Devices page at the time, the degraded disks were on my StarTech SATA expansion card. I stopped the two discs that were being ripped, pulled a couple of recent work files off the server and onto my desktop, let it finish its in-progress resilver, and shut the server down.

I thought something was wrong with the StarTech card, so after some reading I decided to replace it with a new LSI 9207-8i and a pair of 4-way breakout cables.

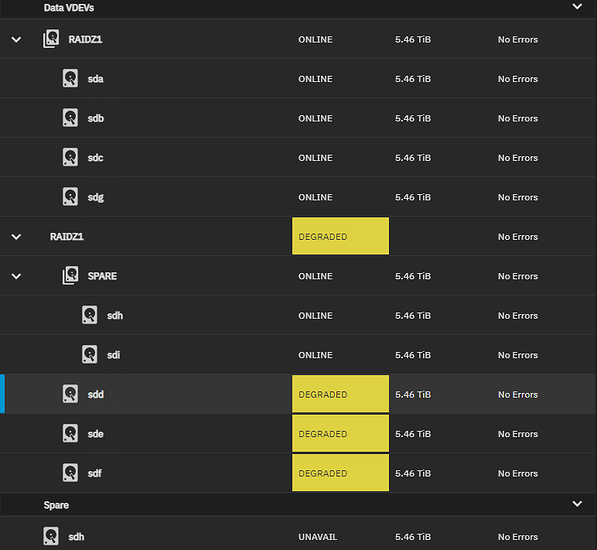

The card and cables arrived today. I popped them in, plugged in the corresponding drives, and booted the server back up. It started resilvering, and I went into the Devices page to see what all the drives were up to.

Not much in the way of useful info there, so I went and ran zpool status in the shell.

Now, when this first happened late Saturday night, I didn’t know about zpool status, so I didn’t think to run it. But today it was showing 48 errors. I don’t understand what triggers a checksum error, but I do know I hadn’t written to or read from any drives while this was happening. This is also where I realized that my spare got called off the bench.
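For anyone who wants to pull just the per-drive error counters out of that output, here is a minimal Python sketch. It assumes the usual NAME / STATE / READ / WRITE / CKSUM column layout that zpool status prints; the function names `parse_error_counts` and `devices_with_errors` are my own hypothetical helpers, not part of any ZFS or TrueNAS tooling.

```python
import shutil
import subprocess


def parse_error_counts(status_text):
    """Return {device_name: (read, write, cksum)} from `zpool status` text.

    Counts stay as strings because zpool abbreviates large values (e.g. "1.2K").
    """
    counts = {}
    in_config = False
    for line in status_text.splitlines():
        fields = line.split()
        if fields[:5] == ["NAME", "STATE", "READ", "WRITE", "CKSUM"]:
            in_config = True  # header row of the device table
            continue
        if not in_config:
            continue
        if not fields:
            in_config = False  # a blank line ends the device table
            continue
        if len(fields) < 5:
            continue  # section labels such as "spares"
        name, _state, read, write, cksum = fields[:5]
        # Skip rows whose counter columns are really text (e.g. a spare "in use").
        if all(value[:1].isdigit() for value in (read, write, cksum)):
            counts[name] = (read, write, cksum)
    return counts


def devices_with_errors(counts):
    """Keep only the rows where at least one counter is non-zero."""
    return {n: c for n, c in counts.items() if any(v != "0" for v in c)}


if __name__ == "__main__" and shutil.which("zpool"):
    # Only runs on a machine that actually has the zpool command.
    out = subprocess.run(["zpool", "status"], capture_output=True, text=True).stdout
    for name, (r, w, c) in devices_with_errors(parse_error_counts(out)).items():
        print(f"{name}: read={r} write={w} cksum={c}")
```

Run from the TrueNAS shell, this would only list the rows with a non-zero READ, WRITE, or CKSUM value, which is easier to eyeball than the full table. It does not tell you why the errors happened.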

For some reason TrueNAS seems to be shuffling the sd letter assignments, which led me to a couple of wrong conclusions. I originally thought it was the StarTech card. But when I booted it up today, it had sda through sdd listed as the drives in trouble. Per the original configuration, sda through sdd are on the mobo, so I thought maybe I just had too much happening for the mobo to keep up with. Now that 8 of the drives are on the LSI card, the lettering has roughly gone back to the original. So my original thought to blame the StarTech card seems to stand up again.
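Since the sd letters can't be trusted to stay put, one way to keep track of which physical drive is which is to look at the persistent aliases Linux keeps under /dev/disk/by-id (these usually include the model and serial number). Below is a minimal Python sketch of that idea; `stable_names` is a hypothetical helper name of mine, and it assumes the system exposes /dev/disk/by-id as a directory of symlinks, which is standard on Linux but which I have not verified on every TrueNAS release.

```python
import os
from collections import defaultdict


def stable_names(by_id_dir="/dev/disk/by-id"):
    """Map each kernel device name (e.g. "sda") to its persistent by-id aliases."""
    mapping = defaultdict(list)
    for entry in sorted(os.listdir(by_id_dir)):
        path = os.path.join(by_id_dir, entry)
        if not os.path.islink(path):
            continue  # by-id entries are expected to be symlinks
        # Resolve the symlink and keep only the final component, e.g. "sda".
        target = os.path.basename(os.path.realpath(path))
        mapping[target].append(entry)
    return dict(mapping)


if __name__ == "__main__" and os.path.isdir("/dev/disk/by-id"):
    for dev, aliases in sorted(stable_names().items()):
        print(dev, "->", ", ".join(aliases))
```

Matching the serial in the alias against the label on the drive (or the cable it is plugged into) should show which controller a given sd letter is really on for the current boot.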

Resilver is done:

And here’s where I don’t know what to do next. I did read the doc it pointed me to, but I’m still not sure what to do. I can run the zpool clear command, but will that take the drives out of degraded mode? And why did my spare get pulled into this? All the drives in raidz1-1 only have about 400 hours on them, and I’m not buying the idea that 3 brand new drives are having an early death. It had to be the StarTech expansion card. That aside, I don’t know what the 500+ errors were that piled up before I realized something was wrong. (I have tried to set up OAuth email to alert me of stuff like this, but it REFUSES to work.) As far as I can tell, none of the files that were being written or read while this was all going sideways seem to be corrupted.

So what should I be doing here? Do I clear the pool and carry on? Is there a way to see all the previous errors? Why is my spare drive involved? If the card was the issue, how do I get the drive that the spare replaced back into the mix as the new spare? Anyone got any ideas on the email thing? This guide is what I followed and I got no love.

For reference, this is the NAS build:

Mobo: GIGABYTE Z590 AORUS Master

CPU: i5-11600K

Network: Dual 10Gb X540-AT2

NVME: Samsung 980 Pro

Pool: 9x 6TB WD Blue

(4 in a RaidZ1)

(4 in a RaidZ1)

(1 as a Hot Spare)

HBA: LSI 9207-8i

Optical: 2x LG WH16NS40 16x Blu-ray

PSU: Corsair HX1000

Case: Corsair 750D