R5 for budget builds.

One thing worth noting: if you compared the performance of those parts to Intel's, you would be paying a lot more on the Intel side. So depending on how you look at it, yes, it is cheap.

I feel like I need to rant on this in the blog section of the forum.

Very grateful for this review. I haven't taken the Ryzen plunge yet, but was interested in doing PCI-passthrough with Bhyve running FreeBSD 11. ServeTheHome found FreeBSD 11 booted just fine on the 1700, but they hadn't tested much in the way of IOMMU.

The VFIO maintainer seems to have had some contact with AMD:

> Initial information from AMD suggests that ACS may in fact be enabled via a BIOS option. I cannot confirm yet if this should be available on all versions of Ryzen. Do you have an "NBIO Debug Options" selection available under AMD CBS? The only video I've found shows various "Common Options" menus, including "NBIO Common Options", but the video didn't descend into that menu. Maybe there's a global debug or advanced options flag that could make such a menu item appear. Or perhaps it's not available in these initial firmware releases yet and vendors need pressure from AMD and/or users to make it available.

https://www.redhat.com/archives/vfio-users/2017-March/msg00021.html

Have you (or anyone else) seen anything in "NBIO Common Options" that might enable some additional options/menus relating to ACS? His post seems to suggest that that's where the magic button would be if mobo makers enabled it on their end.

I watched your Taichi reviews carefully for a glimpse of anything like that but didn't see anything. Perhaps on some of the other boards?

I saw this when it came through on the mailing list.

The MSI Tomahawk B350 lacks IOMMU functionality altogether, as far as I can tell.

The Gigabyte AX370 and ASRock Taichi X370 have nearly identical IOMMU groupings. I do have options under NB, but no combination of them produces proper groupings for the IOMMU peripherals. So I dug around in there after seeing this post, but... nada.

I have also been in contact with Gigabyte and ASRock engineers, who, from what I understand, have escalated it up to AMD. I was also talking to AMD's technical marketing person on Twitter about an hour ago, and he said to "hold on this while he investigates" and that he'd report back in a few days.

My patch gives the best groupings, but the lack of true isolation means that similar cards can still "see" each other and funky stuff happens, e.g. crashes and panics.
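For reference, ACS is a PCIe extended capability (ID 0x000D), and whether the root and downstream ports advertise it is roughly what decides how finely Linux can split IOMMU groups without an override patch (the kernel also has per-device quirks, so this is not the whole story). A minimal Python sketch that walks a device's extended capability list looking for it, assuming you run it as root and substitute a real port address for the hypothetical `0000:00:01.1`:

```python
import struct
from typing import Optional

PCI_EXT_CAP_START = 0x100    # extended capability list starts at offset 0x100
PCI_EXT_CAP_ID_ACS = 0x000D  # Access Control Services extended capability ID


def find_ext_cap(config: bytes, cap_id: int) -> Optional[int]:
    """Walk the PCIe extended capability list; return the offset of cap_id or None."""
    offset = PCI_EXT_CAP_START
    seen = set()
    # Each header is 32 bits: ID in bits 0-15, version in 16-19, next offset in 20-31.
    while offset >= PCI_EXT_CAP_START and offset + 4 <= len(config) and offset not in seen:
        seen.add(offset)  # guard against malformed, looping lists
        (header,) = struct.unpack_from("<I", config, offset)
        if header in (0, 0xFFFFFFFF):  # empty list or unreadable device
            return None
        if header & 0xFFFF == cap_id:
            return offset
        offset = (header >> 20) & 0xFFC  # next pointer, dword aligned
    return None


def has_acs(config: bytes) -> bool:
    return find_ext_cap(config, PCI_EXT_CAP_ID_ACS) is not None


def device_has_acs(addr: str) -> bool:
    # Needs root: unprivileged reads of "config" only return the start of config space,
    # so the extended capabilities at 0x100+ would be missing and this returns False.
    with open(f"/sys/bus/pci/devices/{addr}/config", "rb") as f:
        return has_acs(f.read())
```

Usage would be something like `device_has_acs("0000:00:01.1")` for each root port above your GPUs. As far as I know, `lspci -vvv` run as root also lists "Access Control Services" under a port's capabilities when it is present.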

Well, that's a shame. Doesn't seem like there are any stones left unturned.

But great to hear AMD responding on Twitter, and GB/ASRock reaching out to AMD directly. If there's a path forward I guess that would be it.

Seems like I made a good choice holding out for a Gigabyte board. To think I almost scooped up an MSI on the off-chance it would work... no IOMMU at all is some pretty weak sauce.

That's just the B350. I want to take a look at the Titanium X370, which is still pending.

I have an MSI B350 Tomahawk. I've been able to achieve some(*) success with PCIe passthrough, so I'm not sure what you mean by "lacks all IOMMU functionality." Linux detects AMD-Vi, and I usually get a few faults during bootup (especially when the amdgpu driver loads).

As for my qualified success, I've been able to start an Ubuntu VM and have it drive the passed-through GPU (RX480), but it's not exactly usable. The display is either incredibly laggy, or it will hang after a few seconds, or it will crash my host system, depending on what combination of settings I used. I've tried with Windows as a guest. If I start with a clean install, the Windows installer will actually use the passed-through GPU no problem at all. However, once I boot into the "real" Windows environment, the display goes black shortly after the desktop gets rendered (presumably some driver is being updated that is no longer happy?)

The path I've been going down is to have the host use a GPU in the PCIe 2.0 slot and pass through the GPU in the PCIe 3.0 slot (CPU lanes). It was more than a little hassle to get Linux to stop using the BIOS-initialized card, but I think I have a handle on that now.

I have read that people recommend not trying to pass through a BIOS-initialized card, but I'm wondering if there has been any success with that configuration on any chipset. At the very least, both BIOS and EFI (TianoCore) VMs have been able to light up the PCIe 3.0 GPU, so I'm curious what sorts of failure modes would be caused by doing this.

Mine fault-loops immediately on boot, then panics. This happens during AMD-Vi init (IO faults). I could not find any IOMMU options in UEFI.

Using an RX 480 plus an RX 460. Will try other GPU combos.

Version 0.9 of anything is generally not the most reliable thing. AMD have certainly not managed the launch that well, and I don't think the motherboards were totally ready for version 1.0 status. I suspect April will be a much better month for X370 and B350 motherboards.

You are comparing an i7-class build with a Pentium/i3 build, so the price comparison is not really realistic. What would the comparable 7700K build run to?

Could you bind the secondary GPU to vfio-pci instead of the AMD drivers in the host system and pass through the entire group?

No, you can't pass just one device through with VFIO when the groups look like this. I did find this, which sounds promising, but I haven't tried it yet: https://lwn.net/Articles/660745/

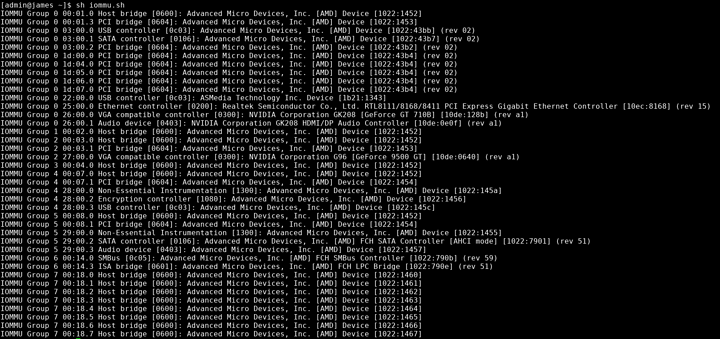

Well, I installed a VGA card in the x4 slot and it seemed to be about right, though I haven't got passthrough actually working yet. Perhaps some of you could help me work out what the groupings mean in this situation?

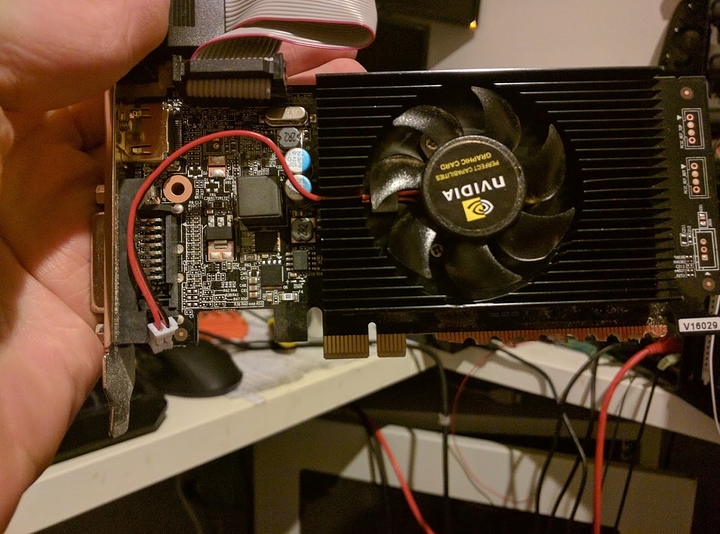

Here's how I got a GPU to run in an x4 slot:

I am without remorse for what I did to that poor card.

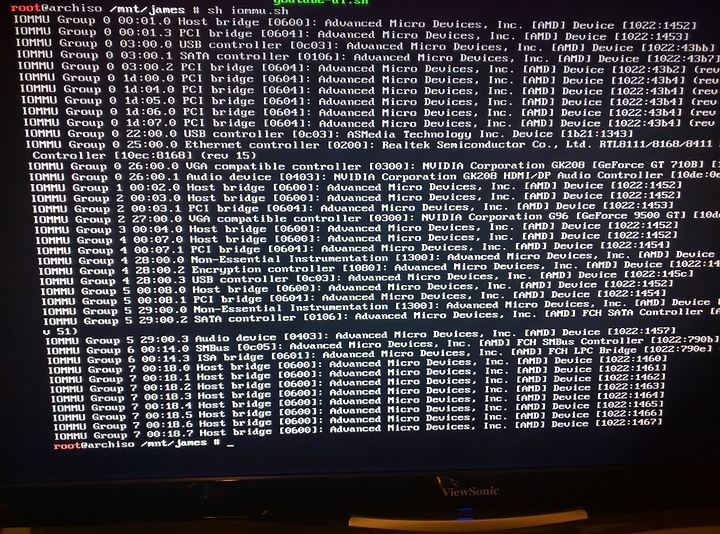

For whatever reason, my Arch install did not agree with having two cards installed at once, so I had to run the iommu.sh bash script from a live USB:
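For anyone without the script handy: the common versions of iommu.sh just walk `/sys/kernel/iommu_groups` and run `lspci -nns` on each device. A rough Python equivalent of the walking part, offered as a sketch (it only prints PCI addresses; feed those to `lspci -nns <address>` to get names and vendor:device IDs):

```python
import os


def iommu_groups(root="/sys/kernel/iommu_groups"):
    """Map IOMMU group number -> sorted list of PCI addresses in that group."""
    groups = {}
    if not os.path.isdir(root):
        return groups  # no IOMMU groups: IOMMU disabled in firmware or kernel
    for name in os.listdir(root):
        dev_dir = os.path.join(root, name, "devices")
        if name.isdigit() and os.path.isdir(dev_dir):
            groups[int(name)] = sorted(os.listdir(dev_dir))
    return dict(sorted(groups.items()))  # numeric order, so 2 comes before 10


if __name__ == "__main__":
    groups = iommu_groups()
    if not groups:
        print("No IOMMU groups found (is the IOMMU enabled?)")
    for num, devices in groups.items():
        for addr in devices:
            print(f"IOMMU Group {num}: {addr}")
```

An empty result is itself informative: it usually means the IOMMU is off in firmware or not enabled on the kernel command line.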

Looks like the two GPUs are separated into group 0 and group 2. Does this look viable for PCI passthrough to you guys? I'll be honest - I don't really know what I'm looking at. Is having the PCI bridge in the same group as the GPU going to be a problem?
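On the bridge question: my understanding (not verified on Ryzen specifically) is that VFIO only needs the non-bridge devices in a group to be bound to vfio-pci, bound to pci-stub, or driverless, so a PCI-to-PCI bridge sharing the GPU's group is normally fine. A sketch of that check in Python, assuming group 2 is the one you want to hand to the VM; the allowed-driver list reflects that assumption rather than anything authoritative:

```python
import os

PCI_CLASS_BRIDGE_PCI = 0x0604  # base class 0x06 (bridge), subclass 0x04 (PCI-to-PCI)
ALLOWED_DRIVERS = ("vfio-pci", "pci-stub")  # assumption: drivers VFIO tolerates


def read_class(dev_path):
    # sysfs "class" holds 0xCCSSPP: base class, subclass, programming interface
    with open(os.path.join(dev_path, "class")) as f:
        return int(f.read().strip(), 16)


def bound_driver(dev_path):
    # "driver" is a symlink to the bound driver; it is absent if nothing is bound
    link = os.path.join(dev_path, "driver")
    return os.path.basename(os.readlink(link)) if os.path.islink(link) else None


def blockers(group_dir, allowed=ALLOWED_DRIVERS):
    """Return (address, driver) pairs that would likely stop this group being assigned."""
    problems = []
    dev_root = os.path.join(group_dir, "devices")
    for addr in sorted(os.listdir(dev_root)):
        path = os.path.join(dev_root, addr)
        if read_class(path) >> 8 == PCI_CLASS_BRIDGE_PCI:
            continue  # assumption: bridges may stay on pcieport
        drv = bound_driver(path)
        if drv is not None and drv not in allowed:
            problems.append((addr, drv))
    return problems
```

Calling `blockers("/sys/kernel/iommu_groups/2")` and getting an empty list would suggest the group is at least assignable; a GPU's HDMI audio function left on `snd_hda_intel` is the classic thing this catches.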

Whether this works or not, it's been fun to play with. My 2500k is still going strong, so I'm happy to play with this for a while before moving all my files onto the 1700 PC.

The problem will be that there's no way to boot from the x4 first and leave the x16 uninitialized.

And the x4 will be put into group 0, I'd guess.

Well, it did put the x4 into group 0.

Regarding passthrough - is the issue with booting from the x16 trying to re-initialise the GPU when it is passed through to the VM's EFI? As I understand the idea, the host OS attaches it to a stub driver to prevent Linux from using the x16 GPU. Later, it is passed through to the VM. How does booting the Host from the x16 GPU affect this process?
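For the binding step itself, kernels from around 3.16 onward also let you do it at runtime through the sysfs `driver_override` file instead of a stub driver at boot. A minimal Python sketch, assuming it runs as root with the vfio-pci module already loaded; the address is hypothetical, and note it does nothing about the firmware having already initialized the card, which is the real problem being discussed here:

```python
import os


def bind_to_vfio(addr, sysfs="/sys/bus/pci"):
    """Rebind one PCI function (e.g. "0000:0a:00.0") to vfio-pci via driver_override."""
    dev = os.path.join(sysfs, "devices", addr)
    # 1. Tell the PCI core that only vfio-pci may claim this device from now on.
    with open(os.path.join(dev, "driver_override"), "w") as f:
        f.write("vfio-pci")
    # 2. Detach it from whatever driver currently owns it (amdgpu, nouveau, ...).
    if os.path.isdir(os.path.join(dev, "driver")):
        with open(os.path.join(dev, "driver", "unbind"), "w") as f:
            f.write(addr)
    # 3. Ask the kernel to re-probe; vfio-pci should now pick the device up.
    with open(os.path.join(sysfs, "drivers_probe"), "w") as f:
        f.write(addr)
```

Every function in the group (the GPU's audio function included) would need the same treatment before the group can be handed to a VM.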

I have a Fury, so once it's initialized, it's done. I might be able to get the 480 to work, but once some cards are initialized, they're done.

I might be able to hack VFIO to reset the card (for some cards), but it's not very user-friendly.

Try adding the vfio options and see if you can actually boot a VM that initialized that card in group 2.


Well, I have made some progress. Not booting a VM, but progress anyway.

I set up vfio-pci to attach itself to the boot GPU after system start. Here's a 29-second video of it (please ignore the piles of junk on my desk).

The machine starts booting on the first GPU (right screen); then, when vfio-pci attaches to the boot GPU, that screen is disconnected and the other graphics card (left screen) takes over. Unfortunately, I don't know how to get a terminal to open on the second graphics card after it boots. I think it may involve changing X's config files, but I'm still exploring. Any ideas?

So between the 9500 GT and the GT 710, which one was mauled?

Still waiting for my Gigabyte K7 board. I actually canceled my order for an Asus board when I saw that Gigabyte seemed to care about stuff like this, even on their consumer boards. I think Ryzen will be one killer platform for virtualized desktops once these problems get sorted out.

The GT 710.

The 9500 is an old warhorse I keep in my junk drawer for just this situation. I wouldn't want to use it as a daily GPU.

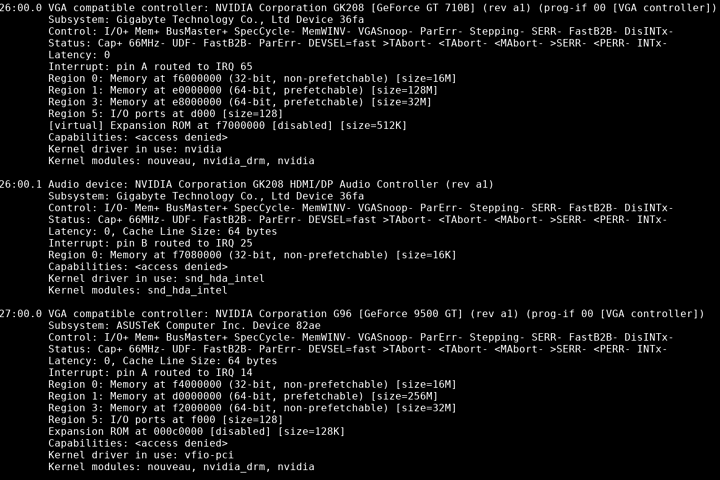

Good news - I got it to boot using the correct card and got it to the desktop.

See attached:

The 9500 is in the x16 slot, but it is attached to the vfio-pci driver and not used by Linux. Instead, Arch is using the 710, attached to the x4 PCIe 2.0 link from the chipset.
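A quick way to confirm this kind of setup after each boot is to list every display-class device (PCI base class 0x03) and the driver it is bound to. A Python sketch under that assumption; the addresses and driver names in it are placeholders for whatever your system reports:

```python
import os


def display_devices(sysfs_devices="/sys/bus/pci/devices"):
    """Map PCI address -> bound driver name (or None) for every display-class device."""
    result = {}
    for addr in sorted(os.listdir(sysfs_devices)):
        path = os.path.join(sysfs_devices, addr)
        with open(os.path.join(path, "class")) as f:
            cls = int(f.read().strip(), 16)  # 0xCCSSPP
        if cls >> 16 != 0x03:  # base class 0x03 = display controller
            continue
        link = os.path.join(path, "driver")
        result[addr] = os.path.basename(os.readlink(link)) if os.path.islink(link) else None
    return result
```

With the setup described above, the x16 card should show up as `vfio-pci` and the x4 card as whatever driver the host desktop is running on.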

Next, I'll try to hook the 9500 into a VM. If I can prove the concept there, I'll move on to trying to get it working on my 1070.

Hahahaha best way to mod card, ever :D