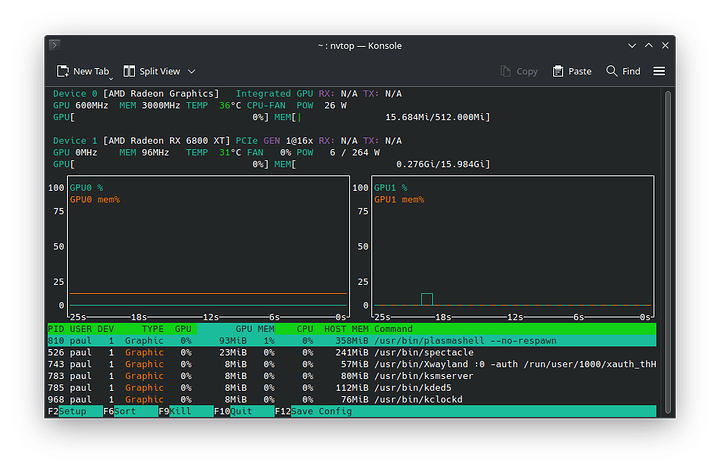

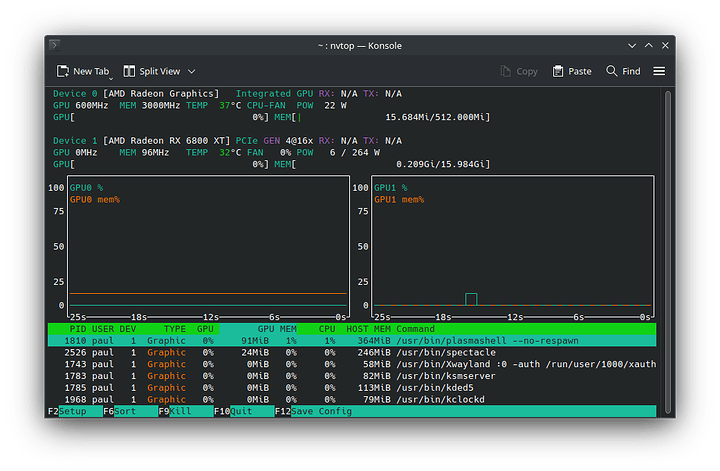

Unfortunately, after many months, this is still an issue, and it doesn’t seem to be an nvtop issue.

I sometimes still boot with my GPU at gen 1 or gen 3 instead of gen 4, and I can confirm it degrades performance both in games and in a performance test. It’s especially noticeable at gen 1.

Script

I’ve tried to work out whether this is a hardware, BIOS, or driver issue. I read through several issues on the amdgpu gitlab, and I wrote this script, which I run whenever nvtop reports anything other than gen 4.

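    #!/usr/bin/env bash
    # Print link info for every PCI function between the root port and the GPU.
    pcidevpath='/sys/bus/pci/devices'
    # Addresses are specific to my system, listed from the root port down to the GPU.
    dev1='00:01.1'
    dev2='01:00.0'
    dev3='02:00.0'
    gpu='03:00.0'
    cd "$pcidevpath" || exit 1
    for dev in $dev1 $dev2 $dev3 $gpu; do
        lspci -nnk -s "$dev"
        sudo lspci -vvv -s "$dev" | grep 'LnkSta:'
        sudo dmesg | grep "pci 0000:$dev"
        # Each device is a subdirectory of its parent bridge, so this cd
        # descends one level per iteration.
        cd "0000:$dev" || exit 1
        for file in pp_dpm_pcie current_link_speed current_link_width; do
            [[ -f "$file" ]] && echo "$file: " && cat "$file"
        done
        echo
    done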

Basically, it walks through the sysfs directories of the “PCI Bridges” that lead to my GPU, and prints (hopefully) relevant info from lspci, dmesg, and the link-status files in each directory.
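The addresses in my script are hardcoded for my system. As a rough sketch of how the same chain could be derived automatically, the function below pulls the PCI addresses out of a resolved sysfs path. It assumes the resolved path nests each bridge as a parent directory of the device behind it, and `pci_chain_from_path` is just a name I made up for illustration.

```shell
#!/usr/bin/env bash
# Sketch: list every PCI function on the path from the root port to a device.
# Assumption: the resolved sysfs path nests each bridge as a parent directory, e.g.
#   /sys/devices/pci0000:00/0000:00:01.1/0000:01:00.0/0000:02:00.0/0000:03:00.0

# pci_chain_from_path PATH (hypothetical helper)
# Prints one PCI address (domain:bus:device.function) per line, root port first.
pci_chain_from_path() {
    local path=$1 part
    local -a parts
    # Only path components shaped like 0000:03:00.0 are kept; "pci0000:00" is skipped.
    local re='^[0-9a-fA-F]{4}:[0-9a-fA-F]{2}:[0-9a-fA-F]{2}\.[0-7]$'
    IFS='/' read -r -a parts <<< "$path"
    for part in "${parts[@]}"; do
        if [[ $part =~ $re ]]; then
            printf '%s\n' "$part"
        fi
    done
}

# Optional usage: pass the full device address (e.g. 0000:03:00.0) as the first argument.
if [[ -n ${1:-} ]]; then
    pci_chain_from_path "$(readlink -f "/sys/bus/pci/devices/$1")"
fi
```

On my system I would expect this to print the same four addresses that are hardcoded in the script, but I have only reasoned about it from the directory layout.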

Output

Here’s the output (captured while nvtop reports gen 1), split up by device to make it easier to read:

First, the “Root Port”. Notice that current_link_speed is at GEN1 (2.5 GT/s) here!

00:01.1 PCI bridge [0604]: Advanced Micro Devices, Inc. [AMD] Device [1022:14db]

Subsystem: Micro-Star International Co., Ltd. [MSI] Device [1462:7d78]

Kernel driver in use: pcieport

LnkSta: Speed 2.5GT/s, Width x16

[ 0.305829] pci 0000:00:01.1: [1022:14db] type 01 class 0x060400 PCIe Root Port

[ 0.305845] pci 0000:00:01.1: PCI bridge to [bus 01-03]

[ 0.305849] pci 0000:00:01.1: bridge window [io 0xf000-0xffff]

[ 0.305852] pci 0000:00:01.1: bridge window [mem 0xfcb00000-0xfcdfffff]

[ 0.305857] pci 0000:00:01.1: bridge window [mem 0xf800000000-0xfc0fffffff 64bit pref]

[ 0.305916] pci 0000:00:01.1: PME# supported from D0 D3hot D3cold

[ 0.307845] pci 0000:00:01.1: PCI bridge to [bus 01-03]

[ 2.347191] pci 0000:00:01.1: PCI bridge to [bus 01-03]

[ 2.347192] pci 0000:00:01.1: bridge window [io 0xf000-0xffff]

[ 2.347195] pci 0000:00:01.1: bridge window [mem 0xfcb00000-0xfcdfffff]

[ 2.347197] pci 0000:00:01.1: bridge window [mem 0xf800000000-0xfc0fffffff 64bit pref]

[ 2.348023] pci 0000:00:01.1: Adding to iommu group 1

current_link_speed:

2.5 GT/s PCIe

current_link_width:

16

Next, the Navi 10 upstream port. current_link_speed is also at GEN1 speed, lspci shows LnkSta as “(downgraded)”, and dmesg reports “32.000 Gb/s available PCIe bandwidth, limited by 2.5 GT/s PCIe x16 link at 0000:00:01.1”, i.e. the root port.

01:00.0 PCI bridge [0604]: Advanced Micro Devices, Inc. [AMD/ATI] Navi 10 XL Upstream Port of PCI Express Switch [1002:1478] (rev c1)

Kernel driver in use: pcieport

LnkSta: Speed 2.5GT/s (downgraded), Width x16

[ 0.307503] pci 0000:01:00.0: [1002:1478] type 01 class 0x060400 PCIe Switch Upstream Port

[ 0.307518] pci 0000:01:00.0: BAR 0 [mem 0xfcd00000-0xfcd03fff]

[ 0.307535] pci 0000:01:00.0: PCI bridge to [bus 02-03]

[ 0.307541] pci 0000:01:00.0: bridge window [io 0xf000-0xffff]

[ 0.307544] pci 0000:01:00.0: bridge window [mem 0xfcb00000-0xfccfffff]

[ 0.307554] pci 0000:01:00.0: bridge window [mem 0xf800000000-0xfc0fffffff 64bit pref]

[ 0.307647] pci 0000:01:00.0: PME# supported from D0 D3hot D3cold

[ 0.307738] pci 0000:01:00.0: 32.000 Gb/s available PCIe bandwidth, limited by 2.5 GT/s PCIe x16 link at 0000:00:01.1 (capable of 252.048 Gb/s with 16.0 GT/s PCIe x16 link)

[ 0.308636] pci 0000:01:00.0: PCI bridge to [bus 02-03]

[ 2.347177] pci 0000:01:00.0: PCI bridge to [bus 02-03]

[ 2.347179] pci 0000:01:00.0: bridge window [io 0xf000-0xffff]

[ 2.347183] pci 0000:01:00.0: bridge window [mem 0xfcb00000-0xfccfffff]

[ 2.347186] pci 0000:01:00.0: bridge window [mem 0xf800000000-0xfc0fffffff 64bit pref]

[ 2.348332] pci 0000:01:00.0: Adding to iommu group 12

current_link_speed:

2.5 GT/s PCIe

current_link_width:

16

Next, the downstream port, which shows GEN4 speeds.

02:00.0 PCI bridge [0604]: Advanced Micro Devices, Inc. [AMD/ATI] Navi 10 XL Downstream Port of PCI Express Switch [1002:1479]

Subsystem: Advanced Micro Devices, Inc. [AMD/ATI] Navi 10 XL Downstream Port of PCI Express Switch [1002:1479]

Kernel driver in use: pcieport

LnkSta: Speed 16GT/s, Width x16

[ 0.307910] pci 0000:02:00.0: [1002:1479] type 01 class 0x060400 PCIe Switch Downstream Port

[ 0.307940] pci 0000:02:00.0: PCI bridge to [bus 03]

[ 0.307945] pci 0000:02:00.0: bridge window [io 0xf000-0xffff]

[ 0.307948] pci 0000:02:00.0: bridge window [mem 0xfcb00000-0xfccfffff]

[ 0.307958] pci 0000:02:00.0: bridge window [mem 0xf800000000-0xfc0fffffff 64bit pref]

[ 0.308046] pci 0000:02:00.0: PME# supported from D0 D3hot D3cold

[ 0.309296] pci 0000:02:00.0: PCI bridge to [bus 03]

[ 2.347162] pci 0000:02:00.0: PCI bridge to [bus 03]

[ 2.347165] pci 0000:02:00.0: bridge window [io 0xf000-0xffff]

[ 2.347169] pci 0000:02:00.0: bridge window [mem 0xfcb00000-0xfccfffff]

[ 2.347172] pci 0000:02:00.0: bridge window [mem 0xf800000000-0xfc0fffffff 64bit pref]

[ 2.348352] pci 0000:02:00.0: Adding to iommu group 13

current_link_speed:

16.0 GT/s PCIe

current_link_width:

16

And finally my GPU, which also shows GEN4 speeds, except that pp_dpm_pcie does not have an asterisk next to either option 0 or option 1. dmesg also says the bandwidth is limited by the root port.

03:00.0 VGA compatible controller [0300]: Advanced Micro Devices, Inc. [AMD/ATI] Navi 21 [Radeon RX 6800/6800 XT / 6900 XT] [1002:73bf] (rev c1)

Subsystem: Micro-Star International Co., Ltd. [MSI] Device [1462:3951]

Kernel driver in use: amdgpu

Kernel modules: amdgpu

LnkSta: Speed 16GT/s, Width x16

[ 0.308711] pci 0000:03:00.0: [1002:73bf] type 00 class 0x030000 PCIe Legacy Endpoint

[ 0.308729] pci 0000:03:00.0: BAR 0 [mem 0xf800000000-0xfbffffffff 64bit pref]

[ 0.308741] pci 0000:03:00.0: BAR 2 [mem 0xfc00000000-0xfc0fffffff 64bit pref]

[ 0.308749] pci 0000:03:00.0: BAR 4 [io 0xf000-0xf0ff]

[ 0.308757] pci 0000:03:00.0: BAR 5 [mem 0xfcb00000-0xfcbfffff]

[ 0.308765] pci 0000:03:00.0: ROM [mem 0xfcc00000-0xfcc1ffff pref]

[ 0.308792] pci 0000:03:00.0: BAR 0: assigned to efifb

[ 0.308863] pci 0000:03:00.0: PME# supported from D1 D2 D3hot D3cold

[ 0.308964] pci 0000:03:00.0: 32.000 Gb/s available PCIe bandwidth, limited by 2.5 GT/s PCIe x16 link at 0000:00:01.1 (capable of 252.048 Gb/s with 16.0 GT/s PCIe x16 link)

[ 2.326646] pci 0000:03:00.0: vgaarb: setting as boot VGA device

[ 2.326646] pci 0000:03:00.0: vgaarb: bridge control possible

[ 2.326646] pci 0000:03:00.0: vgaarb: VGA device added: decodes=io+mem,owns=none,locks=none

[ 2.348387] pci 0000:03:00.0: Adding to iommu group 14

pp_dpm_pcie:

0: 2.5GT/s, x1 310Mhz

1: 16.0GT/s, x16 619Mhz

current_link_speed:

16.0 GT/s PCIe

current_link_width:

16
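The missing asterisk in pp_dpm_pcie above is easy to overlook by eye. A minimal sketch for checking it, assuming the active level is the line marked with `*` (the helper name `active_dpm_level` is made up for illustration):

```shell
#!/usr/bin/env bash
# active_dpm_level (hypothetical helper)
# Reads pp_dpm_pcie-style text on stdin and prints the line marked with "*",
# or "none" if no level is marked as active.
active_dpm_level() {
    local line found=
    while IFS= read -r line; do
        if [[ $line == *'*'* ]]; then
            found=$line
        fi
    done
    printf '%s\n' "${found:-none}"
}

# Example (path taken from my system):
#   active_dpm_level < /sys/bus/pci/devices/0000:03:00.0/pp_dpm_pcie
```

With the pp_dpm_pcie contents shown above, this prints `none`.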

Finally, here is the output of the performance test:

$ echo 4 | sudo tee /sys/kernel/debug/dri/1/amdgpu_benchmark

Apr 19 21:46:29 kernel: amdgpu 0000:03:00.0: amdgpu: amdgpu: dma 1024 bo moves of 4 kB from 4 to 2 in 51 ms, throughput: 640 Mb/s or 80 MB/s

Apr 19 21:46:29 kernel: amdgpu 0000:03:00.0: amdgpu: amdgpu: dma 1024 bo moves of 8 kB from 4 to 2 in 52 ms, throughput: 1256 Mb/s or 157 MB/s

Apr 19 21:46:29 kernel: amdgpu 0000:03:00.0: amdgpu: amdgpu: dma 1024 bo moves of 16 kB from 4 to 2 in 54 ms, throughput: 2424 Mb/s or 303 MB/s

Apr 19 21:46:29 kernel: amdgpu 0000:03:00.0: amdgpu: amdgpu: dma 1024 bo moves of 32 kB from 4 to 2 in 60 ms, throughput: 4368 Mb/s or 546 MB/s

Apr 19 21:46:29 kernel: amdgpu 0000:03:00.0: amdgpu: amdgpu: dma 1024 bo moves of 64 kB from 4 to 2 in 68 ms, throughput: 7704 Mb/s or 963 MB/s

Apr 19 21:46:29 kernel: amdgpu 0000:03:00.0: amdgpu: amdgpu: dma 1024 bo moves of 128 kB from 4 to 2 in 86 ms, throughput: 12192 Mb/s or 1524 MB/s

Apr 19 21:46:29 kernel: amdgpu 0000:03:00.0: amdgpu: amdgpu: dma 1024 bo moves of 256 kB from 4 to 2 in 122 ms, throughput: 17184 Mb/s or 2148 MB/s

Apr 19 21:46:29 kernel: amdgpu 0000:03:00.0: amdgpu: amdgpu: dma 1024 bo moves of 512 kB from 4 to 2 in 174 ms, throughput: 24104 Mb/s or 3013 MB/s

Apr 19 21:46:30 kernel: amdgpu 0000:03:00.0: amdgpu: amdgpu: dma 1024 bo moves of 1024 kB from 4 to 2 in 326 ms, throughput: 25728 Mb/s or 3216 MB/s

Apr 19 21:46:30 kernel: amdgpu 0000:03:00.0: amdgpu: amdgpu: dma 1024 bo moves of 2048 kB from 4 to 2 in 622 ms, throughput: 26968 Mb/s or 3371 MB/s

Apr 19 21:46:31 kernel: amdgpu 0000:03:00.0: amdgpu: amdgpu: dma 1024 bo moves of 4096 kB from 4 to 2 in 1212 ms, throughput: 27680 Mb/s or 3460 MB/s

Apr 19 21:46:34 kernel: amdgpu 0000:03:00.0: amdgpu: amdgpu: dma 1024 bo moves of 8192 kB from 4 to 2 in 2392 ms, throughput: 28048 Mb/s or 3506 MB/s

Apr 19 21:46:39 kernel: amdgpu 0000:03:00.0: amdgpu: amdgpu: dma 1024 bo moves of 16384 kB from 4 to 2 in 4751 ms, throughput: 28248 Mb/s or 3531 MB/s

Apr 19 21:46:48 kernel: amdgpu 0000:03:00.0: amdgpu: amdgpu: dma 1024 bo moves of 32768 kB from 4 to 2 in 9472 ms, throughput: 28336 Mb/s or 3542 MB/s

Apr 19 21:47:07 kernel: amdgpu 0000:03:00.0: amdgpu: amdgpu: dma 1024 bo moves of 65536 kB from 4 to 2 in 18925 ms, throughput: 28368 Mb/s or 3546 MB/s

So that’s 28.368 gigabits/second, or 3.546 gigabytes/second, a far cry from gen 4 x16 speeds (roughly 256 gigabits/second or 32 gigabytes/second).

Even my Ryzen 7000 series iGPU is faster than this!

12:00.0 VGA compatible controller [0300]: Advanced Micro Devices, Inc. [AMD/ATI] Raphael [1002:164e] (rev c6)

$ echo 4 | sudo tee /sys/kernel/debug/dri/0/amdgpu_benchmark

Apr 19 23:03:13 kernel: amdgpu 0000:12:00.0: amdgpu: amdgpu: dma 1024 bo moves of 4 kB from 4 to 2 in 22 ms, throughput: 1488 Mb/s or 186 MB/s

Apr 19 23:03:13 kernel: amdgpu 0000:12:00.0: amdgpu: amdgpu: dma 1024 bo moves of 8 kB from 4 to 2 in 22 ms, throughput: 2976 Mb/s or 372 MB/s

Apr 19 23:03:13 kernel: amdgpu 0000:12:00.0: amdgpu: amdgpu: dma 1024 bo moves of 16 kB from 4 to 2 in 22 ms, throughput: 5952 Mb/s or 744 MB/s

Apr 19 23:03:13 kernel: amdgpu 0000:12:00.0: amdgpu: amdgpu: dma 1024 bo moves of 32 kB from 4 to 2 in 24 ms, throughput: 10920 Mb/s or 1365 MB/s

Apr 19 23:03:13 kernel: amdgpu 0000:12:00.0: amdgpu: amdgpu: dma 1024 bo moves of 64 kB from 4 to 2 in 23 ms, throughput: 22792 Mb/s or 2849 MB/s

Apr 19 23:03:13 kernel: amdgpu 0000:12:00.0: amdgpu: amdgpu: dma 1024 bo moves of 128 kB from 4 to 2 in 24 ms, throughput: 43688 Mb/s or 5461 MB/s

Apr 19 23:03:13 kernel: amdgpu 0000:12:00.0: amdgpu: amdgpu: dma 1024 bo moves of 256 kB from 4 to 2 in 28 ms, throughput: 74896 Mb/s or 9362 MB/s

Apr 19 23:03:13 kernel: amdgpu 0000:12:00.0: amdgpu: amdgpu: dma 1024 bo moves of 512 kB from 4 to 2 in 35 ms, throughput: 119832 Mb/s or 14979 MB/s

Apr 19 23:03:13 kernel: amdgpu 0000:12:00.0: amdgpu: amdgpu: dma 1024 bo moves of 1024 kB from 4 to 2 in 49 ms, throughput: 171192 Mb/s or 21399 MB/s

Apr 19 23:03:13 kernel: amdgpu 0000:12:00.0: amdgpu: amdgpu: dma 1024 bo moves of 2048 kB from 4 to 2 in 77 ms, throughput: 217880 Mb/s or 27235 MB/s

Apr 19 23:03:13 kernel: amdgpu 0000:12:00.0: amdgpu: amdgpu: dma 1024 bo moves of 4096 kB from 4 to 2 in 132 ms, throughput: 254200 Mb/s or 31775 MB/s

Apr 19 23:03:14 kernel: amdgpu 0000:12:00.0: amdgpu: amdgpu: dma 1024 bo moves of 8192 kB from 4 to 2 in 244 ms, throughput: 275032 Mb/s or 34379 MB/s

Apr 19 23:03:14 kernel: amdgpu 0000:12:00.0: amdgpu: amdgpu: dma 1024 bo moves of 16384 kB from 4 to 2 in 464 ms, throughput: 289256 Mb/s or 36157 MB/s

Apr 19 23:03:15 kernel: amdgpu 0000:12:00.0: amdgpu: amdgpu: dma 1024 bo moves of 32768 kB from 4 to 2 in 916 ms, throughput: 293048 Mb/s or 36631 MB/s

Apr 19 23:03:17 kernel: amdgpu 0000:12:00.0: amdgpu: amdgpu: dma 1024 bo moves of 65536 kB from 4 to 2 in 1815 ms, throughput: 295792 Mb/s or 36974 MB/s

295.792 gigabits/second or 36.974 gigabytes/second.
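To put the discrete GPU’s result in context, here is a small sketch of the theoretical link bandwidth arithmetic. It assumes the usual line encodings (8b/10b for 2.5 and 5 GT/s, 128b/130b for 8 GT/s and above), and `link_gbps` is a made-up helper name.

```shell
#!/usr/bin/env bash
# link_gbps SPEED_GT WIDTH (hypothetical helper)
# Prints the usable link bandwidth in Gb/s after line encoding:
# 8b/10b for 2.5 and 5 GT/s, 128b/130b for 8 GT/s and above.
link_gbps() {
    awk -v speed="$1" -v width="$2" 'BEGIN {
        enc = (speed <= 5) ? 8 / 10 : 128 / 130
        printf "%.1f\n", speed * width * enc
    }'
}

link_gbps 2.5 16   # gen 1 x16, prints 32.0
link_gbps 16 16    # gen 4 x16, prints 252.1
```

The gen 1 figure matches the 32.000 Gb/s that dmesg reports as the limit at the root port, and the 28.368 Gb/s I measured is about 89% of it, which is what I would expect from a link really running at gen 1 x16. The gen 4 figure is slightly higher than the 252.048 Gb/s in dmesg, which I assume comes down to how the kernel rounds the per-lane rate.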

I’ve tried going into my motherboard’s BIOS and manually setting GEN4 for the PCI_E1 Gen Switch and Chipset Gen Switch, but my Linux system still sometimes boots at GEN1 speed.

Technically I can “fix” this by rebooting, or by putting my PC to sleep, waking it, and hoping that the gen 4 speeds are correctly initialized. But between this, the GPU’s RGB not being controllable on Linux, and Firefox with VAAPI sometimes causing GPU resets, it’s really making me regret purchasing an AMD graphics card (a previous-generation one too), even if it otherwise works well.

BUT I still want to know why/how this is happening and where the issue lies. If you know anything, please give me any input/info; I’d really appreciate it.
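Since the workaround depends on noticing the bad state first, a check that doesn’t rely on nvtop could help. This is a minimal sketch that compares current_link_speed against max_link_speed in a device’s sysfs directory. The `link_state` name and its output format are my own invention.

```shell
#!/usr/bin/env bash
# link_state DEVDIR (hypothetical helper)
# Compares current_link_speed with max_link_speed in a PCI device directory.
# Prints "ok: <speed>", "downgraded: <current> (max <max>)", or "unknown"
# when the files are missing or unreadable.
link_state() {
    local dir=$1 cur max
    if [[ ! -r $dir/current_link_speed || ! -r $dir/max_link_speed ]]; then
        echo unknown
        return
    fi
    cur=$(<"$dir/current_link_speed")
    max=$(<"$dir/max_link_speed")
    if [[ $cur == "$max" ]]; then
        echo "ok: $cur"
    else
        echo "downgraded: $cur (max $max)"
    fi
}

# Example loop over the devices from my script (addresses are from my system):
#   for dev in 00:01.1 01:00.0 02:00.0 03:00.0; do
#       printf '%s ' "$dev"; link_state "/sys/bus/pci/devices/0000:$dev"
#   done
```

Run early after boot, something like this could at least tell me straight away whether a reboot or a sleep/wake cycle is needed.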