My main goal: what is the most “correct” way to route packets coming from a tun interface out to the internet (via the system’s default gateway)?

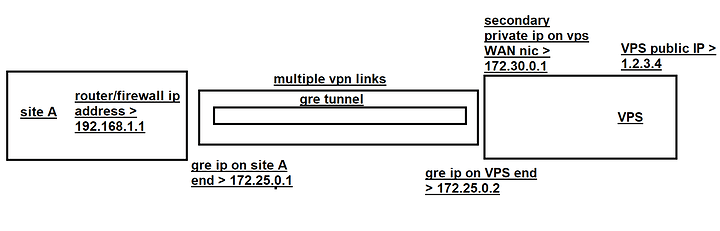

What I have is a GRE tunnel running over a VPN to my VPS.

To achieve this, I have added an extra RFC 1918 IP (192.168.100.1) to my VPS’s WAN interface.

The GRE tunnel runs from my router’s local IP (192.168.1.1) to this second IP.

I have OSPF running over the VPN, so the router knows how to reach 192.168.100.1 and the GRE tunnel comes up.

Now I want to route some services out to the internet using my VPS’s public IP.

I suspect that I need to SNAT packets coming from the tun interface to my VPS’s public IPv4 address.

But I’m not sure why that’s necessary.
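One plausible reason, shown as a toy Python model rather than a kernel configuration: the tunnel source 172.25.0.2 falls inside 172.16.0.0/12, one of the RFC 1918 private ranges, so an internet host has no route back to it. The sketch below shows what SNAT/MASQUERADE does: rewrite the private source on the way out of the WAN interface, remember the mapping, and undo it when the reply comes back. The VPS routing table, the stand-in public address 203.0.113.10 (for x.x.x.x) and the remote host 198.51.100.7 are all assumptions, and the model also assumes the VPS already forwards packets between interfaces (on Linux, the `net.ipv4.ip_forward` sysctl).

```python
import ipaddress
from dataclasses import dataclass, replace

ip = ipaddress.ip_address
net = ipaddress.ip_network

# The three RFC 1918 private ranges; internet hosts cannot route replies to them.
RFC1918 = [net("10.0.0.0/8"), net("172.16.0.0/12"), net("192.168.0.0/16")]

# Hypothetical stand-in for the VPS public IP (x.x.x.x in the post).
VPS_PUBLIC = ip("203.0.113.10")

# Assumed VPS routing table: (prefix, outgoing interface).
ROUTES = [
    (net("172.25.0.0/30"), "gre"),
    (net("172.24.0.0/16"), "vpn"),
    (net("192.168.100.0/29"), "wan"),
    (net("0.0.0.0/0"), "wan"),  # default route via the provider's gateway
]


@dataclass(frozen=True)
class Packet:
    src: ipaddress.IPv4Address
    dst: ipaddress.IPv4Address


def is_rfc1918(addr):
    return any(addr in n for n in RFC1918)


def lookup(dst):
    """Longest-prefix match: the most specific route containing dst wins."""
    return max((r for r in ROUTES if dst in r[0]), key=lambda r: r[0].prefixlen)[1]


# Simplified connection tracking keyed by remote host only; real NAT tracks
# full 5-tuples including ports.
conntrack = {}


def forward(pkt):
    """Outbound: rewrite a private source to the public IP when leaving via wan."""
    dev = lookup(pkt.dst)
    if dev == "wan" and is_rfc1918(pkt.src):
        conntrack[pkt.dst] = pkt.src
        pkt = replace(pkt, src=VPS_PUBLIC)
    return dev, pkt


def reply(pkt):
    """Inbound: undo the rewrite so the reply can be routed back into the tunnel."""
    if pkt.dst == VPS_PUBLIC and pkt.src in conntrack:
        pkt = replace(pkt, dst=conntrack[pkt.src])
    return lookup(pkt.dst), pkt


if __name__ == "__main__":
    remote = ip("198.51.100.7")  # hypothetical internet host
    print(forward(Packet(ip("172.25.0.2"), remote)))
    print(reply(Packet(remote, VPS_PUBLIC)))
```

Without the rewrite in `forward`, the packet would still leave via `wan`, but any reply would be addressed to 172.25.0.2, which no internet router can deliver.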

- VPS WAN IP: x.x.x.x/32
- VPS second local IP on the WAN interface: 192.168.100.1/29
- VPN endpoint IP on the VPS: 172.24.0.1/16
- GRE tunnel address on the VPS: 172.25.0.1/30
- GRE tunnel address on the LAN side: 172.25.0.2/30
- VPN endpoint on the local LAN: 172.24.0.2/16
- Local LAN network: 192.168.1.1/24
- OSPF running on the VPN interface.
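The addressing plan above can be written down and sanity-checked with Python's standard `ipaddress` module. This is a minimal sketch: it confirms that each point-to-point pair shares a subnet and that no two networks in the plan overlap, since an overlap (say, between the GRE /30 and the VPN /16) would make the tunnel's own routes ambiguous. The dictionary keys are hypothetical labels, and the elided public address x.x.x.x/32 is left out.

```python
import ipaddress
from itertools import combinations

# Addressing plan from the post; keys are made-up labels. The VPS public
# address (x.x.x.x/32) is elided in the post, so it is not included.
PLAN = {
    "vps_wan_second": "192.168.100.1/29",
    "vpn_vps": "172.24.0.1/16",
    "vpn_lan": "172.24.0.2/16",
    "gre_vps": "172.25.0.1/30",
    "gre_lan": "172.25.0.2/30",
    "lan": "192.168.1.1/24",
}
IFACES = {name: ipaddress.ip_interface(cidr) for name, cidr in PLAN.items()}


def same_subnet(a, b):
    """Both ends of a point-to-point link must sit in the same network."""
    return IFACES[a].network == IFACES[b].network


def overlapping_networks():
    """Return pairs of distinct networks in the plan that overlap."""
    nets = sorted({iface.network for iface in IFACES.values()})
    return [(x, y) for x, y in combinations(nets, 2) if x.overlaps(y)]


if __name__ == "__main__":
    print("GRE ends share a /30:", same_subnet("gre_vps", "gre_lan"))
    print("VPN ends share a /16:", same_subnet("vpn_vps", "vpn_lan"))
    print("overlapping networks:", overlapping_networks())
```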

Look at the example below:

- A service with IP address 192.168.1.5 sends a packet destined to an internet (WAN) IP.

- I have a rule that sets the gateway for all packets coming from 192.168.1.5 to 172.25.0.1.

- ICMP destined to x.x.x.x gets a reply.

- ICMP destined to google.com reaches 172.25.0.1 and doesn’t get any further (tcpdump on the VPS’s GRE interface shows traffic like this: 172.25.0.2 >>> google.com).
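The first hop of this example is source-based policy routing on the home router. Below is a rough Python model of it; the table names `main` and `via_vps`, the routes in them, and the host 198.51.100.7 standing in for google.com are assumptions. It shows that traffic from 192.168.1.5 enters the GRE tunnel whatever its destination, so both pings reach the VPS. The difference appears there: x.x.x.x is the VPS’s own address, so the VPS answers it locally, while the google.com packet has to be forwarded onward.

```python
import ipaddress

ip = ipaddress.ip_address
net = ipaddress.ip_network

# Hypothetical routing tables on the home router. "main" uses the ISP uplink;
# "via_vps" sends everything into the GRE tunnel (gateway 172.25.0.1).
TABLES = {
    "main": [
        (net("192.168.1.0/24"), "lan"),
        (net("172.24.0.0/16"), "vpn"),
        (net("172.25.0.0/30"), "gre"),
        (net("0.0.0.0/0"), "isp"),
    ],
    "via_vps": [
        (net("0.0.0.0/0"), "gre"),
    ],
}

# Policy rules, tried in order: (source prefix, table). Mirrors the post's
# rule "packets from 192.168.1.5 use 172.25.0.1 as gateway".
RULES = [
    (net("192.168.1.5/32"), "via_vps"),
    (net("0.0.0.0/0"), "main"),
]


def route(src, dst):
    """Pick a table by source, then longest-prefix match on the destination.

    If the selected table has no matching route, fall through to the next rule.
    """
    for prefix, table in RULES:
        if src in prefix:
            matches = [(n, dev) for n, dev in TABLES[table] if dst in n]
            if matches:
                return table, max(matches, key=lambda m: m[0].prefixlen)[1]
    return None


if __name__ == "__main__":
    remote = ip("198.51.100.7")  # hypothetical stand-in for google.com
    print(route(ip("192.168.1.5"), remote))
    print(route(ip("192.168.1.6"), remote))
```

The fall-through when a table has no match is roughly how Linux `ip rule` lookups behave; only 192.168.1.5 is steered into the tunnel, while other LAN hosts keep using the ISP default route.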

My question is: why doesn’t the GRE tunnel know how to route packets out via the default gateway?

Shouldn’t traffic coming out of the GRE tunnel follow the system’s routing tables?

Instead of SNAT, can I configure OSPF differently so that less manual configuration is required?

Note:

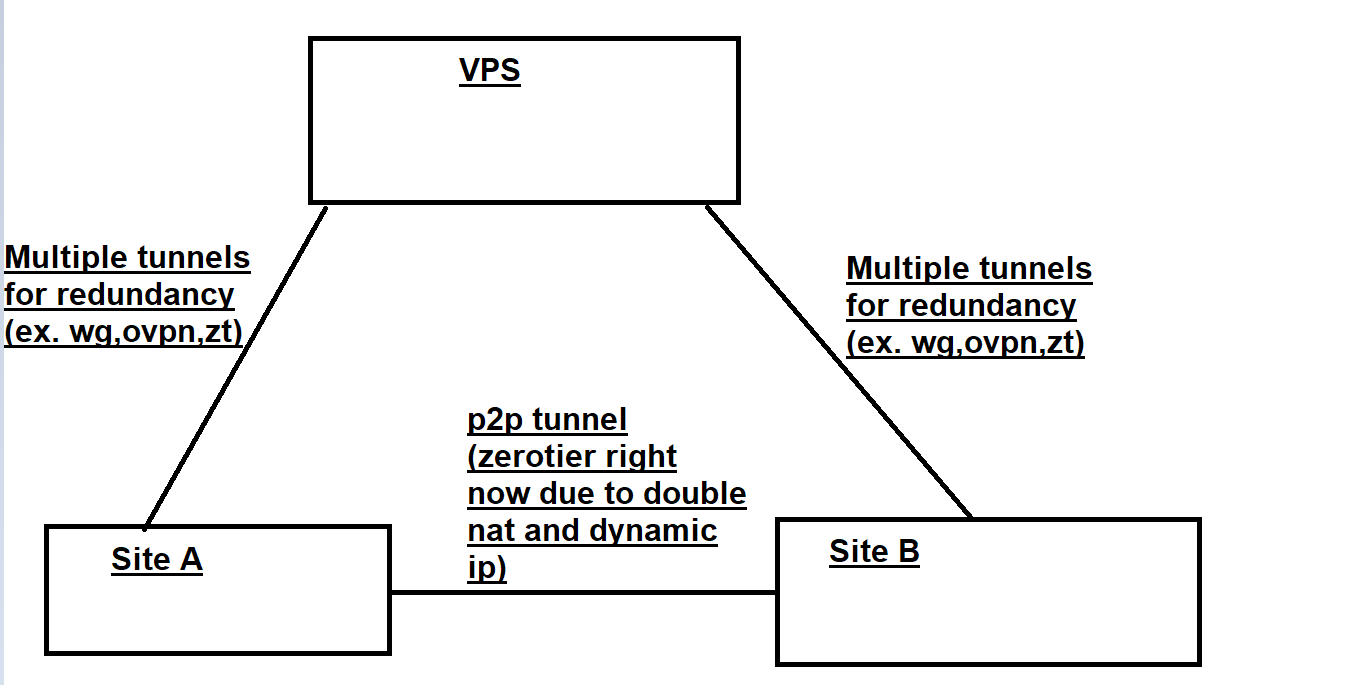

My main goal is to have several redundant paths to the VPS’s network, using multiple VPN tunnels between the local router and the VPS, which packets originating from 192.168.1.5 can take to go out through the VPS’s public IP.

Correct me if I’m wrong, but with the current config, if a VPN link goes down, OSPF (which runs on all VPN interfaces) finds another path to 192.168.100.1 and the GRE tunnel recovers on its own.
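The recovery described above is what OSPF’s shortest-path-first recalculation provides. As a toy model (not an OSPF implementation; the link names `vpn0`, `vpn1`, `vpn2` and their costs are hypothetical), Dijkstra over the links that are still up picks the cheapest remaining path to the VPS side, while the GRE endpoints stay fixed.

```python
import heapq

# Hypothetical topology: three VPN links between the home router and the VPS,
# each with an assumed OSPF cost. The VPS side holds 192.168.100.1/29.
LINKS = {
    "vpn0": ("router", "vps", 10),
    "vpn1": ("router", "vps", 20),
    "vpn2": ("router", "vps", 30),
}


def shortest_path(src, dst, down=frozenset()):
    """Dijkstra over links that are still up; returns (cost, [link names]) or None."""
    adj = {}
    for name, (a, b, cost) in LINKS.items():
        if name in down:
            continue
        adj.setdefault(a, []).append((b, cost, name))
        adj.setdefault(b, []).append((a, cost, name))
    heap = [(0, src, [])]
    seen = set()
    while heap:
        cost, node, path = heapq.heappop(heap)
        if node == dst:
            return cost, path
        if node in seen:
            continue
        seen.add(node)
        for nxt, c, name in adj.get(node, []):
            if nxt not in seen:
                heapq.heappush(heap, (cost + c, nxt, path + [name]))
    return None  # destination unreachable: every link is down


if __name__ == "__main__":
    # The GRE endpoints (192.168.1.1 -> 192.168.100.1) never change; only the
    # underlay path used to reach the VPS side does.
    print(shortest_path("router", "vps"))                         # (10, ['vpn0'])
    print(shortest_path("router", "vps", down={"vpn0"}))          # (20, ['vpn1'])
    print(shortest_path("router", "vps", down={"vpn0", "vpn1"}))  # (30, ['vpn2'])
```

How quickly the switch happens in practice depends on the OSPF hello and dead intervals configured on the VPN interfaces.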

Note 2: Should I run OSPF on the GRE tunnel itself?