Ok, so there is a lot of data that I’ve been trying to process.

Now, the reason I’m here is that I am building an admittedly overkill Plex server based on TrueNAS SCALE.

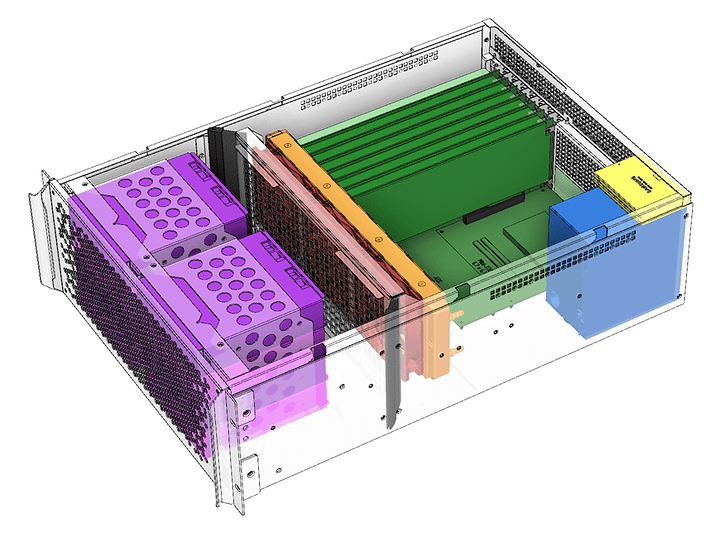

I have a rack case from Sliger with eight 5.25" bays all filled with Icy Dock MB608SP-B 6x2.5" drive cages.

The hardware is an Asus P12R-E-10G, an Intel Xeon E-2388G, 128GB ECC RAM, and two LSI 9305-24i HBAs.

My boot drives are two Micron 5400 Boot M.2 SATA drives.

I am contemplating two Intel P5800X, or possibly two 905P, SSDs for a special vdev, but I’m not sure it is necessary for mostly large video files. That said, I want really quick response during navigation and playback. I am still learning about all of this.

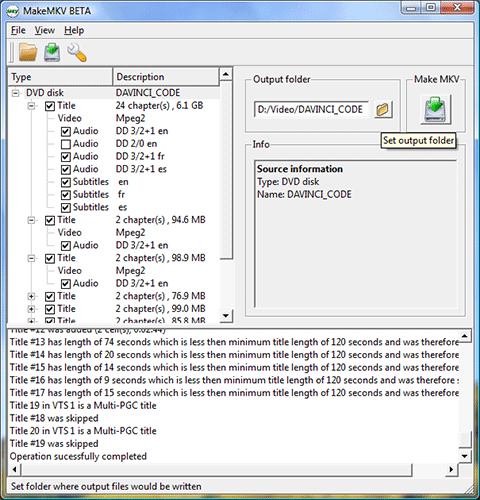

So, here’s how I understand this. The more vdevs, the faster the pool, because ZFS basically stripes data across all of the vdevs in the pool. I am looking at RAIDZ2 vdevs. So, I’m considering eight RAIDZ2 vdevs of six drives each or four RAIDZ2 vdevs of 12 drives each. Obviously there are other options, like four-drive or 24-drive vdevs, but I want the best balance between performance and space lost to parity. At what point does the extra speed from more vdevs stop being worth the space lost to parity?
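To put rough numbers on the parity side of that trade-off, here is a quick back-of-the-envelope sketch in Python. It assumes all 48 bays (eight cages of six) are filled with 3.84TB drives and that every vdev is RAIDZ2. The function name `raidz_layout` is just something made up for illustration, and the figures are raw data-drive capacity only: they ignore ZFS metadata, padding, and reserved space, so real usable space will be lower. It says nothing about actual speed, only how many vdevs the pool stripes across.

```python
# Rough RAIDZ2 layout comparison. Assumptions: 48 drives total,
# 3.84 TB per drive, 2 parity drives per vdev. ZFS overhead is ignored.
DRIVES = 48
DRIVE_TB = 3.84
PARITY = 2


def raidz_layout(width, total_drives=DRIVES, parity=PARITY, drive_tb=DRIVE_TB):
    """Return vdev count, parity cost, and raw data capacity for one layout."""
    if width <= parity:
        raise ValueError("vdev width must be larger than the parity count")
    if total_drives % width != 0:
        raise ValueError("drive count must divide evenly into vdevs")
    vdevs = total_drives // width
    data_drives = vdevs * (width - parity)
    return {
        "width": width,
        "vdevs": vdevs,
        "data_drives": data_drives,
        "parity_drives": vdevs * parity,
        "data_tb": data_drives * drive_tb,
        "efficiency": data_drives / total_drives,
    }


if __name__ == "__main__":
    for width in (4, 6, 12, 24):
        r = raidz_layout(width)
        print(
            f"{r['vdevs']:>2} x {r['width']:>2}-wide RAIDZ2: "
            f"{r['parity_drives']:>2} parity drives, "
            f"{r['data_tb']:.2f} TB data, "
            f"{r['efficiency']:.1%} of raw"
        )
```

By this arithmetic, eight 6-wide vdevs give up 16 drives to parity and four 12-wide vdevs give up 8, so the question is whether twice as many vdevs to stripe across is worth those extra eight drives.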

I have been buying bits and pieces for this server for almost a year. Right now I have 36 Micron 5400 Pro 3.84TB SATA SSDs and I buy a few more with every paycheck, so I almost have everything I need to build the server. I just want the input of some people who know more about this than I do.

To be clear, I know of all the different ways I could have done this and everyone’s opinions on what would be better or worse; that is not what I’m asking for help with. I am excited about this server. It’s the server I want. I won’t criticize your choices, so please, I’m just asking about the ZFS configuration and would appreciate any help.

Thanks!!