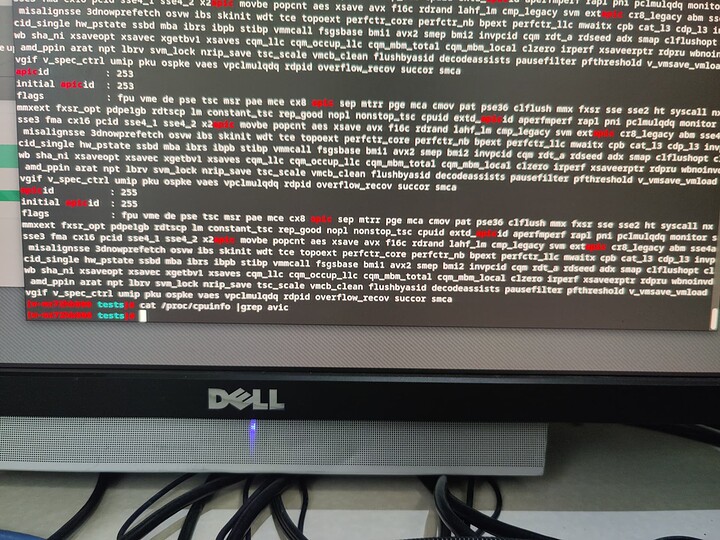

Can a user of the MZ72-HB0 motherboard verify that it is not disabling the Virtual Interrupt Controller (i.e. avic) feature of an EPYC 7003 CPU? It turns out that the Supermicro H12DSi-N6 which I am using now disables this feature ( https://forums.servethehome.com/ind…13-no-virtual-interrupt-controller-avic.35801 ), so I’m going to have to switch motherboards to use avic. In case this is not obvious, I am asking for the result of lscpu | grep avic
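
For anyone who would rather script the check than eyeball the grep output, here is a minimal Python sketch that looks for the avic flag in /proc/cpuinfo. The helper names cpu_flags and host_has_avic are made up for illustration; the only assumption about the system is the usual x86 Linux "flags : ..." line format.

```python
def cpu_flags(cpuinfo_text):
    """Return the set of CPU flags from the first 'flags' line of cpuinfo text."""
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        # Match the key exactly so that e.g. 'vmx flags' on Intel is ignored.
        if sep and key.strip() == "flags":
            return set(value.split())
    return set()


def host_has_avic(path="/proc/cpuinfo"):
    """True if the kernel reports the 'avic' CPU flag (hypothetical helper)."""
    with open(path) as f:
        return "avic" in cpu_flags(f.read())
```

Calling host_has_avic() on the host should agree with whether lscpu | grep avic prints anything.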

Not exactly your question, but EPYC 74F3 on a ROMED8-2T here, and there’s no avic in /proc/cpuinfo. Confirmed manually with cpuid function 0x8000000A → EDX:0x119b9cff bit 13 = 0 (based on AMD64 APM volume 2 section 15.29.7 “CPUID Feature Bits for AVIC”).
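
The bit test above is easy to reproduce. A small sketch, assuming (as the post states) that AVIC is reported in bit 13 of EDX for CPUID function 0x8000000A; the function name edx_has_avic is just for illustration:

```python
AVIC_BIT = 13  # per the post: CPUID Fn8000_000A EDX, bit 13


def edx_has_avic(edx):
    """Return True if the AVIC feature bit is set in the given EDX value."""
    return bool((edx >> AVIC_BIT) & 1)


# EDX value quoted in the post; the low 16 bits are 0x9cff, so bit 13 is clear.
print(edx_has_avic(0x119B9CFF))
```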

Thank you - I found the same on an ASRock motherboard, which I happen to have around here in another single-socket system. Now considering the new Gigabyte to replace the Supermicro.

It’s no longer enabled on GB. What are you trying to do? X2apic/apic should accomplish the same thing?

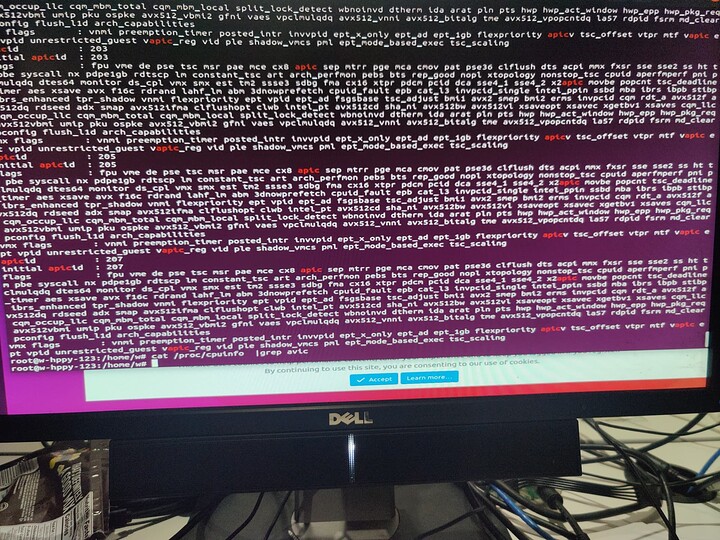

Also not a thing on modern xeons. Here’s a dual 8380

Hm, odd, it’s enabled on mine, although with Rome. Did you have it before? The Milan BIOS is newer so maybe it was disabled. Also, when I did test avic with KVM it didn’t seem very stable, so maybe that’s why? I didn’t test very thoroughly, so it could have been something else. Kernel 5.18 brings x2avic so that might be more interesting to test

Thank you Wendell. So, I think there’s a little misunderstanding here. avic (not apic) is an AMD-specific feature flag. It is AMD’s implementation of a Virtual Interrupt Controller. On Intel CPUs, the Virtual Interrupt Controller is marked by the feature flag apicv or vapic (both can be seen on your 2nd screenshot above, I’m a little confused about which one it is). In both cases, the job of the Virtual Interrupt Controller, when present on the host CPU, is to enable APIC features in the guest virtual machine. Or, as stated at https://www.amd.com/system/files/TechDocs/48882_IOMMU.pdf

AVIC is an implementation of a guest virtual APIC. Allows the processor and the IOMMU to coordinate the delivery of interrupts directly to running guest VMs

An IOMMU that supports the guest virtual APIC feature (MMIO Offset 0030h[GASup] = 1) can deliver interrupts directly to a running guest operating system in a virtual machine when used with an AMD processor that supports the Advanced Virtual Interrupt Controller (AVIC) architecture.

I am still a little confused about the distinction between the GASup feature flag and the avic feature flag, but I think a good approximation is that they are both host flags (one for the IOMMU, the other a regular CPU flag) and both are a prerequisite for the virtual APIC inside guests. Without a virtual APIC, interrupt delivery inside guests suffers from bad latency, which manifests itself e.g. as crackling sound inside the guest OS.

I am specifically ignoring the configuration of that virtual APIC inside guests (e.g. as discussed at “SVM AVIC/IOMMU AVIC improves interrupt performance on Zen(+)/2/etc based processors”), since without these feature flags present in the host CPU, one cannot even get started. They really are a prerequisite, as seen e.g. in these Linux kernel source snippets:

arch/x86/kvm/svm/svm.c, svm_hardware_setup(void), note check for X86_FEATURE_AVIC:

    enable_apicv = avic = avic && npt_enabled && boot_cpu_has(X86_FEATURE_AVIC);
    if (enable_apicv) {
        pr_info("AVIC enabled\n");
        amd_iommu_register_ga_log_notifier(&avic_ga_log_notifier);
    }

drivers/iommu/amd/init.c:

early_enable_iommus(void), note check for FEATURE_GAM_VAPIC:

    if (AMD_IOMMU_GUEST_IR_VAPIC(amd_iommu_guest_ir) &&
        !check_feature_on_all_iommus(FEATURE_GAM_VAPIC))
        amd_iommu_guest_ir = AMD_IOMMU_GUEST_IR_LEGACY_GA;

print_iommu_info(void):

    if (irq_remapping_enabled) {
        pr_info("Interrupt remapping enabled\n");
        if (AMD_IOMMU_GUEST_IR_VAPIC(amd_iommu_guest_ir))
            pr_info("Virtual APIC enabled\n");

So, this is not about APIC (at this stage), since Virtual APIC is a guest feature. This is about features which must be present on the host CPU to make Virtual APIC in guest possible.
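
To see what the svm.c check actually decided on a running host, one option is to read the kvm_amd module parameters back from sysfs. This is only a sketch: it assumes the kvm_amd module is loaded and exposes its avic and npt parameters under /sys/module/kvm_amd/parameters, and the helper names are invented here.

```python
from pathlib import Path


def parse_bool_param(raw):
    """Interpret a module parameter value; bool params read 'Y'/'N', int params '1'/'0'."""
    return raw.strip() in ("Y", "y", "1")


def read_bool_param(path):
    """Return True/False for a module parameter file, or None if it cannot be read."""
    try:
        return parse_bool_param(Path(path).read_text())
    except OSError:
        return None


def kvm_amd_status(base="/sys/module/kvm_amd/parameters"):
    """Report the avic and npt parameters (the sysfs location is an assumption)."""
    return {name: read_bool_param(f"{base}/{name}") for name in ("avic", "npt")}
```

If avic reads back as disabled even though it was requested on the module command line, that would be consistent with the boot_cpu_has(X86_FEATURE_AVIC) term in the expression above being false.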

It appears that plenty of motherboards disable this CPU feature on Milan CPUs and I’m rather unhappy about that. I can understand that this feature will not work if Secure Nested Paging (SEV-SNP) is enabled, as per errata https://www.amd.com/system/files/TechDocs/56683-PUB_1.04.pdf (item 1278), but since this feature is disabled by default on the few motherboards I’ve seen (Supermicro and ASRock), there must be something else.

I am at the stage when I’m looking for the one motherboard which will allow me to use avic in an EPYC Milan, so that I can keep using these rather expensive CPUs for the purpose of IOMMU virtualization.

Ahh, ok. I knew intel had virtualized interrupts but I forgot it was a different mechanism.

I have been on the bleeding edge for too long, sorry. How about x2avic? Is that on your radar and are you aware of how that fits into things?

I am definitely looking forward to x2AVIC (“AMD Readies Linux Patches For x2AVIC Support”, Phoronix) and will see how things work once it appears in the 5.18 (or 5.19, I guess?) kernel. It’s a good thing I maintain my own kernel package, built from vanilla kernel.org sources. Still, I am a little worried that, for the same very mysterious reasons AVIC does not seem to work for me, x2AVIC might not work either.

My Tyan S8030/Epyc 7443p/Proxmox machine returns nothing with lscpu | grep avic

A Windows VM I set up for Minecraft Bedrock initially had horrible audio crackling, but that completely went away after I confined it to a NUMA node and did various other in-VM latency optimizations.

I’m not sure if you are doing other types of audio stuff that are even more sensitive.

Thank you @Log , that’s interesting. I’m not doing anything fancy and my VMs are confined to NUMA node and do have some latency optimizations (isolated cores etc.). Could you share your libvirt xml? Perhaps I can improve my situation without AVIC.

Curiously, I just found the manual has a mention of APIC

Local APIC Mode.

xAPIC / x2APIC / Auto

edit Got intel’s APIC confused with AMD’s AVIC lol. Not what you’re looking for, but APIC does show under proxmox if you’re curious.

I’ll try to dig up what I did for latency either tonight or tomorrow.

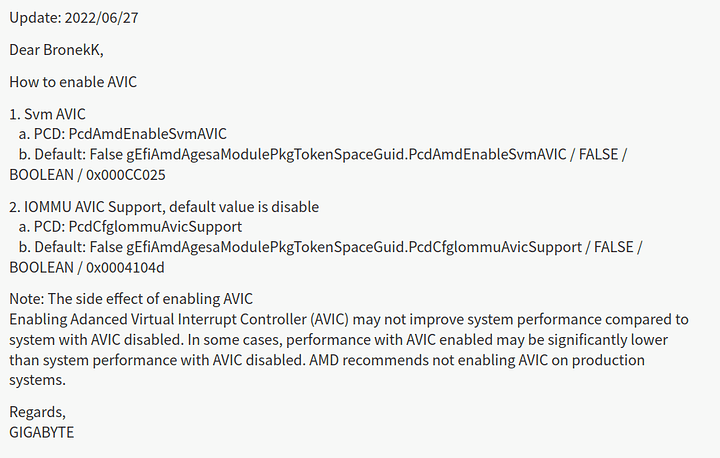

For anyone looking at this topic, I received an answer from Gigabyte support. I have yet to understand what this actually means.

I am also at a loss looking at this. It’s almost like they copy pasted a bit of some kind of config file, or a GUI value editor that would build the options into the UEFI screen.

Not sure if it would help, but I’m trying to put together a home lab and I have an MZ72-HB0 (Rev 3.0) motherboard with dual EPYC 7B12 CPUs. This is Rome, not Milan, and my understanding is that these CPUs are roughly equivalent to the 7742 model.

I have no idea what I’m doing by the way. This is my first PC build, I’m more of a high-level language software dev.

I built the PC last weekend, and now this weekend will be trying to figure out installing Proxmox, ZFS, etc. Also trying to figure out temp management, etc.

I assume that once I have an OS installed, I maybe could run some shell commands and share the output in case that would be of any help. I trolled through the BIOS settings, most of which I don’t really understand, and I didn’t see anything about “AVIC”. It does look like the Gigabyte support e-mail is referencing their BIOS code.

For now, looking at the BIOS in the BMC web interface, here are some settings that I see:

- Advanced
  - CPU Configuration
    - SVM Mode = Enabled (Enable/Disable CPU Virtualization)
  - PCI Subsystem Settings
    - SR-IOV Support = Enabled (If system has SR-IOV capable PCIe Devices, this option Enables or Disables Single Root IO Virtualization Support)
- AMD CBS
  - CPU Common Options
    - Local APIC Mode = Auto (Options: xAPIC, x2APIC, Auto)
  - NBIO Common Options
    - IOMMU = Enabled (Enable/Disable IOMMU)

Anyway, I’m happy to try and help some if I can, which is probably doubtful being a noob as far as this stuff goes.

Hi @Bronek ,

Were you able to resolve your interrupt latency issues in your virtual machines?

I’m considering getting a similar board (haven’t seen any reviews or even discussion on it, just kind of came across it when looking at a listing online), the Gigabyte MZ72-HB2, and the only thing that sticks out is that it uses the Aspeed AST2600 chip over Aspeed AST2500.

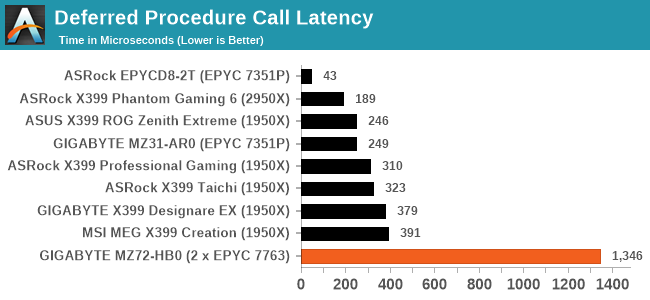

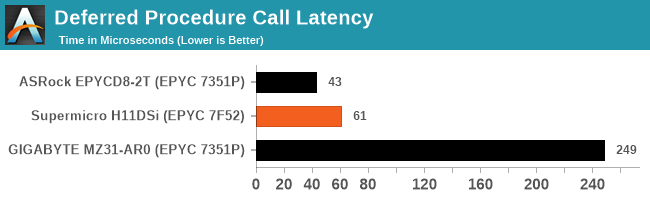

Regarding the MZ72-HB0, Anandtech has a review in which they state that with default settings the MZ72-HB0 has terrible interrupt latency in their testing:

If the issue is pervasive even with VFIO (I have to wonder what’s causing such extreme latency, maybe something that can be avoided?), then I’d have to look at another board.

Anandtech has a review for the H11DSi, with much more favorable results with interrupt latency tests:

The problem is, this board is the older PCIe 3.0 variant. I haven’t found any reviews for H12DSi boards that test interrupt latency.

Does anyone have any experience running Windows with or without VFIO with either the H12DSi, Gigabyte MZ72-HB0, or Gigabyte MZ72-HB2, and if so, what has your experience been?

Any input on this would be greatly appreciated!

The AMD IOMMU provides the interrupt virtualization and remapping. Check the detected IOMMU capabilities in the kernel logs. Here are some of the interesting log entries from RHEL 9:

Milan in Lenovo SR655:

x2apic: enabled by BIOS, switching to x2apic ops

AMD-Vi: Extended features (0x841f77e022094ace, 0x0): PPR X2APIC NX IA GA PC SNP

AMD-Vi: X2APIC enabled

ThreadRipper Pro(Rome) in Lenovo P620:

x2apic: IRQ remapping doesn't support X2APIC mode

AMD-Vi: Extended features (0x5cf77ef22294a5a): PPR NX GT IA PC GA_vAPIC

Intel Haswell in a NUC:

DMAR-IR: Enabled IRQ remapping in x2apic mode

x2apic enabled
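
If you want to compare these lines across machines, a rough Python sketch like the one below pulls the feature names out of an "AMD-Vi: Extended features" log line and also tests two bits of the raw register. The bit positions (bit 7 for GA, bit 21 for GA_vAPIC) are an assumption that happens to match the two AMD logs quoted above, and parse_extended_features is a made-up helper name.

```python
import re

FEATURE_GA = 1 << 7          # assumed bit position for "GA"
FEATURE_GAM_VAPIC = 1 << 21  # assumed bit position for "GA_vAPIC"

# Matches both "(0x..., 0x...)" and the older single-value "(0x...)" form.
_LINE = re.compile(
    r"AMD-Vi: Extended features \((0x[0-9a-fA-F]+)(?:, *(0x[0-9a-fA-F]+))?\):(.*)"
)


def parse_extended_features(line):
    """Return register value, printed feature names and GA bits, or None if no match."""
    m = _LINE.search(line)
    if m is None:
        return None
    efr = int(m.group(1), 16)
    return {
        "efr": efr,
        "names": m.group(3).split(),
        "ga": bool(efr & FEATURE_GA),
        "ga_vapic": bool(efr & FEATURE_GAM_VAPIC),
    }
```

Fed the two AMD lines above, this reports GA without GA_vAPIC for the Milan box and GA_vAPIC for the Threadripper Pro box, which matches the names the kernel printed.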

Also, some hypervisors don’t support avic and will just emulate x2apic since avic is slow. Until recently, avic wasn’t supported when x2apic was enabled; x2avic support has now been added to the Linux kernel.

The ACPI tables, IVRS for AMD and DMAR for Intel, are used to provide interrupt virtualization support and configuration. This is how the firmware enables, disables, and overrides hardware support. Use the various amd_iommu_* kernel options to configure the IOMMU and to print ACPI tables for it during IOMMU initialization.

edited to add logs from various processors.

Hi - nope, the interrupt latency only became worse (no idea why, TBH). I’ve given up on AMD for VM hosting, about to rebuild my rig with an Ice Lake Xeon 8358 and Supermicro X12SPA-TF

Hi - I’ve examined the part of the Linux kernel doing this logging and tweaked it for my tests to get more extended logging, too. The GA_vAPIC feature you see enabled in the log relies on the AVIC CPU flag being detected by the kernel. And that’s simply not happening with the AMD Epyc Milan CPUs on the three motherboards I tried, including various BIOS versions and all sane BIOS configurations I could think of. So I’ve given up on AMD Epyc and am switching back to Intel Xeons.

Something is not adding up here. The x2avic should supplant the need for hardware avic?

Relevant maybe

https://lwn.net/Articles/887196/

It sounds like the code path on Milan is software avic. Ice lake xeon would probably also be a non x2apic path which leads to old avic being on as well? Some boards did give the option to disable x2apic which might help.

I should have a better understanding of what you’re doing but my setup has been stable for a year so I haven’t messed with it.

Well, the problem is not as much what I’m doing, but what the CPU / AGESA / motherboard are doing. No matter how I booted the computer (distribution, kernel version or kernel command line) , this:

lscpu | grep avic

… always returned nothing. And it should return something, because without avic there’s no virtual interrupt controller.

Aaaanyway, I just disassembled this machine, and although I do plan to put it together again in a server role (would be a shame to waste these nice CPUs), at this moment my VMs are running on Ice Lake Xeon 8358 and oh boy, what a change. No more stupid latency ruining everything!

I decided on this step because latency has gotten so bad that it badly affected USB protocol timings, making VMs almost unusable. I guess it wouldn’t have happened without some configuration mistakes on my side, but with so many NUMA nodes mistakes are easy to make.

And anyway, Linux kernel source is pretty clear - on AMD there’s no virtual interrupt controller if avic is not available, full stop.

late edit: unless things have changed significantly with this x2AVIC patch, which they might. I am catching up reading sources.

another edit: I do have another machine with the same problem and with a Milan CPU, will try kernel 6.1 force_avic feature on it and report back.