This morning I decided to finally dig into disk tuning for QEMU, and it turns out the libvirt/QEMU defaults are totally junk for SSDs. By default, all disk IO is processed in a single thread (QEMU's main event loop), so IO requests block behind one another and disk performance suffers.

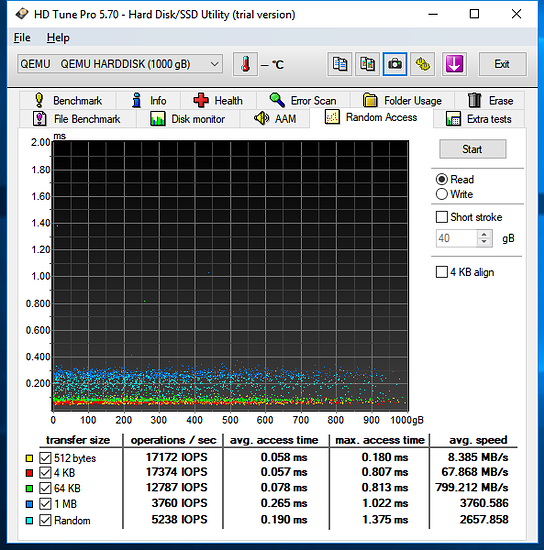

Before:

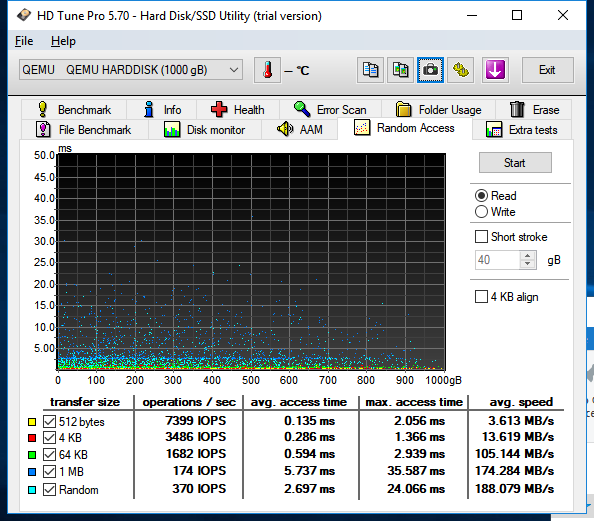

After:

I am passing an entire disk into the VM (an older Samsung 840 EVO 1TB SSD), and I switched the guest from AHCI to virtio-scsi, as its performance is much more predictable.

Here is the magic:

```shell
# Options to add to your existing qemu-system-* command line
-object iothread,id=iothread1 \
-device virtio-scsi-pci,id=scsi1,iothread=iothread1 \
-drive if=none,id=hd1,file=/dev/disk/by-id/ata-Samsung_SSD_EVO_1TB_xxxx,format=raw,aio=threads \
-device scsi-hd,bus=scsi1.0,drive=hd1,bootindex=1
```

Create a separate SCSI controller, with its own iothread object, for each additional disk so that each disk gets its own IO thread.
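Extending the options above to several disks is mechanical, so here is a minimal bash sketch. `build_disk_args` is a hypothetical helper of my own, not part of QEMU: for each disk it appends one iothread object, one virtio-scsi controller bound to that thread, one drive and one scsi-hd device to an array, numbering the IDs so nothing is shared. The `/dev/disk/by-id/DISK_A` and `DISK_B` paths are placeholders, and only the first disk gets `bootindex=1`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build QEMU arguments that give every passed-through
# disk its own IO thread and its own virtio-scsi controller bound to it.
# Requires bash (uses arrays).
set -euo pipefail

disk_args=()

build_disk_args() {
  local i=1 disk boot
  for disk in "$@"; do
    # Only the first disk is marked as the boot device
    boot=""
    if [ "$i" -eq 1 ]; then
      boot=",bootindex=1"
    fi
    # iothreadN -> controller scsiN -> drive hdN -> scsi-hd on bus scsiN.0
    disk_args+=(
      -object "iothread,id=iothread${i}"
      -device "virtio-scsi-pci,id=scsi${i},iothread=iothread${i}"
      -drive "if=none,id=hd${i},file=${disk},format=raw,aio=threads"
      -device "scsi-hd,bus=scsi${i}.0,drive=hd${i}${boot}"
    )
    i=$((i + 1))
  done
}

# Placeholder paths: substitute your own /dev/disk/by-id/ names
build_disk_args /dev/disk/by-id/DISK_A /dev/disk/by-id/DISK_B

# Print one argument per line so the result can be inspected
printf '%s\n' "${disk_args[@]}"
```

On a real host you would append the array to the rest of your command, e.g. `qemu-system-x86_64 <your other options> "${disk_args[@]}"`, instead of printing it.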

This could likely be tuned further by having QEMU report 4K sectors instead of 512-byte sectors, but I will need to reinstall Windows to test that.
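An untested sketch of what that might look like: QEMU's `scsi-hd` device accepts `logical_block_size` and `physical_block_size` properties. Changing `logical_block_size` changes the sector size the guest uses to address the disk, which is presumably why an existing install would not survive it. Setting only `physical_block_size` should leave the logical sector size at its default and just advertise 4K physical sectors, though I have not verified how Windows treats either variant.

```shell
# Full 4K: guest sees 4096-byte logical and physical sectors (likely needs a fresh install)
-device scsi-hd,bus=scsi1.0,drive=hd1,bootindex=1,logical_block_size=4096,physical_block_size=4096

# 512e-style: default logical sector size, 4K physical sectors advertised
-device scsi-hd,bus=scsi1.0,drive=hd1,bootindex=1,physical_block_size=4096
```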

I also tested all the different cache modes. Setting cache=none improves write performance, but since my workload does very little writing, QEMU's default cache mode (writeback) gives the best read performance.
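For reference, the cache mode is set on the `-drive` option. Switching the drive above to cache=none would look like the line below (same placeholder path). One plausible reason the default reads faster is that writeback goes through the host page cache, which cache=none bypasses.

```shell
# cache=none opens the disk with O_DIRECT, bypassing the host page cache
-drive if=none,id=hd1,file=/dev/disk/by-id/ata-Samsung_SSD_EVO_1TB_xxxx,format=raw,aio=threads,cache=none
```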

I will leave it up to the reader to figure out how to translate this to libvirt.