Entertaining a 3rd GPU? It would likely need to be a LOW-powered chip [x4 electrical].

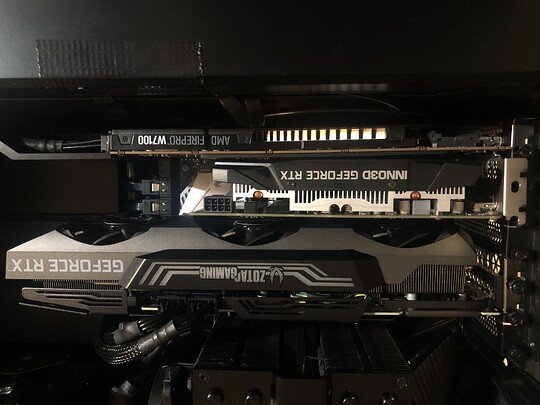

Right now the “3rd” card (meaning the one in the third, x4 slot) is an AMD FirePro W7100, and it has its own 6-pin power connector. It works just fine for driving an HD monitor and a 21:9 ultrawide monitor.

The first card (read: first (x16) slot) is a 3090 (for the Windows VM).

The spare card (left over after the upgrade to the 3090) is my previous 2080 Ti.

All of them bring their own power connectors, obviously. The two NVIDIA cards have, again obviously, two 8-pin connectors each.

But since my current 750 W PSU only has two PCIe 12 V outlets, it needs to be upgraded for this to work. The replacement would be a rather expensive PSU (something with 1200+ watts and more 12 V outlets) to support both power-hungry GPUs and the rest of the system. That is a non-trivial expense, which I'd like to avoid if something on the “logic side” is destined to fail anyway.
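To make the PSU problem concrete, here is a rough tally of connectors and power. The wattages are assumed nominal reference figures (RTX 3090 about 350 W, RTX 2080 Ti about 250 W, FirePro W7100 about 150 W, Ryzen 9 5950X about 142 W package power), not measurements from this build; factory-overclocked cards can draw more. The connector counts come from the post (two 8-pin per NVIDIA card, one 6-pin on the W7100).

```python
# Assumed nominal power figures (watts), not measurements; overclocked cards can draw more.
COMPONENTS = {
    "RTX 3090": {"watts": 350, "pcie_8pin": 2, "pcie_6pin": 0},
    "RTX 2080 Ti": {"watts": 250, "pcie_8pin": 2, "pcie_6pin": 0},
    "FirePro W7100": {"watts": 150, "pcie_8pin": 0, "pcie_6pin": 1},
    "Ryzen 9 5950X (package power)": {"watts": 142, "pcie_8pin": 0, "pcie_6pin": 0},
}


def power_budget(components):
    """Sum power draw and PCIe power connectors over all listed components."""
    watts = sum(c["watts"] for c in components.values())
    eight_pin = sum(c["pcie_8pin"] for c in components.values())
    six_pin = sum(c["pcie_6pin"] for c in components.values())
    return watts, eight_pin, six_pin


if __name__ == "__main__":
    watts, eight_pin, six_pin = power_budget(COMPONENTS)
    print(f"GPUs + CPU: ~{watts} W before drives, fans, board and transient spikes")
    print(f"PCIe power connectors needed: {eight_pin} x 8-pin, {six_pin} x 6-pin")
```

Five PCIe power connectors against two outlets on the current unit is what forces the upgrade, independent of the wattage headroom.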

Since I want to get into ML, it would be nice to be able to play some games while waiting for calculations to finish. So that's the motivation, and also just because it (possibly) can be done.

Depending on how many NVMe drives you have in there as well, you're going to start running into the limits of your PCIe lane availability.

This will disable some of the SATA ports on the controller, something to consider.

You're right. I have three 1 TB NVMe drives in there, so two of the six SATA ports are disabled. The other four carry a 4 TB hard drive each. I'm planning to also set up a FreeNAS VM for storage in there. 16 TB raw (12 TB usable) in RAID5 seems enough, though, especially since this is only to play with FreeNAS; those 4 TB drives come from a recent upgrade of my Synology NAS.
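The 16 TB raw / 12 TB usable figure is single-parity arithmetic: one drive's worth of capacity goes to parity. A minimal sketch, ignoring filesystem overhead (FreeNAS would typically use ZFS RAIDZ1, whose usable space is roughly the same, slightly lower after metadata), plus the decimal-to-binary conversion that explains why tools often report less than the label suggests:

```python
def raid5_usable_tb(drive_count: int, drive_tb: float) -> float:
    """Usable RAID5 capacity: one drive's worth of space holds parity."""
    if drive_count < 3:
        raise ValueError("RAID5 needs at least three drives")
    return (drive_count - 1) * drive_tb


def tb_to_tib(tb: float) -> float:
    """Convert decimal terabytes (drive labels) to binary tebibytes (what many tools show)."""
    return tb * 10**12 / 2**40


if __name__ == "__main__":
    drives, size_tb = 4, 4.0  # four 4 TB hard drives, as in the post
    usable = raid5_usable_tb(drives, size_tb)
    print(f"raw {drives * size_tb:.0f} TB, usable {usable:.0f} TB (~{tb_to_tib(usable):.1f} TiB)")
```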

The third GPU should not change this, correct? The first NVMe is connected to the CPU and the other two to the chipset (PCH), which also serves the third card in the current configuration. Populating the second PCIe slot should only split the CPU's x16 into two x8, right? That wouldn't do much to the 3090, since it's PCIe 4.0 anyway. And the 2080 Ti for Linux is just for display and a little ML. Maybe I'll swap the two … depends on how the memory situation turns out.
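The claim that x8 costs the 3090 little can be checked with per-lane arithmetic: PCIe 3.0 runs at 8 GT/s and PCIe 4.0 at 16 GT/s per lane, both with 128b/130b encoding, so Gen4 x8 has the same raw bandwidth as Gen3 x16. The 2080 Ti is a PCIe 3.0 card, so in a split slot it only gets Gen3 x8. On X570, everything behind the chipset (the x4 slot, the two chipset NVMe drives, SATA, USB, network) shares a Gen4 x4 uplink. This sketch ignores protocol overhead beyond line encoding.

```python
# Per-lane raw rate in GT/s. PCIe 3.0 and 4.0 both use 128b/130b line encoding.
GT_PER_S = {3: 8.0, 4: 16.0}


def pcie_gbytes_per_s(gen: int, lanes: int) -> float:
    """Approximate one-direction bandwidth in GB/s, accounting only for line encoding."""
    per_lane = GT_PER_S[gen] * (128 / 130) / 8  # 1 transfer = 1 bit; 8 bits per byte
    return per_lane * lanes


if __name__ == "__main__":
    links = [
        ("Gen3 x16 (full slot, PCIe 3.0 card)", 3, 16),
        ("Gen4 x8 (3090 in a split slot)", 4, 8),
        ("Gen3 x8 (2080 Ti in a split slot)", 3, 8),
        ("Gen4 x4 (X570 chipset uplink)", 4, 4),
    ]
    for label, gen, lanes in links:
        print(f"{label:38s} {pcie_gbytes_per_s(gen, lanes):6.2f} GB/s")
```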

The 2080 Ti is still enough for gaming, if it needs to be.

I’ve only used it with 2 cards, so you’ll have to let us know if it works with 3. My guess would be that it will since the IOMMU groups for the board for me have always been really good, but that’s just a guess.

Thanks. Guess I’ll just get that PSU then, when they are in stock.

I’ll keep you guys posted.

Now waiting for the Level1 KVM to arrive.

Ok, stupid me.

It doesn’t fit.

There is actually too little space on the Aorus Master to fit the Gigabyte RTX 2080 Ti Gaming OC (2.something slots).

The 3090 is not the problem; the problem is the third GPU in the lower (x4) slot, which is necessary on AMD. A 2-slot card (like the 2060 Super) will fit, but mine has no USB-C port …

The 2080 Ti does.

I am open to suggestions.

New case with Threadripper and a board with way more PCIe slots? (would also allow me to run both VFIO GPUs on x16 …)

Water-cooled GPUs?

A 2-slot 2080 Ti FE? RTX 3000 doesn't seem to sport a USB controller anymore …

I’m a bit bummed right now, would have really loved to give this a try …

OK. Got myself together and bought a used Founders Edition card. I can always sell the Gigabyte card anyway.

Now it gets interesting.

I don't remember the fancy command to display all the system info in the console, so here it is:

OS: Manjaro (all updates, current)

CPU: AMD Ryzen 9 5950X

Board: Gigabyte Aorus X570 Master

Host GPU (third and last usable slot, PCIe x4): AMD W7100

Windows guest GPU (first x16 slot, running in x8 mode): Zotac RTX 3090. Works fine, but it has no USB port, so the only option is passing through a USB controller from the host.

Linux guest GPU (second x16 slot, running in x8 mode): NVIDIA RTX 2080 Ti FE

… and a bunch of other stuff not relevant to the matter (storage etc.)

Assumption: passing through not only the GPU and audio functions of the 2080 Ti, but also the USB controller in the GPU's IOMMU group, will give me another usable USB host to connect to the KVM.

Problem:

The 2080 Ti FE will not pass through its USB port; the VM fails to boot and crashes:

```
2021-01-28T16:50:00.510087Z qemu-system-x86_64: warning: This feature depends on other features that were not requested:
CPUID.8000000AH:EDX.npt [bit 0]
2021-01-28T16:50:00.510090Z qemu-system-x86_64: warning: This feature depends on other features that were not requested:
CPUID.8000000AH:EDX.nrip-save [bit 3]
2021-01-28T16:50:07.505912Z qemu-system-x86_64: vfio_err_notifier_handler(0000:0d:00.0) Unrecoverable error detected. Please collect any data possible and then kill the guest
```

I've gone the extra mile with my Gigabyte RTX 2080 Ti Gaming OC card (installed in the first slot, as it is a 2.something-slot variant and wouldn't fit between the W7100 and the 3090). Basically the same result, but with different log messages:

```
2021-01-28T17:05:15.335122Z qemu-system-x86_64: warning: This family of AMD CPU doesn't support hyperthreading(2)
Please configure -smp options properly or try enabling topoext feature.
2021-01-28T17:05:21.868583Z qemu-system-x86_64: vfio_err_notifier_handler(0000:0d:00.0) Unrecoverable error detected. Please collect any data possible and then kill the guest
```

So I tried changing the CPU in KVM to KVM64 (or something along those lines). It still failed, this time with only the vfio error in the log:

```
2021-01-28T17:05:55.653556Z qemu-system-x86_64: vfio_err_notifier_handler(0000:0d:00.0) Unrecoverable error detected. Please collect any data possible and then kill the guest
```
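When comparing runs like the three above, it helps to pull just the vfio errors and the PCI address they name out of the QEMU log, so the address can be matched against the IOMMU listing to see which device raised it. A minimal sketch, assuming the line format shown in these logs; on a typical libvirt setup the per-guest log is `/var/log/libvirt/qemu/<guest>.log`, and the script name below is hypothetical:

```python
import re
import sys

# Shape of the error lines quoted above, e.g.
# 2021-01-28T16:50:07.505912Z qemu-system-x86_64: vfio_err_notifier_handler(0000:0d:00.0) Unrecoverable ...
VFIO_ERR = re.compile(
    r"^(?P<ts>\S+Z) qemu-system-x86_64: vfio_err_notifier_handler\((?P<addr>[0-9a-f:.]+)\)"
)


def vfio_errors(lines):
    """Yield (timestamp, pci_address) for every vfio error-notifier line in a QEMU log."""
    for line in lines:
        match = VFIO_ERR.match(line)
        if match:
            yield match.group("ts"), match.group("addr")


if __name__ == "__main__":
    # Usage (hypothetical script name): python vfio_errors.py /var/log/libvirt/qemu/<guest>.log
    for path in sys.argv[1:]:
        with open(path, encoding="utf-8", errors="replace") as log:
            for ts, addr in vfio_errors(log):
                print(f"{path}: {ts} {addr}")
```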

Then I went an extra extra mile and tested the FE and the Gigabyte 2080 Ti in an Intel system (i7-8700 (non-K) on a Gigabyte Z390 Gaming X mainboard):

This system has the latest Proxmox installed and it gave me display output and USB passthrough on both cards just fine.

I'm still lost; any suggestions? Is there a way (some kernel parameter or whatever) to get the USB port on Turing to work? How and why are FE and AIB cards different? They use the same TU102, after all …

I know Proxmox might behave differently on the AMD system, but I am a bit reluctant to try because Proxmox might have to be updated first. I could, however, install Manjaro on the Intel machine … any thoughts?

What debugging is useful/necessary?

(Currently searching for an AMD reference RX 6800: it has a USB-C port and a 2-slot design, so anyone who wants to sell one, please drop me a message.)

Just for reference, my IOMMU groups:

```
IOMMU Group 0 00:01.0 Host bridge [0600]: Advanced Micro Devices, Inc. [AMD] Starship/Matisse PCIe Dummy Host Bridge [1022:1482]
IOMMU Group 1 00:01.1 PCI bridge [0604]: Advanced Micro Devices, Inc. [AMD] Starship/Matisse GPP Bridge [1022:1483]
IOMMU Group 10 00:07.1 PCI bridge [0604]: Advanced Micro Devices, Inc. [AMD] Starship/Matisse Internal PCIe GPP Bridge 0 to bus[E:B] [1022:1484]
IOMMU Group 11 00:08.0 Host bridge [0600]: Advanced Micro Devices, Inc. [AMD] Starship/Matisse PCIe Dummy Host Bridge [1022:1482]
IOMMU Group 12 00:08.1 PCI bridge [0604]: Advanced Micro Devices, Inc. [AMD] Starship/Matisse Internal PCIe GPP Bridge 0 to bus[E:B] [1022:1484]
IOMMU Group 13 00:14.0 SMBus [0c05]: Advanced Micro Devices, Inc. [AMD] FCH SMBus Controller [1022:790b] (rev 61)
IOMMU Group 13 00:14.3 ISA bridge [0601]: Advanced Micro Devices, Inc. [AMD] FCH LPC Bridge [1022:790e] (rev 51)
IOMMU Group 14 00:18.0 Host bridge [0600]: Advanced Micro Devices, Inc. [AMD] Matisse Device 24: Function 0 [1022:1440]
IOMMU Group 14 00:18.1 Host bridge [0600]: Advanced Micro Devices, Inc. [AMD] Matisse Device 24: Function 1 [1022:1441]
IOMMU Group 14 00:18.2 Host bridge [0600]: Advanced Micro Devices, Inc. [AMD] Matisse Device 24: Function 2 [1022:1442]
IOMMU Group 14 00:18.3 Host bridge [0600]: Advanced Micro Devices, Inc. [AMD] Matisse Device 24: Function 3 [1022:1443]
IOMMU Group 14 00:18.4 Host bridge [0600]: Advanced Micro Devices, Inc. [AMD] Matisse Device 24: Function 4 [1022:1444]
IOMMU Group 14 00:18.5 Host bridge [0600]: Advanced Micro Devices, Inc. [AMD] Matisse Device 24: Function 5 [1022:1445]
IOMMU Group 14 00:18.6 Host bridge [0600]: Advanced Micro Devices, Inc. [AMD] Matisse Device 24: Function 6 [1022:1446]
IOMMU Group 14 00:18.7 Host bridge [0600]: Advanced Micro Devices, Inc. [AMD] Matisse Device 24: Function 7 [1022:1447]
IOMMU Group 15 01:00.0 Non-Volatile memory controller [0108]: Samsung Electronics Co Ltd Device [144d:a80a]
IOMMU Group 16 02:00.0 PCI bridge [0604]: Advanced Micro Devices, Inc. [AMD] Matisse Switch Upstream [1022:57ad]
IOMMU Group 17 03:00.0 PCI bridge [0604]: Advanced Micro Devices, Inc. [AMD] Matisse PCIe GPP Bridge [1022:57a3]
IOMMU Group 18 03:01.0 PCI bridge [0604]: Advanced Micro Devices, Inc. [AMD] Matisse PCIe GPP Bridge [1022:57a3]
IOMMU Group 19 03:02.0 PCI bridge [0604]: Advanced Micro Devices, Inc. [AMD] Matisse PCIe GPP Bridge [1022:57a3]
IOMMU Group 2 00:01.2 PCI bridge [0604]: Advanced Micro Devices, Inc. [AMD] Starship/Matisse GPP Bridge [1022:1483]
IOMMU Group 20 03:03.0 PCI bridge [0604]: Advanced Micro Devices, Inc. [AMD] Matisse PCIe GPP Bridge [1022:57a3]
IOMMU Group 21 03:04.0 PCI bridge [0604]: Advanced Micro Devices, Inc. [AMD] Matisse PCIe GPP Bridge [1022:57a3]
IOMMU Group 22 03:05.0 PCI bridge [0604]: Advanced Micro Devices, Inc. [AMD] Matisse PCIe GPP Bridge [1022:57a3]
IOMMU Group 23 03:08.0 PCI bridge [0604]: Advanced Micro Devices, Inc. [AMD] Matisse PCIe GPP Bridge [1022:57a4]
IOMMU Group 23 0a:00.0 Non-Essential Instrumentation [1300]: Advanced Micro Devices, Inc. [AMD] Starship/Matisse Reserved SPP [1022:1485]
IOMMU Group 23 0a:00.1 USB controller [0c03]: Advanced Micro Devices, Inc. [AMD] Matisse USB 3.0 Host Controller [1022:149c]
IOMMU Group 23 0a:00.3 USB controller [0c03]: Advanced Micro Devices, Inc. [AMD] Matisse USB 3.0 Host Controller [1022:149c]
IOMMU Group 24 03:09.0 PCI bridge [0604]: Advanced Micro Devices, Inc. [AMD] Matisse PCIe GPP Bridge [1022:57a4]
IOMMU Group 24 0b:00.0 SATA controller [0106]: Advanced Micro Devices, Inc. [AMD] FCH SATA Controller [AHCI mode] [1022:7901] (rev 51)
IOMMU Group 25 03:0a.0 PCI bridge [0604]: Advanced Micro Devices, Inc. [AMD] Matisse PCIe GPP Bridge [1022:57a4]
IOMMU Group 25 0c:00.0 SATA controller [0106]: Advanced Micro Devices, Inc. [AMD] FCH SATA Controller [AHCI mode] [1022:7901] (rev 51)
IOMMU Group 26 04:00.0 Non-Volatile memory controller [0108]: Samsung Electronics Co Ltd NVMe SSD Controller SM981/PM981/PM983 [144d:a808]
IOMMU Group 27 05:00.0 Non-Volatile memory controller [0108]: Samsung Electronics Co Ltd NVMe SSD Controller SM981/PM981/PM983 [144d:a808]
IOMMU Group 28 06:00.0 VGA compatible controller [0300]: Advanced Micro Devices, Inc. [AMD/ATI] Tonga PRO GL [FirePro W7100] [1002:692b]
IOMMU Group 28 06:00.1 Audio device [0403]: Advanced Micro Devices, Inc. [AMD/ATI] Tonga HDMI Audio [Radeon R9 285/380] [1002:aad8]
IOMMU Group 29 07:00.0 Network controller [0280]: Intel Corporation Wi-Fi 6 AX200 [8086:2723] (rev 1a)
IOMMU Group 3 00:02.0 Host bridge [0600]: Advanced Micro Devices, Inc. [AMD] Starship/Matisse PCIe Dummy Host Bridge [1022:1482]
IOMMU Group 30 08:00.0 Ethernet controller [0200]: Intel Corporation I211 Gigabit Network Connection [8086:1539] (rev 03)
IOMMU Group 31 09:00.0 Ethernet controller [0200]: Realtek Semiconductor Co., Ltd. RTL8125 2.5GbE Controller [10ec:8125] (rev 05)
IOMMU Group 32 0d:00.0 VGA compatible controller [0300]: NVIDIA Corporation Device [10de:2204] (rev a1)
IOMMU Group 32 0d:00.1 Audio device [0403]: NVIDIA Corporation Device [10de:1aef] (rev a1)
IOMMU Group 33 0e:00.0 VGA compatible controller [0300]: NVIDIA Corporation TU102 [GeForce RTX 2080 Ti Rev. A] [10de:1e07] (rev a1)
IOMMU Group 33 0e:00.1 Audio device [0403]: NVIDIA Corporation TU102 High Definition Audio Controller [10de:10f7] (rev a1)
IOMMU Group 33 0e:00.2 USB controller [0c03]: NVIDIA Corporation TU102 USB 3.1 Host Controller [10de:1ad6] (rev a1)
IOMMU Group 33 0e:00.3 Serial bus controller [0c80]: NVIDIA Corporation TU102 USB Type-C UCSI Controller [10de:1ad7] (rev a1)
IOMMU Group 34 0f:00.0 Non-Essential Instrumentation [1300]: Advanced Micro Devices, Inc. [AMD] Starship/Matisse PCIe Dummy Function [1022:148a]
IOMMU Group 35 10:00.0 Non-Essential Instrumentation [1300]: Advanced Micro Devices, Inc. [AMD] Starship/Matisse Reserved SPP [1022:1485]
IOMMU Group 36 10:00.1 Encryption controller [1080]: Advanced Micro Devices, Inc. [AMD] Starship/Matisse Cryptographic Coprocessor PSPCPP [1022:1486]
IOMMU Group 37 10:00.3 USB controller [0c03]: Advanced Micro Devices, Inc. [AMD] Matisse USB 3.0 Host Controller [1022:149c]
IOMMU Group 38 10:00.4 Audio device [0403]: Advanced Micro Devices, Inc. [AMD] Starship/Matisse HD Audio Controller [1022:1487]
IOMMU Group 4 00:03.0 Host bridge [0600]: Advanced Micro Devices, Inc. [AMD] Starship/Matisse PCIe Dummy Host Bridge [1022:1482]
IOMMU Group 5 00:03.1 PCI bridge [0604]: Advanced Micro Devices, Inc. [AMD] Starship/Matisse GPP Bridge [1022:1483]
IOMMU Group 6 00:03.2 PCI bridge [0604]: Advanced Micro Devices, Inc. [AMD] Starship/Matisse GPP Bridge [1022:1483]
IOMMU Group 7 00:04.0 Host bridge [0600]: Advanced Micro Devices, Inc. [AMD] Starship/Matisse PCIe Dummy Host Bridge [1022:1482]
IOMMU Group 8 00:05.0 Host bridge [0600]: Advanced Micro Devices, Inc. [AMD] Starship/Matisse PCIe Dummy Host Bridge [1022:1482]
IOMMU Group 9 00:07.0 Host bridge [0600]: Advanced Micro Devices, Inc. [AMD] Starship/Matisse PCIe Dummy Host Bridge [1022:1482]
```

All of the GPUs are in their own IOMMU groups, so this shouldn't be a problem, right? Especially since the two Turing cards behave the same, regardless of which slot they are in and whether three or only two cards are present.
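A listing like this comes from walking `/sys/kernel/iommu_groups/<N>/devices/`. A minimal Python sketch of that walk, sorting groups numerically rather than as strings; it reads only the raw vendor, device and class IDs from sysfs, so the human-readable names in the listing above would still need something like `lspci -nns <address>`:

```python
from pathlib import Path


def _hex_attr(dev: Path, name: str) -> str:
    """Read a sysfs hex attribute (e.g. '0x10de') and drop the '0x' prefix."""
    value = (dev / name).read_text().strip()
    return value[2:] if value.startswith("0x") else value


def list_iommu_groups(root: str = "/sys/kernel/iommu_groups"):
    """Return (group, pci_address, vendor:device, class) tuples sorted numerically by group."""
    base = Path(root)
    if not base.is_dir():  # no IOMMU groups (IOMMU disabled or not Linux)
        return []
    rows = []
    for group_dir in base.iterdir():
        if not group_dir.name.isdigit():
            continue
        for dev in (group_dir / "devices").iterdir():
            ids = f"{_hex_attr(dev, 'vendor')}:{_hex_attr(dev, 'device')}"
            pci_class = _hex_attr(dev, "class")[:4]  # e.g. '0300' for a VGA controller
            rows.append((int(group_dir.name), dev.name, ids, pci_class))
    return sorted(rows)


if __name__ == "__main__":
    for group, addr, ids, pci_class in list_iommu_groups():
        print(f"IOMMU Group {group:>2} {addr} [{pci_class}] [{ids}]")
```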

Have you made sure that you are passing through all four of these devices to the VM for the 2080 Ti? You always need to pass everything in an IOMMU group through to the VM, and if you miss any of the devices it might fail. Just a wild guess!
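In libvirt terms, passing the whole group means one `<hostdev>` entry per PCI function. A minimal sketch that turns each address of the 2080 Ti's group 33 from the listing into such an entry; with `managed="yes"`, libvirt detaches each device from its host driver and binds it for passthrough itself:

```python
import xml.etree.ElementTree as ET


def hostdev_xml(pci_address: str) -> str:
    """Build a libvirt <hostdev> element for a PCI address like '0000:0e:00.2'."""
    domain, bus, slot_func = pci_address.split(":")
    slot, function = slot_func.split(".")
    hostdev = ET.Element("hostdev", mode="subsystem", type="pci", managed="yes")
    source = ET.SubElement(hostdev, "source")
    ET.SubElement(source, "address", domain=f"0x{domain}", bus=f"0x{bus}",
                  slot=f"0x{slot}", function=f"0x{function}")
    return ET.tostring(hostdev, encoding="unicode")


if __name__ == "__main__":
    # All four functions of IOMMU group 33 (GPU, HDMI audio, USB host, USB-C UCSI).
    for addr in ("0000:0e:00.0", "0000:0e:00.1", "0000:0e:00.2", "0000:0e:00.3"):
        print(hostdev_xml(addr))
```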

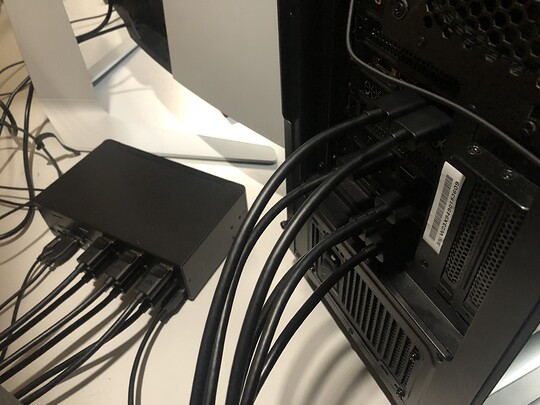

Yes, I passed all four of them.

Does anyone know of a PCIe x1 riser cable that would fit under a GPU? I could use the single x1 slot to connect a USB add-in card and mount it on the vertical GPU mount in the case. That would be perfect! (Until I resolve the passthrough issue on the GPU.)

I’m using this for a PCIe USB controller card myself (a photo of it in action here: Asrock X570d4u, x570d4u-2L2T discussion thread - #187 by lae):

That one is a bit expensive, though. While waiting for it to ship (it took over 3 weeks), I had also bought this one to try out:

I had to sort of fold this one underneath the bottom GPU through a slot near the exhaust side, because otherwise it would sometimes get in the way of the GPU fan. (That actually wasn't an issue the first time I installed it, but it was when I installed it a second time while rebuilding.)

They both worked fine for my case (which was passthrough). I would recommend the ADT-Link one for peace of mind, though.

All done, folks

In the end, a UEFI/AGESA update did the trick.
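Since the fix turned out to be a firmware update, it can help to record the board and UEFI version before and after flashing. Linux exposes these strings under `/sys/class/dmi/id/`; a minimal sketch that collects them (whether the version string reveals the AGESA version depends on the vendor, so the release notes may still be needed for that):

```python
from pathlib import Path

DMI_FIELDS = ("board_vendor", "board_name", "bios_vendor", "bios_version", "bios_date")


def firmware_info(dmi_root: str = "/sys/class/dmi/id") -> dict:
    """Collect board and UEFI identification strings that Linux exposes under sysfs."""
    root = Path(dmi_root)
    info = {}
    for field in DMI_FIELDS:
        path = root / field
        if path.is_file():  # skip fields this system does not expose
            info[field] = path.read_text().strip()
    return info


if __name__ == "__main__":
    for key, value in firmware_info().items():
        print(f"{key:13s} {value}")
```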

I now have working:

Host (Manjaro Linux) with AMD W7100 GPU

Guest (Ubuntu Linux 20.10) with 2080 ti (using USB port on the GPU for peripherals)

Guest (Windows 10 Pro) with 3090 (using a passed-through USB host controller for peripherals)

Both guests use UEFI Secure Boot; Windows is encrypted with BitLocker.

All of these are now connected via Level1 KVM with dual monitors.

Please excuse this late reply, but do you have a link with instructions how to do this?

Sorry for my late reply as well. As far as I know there is nothing special to it; I just have this in the XML for the guest:

```xml
<os>
  <type arch="x86_64" machine="pc-q35-4.2">hvm</type>
  <loader readonly="yes" secure="yes" type="pflash">/usr/share/edk2-ovmf/x64/OVMF_CODE.secboot.fd</loader>
  <nvram>/var/lib/libvirt/qemu/nvram/win10_VARS.fd</nvram>
  <bootmenu enable="no"/>
</os>
```

The loader line is the same for all guests; the nvram path is guest-specific. Then enable a TPM device in the guest config:

```xml
<tpm model="tpm-tis">
  <backend type="emulator" version="2.0"/>
  <alias name="tpm0"/>
</tpm>
```
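Because only the nvram file differs between guests, the two fragments can be generated per guest. A minimal sketch, reusing the loader path from the post (an Arch/Manjaro `edk2-ovmf` path; other distributions install OVMF elsewhere) and hypothetical guest names. The `<alias>` line is left out on the assumption that libvirt assigns it itself (`tpm0` is what appears in a running guest's XML). Two further assumptions worth checking: the emulator TPM backend needs `swtpm` installed on the host, and libvirt generally expects `<smm state="on"/>` under `<features>` when `secure="yes"` is set.

```python
import xml.etree.ElementTree as ET

# Loader path as given in the post (Arch/Manjaro edk2-ovmf package); other distributions differ.
OVMF_SECBOOT = "/usr/share/edk2-ovmf/x64/OVMF_CODE.secboot.fd"
NVRAM_DIR = "/var/lib/libvirt/qemu/nvram"


def secure_boot_fragments(guest: str, machine: str = "pc-q35-4.2"):
    """Return the <os> and <tpm> XML snippets for one Secure Boot guest."""
    os_el = ET.Element("os")
    ET.SubElement(os_el, "type", arch="x86_64", machine=machine).text = "hvm"
    loader = ET.SubElement(os_el, "loader", readonly="yes", secure="yes", type="pflash")
    loader.text = OVMF_SECBOOT  # shared by all guests
    ET.SubElement(os_el, "nvram").text = f"{NVRAM_DIR}/{guest}_VARS.fd"  # one per guest
    ET.SubElement(os_el, "bootmenu", enable="no")

    tpm = ET.Element("tpm", model="tpm-tis")
    ET.SubElement(tpm, "backend", type="emulator", version="2.0")
    return ET.tostring(os_el, encoding="unicode"), ET.tostring(tpm, encoding="unicode")


if __name__ == "__main__":
    for name in ("win10", "ubuntu"):  # hypothetical guest names
        os_xml, tpm_xml = secure_boot_fragments(name)
        print(os_xml)
        print(tpm_xml)
```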

That did it for me. Why did I want this? I assigned an NVMe drive to each guest, so I wanted encryption. If you just use a qcow2 file as the guest disk, it would be OK (for me) to just encrypt the drive where the qcow2 files reside.