I see no reason to hold out when Kingston KSM32ED8/32ME RAM is available in a number of stores right now.

FYI: Both variants are in stock at Newegg as of this writing. The base board is $359.99 + $8.75 shipping; the 10GbE version is $439.99 + free shipping. It says it’s on sale but doesn’t seem to be discounted.

On the ASRock Rack X570D4U I have a problem with NIC speed. Download performance is poor: about 450 Mbps (in iperf it is even less, about 300 Mbps). The issue affects only incoming traffic. Outgoing traffic works well.

I tested on the latest Proxmox, XCP-ng 8.2 and pfSense (NIC passthrough).

After connecting an Intel 82575EB Gigabit NIC (PCIe), everything works fine.
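For anyone wanting to put a number on the incoming vs outgoing difference, here is a minimal sketch. It assumes iperf3 (the post only says "iperf") run with `--json`, once normally and once with `-R` for the reverse direction, and it reads the `end.sum_received.bits_per_second` field from each result. The sample values are hypothetical, shaped after the figures mentioned above.

```python
import json


def mbps(result: dict, direction: str = "received") -> float:
    """Return throughput in Mbit/s from a parsed `iperf3 --json` result."""
    summary = result["end"][f"sum_{direction}"]
    return summary["bits_per_second"] / 1e6


def asymmetry(upload: dict, download: dict) -> float:
    """Ratio of download to upload throughput; close to 1.0 means symmetric."""
    return mbps(download) / mbps(upload)


# Hypothetical results; real ones would come from json.load() on the
# files written by `iperf3 -c <server> --json` and the same with `-R`.
upload = json.loads('{"end": {"sum_received": {"bits_per_second": 940e6}}}')
download = json.loads('{"end": {"sum_received": {"bits_per_second": 300e6}}}')

if __name__ == "__main__":
    print(f"upload:   {mbps(upload):.0f} Mbit/s")
    print(f"download: {mbps(download):.0f} Mbit/s")
    print(f"download/upload ratio: {asymmetry(upload, download):.2f}")
```

Running the same pair of tests against the onboard NIC and the add-in Intel card should make it obvious whether only the receive path on the onboard ports is affected.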

Seem to recall someone mentioning a similar issue that was resolved by stopping the IPMI from sharing the NIC - worth having a look for that setting and disabling the nic sharing if you haven’t already (and using the dedicated management network port).

So I’ve just gotten two of these boards for a VMware ESXi homelab project, but during installation they just fail when trying to load vmw_ahci. Anyone have any ideas why that might be the case?

Edit: I’ve tried installing ESXi 7.0.1 onto an M.2 and booting from that rather than trying to install from a 6.7u3 or 6.5u2 ISO image USB stick, but this also fails to boot properly. Starting to wonder if it’s just VMware drivers being crap and not wanting to work with non-QVL hardware. If no one has any suggestions, I may try RAID mode vs AHCI for SATA devices, as I will just be connecting a single M.2 to boot ESXi from and running VMs via iSCSI.

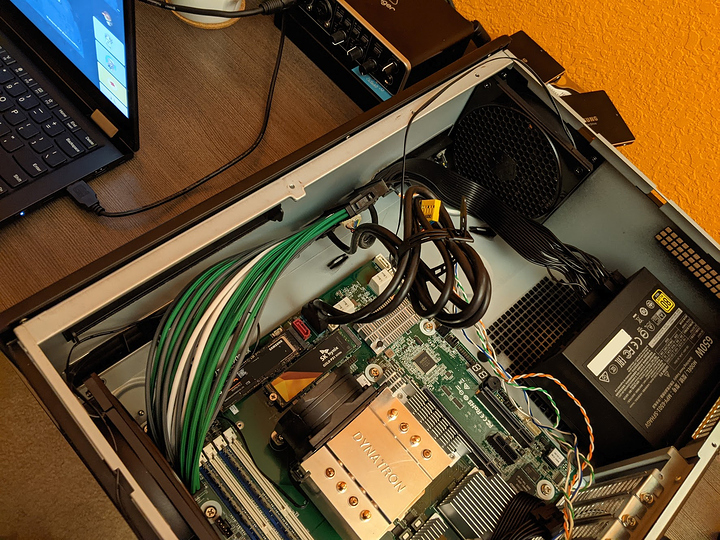

I just finished rebuilding this workstation from scratch since I got all the new hardware I was waiting on. Cooling-wise, I swapped the case fans in the GD05 with Arctic P12 fans, and then a Noctua NH-L12S for the CPU.

The case fans did not do much to help temps with the Dynatron A-24 fan here. I think the dust filters are not really letting the fans do their job, but I’m not sure if it’s worth it to remove the filters at this point (basically have to remove everything, including motherboard, to get the fans out).

Yeah, that’s an SFX PSU on an ATX mount. Small hardware is cute.

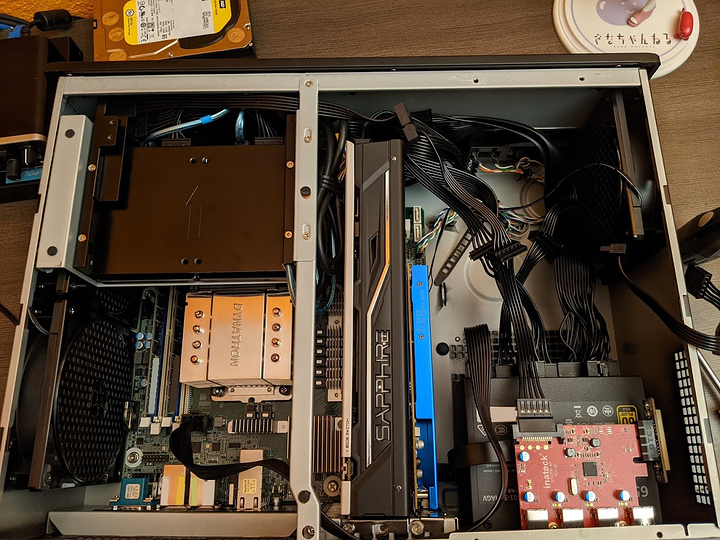

This is what the final layout looks like, mostly.

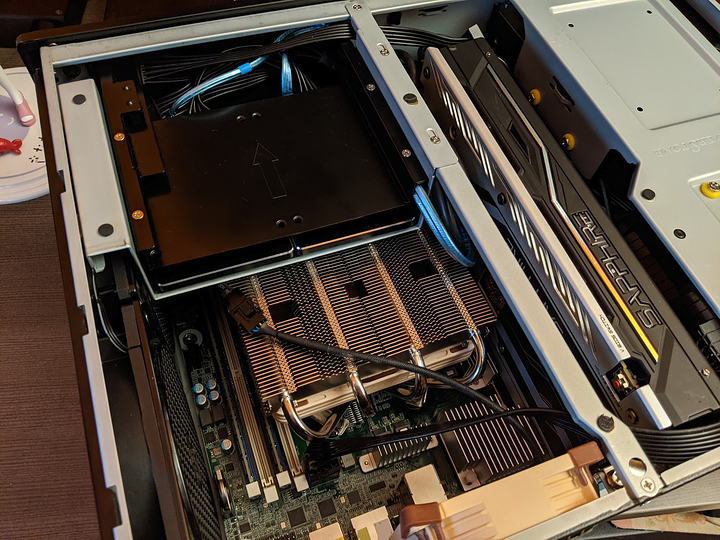

The NH-L12S fits pretty nicely. I didn’t have to take the motherboard out here (thank goodness), as this cooler mounts perfectly fine with the stock backplate on this X570 board. Since I wanted to try using the fan to cool the chipset as well, I reversed the fan (airflow downwards) and oriented it so that it covers more of the chipset.

I didn’t quite perform strictly scientific tests, but with the stress utility I managed to get the Dynatron setup up to 6400RPM and 85℃ on CPU, and the Noctua setup up to 1200RPM and 80℃. Chipset temp was about the same for both. In gaming workloads with and without VFIO, I saw a 10-15℃ improvement (65℃ to 50℃). Chipset would reach 70℃ with both coolers, however I noticed that the Noctua cooler never really went above 1200RPM. If I manually set the CPU fan duty on the Noctua cooler to 90% (1600RPM) I could maintain a 60℃ temperature on both the CPU and the chipset. So the downward airflow from a CPU cooler does seem to help a bit with chipset temps. Doesn’t seem like I can set a fan curve via IPMI though, which would be nice.
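Since a fan curve came up: below is a rough sketch of what one is, a piecewise-linear mapping from temperature to fan duty. The curve points are hypothetical and only loosely inspired by the numbers above, and nothing here talks to the BMC. Actually applying a duty value to this board would need some vendor tool or IPMI command that I have not verified.

```python
from bisect import bisect_right

# Hypothetical curve points: (temperature in degrees C, fan duty in percent)
CURVE = [(40, 30), (60, 50), (70, 90), (80, 100)]


def fan_duty(temp_c: float, curve=CURVE) -> float:
    """Linearly interpolate the fan duty (%) for a given temperature."""
    temps = [t for t, _ in curve]
    if temp_c <= temps[0]:
        return float(curve[0][1])      # below the curve: hold minimum duty
    if temp_c >= temps[-1]:
        return float(curve[-1][1])     # above the curve: hold maximum duty
    i = bisect_right(temps, temp_c)    # first point strictly above temp_c
    (t0, d0), (t1, d1) = curve[i - 1], curve[i]
    return d0 + (d1 - d0) * (temp_c - t0) / (t1 - t0)


if __name__ == "__main__":
    for temp in (35, 50, 65, 85):
        print(f"{temp} C -> {fan_duty(temp):.0f}% duty")
```

The steep segment between 60 and 70 degrees is deliberate in this sketch: it ramps the fan hard in the range where the chipset was getting warm.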

I feel like this should be in a separate thread but…oh well. Hope this info proves useful to someone (cc @BYRONICS).

Yes - Very helpful! THANK YOU!!!

I am building 4 of these for a vSAN test I want to perform for a client, and I am pretty disappointed now with your results and the Dynatron. I don’t care about the chipset temps because I will replace the chipset heatsink with a copper one or put 40mm Noctua fans above it.

The 85℃ with a fan that reaches at least 6400RPM tells me that the A24 is bad. Are you sure that the heatsink was seated correctly? I suppose yes. Can you also tell me what thermal paste you used?

My problem is that, unlike you, I have ordered 2U cases, these ones here:

https://www.servercase.co.uk/shop/server-cases/rackmount/2u-chassis/in-win-iw-rs208-02m---2u-server-chassis-with-8x-35-12gbps-hot-swap-bays---includes-550w-crps-redundant-psu-and-rail-kit-iw-rs208-02m/

So my heatsink options are pretty limited. I will use the 5900X in these servers.

Either way thank you for your info.

Interesting 2U - You should be able to fit a Noctua NH-L9a-AM4 heatsink/fan - It only stands 37mm tall (this is what I’m using)

Are you installing to or from a USB? There are issues with certain USB drives that cause the problem you are describing. Please let us know what solution you find (as I was going to use ESXi7 as well.)

In a 2U case it’s expected that there is strong airflow coming in from the front, directly into the Dynatron fan. The case you linked fits this description to a T.

In my case (which I’m using mainly for the fact that it fits in a carry-on suitcase), airflow comes perpendicular to the fan and is partially blocked by the power cable connected to the motherboard’s EPS socket, so I’m working with less than ideal conditions for the Dynatron fan. I didn’t exactly have any stability issues with the Dynatron fan after 2 weeks of typical use with the pre-applied thermal paste, so I think the fan will totally work more optimally (temps-wise) in your typical server case. The temps I provided in my last post were after I re-applied my own Noctua thermal paste (though I think I put like 5x more than I should’ve…dunno if I should bother retesting the Dynatron cooler with the same amount I used with the Noctua cooler).

Also, the 85℃ value was just a sort of upper limit, not an average. During a video encoding load I was seeing temps in the upper 70s, and idle/low load was around 40℃. These were actually the same for the Noctua cooler, too, in my limited testing.

Thank you for the extra info, I feel better now. Consider also that these 4 servers will be in the company server room, where I have the 2 AC units set to 22℃ and noise is not a problem. I think it will be OK.

When I finish the project I will post info here. Also, because these are not planned to be in production for at least 2-3 months, I will melt them in testing. Each will have 128GB of Samsung ECC, 4x 1TB SAS mixed-use enterprise SSDs and 1 Optane for write cache in vSAN.

Hey all!

I don’t fully grasp all the concepts at play here, so i’d like some input before i go ahead and throw money at it!

I plan on setting up a server with this motherboard in the very near future. I wish to run Proxmox, virtualize a gaming PC with GPU passthrough, MergerFS, SnapRAID, a Plex setup, Home Assistant, and more. I’ve tried putting some components together based on reading through this thread, and the one over at ServeTheHome.

Motherboard:

Asrock Rack X570D4U

It seems to me the 2L2T is just for the 10gbit LAN, which i don’t believe i need.

Ram:

x2 Kingston DDR4 3200MHz Micron E ECC 32GB (KSM32ED8/32ME)

PSU: (Owned)

Corsair RM850X 850W ATX 80+ Gold

CPU:

Ryzen 9 5950x

GFX: (Owned)

Asus GTX1070 Strix

Windows SSD: (Owned)

Intel m.2 SSD 512 GB

System SSD:

Some decently performing 1TB ssd.

3x 8TB Seagate Archive i already own

I’ll be buying another 2x 18TB HDDs

I believe this should all be working together nicely. Let me know if not!

I’ve been looking for a chassis for a while… But haven’t really found anything, so figured i might as well stick with my Fractal Design R5 for now. So far i’ve only been using the default 2x 140MM, but i plan on exchanging them with 5x Noctua NF-A14 PWM. 2 front intake, 1 bottom front intake, 1 rear exhaust, and 1 top rear exhaust.

As the computer is situated in my living room, I’d very much like it to be as silent as possible.

In regards to a CPU cooler… Based on this thread it seems like a NH-D15 will not fit, if i wish to put the GTX 1070 in there. I saw someone mention they had been told the NH-U9S was the biggest that would fit. So i guess i’d be going with that… Unless any of you might have any other suggestions?

Normally i’ve just been assuming everything would fit… But reading about problems with the CPU cooler blocking and such… I’d like your input!

I’d love to hear you all’s thoughts if anything should be exchanged / can be improved. Thanks a lot!

I have been leaning on getting one of these along with a 5600x for my Unraid server.

I was thinking of virtualizing my gaming PC as well but I have heard that some anti-cheat doesn’t play well with it.

For the moment I am leaning on keeping them separate, but I might virtualize an “everyday use” pc and keep my gaming rig a physical box.

The main points that spring to mind from your description are:

- It won’t be quiet enough for placing it in the lounge with that many HDDs. It would be better to repurpose an old PC as a very bare-bones separate build for the lounge that just uses an SSD.

- Data protection is where it gets more complicated: you need to design for both drive failure and performance while working within the available SATA, M.2 and PCIe slots for adding further data ports.

- As you’ve mentioned Proxmox are you going to use ZFS? If so you should research whether you’re going to want to add an L2ARC.

What I’m not sure of from my own research is whether you could use a couple of decent size M.2 drives (PCIe 4.0) mirrored (raid 1) for both the Proxmox OS and also for your main everyday storage mapped into some of your VM’s such as the gaming PC one.

Then I’m assuming you’d add a few spinning NAS standard HDD’s perhaps in two separate pools depending on what you mean by archive. One pool cached by the L2ARC and the other pool as a seldom accessed archive. That way you keep the L2ARC content more relevant for the additional HDD storage that isn’t cold archive data.

I’d then look into adding a ZIL with a pair of high endurance power loss data protection M.2 drives such as Seagate IronWolf 510’s if you want to speed up writes to the main (non archive) HDD pool. These M.2 drives won’t need to be massive as they’re just catching the writes first before being flushed to the other drives. For this you’d need to find a ribbon cable to extend the PCIe 4.0 x8 slot away from the graphics card and use the ribbon to attach an M.2 expansion card with 2 further M.2 slots. The motherboard BIOS should support bifurcation to allow two x4 M.2 SSD’s to operate in the one x8 slot.

Using all of the above would use 4 M.2 drives, 2 for Proxmox/VM storage and 2 for ZIL, 5 NAS HDD’s and one SSD for L2ARC so you’d end up with 2 spare SATA ports.
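To sanity-check that count, here is a quick sketch of the port budget. The 8 SATA ports are an assumption taken from the arithmetic above (6 used plus 2 spare), and the x4-per-drive lane figure matches the two x4 M.2 SSDs in one x8 slot described earlier. The class and field names are made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class StoragePlan:
    sata_ports: int = 8   # assumption: 6 used + 2 spare, as the post implies
    hdds: int = 5         # NAS HDDs on SATA
    l2arc_ssds: int = 1   # SATA SSD used as L2ARC
    m2_mirror: int = 2    # mirrored M.2 pair for Proxmox and VM storage
    m2_zil: int = 2       # M.2 pair for the ZIL on the bifurcated x8 slot

    def spare_sata(self) -> int:
        """SATA ports left after the HDDs and the L2ARC SSD."""
        return self.sata_ports - (self.hdds + self.l2arc_ssds)

    def m2_total(self) -> int:
        """Total number of M.2 drives in the plan."""
        return self.m2_mirror + self.m2_zil

    def x8_slot_lanes_ok(self, lanes_per_drive: int = 4) -> bool:
        """True if the ZIL drives fit in an x8 slot split into x4 links."""
        return self.m2_zil * lanes_per_drive <= 8


plan = StoragePlan()

if __name__ == "__main__":
    print("spare SATA ports:", plan.spare_sata())
    print("M.2 drives:", plan.m2_total())
    print("ZIL pair fits x8 slot:", plan.x8_slot_lanes_ok())
```

Changing `hdds` or `l2arc_ssds` shows quickly how little headroom is left once more disks are added.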

Ideally you should also use a UPS too.

I read sometime back that Nvidia graphics cards could be problematic with GPU passthrough, I think this may have been solved but it’s worth checking. It may just mean you have some extra steps to take to get it to work in comparison to using AMD.

There’s also no sound card on this motherboard, so if you want speakers then it depends whether they have their own DAC, or if they’re passive speakers then you could add a USB DAC. Similar for headphones: they may have their own DAC or you’d need to add a headphone amp to a DAC. Something like a Schiit Modi 3+ DAC and a Magni 3+ headphone amp is a popular combo.

Hello Justin! I very much appreciate the in-depth answer. I can see I’ve described a few things poorly though.

The place isn’t that big, so I don’t have a basement or something similar I could put the server in.

I was planning on backing up the media using SnapRAID. The Windows machine doesn’t need backup. I am still a little undecided on exactly what I will be doing in regards to backup of the system itself. Whether it’s a vdev or nothing. I’m tempted to try ‘infrastructure as code’… So I could easily redeploy it should it die. I kinda like the idea of not having to back it up. Cache drives seem a little overkill for my personal use case. But maybe not?
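As a rough sketch of what SnapRAID would leave for media with the disks listed earlier (3x 8TB plus 2x 18TB): SnapRAID needs each parity disk to be at least as large as the largest data disk, so the largest disks go to parity. Which disks actually end up as parity, and how many parity disks are used, are assumptions here.

```python
def snapraid_layout(disk_sizes_tb, parity_count=1):
    """Assign the largest disks to parity and report usable data capacity.

    SnapRAID requires every parity disk to be at least as large as the
    largest data disk, so taking the largest disks as parity always works.
    """
    disks = sorted(disk_sizes_tb, reverse=True)
    if not 0 < parity_count < len(disks):
        raise ValueError("need at least one parity disk and one data disk")
    parity, data = disks[:parity_count], disks[parity_count:]
    return {"parity": parity, "data": data, "usable_tb": sum(data)}


if __name__ == "__main__":
    disks = [8, 8, 8, 18, 18]  # sizes in TB, from the parts list in this thread
    for count in (1, 2):
        layout = snapraid_layout(disks, parity_count=count)
        print(f"{count} parity disk(s): {layout['usable_tb']} TB usable")
```

With a single parity disk, one of the 18TB drives protects everything else. Using both 18TB drives as parity tolerates two failures but leaves only the three 8TB drives for data.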

When I said archive, I actually meant the model. The model of the disk is Seagate Archive. But yes, all the HDDs will only be for storing media.

From my understanding nvidia passthrough should be running pretty good these days, otherwise the project of virtualizing the gaming PC is doomed before start.

I am currently using bluetooth headphones, so i believe a soundcard shouldn’t be needed? Thanks for pointing it out though.

I’ll have to read through your message a couple times in the next days, to understand everything, and consider it. Again… Thank you!

I’m using Zen3 CPU.

X570D4U-2L2T BIOS Version 1.28.

How do I disable PCIe 4.0 and change to PCIe 3.0?
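Whichever BIOS option ends up controlling this, on a Linux host the negotiated link speed can be checked afterwards. A minimal sketch, assuming the standard sysfs attribute `current_link_speed` under `/sys/bus/pci/devices/<address>/`, which holds strings such as `8.0 GT/s PCIe`. The function names are made up for illustration.

```python
from pathlib import Path

# Transfer rate in GT/s mapped to PCIe generation
GEN_BY_GTS = {2.5: 1, 5.0: 2, 8.0: 3, 16.0: 4, 32.0: 5}


def pcie_gen(link_speed: str):
    """Map a sysfs string such as '8.0 GT/s PCIe' to a PCIe generation."""
    try:
        gts = float(link_speed.split()[0])
    except (ValueError, IndexError):
        return None  # e.g. 'Unknown' or an empty string
    return GEN_BY_GTS.get(gts)


def link_report(root="/sys/bus/pci/devices"):
    """Return {pci_address: generation or None} for devices exposing a link speed."""
    base = Path(root)
    report = {}
    if not base.is_dir():
        return report
    for dev in sorted(base.iterdir()):
        speed_file = dev / "current_link_speed"
        if not speed_file.is_file():
            continue
        try:
            report[dev.name] = pcie_gen(speed_file.read_text().strip())
        except OSError:
            continue  # attribute not readable for this device
    return report


if __name__ == "__main__":
    for address, gen in link_report().items():
        print(address, f"Gen{gen}" if gen else "unknown")
```

A device that was running at Gen4 should report 16.0 GT/s before the change and 8.0 GT/s once the slot is limited to PCIe 3.0.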

Guys, can anyone spot the motherboard in Europe? It disappeared from everywhere.

First of all you should say which of the 2 you are looking for, and secondly… no it didn’t:

FYI, official bios version 1.3 is up on the support site.