So I just joined a startup as their sysadmin, and my role is to build the server from scratch, both hardware and software. My experience is setting up a single-node Proxmox homelab with things like OMV, Emby, an Nginx reverse proxy, Guacamole, … so I'm a noob, but I'm learning.

The use case is hosting things like Git and a monitoring/database web server (think something like a farm management system with a bunch of sensors everywhere sending data to the server's database for logging). The VMs hosting these services will be Windows Server or just plain Windows 10/11 Pro/Home.

I have this setup in mind:

3x Proxmox/Ceph nodes in an HA cluster (identical specs).

Each node will have:

- Boot drives: Proxmox installed on ZFS, using 2x NVMe in a mirror
- Ceph config:
  - A pool of NVMe drives for VMs => Proxmox will use this for its VMs (mostly Windows VMs)
  - A pool of HDDs for storage (with either NVMe or SATA SSDs as WAL and cache) => CephFS with Samba to allow file access

I'm not too familiar with Ceph and haven't used it yet, but I'm thinking of 3x of each drive type per node for redundancy, on top of the redundancy from 3 replicas across the 3 nodes.
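A minimal sketch of what the 3-replica layout buys when whole nodes fail. It assumes the common Proxmox/Ceph pool settings of size=3 and min_size=2 with the CRUSH failure domain set to host; none of these values come from the post. In Ceph each drive is normally its own OSD rather than part of a local RAID, so node-level redundancy comes from the replicas. `pool_state` is a hypothetical helper that models the worst case for one placement group (PG).

```python
def pool_state(total_hosts: int, failed_hosts: int,
               size: int = 3, min_size: int = 2) -> str:
    """Worst-case state of one PG whose replicas sit on distinct hosts.

    Assumptions (illustrative only): CRUSH failure domain = host,
    size=3 and min_size=2, and every failed host held a replica of this PG.
    """
    if not 0 <= failed_hosts <= total_hosts:
        raise ValueError("failed_hosts must be between 0 and total_hosts")
    if size > total_hosts:
        raise ValueError("with failure domain 'host', size cannot exceed the host count")

    # Worst case: each failed host took one of this PG's replicas offline.
    online = size - min(failed_hosts, size)
    remaining_hosts = total_hosts - failed_hosts

    if online == 0:
        return "offline: no replica online"
    if online < min_size:
        return "blocked: I/O paused below min_size"
    if online < size:
        # A missing copy can only be rebuilt on a host that doesn't already hold one.
        if remaining_hosts >= size:
            return "degraded: re-replicating onto remaining hosts"
        return "degraded: cannot restore all replicas until a host returns"
    return "healthy"


if __name__ == "__main__":
    for failed in range(4):
        print(f"3 nodes, {failed} down -> {pool_state(3, failed)}")
    print(f"4 nodes, 1 down -> {pool_state(4, 1)}")
```

Under these assumptions, losing one of 3 nodes keeps the pool usable but degraded until that node comes back, because there is no spare host to rebuild the third copy on. With a 4th node, Ceph can heal on its own once the failed OSDs are marked out.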

3x 24-port 10GbE switches.

The network will be 10GbE, with bonding if I need extra speed.

I'm thinking of doing some type of bonding/link aggregation so that each node connects to all 3 switches.

I am a total noob when it comes to more complex networking; my only experience is connecting a bunch of switches together and making sure they work.

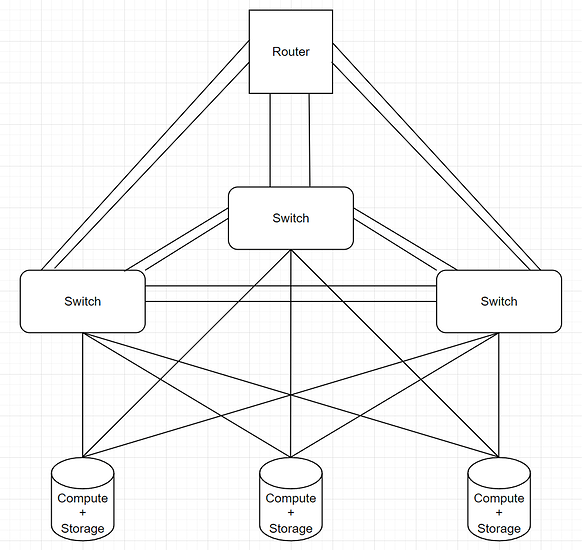

They will be connected like this:

The goal is to have no SPOF (single point of failure): any component can fail and the system will keep working, transparently to the end user, while I replace the failed component and the system heals/rebalances itself. I'm thinking of adding a second router/ISP, but the most important thing is the internal network.
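Both the Proxmox cluster (corosync) and the Ceph monitors need a strict majority of votes to keep running, which limits how many nodes can fail at once. A small sketch of that arithmetic, assuming one vote per node and one Ceph monitor per node; `quorum` and `tolerated_failures` are hypothetical helper names.

```python
def quorum(votes: int) -> int:
    """Strict majority of votes needed to stay quorate (one vote per node assumed)."""
    if votes < 1:
        raise ValueError("need at least one vote")
    return votes // 2 + 1


def tolerated_failures(votes: int) -> int:
    """How many voters can be lost while a majority still remains."""
    return votes - quorum(votes)


if __name__ == "__main__":
    for nodes in (2, 3, 4, 5):
        print(f"{nodes} nodes: quorum {quorum(nodes)}, "
              f"can lose {tolerated_failures(nodes)}")
```

With 3 nodes, one node can be down at a time; a 4th node does not raise that to two. Note also that Proxmox HA recovers a failed node's VMs by restarting them on a surviving node, so end users would typically see a short outage rather than a fully transparent failover.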

From my understanding, in my current setup, if the router fails, everything in the system will fail because the DHCP server will be down. If I replace the 3 switches with aggregated (Tier 3) switches, would that take care of it? I.e., if the router goes down, would only internet access be lost, with everything else in the system continuing to function normally?

Is there anything wrong with the way I've set this up, or any issues I might run into when expanding or upgrading in the future?

And what would be good switches for this task?

Hardware recommendations for the nodes would also be nice. VM usage should be about 2 TB and storage about 20 TB.
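A rough capacity sketch for the 2 TB VM pool and the 20 TB storage pool. It assumes 3 replicas on 3 nodes, 3 drives per pool in each node (the "3x of each drive" idea), and a self-imposed target of keeping OSDs at most 80% full, which is an assumption chosen to stay below Ceph's default nearfull warning at 85%. `PoolPlan` and `size_pool` are hypothetical helpers, and the estimate ignores filesystem overhead and the CephFS metadata pool.

```python
from dataclasses import dataclass


@dataclass
class PoolPlan:
    name: str
    usable_tb: float      # data you expect to store (figures from the post)
    drives_per_node: int  # OSDs for this pool in each node (assumed 3)


def size_pool(plan: PoolPlan, nodes: int = 3, replicas: int = 3,
              max_fill: float = 0.80) -> dict:
    """Rough raw-capacity estimate for one replicated Ceph pool (a sketch)."""
    if replicas > nodes:
        raise ValueError("replicas cannot exceed nodes with a host failure domain")
    if plan.drives_per_node < 2:
        raise ValueError("need at least 2 drives per node to absorb a drive failure")
    if not 0 < max_fill <= 1:
        raise ValueError("max_fill must be in (0, 1]")

    # Every TB stored is written `replicas` times across the cluster.
    raw_total_tb = plan.usable_tb * replicas / max_fill
    # Data each node holds, assuming it is spread evenly across nodes.
    per_node_data_tb = plan.usable_tb * replicas / nodes
    # Conservative: a failed drive's data is rebuilt on the same node's
    # remaining drives (forced when nodes == replicas), which must stay under max_fill.
    min_drive_tb = per_node_data_tb / (plan.drives_per_node - 1) / max_fill
    return {
        "raw_total_tb": raw_total_tb,
        "raw_per_node_tb": raw_total_tb / nodes,
        "min_drive_tb": min_drive_tb,
    }


if __name__ == "__main__":
    for plan in (PoolPlan("VM pool (NVMe)", 2, 3),
                 PoolPlan("Storage pool (HDD)", 20, 3)):
        est = size_pool(plan)
        print(f"{plan.name}: raw total {est['raw_total_tb']:.1f} TB, "
              f"raw per node {est['raw_per_node_tb']:.1f} TB, "
              f"each drive >= {est['min_drive_tb']:.2f} TB")
```

In this sketch the per-drive minimum ends up being the binding constraint: with 3 nodes, a failed drive's data can only be rebuilt on the other drives in the same node, so each drive must be larger than an even split of the per-node total would suggest.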

Thanks so much everyone!