Hi!

I’m finally able to build my fancy new big rig. I thought it’d be a great idea to have ONE machine that runs everything, virtualised. A mobo with a lot of PCIe sockets, gen4, with bifurcation, and stuff.

Current spec:

- ASRockRack ROMED8-2T mobo

- AMD Epyc 7402P, 24 core, 2.8GHz (3.35GHz max)

- plenty of octa-channel ECC-RAM, 3200

- Zotac Trinity RTX 3090

- ASRock RX 5500 XT Challenger ITX

- ASMedia ASM1142 USB 3.0 card, SilverStone ECU05

- NEC Renesas uPD720201 USB 3.0 card, SilverStone EC04-E

- ATEN US234-AT USB 3.0 sharing switch - 1 set of KB+Mouse, switching between 2 PCs

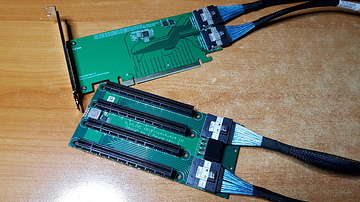

- PCIe bifurcation card, gen3 x16 -> x4x4x4x4, via 2 SlimSAS 8i SFF-8654 cables

- 1600 Watts no-worries kind of power supply, modular, plenty of options

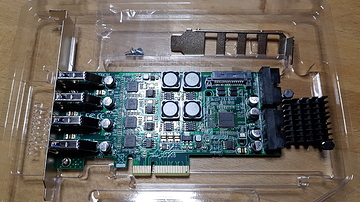

- a bunch of extra goodies, a Dell PERC H310/LSI2008 8-port HBA, plenty of storage, fast SATA SSDs, spinning rust bulkers, NVMe R/W caches for the ZFS pools

Issues:

- I think my mobo's UEFI (BIOS) setup doesn't do a good job with the PCIe link speed setting: it lets me choose, but the options are [Auto, gen3, gen2, gen1]! One of the selling points was PCIe gen4 support, and I'm not seeing any of it. I'm not quite disappointed yet, but I'm one phone call away from invoking my fancy European consumer protections. Comments? Which memos did I miss? I'm not a big SI (System Integrator), I'm just bumbling around in my home lab.

- Passing through the ASMedia USB card (spec 6) (edit: to the Windows 10 gaming VM) results in recurring HID drop-outs. I guess the USB controller resets for a second, then continues working (?). Really annoying when you're trying to play a first-person game. Update / solved: I couldn't reproduce this issue with the NEC / Renesas USB card (spec 7). I'll try to use the ASMedia card when I set up my Linux daily-driver VM.

- I've struggled with the IPMI "KVM" (not the virtualisation KVM, the actual keyboard/video/mouse remote control). There are a bunch of options in UEFI/BIOS setup; I think setting "Video OptionROM" to "Legacy only" fixed the undesired behavior of using some GPU other than the built-in ASPEED BMC's. (#TODO: boot into setup and edit this post with the exact settings.) Either way, I figured out how to make POST and OS boot-up ignore both GPUs (3090 & 5500).

- My Windows VM feels kinda sluggish! I expected the upgrade from a Haswell i7-4790 to a fancy Zen 2 Epyc to show a perceivable performance gain. I mean, the IPC gains alone should've made up for the lower core clocks! Which memo did I miss? Is it my CPU/thread-pinning config? I've allocated the last 3 CCXs to that VM: 18 vCPUs, i.e. 9 cores plus their SMT siblings, taking core/thread siblings into account. (My 24-core Epyc 7402P has 3 cores per CCX, 6 per CCD; `virsh capabilities` told me who the siblings are.)

- Unigine Superposition benchmarked at 11005, which is at the bottom of the 3090 results, surpassed even by 3080s. I'm running everything stock, though. That's a sample size of 1, and I didn't record much or tune much. No water cooling yet.
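On the gen4 question: before calling anyone, it might be worth checking what the links actually negotiated. Here's a minimal Linux-host sketch in Python, assuming the standard sysfs attributes `current_link_speed`, `max_link_speed`, `current_link_width` and `max_link_width` under `/sys/bus/pci/devices/` (`lspci -vv` shows the same thing as `LnkCap`/`LnkSta`). The GT/s-to-generation table is the usual 2.5/5/8/16/32 GT/s mapping; the function names are made up for this example. GPUs often drop to a lower link speed when idle, so a "below device max" flag on the 3090 only means something if it also shows up under load.

```python
from pathlib import Path
from typing import List, Optional, Tuple

# Per-lane transfer rate in GT/s -> PCIe generation
GT_S_TO_GEN = {2.5: 1, 5.0: 2, 8.0: 3, 16.0: 4, 32.0: 5}


def speed_to_gen(speed: str) -> Optional[int]:
    """Map a sysfs speed string like '16.0 GT/s PCIe' or '8 GT/s' to a PCIe generation."""
    try:
        return GT_S_TO_GEN.get(float(speed.split()[0]))
    except (IndexError, ValueError):
        return None  # e.g. 'Unknown' or an empty file


def read_attr(dev: Path, name: str) -> str:
    """Read one sysfs attribute; return '' if it is missing or unreadable."""
    try:
        return (dev / name).read_text().strip()
    except OSError:
        return ""


def link_report(
    sysfs_root: str = "/sys/bus/pci/devices",
) -> List[Tuple[str, Optional[int], Optional[int], str, str]]:
    """Return (address, current gen, max gen, current width, max width) per device with link info."""
    root = Path(sysfs_root)
    if not root.is_dir():
        return []
    rows = []
    for dev in sorted(root.iterdir()):
        cur = read_attr(dev, "current_link_speed")
        mx = read_attr(dev, "max_link_speed")
        if not cur or not mx:
            continue  # device exposes no PCIe link attributes
        rows.append((
            dev.name,
            speed_to_gen(cur),
            speed_to_gen(mx),
            read_attr(dev, "current_link_width"),
            read_attr(dev, "max_link_width"),
        ))
    return rows


def fmt_gen(gen: Optional[int]) -> str:
    return f"gen{gen}" if gen is not None else "gen?"


if __name__ == "__main__":
    for addr, cur, mx, cur_w, max_w in link_report():
        note = "" if cur == mx else "  <-- below device max"
        print(f"{addr}  {fmt_gen(cur)} x{cur_w}  (max {fmt_gen(mx)} x{max_w}){note}")
```

Since `max_link_speed` is what the device itself supports, a card that reports gen4 max but gen3 current (under load) would point at the slot, riser or firmware setting rather than the card.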
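For the pinning question, here's a rough Python sketch, not a tested tool, that turns the `siblings` attributes from `virsh capabilities` into libvirt `<vcpupin>` lines, keeping each physical core's two SMT threads on consecutive vCPUs. Assumptions, all mine: the capabilities XML lists logical CPUs under `host/topology/cells/cell/cpus/cpu`; "the last 9 cores in logical-CPU order" really are the last 3 CCXs (worth double-checking against the L3 cache grouping); and the guest `<topology>` uses `threads='2'`, so QEMU presents consecutive vCPUs as siblings. The helper names (`parse_cpulist`, `cores_from_capabilities`, `vcpupin_lines`) are hypothetical.

```python
import subprocess
import xml.etree.ElementTree as ET
from typing import List, Set, Tuple


def parse_cpulist(text: str) -> List[int]:
    """Expand a cpuset-style string such as '0,24' or '3-5,27-29' into CPU ids."""
    cpus: List[int] = []
    for part in text.split(","):
        part = part.strip()
        if not part:
            continue
        if "-" in part:
            lo, hi = part.split("-")
            cpus.extend(range(int(lo), int(hi) + 1))
        else:
            cpus.append(int(part))
    return cpus


def cores_from_capabilities(xml_text: str) -> List[Tuple[int, ...]]:
    """One tuple of SMT sibling CPU ids per physical core, ordered by lowest CPU id."""
    root = ET.fromstring(xml_text)
    cores: Set[Tuple[int, ...]] = set()
    for cpu in root.findall("./host/topology/cells/cell/cpus/cpu"):
        siblings = cpu.get("siblings", cpu.get("id"))
        cores.add(tuple(sorted(parse_cpulist(siblings))))
    return sorted(cores)


def vcpupin_lines(cores: List[Tuple[int, ...]]) -> List[str]:
    """Pin vCPUs 0, 1, 2, ... to the given host threads, one core's siblings after another."""
    lines = []
    vcpu = 0
    for core in cores:
        for host_cpu in core:
            lines.append(f"<vcpupin vcpu='{vcpu}' cpuset='{host_cpu}'/>")
            vcpu += 1
    return lines


if __name__ == "__main__":
    caps = subprocess.run(
        ["virsh", "capabilities"], capture_output=True, text=True, check=True
    ).stdout
    cores = cores_from_capabilities(caps)
    # Hypothetical selection: the last 9 physical cores (18 threads), as in the post.
    for line in vcpupin_lines(cores[-9:]):
        print(line)
```

The printed lines would go inside `<cputune>` in the domain XML (`virsh edit <vm>`); keeping the emulator threads off those cores with `<emulatorpin>` is another knob worth a look.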

I chose Epyc because that's how I get A LOT of PCIe sockets for all my VMs. Even though it would mean losing half of the RAM channels, I'd prefer a high-clocking Threadripper, but there aren't any mobos with enough PCIe sockets. It came down to 4 vs 7: 4 sockets (degraded, some only x8) versus 7 x16 gen4 sockets. Bifurcation is a big deal as well. If you really need to bifurcate the frell out of all of your x16 sockets, no worries!

That's it for now. It's a WIP (work in progress). I might update/edit/reply later. I'm not done yet, I'm just a bit tired.

Happy new beer!

edit: SOO much storage connectivity: 3 SlimSAS ports, each capable of SATA3 x4 (or PCIe gen4 x4). I wouldn't even need the Dell PERC H310 anymore.
