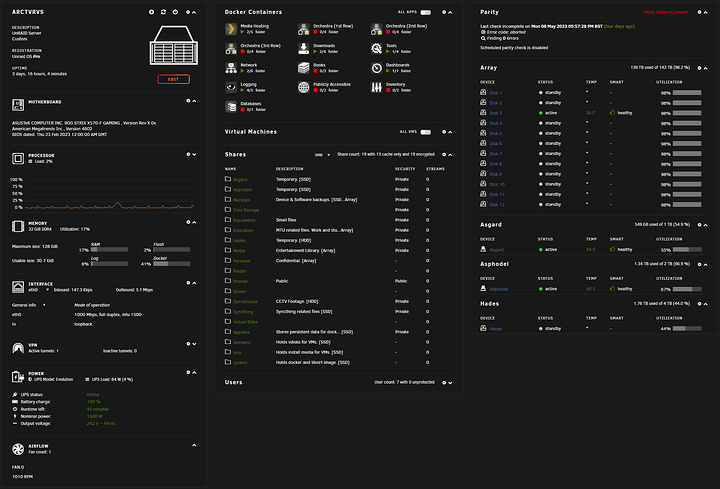

I’ve been focused on power efficiency when it comes to my home server. It’s been the primary reason for doing a custom build instead of using the Dell R410s I have lying around, and it’s also the reason I use Unraid. This has been 3 years in the making, so here it goes.

The second reason for using Unraid specifically was to allow me to slowly build up a set of disks over time that I could then move into a more performant TrueNAS build (should I need to).

--------------Parts--------------

Motherboard: ASUS X570-F

CPU: 5700G

RAM: Corsair 2x16GB 3600MHz

Storage Flash: 1TB NVME M.2, 2TB NVME M.2

Storage Disk: 3x 10TB, 7x 12TB, 2x 14TB

Storage Scratch: 4TB

The UPS provides power to a MikroTik RB4011, ONT, this server, and all the LED lighting in the room. With the lights off, the idle power draw is a measly 64-80W. For reference, the PoE switch (Netgear - ProSafe GS728TP) that I recently decommissioned ran with an idle draw of 50W with nothing plugged in.

Crazy Talk 01:

Now for the crazy talk: there are no parity disks. Yes, I said it. For the array to be written to while having parity protection, all drives must be spun up. The drive being written to & the parity disk perform a write operation, and all other drives perform a read operation. That’s a significant amount of power & wear. Instead I keep off-site backups of the important data. (Keep in mind I pay 34c/kWh and these systems are always on.) Over the course of a day there aren’t many spin-ups, as I’ve designed the system to run cache-only for the frequently used shares (such as Syncthing, qBittorrent etc).
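To make the write path described above concrete, here is a minimal sketch of what is usually called a reconstruct write. It assumes simple single XOR parity, and the function names are hypothetical, not Unraid's actual implementation. The point is just to show why every other data disk has to be read (and therefore spun up) for one write.

```python
from functools import reduce


def xor_blocks(blocks):
    """XOR equal-length byte blocks together, byte by byte."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def reconstruct_write(data_disks, target, new_block):
    """Write new_block to data_disks[target] and recompute parity.

    Returns (parity, disks_read, disks_written). Every data disk except
    the target has to be read so parity can be rebuilt from scratch.
    """
    data_disks[target] = new_block
    disks_read = [i for i in range(len(data_disks)) if i != target]
    parity = xor_blocks(data_disks)
    return parity, disks_read, [target, "parity"]


# Three tiny stand-in "disks" holding two bytes each (illustrative data only).
disks = [b"\x01\x02", b"\x04\x08", b"\x10\x20"]
parity, read, written = reconstruct_write(disks, 1, b"\xff\x00")
print(read)     # [0, 2]
print(written)  # [1, 'parity']
```

With 12 data disks in the array, the same pattern would mean 11 disks read and 2 written for a single write, which is the spin-up cost being avoided here.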

Crazy Talk 02:

I don’t transcode media. This removes the need for a GPU and its associated power usage. It also allows for much better image quality on client devices. I could spend hours talking about the ins and outs of this, but I’ll summarise it as “I’m streaming pre-computed client files which are going to play without issue 99.8% of the time, instead of transcoding BD-Rips on the fly.” I create and store two versions of said ‘client file’ at different bitrates. Should the larger one fail to play, there’s a fallback for those rare cases.
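As an illustration of the "two versions at different bitrates" idea, the sketch below builds (but does not run) one ffmpeg command per target bitrate. The post doesn't say which tool, codecs, or bitrates are actually used, so ffmpeg, libx264, AAC, the 8000/3000 kbps targets, and the output naming are all assumptions made for the example.

```python
from pathlib import Path


def build_encode_commands(source, bitrates_kbps=(8000, 3000)):
    """Return one ffmpeg argument list per target video bitrate.

    Nothing is executed here; pass a list to subprocess.run() to encode.
    The bitrates and codec choices are placeholders, not recommendations.
    """
    src = Path(source)
    commands = []
    for kbps in bitrates_kbps:
        output = src.with_name(f"{src.stem}.{kbps}k.mp4")
        commands.append([
            "ffmpeg", "-i", str(src),
            "-c:v", "libx264", "-b:v", f"{kbps}k",  # video codec and bitrate
            "-c:a", "aac", "-b:a", "192k",          # audio codec and bitrate
            str(output),
        ])
    return commands


for cmd in build_encode_commands("movie.mkv"):
    print(" ".join(cmd))
```

Each source file is encoded once per bitrate ahead of time, and the client simply picks whichever stored file it can direct play.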

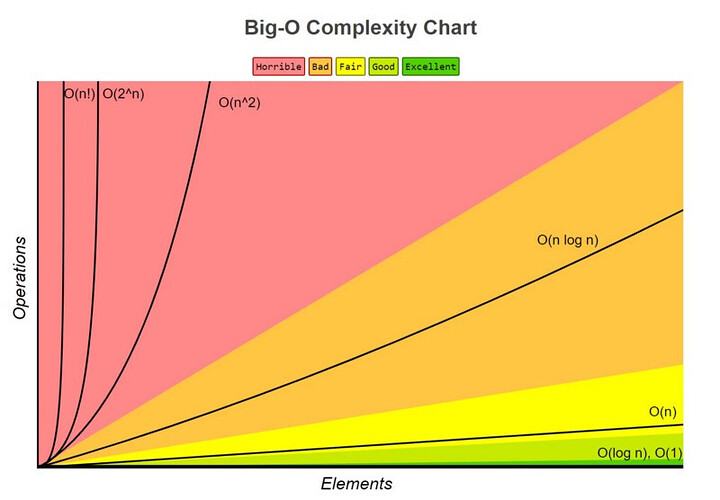

Somehow the Plex community has become wrapped up in the ‘Transcode War’. It makes sense until you take a look at it from a programming mindset (big O notation). In terms of storage required, both approaches (transcoding from a high-bitrate source & storing multiple versions) round out to O(n), with ‘n’ being the same for both. However, when it comes to power, on-the-fly transcoding makes little sense. The energy it would take to transcode once and keep said transcoded file ends up being spent again on every play, ad infinitum, ad nauseam. Not only that, but there are other issues, including added latency, decreased quality, and often increased bandwidth requirements. If I were to compare the two using big O in this respect, I would give hosting multiple versions O(1) and transcoding O(n log n).
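A rough way to put numbers on the energy argument: per title, pre-encoding is a one-off cost, while on-the-fly transcoding pays again on every play. The model below is deliberately simplified (it treats each transcoded play as costing the same, so the on-the-fly total grows linearly with plays), and the watt-hour figures are made up purely for illustration.

```python
import math


def pre_encode_energy_wh(encode_wh, versions=2):
    """One-off energy to create every stored version of a title."""
    return encode_wh * versions


def on_the_fly_energy_wh(transcode_wh, plays):
    """Cumulative energy when every play triggers a fresh transcode."""
    return transcode_wh * plays


def break_even_plays(encode_wh, transcode_wh, versions=2):
    """Smallest number of plays at which pre-encoding uses no more energy."""
    return math.ceil(encode_wh * versions / transcode_wh)


# Hypothetical figures: 50 Wh per stored version, 20 Wh per transcoded play.
print(pre_encode_energy_wh(50))      # 100
print(on_the_fly_energy_wh(20, 30))  # 600
print(break_even_plays(50, 20))      # 5
```

Past the break-even point, every additional play widens the gap in favour of the stored versions.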

Crazy Talk 03:

Performance is a big part of this too. I’m normally away on a work trip and most of my family are spread around the world, so about 90% of the streaming is done over the WAN. This limits it to 200 Mbps (25 MB/s). The idea of having a highly performant ZFS pool for this situation is nonsensical. (Before you crucify me, I do run TrueNAS with Z-pools, just not for this.) When it comes to starting video playback, skipping through the video, and even buffering the video, direct play beats transcoding by far.
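The 200 Mbps figure converts to 25 MB/s by dividing by 8, and the same arithmetic gives a feel for how many simultaneous remote streams the link can carry. The 20 Mbps per-stream bitrate below is a hypothetical value, not one taken from this setup.

```python
def mbps_to_mbytes_per_s(mbps):
    """Convert megabits per second to megabytes per second."""
    return mbps / 8


def max_streams(link_mbps, stream_mbps):
    """Whole number of streams that fit in the link, ignoring overhead."""
    return int(link_mbps // stream_mbps)


print(mbps_to_mbytes_per_s(200))  # 25.0
print(max_streams(200, 20))       # 10
```

Either way, the WAN link saturates long before a single spinning disk does, which is the reason a fast pool buys nothing here.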

I think as communities we’ve become wrapped up in competing with each other rather than actually finding elegant/appropriate solutions to our hurdles. An honourable mention is Dropbox, who improved their performance by removing SSDs and writing to SMR HDDs.

Increasing Magic Pocket write throughput by removing our SSD cache disks - Dropbox.

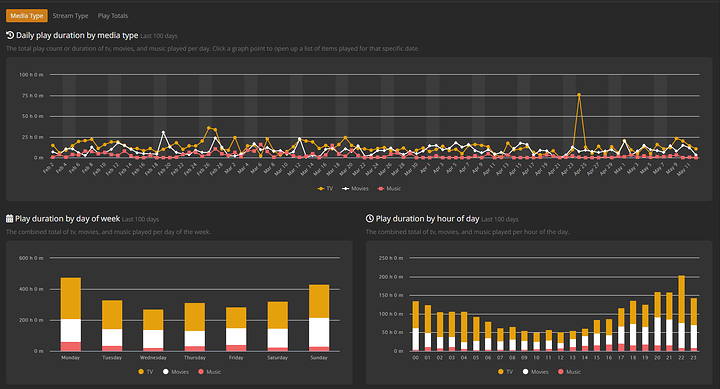

You might rightly assume that this system must not be that functional. I beg to differ. The main use for this guy is Plex and VPN hosting and here are some usage stats.

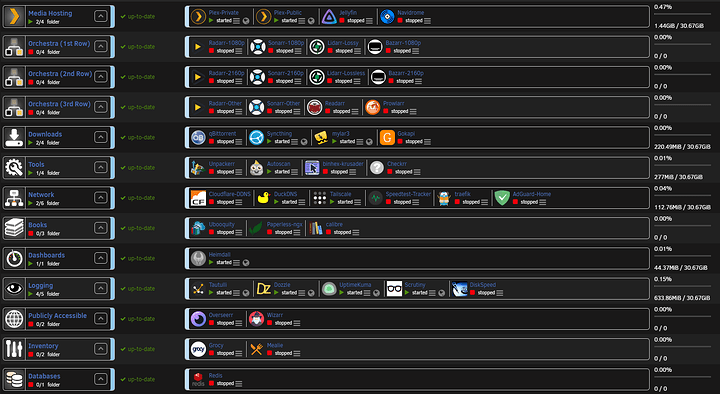

I got tired of manually running the whole show as far as file management so I’ve got quite a few containers handling my very stringent media requirements.

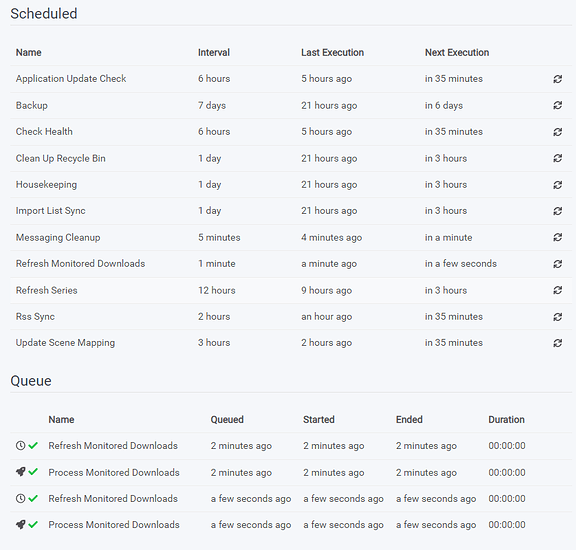

I don’t leave these containers running because they cause unnecessary disk spin-ups while they perform their tasks. Instead I run them at the end of the week while I’m messing with it anyway. There are a million and one other little details as to how I’ve optimised this system (Curve Optimiser all-core undervolt of -13), but this rant has gone on long enough.

Most of the time there’s someone watching so it’s at 80W. For those quiet nights it drops down to 64W.

RB4011 10W, Server 54W
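For a sense of what those wattages mean on the bill, here is a quick estimate using the 34c/kWh rate mentioned earlier. It assumes a constant draw all year, which is a simplification since the real figure moves between 64W and 80W.

```python
def annual_cost(watts, price_per_kwh=0.34, hours=24 * 365):
    """Yearly running cost for a constant power draw."""
    kwh = watts * hours / 1000
    return kwh * price_per_kwh


for label, watts in [("quiet", 64), ("streaming", 80), ("old PoE switch", 50)]:
    print(f"{label}: {watts}W -> {annual_cost(watts):.2f} per year")
# quiet: 64W -> 190.62 per year
# streaming: 80W -> 238.27 per year
# old PoE switch: 50W -> 148.92 per year
```

At this rate every watt saved is worth roughly 3 per year (0.34 × 8.76 kWh), so the idle switch alone cost most of what the whole setup costs now.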

I hope you found this post interesting.