I have some files that are super slow to read (~20MB/s) off my two NVMe SSDs. Both of my drives have this issue, and both are 4TB Corsair MP600 Pro XT.

Once those same files were fully copied, I transferred the copies back and forth and got ~5GB/s. If the files were larger, I might even hit the rated maximum read/write speed of 7GB/s.

Ideas why this is happening

- The data was written as QLC while the drive was filling up. That would’ve happened when I was moving files around before eventually putting them on my NAS. Normally I only keep 400-600GB on these SSDs so they benefit from their SLC NAND flash configuration.

- The files were written to the drive randomly rather than sequentially, so reading them back means random reads rather than sequential reads. I thought this didn’t matter for SSDs, but I know “random read” is a legitimate SSD benchmark stat, although I’m not sure what it measures.
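To make the sequential-versus-random distinction concrete: a “random read” test reads small blocks (commonly 4 KiB) at scattered offsets instead of walking through the data in order. The sketch below is a minimal illustration, assuming Python 3.9+: it reads the same file twice, once in order and once with the 4 KiB block offsets shuffled, and prints MB/s for each. It issues one request at a time (queue depth 1), so it won’t match benchmark numbers that use deep queues, and a repeat run may be served from the OS file cache rather than the SSD. It also only shuffles *file* offsets; how those map to physical NAND is up to the drive’s controller.

```python
import os
import random
import sys
import time

BLOCK = 4096  # 4 KiB, a block size commonly used by random-read benchmarks


def read_pattern(path: str, shuffle: bool, seed: int = 0) -> tuple[int, float]:
    """Read every 4 KiB block of `path` once, in file order or in shuffled order.

    Returns (bytes_read, seconds). Both modes read exactly the same data;
    only the order of the requests differs.
    """
    size = os.path.getsize(path)
    offsets = list(range(0, size, BLOCK))
    if shuffle:
        random.Random(seed).shuffle(offsets)
    total = 0
    start = time.perf_counter()
    with open(path, "rb", buffering=0) as f:  # unbuffered: one OS read per block
        for off in offsets:
            f.seek(off)
            total += len(f.read(BLOCK))
    return total, time.perf_counter() - start


if __name__ == "__main__" and len(sys.argv) > 1:
    for label, shuffle in (("sequential", False), ("random", True)):
        nbytes, secs = read_pattern(sys.argv[1], shuffle)
        print(f"{label:>10}: {nbytes / max(secs, 1e-9) / 1e6:8.1f} MB/s")
```

Usage (hypothetical script name): `python read_pattern.py D:\path\to\slow_file.bin`. A large gap between the two lines is what the “random read” stat is getting at.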

I found someone else’s post with a similar issue here:

Why I’m posting

- How can I identify which files are fragmented in a way that requires reading them with random reads?

- How can I identify which files are written in QLC mode on the drive?

- How can I fix these files in either case?

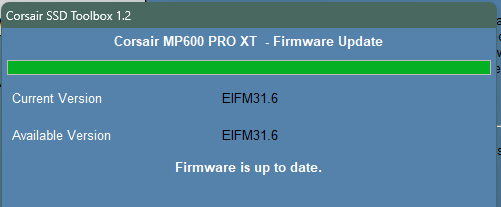

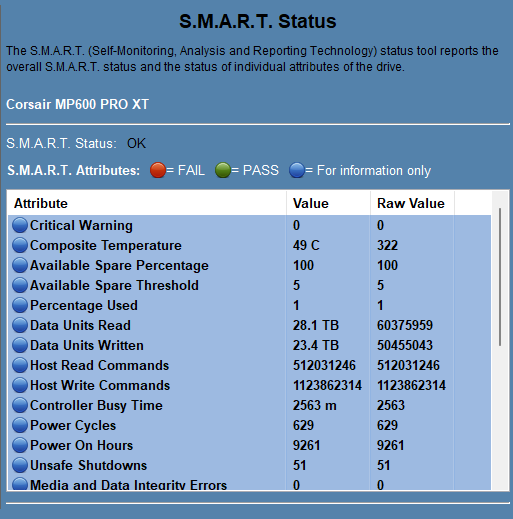

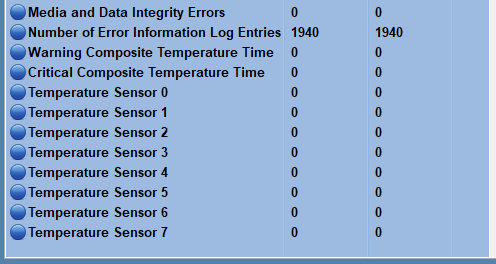

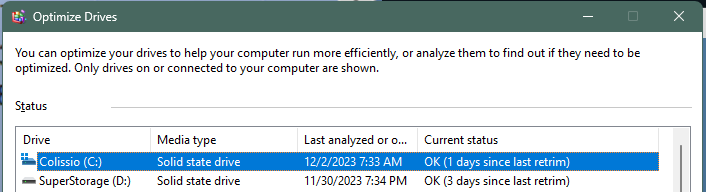

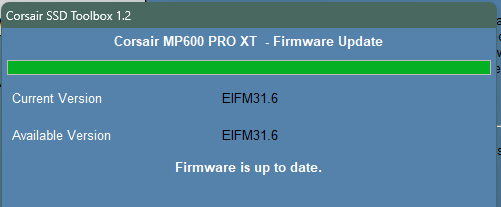

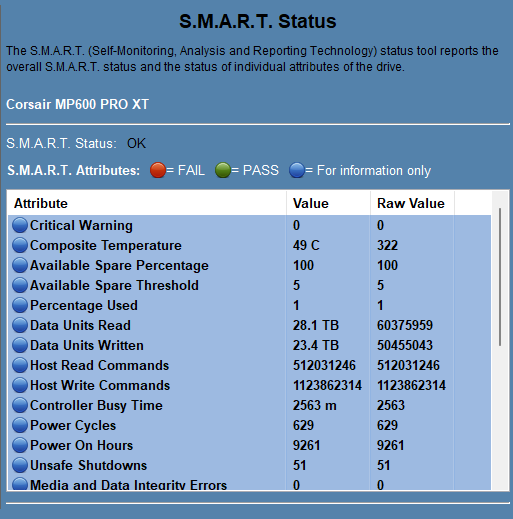

A few sanity checks

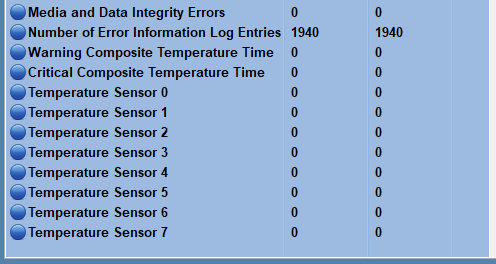

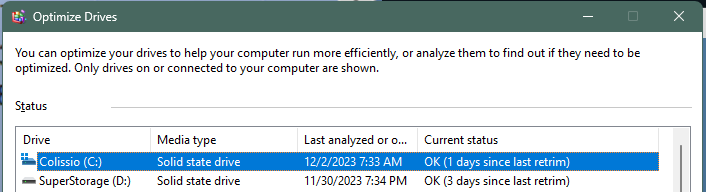

Both drives share these details, although I’ve owned this C: drive longer, so its numbers are higher.

chkdsk didn’t show any issues on either drive.

How long ago were the specific files that are slow to read written to the SSD?

I looked up these drives: Corsair MP600 PRO XT 4 TB Specs | TechPowerUp SSD Database

They’re TLC, not QLC. They do have SLC, but it’s a 480GB cache, not part of the storage itself. So all data eventually gets written to TLC NAND. They also have 4GB of DRAM cache.

At some point the SLC cache will fill. When it does, writes should drop to about 4GB/s while the drive simultaneously copies data out of the SLC cache. If it can’t finish that while writes are still coming in, you’re limited to about 1.6GB/s.
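A back-of-the-envelope sketch of how those write tiers add up, assuming a simple piecewise model where each phase runs at a fixed speed until its capacity is used up. The ~7GB/s in-cache speed is the rated figure quoted at the top of the thread, the 480GB, 4GB/s and 1.6GB/s figures come from the post above, and `FLUSH_BEHIND_GB` (how much more data it takes before flushing falls behind) is a made-up placeholder. Real drives don’t switch tiers this cleanly, and none of it applies to reads.

```python
def write_time_s(total_gb: float, phases: list[tuple[float, float]]) -> float:
    """Seconds to write `total_gb` given ordered (phase_size_gb, speed_gb_per_s) phases.

    Use float("inf") as the size of the last phase so it absorbs whatever is left.
    """
    remaining = total_gb
    seconds = 0.0
    for size_gb, speed in phases:
        chunk = min(remaining, size_gb)
        seconds += chunk / speed
        remaining -= chunk
        if remaining <= 0:
            break
    if remaining > 0:
        raise ValueError("phases do not cover total_gb; make the last size inf")
    return seconds


# Hypothetical tiers: ~7 GB/s into the 480 GB SLC cache, ~4 GB/s right after it
# fills, then ~1.6 GB/s once background flushing falls behind.
FLUSH_BEHIND_GB = 200  # made-up placeholder, not a published figure
PHASES = [(480, 7.0), (FLUSH_BEHIND_GB, 4.0), (float("inf"), 1.6)]

for gb in (100, 480, 1000):
    t = write_time_s(gb, PHASES)
    print(f"{gb:>5} GB -> {t:7.1f} s  (avg {gb / t:.2f} GB/s)")
```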

None of this concerns reads, though. I can’t imagine why those would be so slow unless the data was written to the drive in a way that makes it hard to read back.

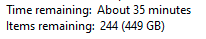

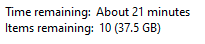

Here are some screenshots from copies I ran yesterday.

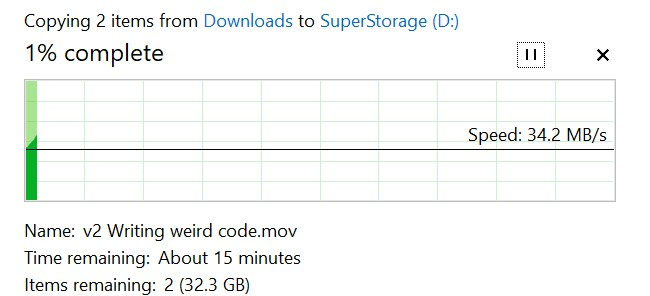

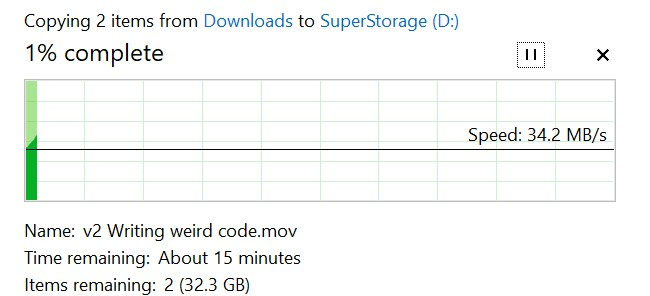

From C: to my NAS (25Gb fiber)

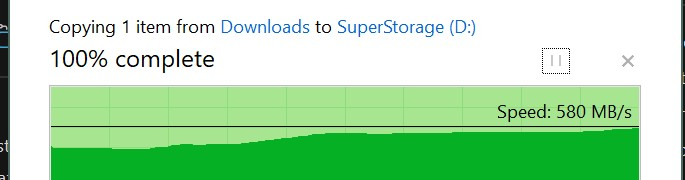

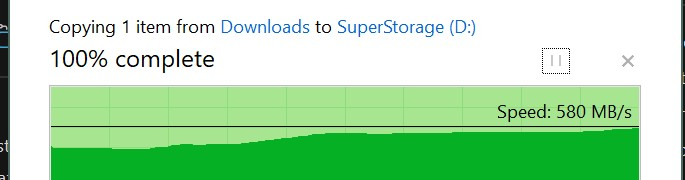

From C: to D: (same drive)

Almost all consumer SSDs will see read performance on specific cells fade over time as the charge decays out of the cells (unless a cell is rewritten, which, despite the commonly held belief, does not happen just from having the SSD plugged into power). It’s actually a lot more complicated than I make it sound, with voltage offset table routines and extra ECC that the controller brings in to read “stubborn” cells. This slow read speed can even bleed over into write speed if flash pages need to be coalesced before a write can happen.
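As a rough illustration of why decayed cells read slowly, here is a toy model of read-retry, not real firmware: the controller reads at the default threshold, and if ECC can’t correct the page it steps through a table of voltage offsets, re-reading each time. Every retry costs another page read, so latency grows with how far the cell’s charge has drifted. The page read time, offset table, ECC margin, 16 KiB page size and the 3x fallback penalty are all invented numbers, and real drives spread reads across many dies in parallel.

```python
# Toy model of SSD read-retry. Every number here is invented for illustration.
PAGE_READ_US = 60.0                               # hypothetical cost of one page read, microseconds
PAGE_BYTES = 16 * 1024                            # hypothetical NAND page size
RETRY_OFFSETS_MV = [0, -20, -40, -60, -80, -100]  # hypothetical offset table; 0 = default threshold
FALLBACK_PENALTY = 3                              # hypothetical cost multiplier for heavier ECC


def read_page_us(drift_mv: float, ecc_margin_mv: float = 25.0) -> tuple[float, int]:
    """Return (latency_us, attempts) for a page whose threshold has drifted by drift_mv.

    In this toy model a read attempt succeeds when the offset it uses is within
    ecc_margin_mv of the drifted threshold, i.e. ECC can correct the remaining errors.
    """
    for attempt, offset in enumerate(RETRY_OFFSETS_MV, start=1):
        if abs(drift_mv - offset) <= ecc_margin_mv:
            return attempt * PAGE_READ_US, attempt
    # Offset table exhausted: stand-in for the slower "extra ECC" path.
    attempts = len(RETRY_OFFSETS_MV)
    return attempts * PAGE_READ_US * FALLBACK_PENALTY, attempts


for drift in (0, -30, -90, -200):  # in this model, charge loss is a negative drift
    us, n = read_page_us(drift)
    # bytes per microsecond equals MB/s (1 B/us = 1e6 B/s)
    print(f"drift {drift:>5} mV: {n} attempt(s), {us:6.0f} us/page, ~{PAGE_BYTES / us:4.0f} MB/s")
```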

Most TLC SSDs will hold out for at least a year before this read speed degradation becomes noticeable, so I don’t think that is the entire story here. NAND wear will also make the cells decay faster, but your total writes are only 23.4TB.

How fast do files of similar size and number transfer if they’re only a couple of days old? By then they should have been written out of the SLC cache, so they would give a good speed baseline.
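One way to get that baseline across many files at once is a small script that times a full sequential read of each large file and groups the results by age. This is a sketch under several assumptions: the 100 MiB minimum size and 7-day cutoff are arbitrary, modification time is used as a proxy for when the data was written (a copy can preserve the original mtime, so on Windows the file’s creation time on this volume may be a better signal), and files read recently may come from the OS cache instead of the SSD, so results are most meaningful right after a reboot.

```python
import os
import statistics
import sys
import time

CHUNK = 8 * 1024 * 1024  # read in 8 MiB chunks to approximate a sequential copy


def read_mb_per_s(path: str) -> float:
    """Time one full sequential read of `path` and return MB/s."""
    size = 0
    start = time.perf_counter()
    with open(path, "rb") as f:
        while chunk := f.read(CHUNK):
            size += len(chunk)
    elapsed = time.perf_counter() - start
    return size / elapsed / 1e6 if elapsed > 0 else float("inf")


def scan(root: str, min_bytes: int = 100 * 1024 * 1024,
         cutoff_days: float = 7.0) -> dict[str, list[float]]:
    """Group read speeds of files >= min_bytes under root into 'old' / 'recent' by mtime."""
    now = time.time()
    groups: dict[str, list[float]] = {"old": [], "recent": []}
    for dirpath, _dirs, files in os.walk(root):
        for name in files:
            path = os.path.join(dirpath, name)
            try:
                st = os.stat(path)
                if st.st_size < min_bytes:
                    continue
                speed = read_mb_per_s(path)
            except OSError:
                continue  # skip files we can't open (permissions, in use, etc.)
            age_days = (now - st.st_mtime) / 86400
            groups["old" if age_days > cutoff_days else "recent"].append(speed)
            print(f"{speed:9.1f} MB/s  {age_days:7.1f} days  {path}")
    return groups


if __name__ == "__main__" and len(sys.argv) > 1:
    for key, speeds in scan(sys.argv[1]).items():
        if speeds:
            print(f"{key}: {len(speeds)} files, median {statistics.median(speeds):.1f} MB/s")
```

Usage (hypothetical script name): `python scan_speeds.py D:\Data`. If the “old” median is far below the “recent” median, that fits the cell-decay theory; similar medians would point somewhere else.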


2 large files from last year:

Largest file I have from last week (1.6GB):

Re-ran this multiple times.

Hmmm… I can’t think of anything this could be other than cell decay; it just seems really accelerated.

This isn’t exactly a fix, but if you run hdsentinel’s “surface test” in read+write+read mode on the drive in question, it will build a heat map of the SSD by read/write performance while refreshing all the cells on the SSD. The fix is temporary insofar as the drive will eventually slow down again, but it should bring the SSD’s performance back to like-new, and if you have problems with the SSD later, you can run the utility again and compare the newly generated heat map. If the exact same areas show up as slow, it would be tempting to conclude there’s a manufacturing defect in certain regions of the NAND, but it’s likely the SSD controller’s FTL is reassigning LBAs to seemingly random cells, which muddies that analysis.

I’m not exactly endorsing hdsentinel, but I know it’s one of the programs that can actually do a surface test on Windows. They should offer a trial version you could run, or alternatively I think it’s included on some boot CDs.
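A surface test refreshes the whole drive. As a much narrower, file-level take on the same idea, the sketch below rewrites individual files so their data is freshly written: it copies each file to a temporary file in the same folder, flushes it to disk, and atomically replaces the original with `os.replace`. This is an assumption-laden sketch, not a Hard Disk Sentinel feature: it needs free space for a second copy, the file must not be open elsewhere, and per the Python docs `shutil.copy2` does not copy owners, ACLs or alternate data streams on Windows. Keep a backup, and re-measure read speed later (ideally after a reboot) rather than immediately, since the fresh copy will still be in the OS cache.

```python
import os
import shutil
import sys
import tempfile


def rewrite_file(path: str) -> None:
    """Rewrite `path` in place so its data is freshly written to the drive.

    Copies to a temp file in the same directory, flushes it to disk, then
    atomically replaces the original. shutil.copy2 also copies timestamps,
    so the file's modification time is unchanged.
    """
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp = tempfile.mkstemp(dir=directory, prefix=".rewrite-")
    os.close(fd)
    try:
        shutil.copy2(path, tmp)
        with open(tmp, "rb+") as f:
            os.fsync(f.fileno())  # make sure the new copy has reached the drive
        os.replace(tmp, path)     # atomic rename on the same volume
    except BaseException:
        if os.path.exists(tmp):
            os.remove(tmp)        # don't leave a stray temp copy behind
        raise


if __name__ == "__main__":
    for p in sys.argv[1:]:
        rewrite_file(p)
        print("rewrote", p)
```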


I emailed Corsair about it. If they don’t replace these two drives, I’ll go the Hard Disk Sentinel route.