You probably know I love frugality, scaling down to the bare minimum, and KISS, so my answer may be a little… unsatisfying.

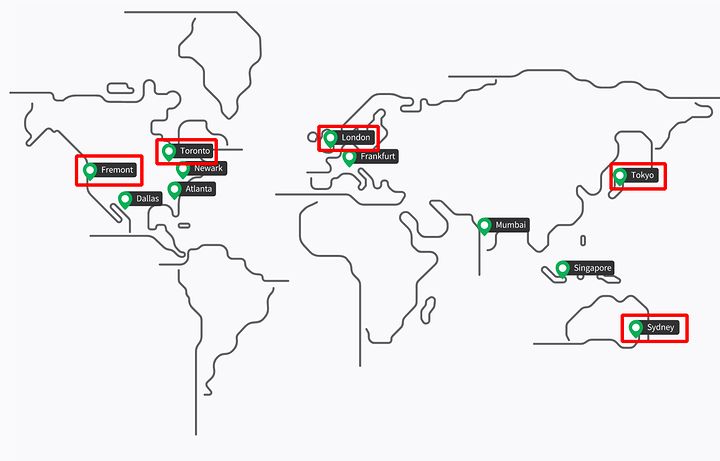

If the NodeBalancers have to make the trip back to your main server, this only makes sense if Linode has leased lines between its locations. I’m not sure that’s the case, but if it is, you will probably see a difference in speed or latency. Even then, your traffic would have to travel over those leased lines, and I doubt customers are allowed that privilege (it’s usually reserved for in-house traffic, like management or really latency-sensitive communications).

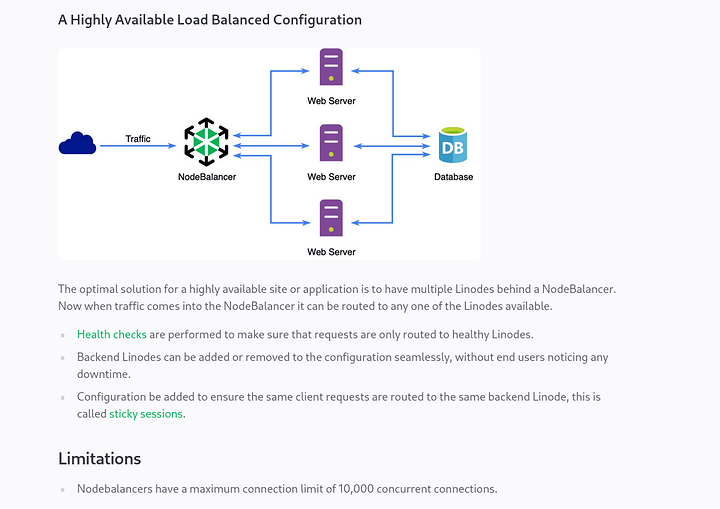

Another case where it would make sense is if those NodeBalancers cached web pages and responses. I have no idea how they work, though. Reading a little about them, they appear to be just basic load balancers. So, like any LB, they only make sense when you have loads and loads of traffic going through your server (1k+ connections at a time from each NodeBalancer). Are you selling things or running a very popular website? I don’t think this makes either technical or financial sense for one user.
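For context, a basic load balancer does little more than hand each new connection to one of several backends. Here is a minimal round-robin sketch in Python. Round robin is just one common strategy, chosen because it is the simplest to show, and the backend addresses are hypothetical:

```python
import itertools
from typing import Iterator


class RoundRobinBalancer:
    """Hand out backends in a fixed rotation, one per incoming connection."""

    def __init__(self, backends: list[str]) -> None:
        if not backends:
            raise ValueError("need at least one backend")
        self._cycle: Iterator[str] = itertools.cycle(backends)

    def pick(self) -> str:
        # No caching and no inspection of the request: just the next server in line.
        return next(self._cycle)


# Hypothetical private IPs of two web servers sitting behind the balancer.
lb = RoundRobinBalancer(["192.168.1.10:80", "192.168.1.11:80"])
assignments = [lb.pick() for _ in range(4)]
print(assignments)
# prints ['192.168.1.10:80', '192.168.1.11:80', '192.168.1.10:80', '192.168.1.11:80']
```

With a single user, the rotation has almost nothing to spread out. The balancer only pays off when there are many concurrent connections to distribute.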

But if security is the issue, well, like any LB or reverse proxy, it adds a layer of security. NodeBalancers communicate with your Linode web servers over private IPs (which helps a little if you’re on a limited bandwidth allowance at Linode), so the main web server is not directly exposed to the internet.

I read there are some issues with using NodeBalancers when SSL is handled on the web servers. You can’t get the real IP of the client, only the IP of the NodeBalancer, and some websites don’t display correctly (the steaming pile of garbage that is WordPress). To avoid this, it’s recommended that you keep the SSL certificate on the NodeBalancers and send unencrypted traffic from them to your web servers. On your own infrastructure this should be fine, but I don’t know how it works at Linode: do you own the NodeBalancer, or is it shared among multiple customers? If you want SSL on both the load balancer and the web servers, HAProxy is the better solution.
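When SSL terminates on the load balancer, the usual way to recover the client IP is for the balancer to add an `X-Forwarded-For` header and for the web server to read it, but only when the request actually came from the balancer. Below is a minimal sketch of that check. It assumes a single trusted proxy that appends the address it saw to that header. The proxy address is hypothetical, and header names are assumed to be already normalized to the casing shown:

```python
import ipaddress

# Hypothetical: the private IP your NodeBalancer uses to reach the web servers.
TRUSTED_PROXIES = {ipaddress.ip_address("192.168.255.5")}


def client_ip(peer_addr: str, headers: dict[str, str]) -> str:
    """Return the real client IP, trusting X-Forwarded-For only from the balancer."""
    peer = ipaddress.ip_address(peer_addr)
    forwarded = headers.get("X-Forwarded-For", "")
    if peer not in TRUSTED_PROXIES or not forwarded:
        # Direct connection (or an untrusted hop): the TCP peer is the client.
        return str(peer)
    # Assumption: the single trusted proxy appends the address it saw, so the
    # right-most entry is reliable; anything further left came from the client
    # and could be forged.
    last_hop = forwarded.split(",")[-1].strip()
    return str(ipaddress.ip_address(last_hop))


print(client_ip("192.168.255.5", {"X-Forwarded-For": "10.0.0.1, 203.0.113.7"}))
# prints 203.0.113.7
print(client_ip("198.51.100.2", {"X-Forwarded-For": "203.0.113.7"}))
# prints 198.51.100.2
```

The trust check matters: without it, anyone connecting directly could claim any IP just by sending the header.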

And again, there’s certainly a cool factor to adding redundancy and load balancing to your services, but if it’s just for you, it doesn’t make much sense. You can play around with VMs or containers in your own home lab and set things up as if it were a large corporate intranet. Paying extra for 1-100 people just isn’t worth it.

In the end, you most likely won’t see much of a difference in performance. It might add some privacy: if you connect to your Linode web server over the clearweb, your traffic will show up as going to a regional server, which then passes it through Linode’s internal network to your main instance, instead of you connecting to it directly. But then again, a reverse DNS lookup will show what you were connecting to, so it’s kind of a moot point. If privacy is a concern, you can write a script that spins up WireGuard on new Linode instances and connect to them when you travel (or keep them running constantly for ease of use). A bonus of the VPN approach is that you can also use the private IP of your Linode instance to connect to it, so you also save on bandwidth costs.
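For the WireGuard route, one part worth scripting is the client config. Here is a minimal sketch that only renders the text of a `wg-quick` style client config. Creating the Linode instance and setting up WireGuard on it are left out. The keys, endpoint IP, and `10.8.0.0/24` tunnel subnet are all placeholders. Treating `192.168.128.0/17` as Linode’s private range is my assumption, so check it against your own instance:

```python
def render_wg_client_config(
    client_private_key: str,
    client_address: str,
    server_public_key: str,
    server_endpoint: str,
    allowed_ips: list[str],
) -> str:
    """Build a wg-quick style client config as a string."""
    lines = [
        "[Interface]",
        f"PrivateKey = {client_private_key}",
        f"Address = {client_address}",
        "",
        "[Peer]",
        f"PublicKey = {server_public_key}",
        f"Endpoint = {server_endpoint}",
        # Only traffic to these destinations is routed through the tunnel.
        f"AllowedIPs = {', '.join(allowed_ips)}",
        # Keeps NAT mappings alive when you are behind public Wi-Fi routers.
        "PersistentKeepalive = 25",
    ]
    return "\n".join(lines) + "\n"


# Placeholder values; 51820 is WireGuard's usual default port.
conf = render_wg_client_config(
    client_private_key="<client-private-key>",
    client_address="10.8.0.2/32",
    server_public_key="<server-public-key>",
    server_endpoint="203.0.113.10:51820",
    allowed_ips=["10.8.0.0/24", "192.168.128.0/17"],
)
print(conf)
```

For the privacy use case, you would pass `["0.0.0.0/0"]` instead so that all traffic goes through the tunnel. Reaching other private IPs through the server also needs IP forwarding enabled on it, which this sketch does not cover.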

Again, not worth the investment. Unless you are serving customers who demand that your service not be dog slow (because slowness would cost you, or both of you, money), shared resources are better. Services rarely peg the CPU constantly (and when they do, VPS providers sometimes move the demanding VMs next to ones that aren’t very demanding).