Passing along the news.

(omg)vflash | Fully Patched nvflash from X to Ada Lovelace [v5.780]

Maybe with this I can now flash the Tesla P100.

Please update here if it's doable. I'm interested in the P100 for running Ansys Discovery Live. Cheers.

The flasher looks to be in something like a pre-beta stage. All the info we have passed along has been looked at. He is working on an update/bug fix that could be released next month.

I have tested 5 or 6 different nvflash versions with no luck so far. I'm trying to flash a Quadro GP100 vBIOS to my Tesla P100.

Edit: BTW Welcome to the forum!

Nice, fingers crossed that the GP100 BIOS can be flashed to the Tesla card. I remember a video (I forget which card it was) where they flashed another vBIOS after changing some resistors on the board. I'll try to find the video and come back with the link.

On another note, I'm curious: I have a Dell T5610, which has one PCIe 3.0 x8 slot and two PCIe 3.0 x16 slots. I want to put an RTX 4060 Ti in the x8 slot, a Tesla P100 or V100 in one x16 slot, and use the other x16 slot for 4x NVMe M.2 drives on a PLX card. Do you think the GPUs are compatible?

From what I read in another thread, you use the GeForce Studio driver for the Tesla card, and from what I saw on the NVIDIA website it supports cards from the Maxwell generation to Ada Lovelace. Is it doable? Thanks.

I do not know the Dell T5610, and it may depend on the CPU in use. I looked it up, and from what I saw it looks doable with the Studio driver. The 4x M.2 drives might take up some of the PCIe resources. I have an Asus X99 motherboard and couldn't use the M.2 slot because it shares lanes with the PCIe slots, and the P100 would not work with the drive installed. I placed the 2 TB M.2 drive in a SATA III 6 Gb/s internal HDD enclosure and used it that way.

Edit: something like this is what I had to use; it may be the same one I ordered.

Hello again, sorry for the late reply.

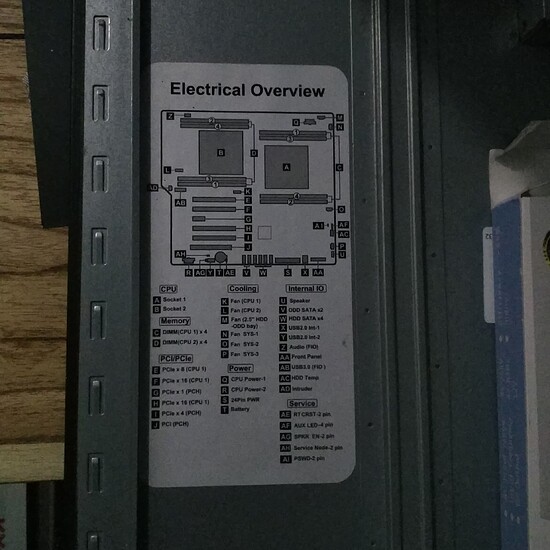

Ah, I don't know about sharing PCIe lanes, but I found this on the side panel, and after confirming it in the manual, it seems those slots are independent and connected to CPU 1, while the others, which connect to the PCH chipset, are PCIe Gen 2 and legacy PCI.
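For a rough feel of what each slot can move, here is a minimal sketch of the theoretical PCIe 3.0 bandwidth per link. It only uses the published Gen 3 signalling rate (8 GT/s per lane, 128b/130b encoding). The slot assignments in `planned_links` are just the plan described above, not something read from the T5610 manual, and real-world throughput will be lower than these peak figures.

```python
# Theoretical PCIe 3.0 bandwidth per link (peak, one direction).
GT_PER_S = 8.0          # PCIe 3.0 transfer rate per lane
ENCODING = 128 / 130    # 128b/130b line encoding overhead


def lane_gb_s() -> float:
    """Usable GB/s for a single PCIe 3.0 lane."""
    return GT_PER_S * ENCODING / 8  # bits to bytes


def link_gb_s(lanes: int) -> float:
    """Usable GB/s for a link of the given width."""
    return lanes * lane_gb_s()


# Assumed layout, taken from the plan in this thread (not verified on hardware).
planned_links = {
    "RTX 4060 Ti in the x8 slot": 8,
    "Tesla P100 in an x16 slot": 16,
    "PLX card with 4x NVMe in an x16 slot": 16,
}

if __name__ == "__main__":
    for name, lanes in planned_links.items():
        print(f"{name}: x{lanes}, about {link_gb_s(lanes):.2f} GB/s")
    # Four NVMe drives at x4 each add up to 16 lanes behind the switch,
    # so they share the x16 uplink of the PLX card.
    print(f"Per NVMe drive (x4): about {link_gb_s(4):.2f} GB/s")
```

The point is only that the x8 slot offers half the peak bandwidth of an x16 slot; whether the board actually routes all three slots to CPU 1 at full width still has to be checked against the manual.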

The reason I want to try the Tesla P100 is its OpenGL performance; according to a benchmark it surpasses the 3090. But I know that, being a 7-year-old card, I shouldn't expect too much. I also want to try the Tesla P40; I'm intrigued by its VRAM capacity.

(ref from askgeek io rtx 3090 vs tesla p100)

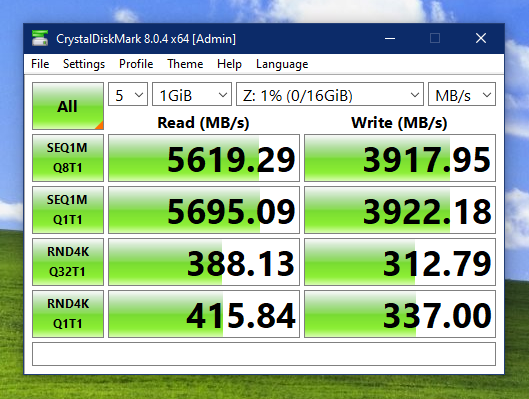

Ah yeah, I already use a SATA SSD, but the one I use is from AliExpress: it lets you put two M.2 SATA drives in one 2.5-inch SSD enclosure in JBOD mode. I tried a simulation in SolidWorks 2022 with that and the drive was very slow. Then I tried a Samsung 860 SSD, with no improvement. After that I tried a RAM disk to hold the files I wanted to simulate, and it's fast: a simple bracket went from 45 minutes to just 11 minutes. Even with lousy single-channel 1600 MHz DDR3, which I assume is slower than a Gen 4 M.2 NVMe drive, it's still fast. That's why I think NVMe is a must in this system.

BTW, back to the topic: I'm happy to try the vBIOS mod after the P100 arrives from Ali. Cheers, mate.

Keep me up to date, sounds like a fun project. cheers

Hey man, I just remembered the mod name. It's this repo: GitHub - bmgjet/ShutMod-Calculator: Work out what shunt values to use easily.

Based on what I saw, the TDP for the Tesla P100 is 250 W and for the GP100 it is 300 W. With the shunt mod we can trick the GPU into reporting less power than it actually draws, so it can pull more than its stock limit. We can then adjust the power with the power limit in MSI Afterburner. No need to change the BIOS, etc. Tonight I'll try to open my P100 and see if this mod is possible.
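To make the idea concrete, here is a rough sketch of the math behind a shunt mod, using plain parallel-resistor arithmetic. This is not the code from the repo above. The 5 mΩ shunt value is a made-up placeholder (I have not measured the P100 board), and it assumes a single shunt; boards with several shunts would need each one treated the same way.

```python
# Shunt mod arithmetic: stacking a resistor on the shunt lowers its effective
# resistance, so the card measures a smaller voltage drop and under-reports power.

def parallel(r1_ohm: float, r2_ohm: float) -> float:
    """Effective resistance of two resistors in parallel."""
    return r1_ohm * r2_ohm / (r1_ohm + r2_ohm)


def stacked_resistor_for(target_w: float, limit_w: float, shunt_ohm: float) -> float:
    """Resistor to stack on the shunt so the card reports limit_w while drawing target_w."""
    if target_w <= limit_w:
        raise ValueError("target power must be above the stock limit")
    ratio = limit_w / target_w           # reported power / actual power
    return shunt_ohm * ratio / (1.0 - ratio)


def actual_power(reported_w: float, shunt_ohm: float, stacked_ohm: float) -> float:
    """Real draw when the card believes it is drawing reported_w."""
    return reported_w * shunt_ohm / parallel(shunt_ohm, stacked_ohm)


if __name__ == "__main__":
    SHUNT_OHM = 0.005  # hypothetical stock shunt value, NOT measured on a P100
    stacked = stacked_resistor_for(target_w=300, limit_w=250, shunt_ohm=SHUNT_OHM)
    print(f"Stack about {stacked * 1000:.1f} mOhm on the shunt")
    print(f"Card reports 250 W, real draw is about "
          f"{actual_power(250, SHUNT_OHM, stacked):.0f} W")
```

Keep in mind that after the mod every power reading from the card is scaled down by the same ratio, so the power limit slider in MSI Afterburner no longer corresponds to real watts.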

Good luck and let us know how it goes. Cheers.
