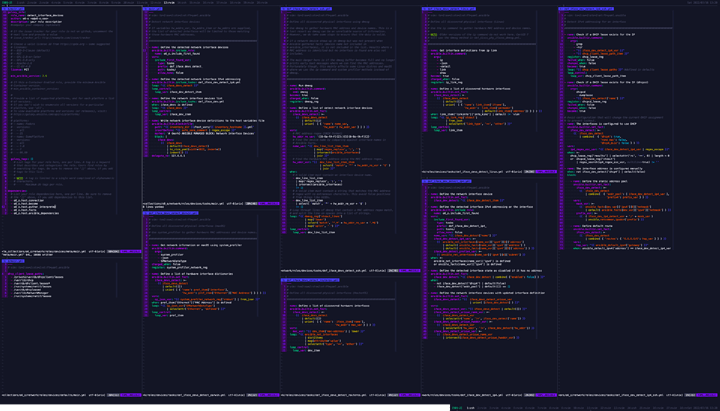

It's a lot

DHCP Failover

This version of the ISC DHCP server supports the DHCP failover protocol as documented in draft-ietf-dhc-failover-07.txt. This is not a final protocol document, and we have not done interoperability testing with other vendors' implementations of this protocol, so you must not assume that this implementation conforms to the standard. If you wish to use the failover protocol, make sure that both failover peers are running the same version of the ISC DHCP server.

The failover protocol allows two DHCP servers (and no more than two) to share a common address pool. Each server will have about half of the available IP addresses in the pool at any given time for allocation. If one server fails, the other server will continue to renew leases out of the pool, and will allocate new addresses out of the roughly half of available addresses that it had when communications with the other server were lost.

It is possible during a prolonged failure to tell the remaining server that the other server is down, in which case the remaining server will (over time) reclaim all the addresses the other server had available for allocation, and begin to reuse them. This is called putting the server into the PARTNER-DOWN state.

You can put the server into the PARTNER-DOWN state either by using the omshell(1) command or by stopping the server, editing the last peer state declaration in the lease file, and restarting the server. If you use the second method, be sure to leave the date and time of the start of the state blank:

    failover peer name state {
      my state partner-down;
      peer state state at date;
    }

When the other server comes back online, it should automatically detect that it has been offline and request a complete update from the server that was running in the PARTNER-DOWN state, and then both servers will resume processing together.

It is possible to get into a dangerous situation: if you put one server into the PARTNER-DOWN state, and then *that* server goes down, and the other server comes back up, the other server will not know that the first server was in the PARTNER-DOWN state, and may issue addresses previously issued by the other server to different clients, resulting in IP address conflicts. Before putting a server into PARTNER-DOWN state, therefore, make sure that the other server will not restart automatically.

The failover protocol defines a primary server role and a secondary server role. There are some differences in how primaries and secondaries act, but most of the differences simply have to do with providing a way for each peer to behave in the opposite way from the other. So one server must be configured as primary, and the other must be configured as secondary, and it doesn't matter too much which one is which.

Failover Startup

When a server starts that has not previously communicated with its failover peer, it must establish communications with its failover peer and synchronize with it before it can serve clients. This can happen either because you have just configured your DHCP servers to perform failover for the first time, or because one of your failover servers has failed catastrophically and lost its database.

The initial recovery process is designed to ensure that when one failover peer loses its database and then resynchronizes, any leases that the failed server gave out before it failed will be honored. When the failed server starts up, it notices that it has no saved failover state, and attempts to contact its peer.

When it has established contact, it asks the peer for a complete copy of the peer's lease database. The peer then sends its complete database, followed by a message indicating that it is done. The failed server then waits until MCLT has passed, and once MCLT has passed both servers make the transition back into normal operation. This waiting period ensures that any leases the failed server may have given out while out of contact with its partner will have expired.

While the failed server is recovering, its partner remains in the partner-down state, which means that it is serving all clients. The failed server provides no service at all to DHCP clients until it has made the transition into normal operation.

In the case where both servers detect that they have never before communicated with their partner, they both come up in this recovery state and follow the procedure we have just described. In this case, no service will be provided to DHCP clients until MCLT has expired.

Configuring Failover

In order to configure failover, you need to write a peer declaration that configures the failover protocol, and you need to write peer references in each pool declaration for which you want to do failover. You do not have to do failover for all pools on a given network segment. You must not tell one server it's doing failover on a particular address pool and tell the other it is not. You must not have any common address pools on which you are not doing failover. A pool declaration that utilizes failover would look like this:

    pool {
      failover peer "foo";
      pool specific parameters
    };

Dynamic BOOTP leases are not compatible with failover, and, as such, you need to disallow BOOTP in pools that you are using failover for.

The server currently does very little sanity checking, so if you configure it wrong, it will just fail in odd ways. I would recommend therefore that you either do failover or don't do failover, but don't do any mixed pools. Also, use the same master configuration file for both servers, and have a separate file that contains the peer declaration and includes the master file. This will help you to avoid configuration mismatches. As our implementation evolves, this will become less of a problem. A basic sample dhcpd.conf file for a primary server might look like this:

    failover peer "foo" {
      primary;
      address anthrax.rc.vix.com;
      port 647;
      peer address trantor.rc.vix.com;
      peer port 847;
      max-response-delay 60;
      max-unacked-updates 10;
      mclt 3600;
      split 128;
      load balance max seconds 3;
    }

    include "/etc/dhcpd.master";

The statements in the peer declaration are as follows:

The primary and secondary statements

[ primary | secondary ];

This determines whether the server is primary or secondary, as described earlier under DHCP FAILOVER.

The address statement

address address;

The address statement declares the IP address or DNS name on which the server should listen for connections from its failover peer, and also the value to use for the DHCP Failover Protocol server identifier. Because this value is used as an identifier, it may not be omitted.

The peer address statement

peer address address;

The peer address statement declares the IP address or DNS name to which the server should connect to reach its failover peer for failover messages.

The port statement

port port-number;

The port statement declares the TCP port on which the server should listen for connections from its failover peer.

The peer port statement

peer port port-number;

The peer port statement declares the TCP port to which the server should connect to reach its failover peer for failover messages. The port number declared in the peer port statement may be the same as the port number declared in the port statement.

The max-response-delay statement

max-response-delay seconds;

The max-response-delay statement tells the DHCP server how many seconds may pass without receiving a message from its failover peer before it assumes that connection has failed. This number should be small enough that a transient network failure that breaks the connection will not result in the servers being out of communication for a long time, but large enough that the server isn't constantly making and breaking connections. This parameter must be specified.

The max-unacked-updates statement

max-unacked-updates count;

The max-unacked-updates statement tells the remote DHCP server how many BNDUPD messages it can send before it receives a BNDACK from the local system. We don't have enough operational experience to say what a good value for this is, but 10 seems to work. This parameter must be specified.

The mclt statement

mclt seconds;

The mclt statement defines the Maximum Client Lead Time. It must be specified on the primary, and may not be specified on the secondary. This is the length of time for which a lease may be renewed by either failover peer without contacting the other. The longer you set this, the longer it will take for the running server to recover IP addresses after moving into PARTNER-DOWN state. The shorter you set it, the more load your servers will experience when they are not communicating. A value of something like 3600 is probably reasonable, but again bear in mind that we have no real operational experience with this.

The split statement

split index;

The split statement specifies the split between the primary and secondary for the purposes of load balancing. Whenever a client makes a DHCP request, the DHCP server runs a hash on the client identification, resulting in a value from 0 to 255. This is used as an index into a 256-bit field. If the bit at that index is set, the primary is responsible. If the bit at that index is not set, the secondary is responsible. The split value determines how many of the leading bits are set to one. So, in practice, higher split values cause the primary to serve more clients than the secondary, and lower split values cause the secondary to serve more. Legal values are between 0 and 255, of which the most reasonable is 128.
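As a rough illustration of the paragraph above, this Python sketch builds the 256-bit field implied by a split value and looks up which peer owns a given hash bucket. The function names are hypothetical, the bucket is taken as an input rather than computed (the hash itself is not reproduced here), and the bit order within each byte is an assumption that makes no difference for byte-aligned splits such as 128.

```python
def split_to_bitmap(split: int) -> bytes:
    """Return a 32-byte (256-bit) field with the first `split` bits set."""
    if not 0 <= split <= 255:
        raise ValueError("split must be between 0 and 255")
    bitmap = bytearray(32)
    for index in range(split):
        # Assumption: bit 0 of each byte is the lowest-numbered bucket.
        bitmap[index // 8] |= 1 << (index % 8)
    return bytes(bitmap)


def owner(bitmap: bytes, bucket: int) -> str:
    """Name the peer responsible for a hash bucket in the range 0..255."""
    is_set = bitmap[bucket // 8] & (1 << (bucket % 8))
    return "primary" if is_set else "secondary"


bitmap = split_to_bitmap(128)
print(bitmap.hex(":"))
print(owner(bitmap, 5), owner(bitmap, 200))  # primary secondary
```

With split 128 the first 16 bytes come out as ff and the last 16 as 00, so buckets 0 to 127 go to the primary and the rest to the secondary.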

The hba statement

hba colon-separated-hex-list;

The hba statement specifies the split between the primary and secondary as a bitmap rather than a cutoff, which theoretically allows for finer-grained control. In practice, there is probably no need for such fine-grained control, however. An example hba statement:

hba ff:ff:ff:ff:ff:ff:ff:ff:ff:ff:ff:ff:ff:ff:ff:ff:

00:00:00:00:00:00:00:00:00:00:00:00:00:00:00:00;

This is equivalent, and in fact identical, to a split 128; statement. The following two examples are also equivalent to a split of 128, but are not identical to it:

    hba aa:aa:aa:aa:aa:aa:aa:aa:aa:aa:aa:aa:aa:aa:aa:aa:
        aa:aa:aa:aa:aa:aa:aa:aa:aa:aa:aa:aa:aa:aa:aa:aa;

    hba 55:55:55:55:55:55:55:55:55:55:55:55:55:55:55:55:
        55:55:55:55:55:55:55:55:55:55:55:55:55:55:55:55;

They are equivalent because half the bits are set to 0 and half are set to 1 (0xa and 0x5 are 1010 and 0101 in binary, respectively), so each would divide the clients roughly equally between the servers. They are not identical because the actual clients that load balance to each server are different for each example.
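The equivalent-but-not-identical point can be checked with a short Python sketch. The helper names are hypothetical, and the bit order within each byte is an assumption; it changes which buckets are listed, but not the counts.

```python
def parse_hba(text: str) -> bytes:
    """Parse a colon-separated hex list such as 'ff:00:...' into bytes."""
    return bytes(int(octet, 16) for octet in text.split(":"))


def primary_buckets(hba: bytes) -> set:
    """Return the set of hash buckets (0..255) whose bit is set."""
    # Assumption: bit 0 of each byte is the lowest-numbered bucket.
    return {i for i in range(len(hba) * 8) if hba[i // 8] & (1 << (i % 8))}


hba_aa = parse_hba(":".join(["aa"] * 32))
hba_55 = parse_hba(":".join(["55"] * 32))

print(len(primary_buckets(hba_aa)), len(primary_buckets(hba_55)))  # 128 128
print(primary_buckets(hba_aa) & primary_buckets(hba_55))           # set()
```

Both bitmaps hand 128 of the 256 buckets to the primary, but the two sets of buckets do not overlap at all, so a given client lands on a different server under each one.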

You must only have split or hba defined, never both. For most cases, the fine-grained control that hba offers isn't necessary, and split should be used.
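Since the server does little sanity checking, the rules stated so far can be checked by hand or with a small script. This is a minimal Python sketch, not part of dhcpd: the function name and the dictionary layout are hypothetical, the secondary's values are an assumed mirror of the sample primary above, and the mirrored address and port check is an assumption drawn from how the address and peer address statements are described.

```python
def check_peer_pair(primary: dict, secondary: dict) -> list:
    """Return a list of problems found in a pair of peer declarations."""
    problems = []
    if primary.get("role") != "primary" or secondary.get("role") != "secondary":
        problems.append("one peer must be primary and the other secondary")
    for name, conf in (("primary", primary), ("secondary", secondary)):
        for key in ("address", "max-response-delay", "max-unacked-updates"):
            if key not in conf:
                problems.append(f"{name}: {key} must be specified")
        if "split" in conf and "hba" in conf:
            problems.append(f"{name}: use split or hba, never both")
    if "mclt" not in primary:
        problems.append("primary: mclt must be specified")
    if "mclt" in secondary:
        problems.append("secondary: mclt may not be specified")
    # Assumption: each side's peer address/port should mirror the other's own.
    for mine, theirs in (("address", "peer address"), ("port", "peer port")):
        if primary.get(mine) != secondary.get(theirs):
            problems.append(f"primary {mine} does not match secondary {theirs}")
        if secondary.get(mine) != primary.get(theirs):
            problems.append(f"secondary {mine} does not match primary {theirs}")
    return problems


primary = {
    "role": "primary", "address": "anthrax.rc.vix.com", "port": 647,
    "peer address": "trantor.rc.vix.com", "peer port": 847,
    "max-response-delay": 60, "max-unacked-updates": 10,
    "mclt": 3600, "split": 128,
}
secondary = {
    "role": "secondary", "address": "trantor.rc.vix.com", "port": 847,
    "peer address": "anthrax.rc.vix.com", "peer port": 647,
    "max-response-delay": 60, "max-unacked-updates": 10,
}
print(check_peer_pair(primary, secondary))  # []
```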

The load balance max seconds statement

load balance max seconds seconds;

This statement allows you to configure a cutoff after which load balancing is disabled. The cutoff is based on the number of seconds since the client sent its first DHCPDISCOVER or DHCPREQUEST message, and only works with clients that correctly implement the secs field - fortunately most clients do. We recommend setting this to something like 3 or 5. The effect of this is that if one of the failover peers gets into a state where it is responding to failover messages but not responding to some client requests, the other failover peer will take over its client load automatically as the clients retry.
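The cutoff logic can be sketched in Python as follows. The function and parameter names are hypothetical, and whether the comparison against the cutoff is strict or inclusive is an assumption, since the text only says load balancing is disabled "after" the cutoff.

```python
def should_answer(bucket_is_mine: bool, secs: int, max_seconds: int) -> bool:
    """Decide whether this peer answers a client request.

    bucket_is_mine: result of the split/hba lookup for this client.
    secs: value of the secs field in the client's packet.
    max_seconds: the configured load balance max seconds value.
    """
    if bucket_is_mine:
        return True
    # Assumption: the cutoff is strict, so secs must exceed max_seconds.
    return secs > max_seconds


print(should_answer(False, 0, 3))  # False
print(should_answer(False, 4, 3))  # True
print(should_answer(True, 0, 3))   # True
```

A client that keeps retrying sees its secs value grow, so a request that the other peer is ignoring eventually crosses the cutoff and gets answered here.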

The Failover pool balance statements

    max-lease-misbalance percentage;
    max-lease-ownership percentage;
    min-balance seconds;
    max-balance seconds;

This version of the DHCP Server evaluates pool balance on a schedule, rather than on demand as leases are allocated. The latter approach proved to be slightly clunky when pool misbalance reached total saturation: when a server ran out of leases to assign, it also lost its ability to notice that it had run dry.

In order to understand pool balance, some elements of its operation first need to be defined. First, there are 'free' and 'backup' leases. Both of these are referred to as 'free state leases'. 'free' and 'backup' are 'the free states' for the purpose of this document. The difference is that only the primary may allocate from 'free' leases unless under special circumstances, and only the secondary may allocate 'backup' leases.

When pool balance is performed, the only plausible expectation is to provide a 50/50 split of the free state leases between the two servers. This is because no one can predict which server will fail, regardless of the relative load placed upon the two servers, so giving each server half the leases gives both servers the same amount of 'failure endurance'. Therefore, there is no way to configure any different behaviour, outside of some very small windows we will describe shortly.

The first thing calculated on any pool balance run is a value referred to as 'lts', or "Leases To Send". On the primary, this is simply the count of free leases minus the count of backup leases, divided by two. On the secondary, it is the count of backup leases minus the count of free leases, divided by two. The resulting value is signed: if it is positive, the local server is expected to hand out leases to retain a 50/50 balance. If it is negative, the remote server would need to send leases to balance the pool. Once the lts value reaches zero, the pool is perfectly balanced (give or take one lease in the case of an odd number of total free state leases).
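The lts calculation is small enough to write out. This is a sketch in Python with a hypothetical function name; rounding toward zero for odd differences is an assumption.

```python
def leases_to_send(free: int, backup: int, role: str) -> int:
    """Compute lts from the counts of 'free' and 'backup' leases."""
    if role == "primary":
        difference = free - backup
    elif role == "secondary":
        difference = backup - free
    else:
        raise ValueError("role must be 'primary' or 'secondary'")
    # Assumption: odd differences round toward zero.
    return int(difference / 2)


print(leases_to_send(80, 20, "primary"))    # 30
print(leases_to_send(80, 20, "secondary"))  # -30
```

With 80 free and 20 backup leases, the primary would need to hand over 30 leases to reach 50/50, and the secondary sees the same imbalance with the opposite sign.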

The current approach is still something of a hybrid of the old approach, marked by the presence of the max-lease-misbalance statement. This parameter configures what used to be a fixed 10% value in previous versions: if lts is less than (free + backup) * max-lease-misbalance percent, the server will skip balancing a given pool (it won't bother moving any leases, even if some leases "should" be moved). The meaning of this value is somewhat overloaded, however, in that it also governs the estimate of when to next attempt to balance the pool (an attempt which may then also be skipped). The oldest leases in the free and backup states are examined, and the time they have resided in their respective queues is used to estimate how long it would probably take before the leases at the top of the list are consumed (and thus how long it would take to use all leases in that state). The percentage is multiplied directly by this time, and the result is fit into the schedule if it falls within the configured min-balance and max-balance values. The scheduled pool check time is only ever moved earlier; it is never pushed later. Lastly, if the lts is more than double the misbalance threshold in the negative direction, the local server will 'panic' and transmit a Failover protocol POOLREQ message, in the hope that the remote system will be woken up into action.
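Read as a decision rule, the thresholds in this paragraph look roughly like the following Python sketch. The function name and return strings are hypothetical, the threshold is rounded down to a whole lease as an assumption, and the behaviour when lts exactly equals the threshold is also an assumption.

```python
def balance_action(lts: int, free: int, backup: int, misbalance_pct: int = 15) -> str:
    """Pick what a balance run does for one pool, given its lts value."""
    # Threshold is max-lease-misbalance percent of all free state leases.
    threshold = (free + backup) * misbalance_pct // 100
    if lts < -2 * threshold:
        # The peer holds far too many leases: ask it to rebalance.
        return "send POOLREQ"
    if lts < threshold:
        # Not misbalanced enough to be worth moving anything.
        return "skip"
    return "move leases"


print(balance_action(30, 80, 20))   # move leases
print(balance_action(5, 55, 45))    # skip
print(balance_action(-40, 10, 90))  # send POOLREQ
```

With the default of 15 and 100 free state leases, the threshold is 15 leases, so nothing moves until one side is at least 15 leases over its half.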

Once the lts value exceeds the max-lease-misbalance percentage of total free state leases as described above, leases are moved to the remote server. This is done in two passes.

In the first pass, only leases whose most recent bound client would have been served by the remote server, according to the Load Balance Algorithm (see the split and hba configuration statements above), are given away to the peer. This first pass will happily continue to give away leases, decrementing the lts value by one for each, until the lts value has reached the negative of the total number of leases multiplied by the max-lease-ownership percentage. So it is through this value that you can permit a small misbalance of the lease pools, for the purpose of giving the peer more than a 50/50 share of leases in the hope that their clients might some day return and be allocated by the peer (operating normally). This process is referred to as 'MAC Address Affinity', but this is somewhat misnamed: it applies equally to DHCP Client Identifier options. Note also that affinity is applied to leases when they enter the 'free' state from 'expired' or 'released'. In this case also, leases will not be moved from free to backup if the secondary already has more than its share.

The second pass is only entered into if the first pass fails to reduce the lts underneath the total number of free state leases multiplied by the max-lease-ownership percentage. In this pass, the oldest leases are given over to the peer without second thought about the Load Balance Algorithm, and this continues until the lts falls under this value. In this way, the local server will also happily keep a small percentage of the leases that would normally load balance to itself.
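The two passes can be sketched in Python as below. This is one reading of the description, not the server's code: the Lease class, its fields and the function name are hypothetical, and the ownership limit is rounded down to a whole lease as an assumption.

```python
from dataclasses import dataclass


@dataclass
class Lease:
    address: str
    age: int             # seconds spent in the local free state queue
    peer_affinity: bool  # last client would load balance to the peer


def pick_leases_to_send(local_leases, total_free_state, lts, ownership_pct=10):
    """Return (leases given to the peer, remaining lts) after both passes."""
    limit = total_free_state * ownership_pct // 100
    remaining = sorted(local_leases, key=lambda l: l.age, reverse=True)
    sent = []
    # Pass 1: give away leases with peer affinity until lts reaches -limit.
    for lease in list(remaining):
        if lts <= -limit:
            break
        if lease.peer_affinity:
            sent.append(lease)
            remaining.remove(lease)
            lts -= 1
    # Pass 2: give away the oldest leases until lts falls under +limit.
    for lease in list(remaining):
        if lts < limit:
            break
        sent.append(lease)
        remaining.remove(lease)
        lts -= 1
    return sent, lts


leases = [Lease(f"10.0.0.{i}", age=i * 60, peer_affinity=(i % 5 == 0))
          for i in range(1, 17)]
sent, lts = pick_leases_to_send(leases, total_free_state=20, lts=6)
print([l.address for l in sent], lts)
```

In this made-up pool of 16 local leases out of 20, the three affinity leases go first, then the two oldest remaining leases, leaving lts at 1, just under the limit of 2.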

So, the max-lease-misbalance value acts as a behavioural gate. Smaller values will cause more leases to transition states to balance the pools over time, higher values will decrease the amount of change (but may lead to pool starvation if there's a run on leases).

The max-lease-ownership value permits a small skew in the lease balance, expressed as a percentage of the total number of free state leases.

Finally, the min-balance and max-balance make certain that a scheduled rebalance event happens within a reasonable timeframe (not to be thrown off by, for example, a 7 year old free lease).
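One way to picture how min-balance and max-balance bound the schedule is the Python sketch below. It is only a guess at the behaviour: the function name is hypothetical, and treating out-of-range estimates as clamped to the nearest bound (rather than ignored) is an assumption, as is the integer rounding.

```python
def next_balance_delay(oldest_age, misbalance_pct=15,
                       min_balance=60, max_balance=3600, current=None):
    """Estimate seconds until the next scheduled balance check.

    oldest_age: seconds the oldest free state lease has sat in its queue.
    current: delay already scheduled, if any.
    """
    estimate = oldest_age * misbalance_pct // 100
    # Assumption: estimates outside the window are clamped to its edges.
    delay = max(min_balance, min(estimate, max_balance))
    if current is not None:
        # The scheduled check is only ever moved earlier.
        delay = min(delay, current)
    return delay


seven_years = 7 * 365 * 86400
print(next_balance_delay(seven_years))         # 3600
print(next_balance_delay(10000))               # 1500
print(next_balance_delay(10000, current=600))  # 600
```

Under these assumptions the 7 year old free lease from the example still results in a check within the hour.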

Plausible values for the percentages lie between 0 and 100, inclusive, but values over 50 are indistinguishable from one another (once lts exceeds 50% of the free state leases, one server must therefore have 100% of the leases in its respective free state). It is recommended to select a max-lease-ownership value that is lower than the value selected for the max-lease-misbalance value. max-lease-ownership defaults to 10, and max-lease-misbalance defaults to 15.

Plausible values for the min-balance and max-balance times also range from 0 to (2^32)-1 (or the limit of your local time_t value), but default to values 60 and 3600 respectively (to place balance events between 1 minute and 1 hour).

Another reason I am putting off supporting redundant Linux gateways and sticking with OpenBSD.