Hello hello friends. I believe I made a post about my previous server, but as the skills rise and the checkbook says yes more and more, it was finally time to get rid of this 230 watt TDP monster in my room, with its busted-ass power button and a southbridge so damn hot it would actually cause a full system shutdown. Had to tape a fan to the PSU or leave it floating in a nest of cables.

But that all ended some time ago, and in this post I’ll go a bit more in depth about how I run my network, plus some hot tips for anyone thinking “Is Ryzen a good choice for a home server?”

The overall design of the two servers’ software is roughly the same, just with better parts. We’ll start with the specs.

THE PARTS

Old server

- $230 4x 2TB used Hitachi drives running as ZFS striped mirrors (RAID 10 style, what I call zraid10)

- 1TB drive I had lying around, for other things

- $150 56GB of assorted used 8GB Samsung ECC DIMMs

- $10 2x Xeon X5550s with some LOUD AS SHIT cpu fans. It was a goddamn vacuum cleaner

- $110 for a supermicro dual socket 1366 board

- $70 EVGA 80+ gold 850 watt PSU

- $40 Dell LSI raid controller set up to act as an HBA in JBOD mode

- $12 a shit ton of fans

- $40 some case.

Later on, after a BSD kernel update killed my PFsense box, I slapped in the 1 and 4 port NICs I had gotten and virtualized PFsense under proxmox as well. Times were tough and I had no money a year ago.

Here it is at present, wholly and truly dead. Rest easy my favorite vacuum cleaner. You were the best $600 I ever spent.

The new server is sporting the same core and thread count but the TDP and IPC are just so drastically better. Even the old server rarely went over 25% usage under real load: me accessing video files, running a minecraft server in a VM, and virtualizing pfsense.

- $220 Ryzen 2700x

- $200 (If memory serves right) Asrock X370 Taichi from my old rig

- $436 64GB (4x16GB) of crucial CT16G4XFD8266 low profile DDR4 ECC memory (I know, holy shit that’s a lot of money for memory)

- $600 4x 8TB WD easystore portable hard drives, shucked (you have to tape off the 3.3 volt connection on the sata power connector. It’s the first three pins next to the notch, thanks for the masking tape Gran.) These are also running in zraid10

- $70 honestly not sure where I got it but another 80+ gold 750 watt evga psu. probably

- $120 4u istar chassis donated to me from one of our favorite members. There was a machine inside it before, but I had no luck getting that sucker to turn on. Doesn’t have dedicated drive sleds, but you should know me by now: ghetto servers are my thing. Drive bay is held in with one screw and a zip tie.

- $310 cyberpower CPS1500AVR UPS. No more fucking brownouts.

- Stole the nics out of the old server.

And here she is.

The GPU has been taken out and replaced with ethernet cards.

WHY THOUGH?

Now first I’ll explain my reasoning for a build like this. Most people probably build out separate machines and have them running around the house doing whatever for them. One thing that means is there’s a METRIC FUCK TON of cables going everywhere and you need about two billion power strips. Not fun. I’m always going to have ethernet cables running around everywhere, but I move pretty much every year, and making sure you have every machine every time, and that each one is safe with all its various accessories and cables, sucks.

Then you also run into the limitations of each system. The old Pfsense box was a great little trooper and sipped power, but it was also slow as sin, it only had two ram slots, and squid will eat the hell out of whatever ram you give it. Then there is also the issue of something like a kernel update breaking something like… I dunno, the sata controller on the board, so it couldn’t read anything from the disk after initial boot? Yeah, great.

Lastly, so I’m not chained to any cloud services or some shit company. All my own stuff.

Also it’s just validation of my own skills when it comes to tech. I KNOW WHAT I’M TALKING ABOUT, CODY. CALL YOUR BROTHER TO GET YOU ANOTHER JOB WHY DON’T YOU?!

THE PROS AND CONS

The cons of a build like this

- If this box dies everything dies. ZFS is a great saving grace here as I’ll get into shortly in the actual setup of the machine

- Higher energy usage in general. It’s never going to idle, things are always going. Not a big deal imo with the hardware I’m running, but if you did something like a threadripper instead you might want to factor that in.

- High initial investment. Especially for modern parts.

- Limited documentation on compatibility and on what has actually been tested, depending on your budget or simply the hardware you want.

- An inordinate amount of elbow grease.

But the pros outweigh them by a lot in my opinion

- Instead of having 4-5 separate machines at a few hundred dollars each, you can build one really great machine. With so much flexibility you’ll have pet projects forever.

- If you’re in the IT industry you can get a lot of really good experience with a lot of different tech. You’ll get an idea down the line.

- Software compatibility. OH MY GOD this one cannot be overstated. If it runs anywhere it will run virtualized. Sure, if you need some kind of bare metal functionality it’s harder to set up, but passthrough is just tedious at worst, and in the case of things like USB it’s trivial (there’s a goddamn button for it in pretty much any virtualization interface you use on linux.)

- You can build out as many containers and VMs as you want, so again you don’t have to worry about software compatibility for your various desired setups.

- Only a one-time cost. No recurring spending on a VPS, and it can be massively more powerful.

SYSTEM DESIGN

First and foremost, for anyone wanting to use ZFS: get ECC. You could totally get away with running it all on a hardware raid, or with no raid at all, just a JBOD with ext4 or something. I really don’t recommend either though.

- Why you shouldn’t run a hardware raid.

I was moving configurations for PF and ubuntu over and getting ready for the promised time: the new server would run at least pfsense so I could have internet, and then I’d just do a data dump from one storage VM to the other. Nice. I take down the old server, plug in the new server, get everything powered on, confirm internet access, and start booting the old server so I can begin the transfer.

Except when it powered on I couldn’t see its DHCP lease come online in pfsense. I ended up moving it to the living room and slapping a GPU in there so I could get a display. Everything POSTed fine, but it would hang during boot.

Had I been running a hardware raid, my only option would have been to try and move the raid to the new machine, and depending on what software the raid card ran and how it behaved on a different system, that could have added a ton of time. The other option was to troubleshoot this decade-old machine and make it run. Ew. I have a new machine I want to make run. No, not now, I have work in the morning.

As my previous posts have shown, I was running ZFS on that too. So instead I was able to just take all the drives of the zraid out and plug them in to ANY of the open ports on the new server’s motherboard. Running a simple zpool import showed the pool, and after a zpool import -o altroot=/newmountpoint with the pool name all of my data was there, and all the normal tools available to my new zpool worked for the old one. If I wasn’t stupid I could have even saved myself the time of doing a zfs send | recv and just attached the old storage VM disk to the new VM.
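For anyone who wants to try the same move, here is a rough sketch of the import steps. Pool-level operations live under zpool rather than zfs. The pool name tank and the path /mnt/oldpool are placeholders I made up, not my real names, and by default the script only prints the commands instead of running them.

```shell
#!/bin/sh
# Sketch: bring an old ZFS pool up on a new host.
# POOL and ALTROOT are made-up placeholder values.
POOL="${POOL:-tank}"
ALTROOT="${ALTROOT:-/mnt/oldpool}"

# Preview mode by default: each command is echoed, not executed.
# Set ZPOOL=zpool and ZFS=zfs (as root) to run them for real.
ZPOOL="${ZPOOL:-echo zpool}"
ZFS="${ZFS:-echo zfs}"

# With no pool name, this only lists pools that are available to import.
$ZPOOL import

# Import the pool with all of its mountpoints placed under ALTROOT.
$ZPOOL import -o altroot="$ALTROOT" "$POOL"

# Confirm the datasets are visible.
$ZFS list -r "$POOL"
```

If the pool was never cleanly exported (say, because the old box died), the import may refuse until you add -f.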

- Why you shouldn’t run JBOD

I actually do this partially: I have a few SSDs in there as well. But going all in means you’re playing with fate with, at least for me, my major storage. If a single disk fails you’re shit out of luck and now have to do a whole bunch of bullshit to try and save your data.

With ZFS you get a new drive and slap it in, attach it and resilver the thing. Done deal.

Hard drives fail. Always and equally to all people.
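A minimal sketch of what that disk swap tends to look like, assuming a pool called tank and two made-up device paths. Like any example with disk names in it, treat every value here as a placeholder. It only prints the commands unless you override ZPOOL.

```shell
#!/bin/sh
# Sketch: swap a failed disk and let ZFS resilver onto the new one.
# All three values below are hypothetical placeholders.
POOL="${POOL:-tank}"
OLD_DISK="${OLD_DISK:-/dev/disk/by-id/ata-OLD_DISK_SERIAL}"
NEW_DISK="${NEW_DISK:-/dev/disk/by-id/ata-NEW_DISK_SERIAL}"

# Preview mode by default; set ZPOOL=zpool (as root) to actually run.
ZPOOL="${ZPOOL:-echo zpool}"

# See which device is faulted or missing.
$ZPOOL status "$POOL"

# Replace the old device with the new one; the resilver starts on its own.
$ZPOOL replace "$POOL" "$OLD_DISK" "$NEW_DISK"

# Check resilver progress.
$ZPOOL status "$POOL"
```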

- What about just using VirtualBox on a windows server?

Excuse me? NTFS? Next you’ll tell me I should start using RDP to manage my servers instead of SSH. BEGONE HEATHEN!

Second, for those of you wanting to build ryzen who are concerned about memory compatibility, here are your go-to rules:

-

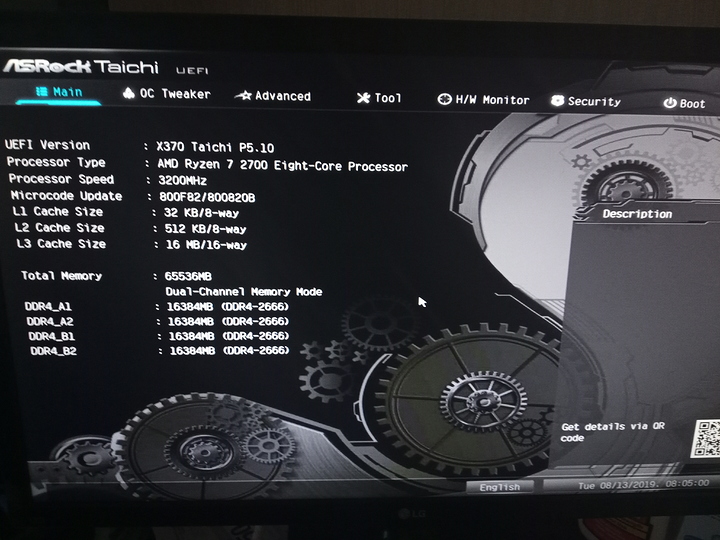

Get a board that shows ECC options. Assume ECC is nonfunctional otherwise. Both of my X chipset boards, Asus x570 Pro and Taichi x370 show options for enabling ECC. So it seems like x series boards are the way to go.

- If possible get a 2000 series cpu or higher. The 1000 series had those Pro lineup CPUs that advertise full ECC functionality, and I’m not sure if it was because of busted pins or uefi updates but my 1700x was saying no to all of that shit, and dual channel was impossible to get. If you have a fully functional 1000 series chip it’s still worth a shot and will probably work, but there’s been nothing about the more recent series withholding anything in terms of ECC compatibility.

- Get ram with timings and speeds you have seen confirmed from the board’s QVL. If you only check for the ones that have ECC tested you will find maybe two kits and they will be INORDINATELY expensive.

For intel builders, fork out the money for a xeon, what do you want from me?

Here’s the server’s uefi showing all clear. Be careful to set everything back to auto. I left my ram speed at 3200MHz and wondered why it was only POSTing one out of every three boots.
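The same thing can be sanity checked from the running OS. This is only a rough sketch: the ecc_state helper is something I made up for the example, dmidecode needs root, and the EDAC counters only exist if the kernel has an EDAC driver loaded for your memory controller. The firmware reporting ECC is a good sign, not proof that errors are actually being corrected.

```shell
#!/bin/sh
# Rough ECC sanity check from a running Linux system.

# Classify dmidecode-style text on stdin by its "Error Correction Type" line.
# (ecc_state is a helper invented for this example.)
ecc_state() {
    if grep -i "error correction type" | grep -qi "ecc"; then
        echo "ecc reported"
    else
        echo "no ecc reported"
    fi
}

# Ask the firmware tables what the memory array claims to support.
if command -v dmidecode >/dev/null 2>&1 && [ "$(id -u)" = "0" ]; then
    dmidecode -t memory 2>/dev/null | ecc_state
else
    echo "skipping dmidecode (not installed or not root)"
fi

# ce = corrected errors, ue = uncorrected errors, counted per memory controller.
for f in /sys/devices/system/edac/mc/mc*/ce_count \
         /sys/devices/system/edac/mc/mc*/ue_count; do
    if [ -r "$f" ]; then
        echo "$f: $(cat "$f")"
    fi
done
```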

lshw -c memory | grep ecc output

With all of that covered I think it’s time to just dig in to how I have things set up for VMs.

My main reason for going ryzen was really just that I wanted to upgrade to the 3000 series chips and already had the Taichi, and that, to me, none of the used xeons looked very appealing over what I already had. I wasn’t really looking for a fuck ton more power as much as I was looking for better efficiency.

That and the simplicity of ryzen chipsets over intel chipsets. With AMD you’ve got three choices, and depending on which one you get you get more PCI-e lanes and better overall power delivery. Not much else changes.

INTEL, GOOD LORD. This socket goes here, but it has that chipset so you need X series of cpu and the PCIe lanes are all over the place, and ECC functionality is relegated to the really expensive parts, or in general really hot loud old parts.

I think that’s it for the overall system design. Hot tip, you don’t need drive bays for SSDs. They’re sitting there dangling around inside the case right now.

THE VMS

At present it is running 3 VMs. It will be running likely another 2 in future. One for secret things (literally, for my storage and backups of things I want only me to see.) and one for nextcloud.

- PFsense

- Ubuntu 18.04 LTS running a minecraft server

- A debian VM that’s just there to be a separate entity to store data. Might need to rethink this, but possibly not.

My methodology is to have each VM and the host itself have just 1 goal. Proxmox’s goal is to run the VMs and containers (although I might actually just make a VM to run docker, it seems really cool, not sure.) PFsense is obviously just PF.

I could just make the host itself run minecraft and be the storage server as well, but I also open up ports for my brother to remote into the ubuntu server, and that means having open ports to the OS running all of my shit. I don’t like that. Instead I can open it up for just ubuntu and since it’s only running minecraft it can be really really tiny.

It also means I can more tightly control what kind of resources the servers will use. Of course you can do that at a configuration level, but it’s so damn quick and easy to just say “you get no more than this.” and be done with it.
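On proxmox that "you get no more than this" is a one-liner with qm. A small sketch, where the VM ID 101 and the numbers are made up for the example. By default it just prints the commands.

```shell
#!/bin/sh
# Sketch: cap a VM's memory and core count on a Proxmox host.
# VMID, MEM_MIB and CORES are hypothetical example values.
VMID="${VMID:-101}"
MEM_MIB="${MEM_MIB:-2048}"   # memory in MiB
CORES="${CORES:-2}"

# Preview mode by default; set QM=qm on the Proxmox host to run for real.
QM="${QM:-echo qm}"

# Apply the limits to the VM's configuration.
$QM set "$VMID" --memory "$MEM_MIB" --cores "$CORES"

# Show the resulting config to double check.
$QM config "$VMID"
```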

PFsense is the real oddball here. Typically you wouldn’t really want the box connecting to the internet to also be storing things, albeit inside of another OS running on the host, but the initial decision to virtualize it was an act of desperation.

What made me stay on it was just how much easier it was with interface configurations. No more of that “SHIT! I botched the interfaces and now my entire network is down! GUHHHHHHHHHHHHHHH! Time to get the old VGA monitor and plug it to the back!” or, even more of a pain in the ass, getting a serial connection to it. None of that. Either write down the IP address of your server or put it in your hosts. Even if you have to give your rig an IP address manually you can still get to it without having to move or plug in ANYTHING extra. Dog bless my boys.

I can also have UNLIMITED interfaces. Want a separate LAN for the roommate but don’t have enough ports? Make another bridge in proxmox! BOOM, your traffic is treated as different entities entirely and you can go to town on that firewall to lock down your shit and leave theirs as open as the roommate desires. That said, I don’t pass through anything for PFSense even though I have enough ports. I just make a bridge. Simpler, easier.

Another thing is that compared to the ultra small form factor low power versions it just runs better. Changes are applied faster. No real hiccups in my internet connection ever. Nice.
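If you would rather do the bridge by hand than click through the web UI, it ends up as a short stanza in /etc/network/interfaces on the host. Here is a sketch that writes one to a scratch file so you can look it over first. The name vmbr1 is an assumption (use whatever is free on your box), and the bridge has no physical port, so it only carries traffic between VMs attached to it.

```shell
#!/bin/sh
# Sketch: generate a port-less Linux bridge stanza for a Proxmox host.
# BRIDGE is an assumed name; OUT is a scratch file, not the live config.
BRIDGE="${BRIDGE:-vmbr1}"
OUT="${OUT:-$(mktemp)}"

cat > "$OUT" <<EOF
auto $BRIDGE
iface $BRIDGE inet manual
    bridge-ports none
    bridge-stp off
    bridge-fd 0
EOF

# Print it for review before merging it into /etc/network/interfaces.
cat "$OUT"
```

After merging it in, give the pfsense VM a network device on that bridge and it shows up inside pfsense as one more interface. Depending on your setup you will need to reload networking or reboot the host before the bridge exists.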

The minecraft server isn’t really anything special. Exactly as you would expect. Just a basic install with only the requirements for minecraft.

The coolest thing about it is that my registrar finally supports dynamic DNS and I was able to create a custom dyn dns entry on pfsense to properly update the IP address automatically. Not even really about the ubuntu VM. Feels good to not have to use no-ip free edition anymore.

Previously on my storage VM I gave plex a shot, and by the end I had it working but video playback sucked a fat dick. Video itself was fine but the audio would go out at regular intervals, the subtitles got fucked, and it ran slow as molasses. I would probably give it another shot if NFS+Samba wasn’t just so easy to configure and totally painless to set up on the clients.

On linux it’s sudo mount -t nfs server:/path/to/share/ /local/mountpoint, and on windows it’s just attaching a network drive. With Samba you can specify the permissions of a share under its share entry, and with nfs it’s a part of the /etc/exports configuration.
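To make the server side a bit more concrete, here is a small sketch that prints an /etc/exports line and a Samba share section for the same directory. The path /srv/share, the subnet, the share name and the user are all made up for the example, and exports_line and smb_share are helper names I invented, not real tools.

```shell
#!/bin/sh
# Sketch: print an NFS export line and a Samba share stanza for one directory.
# Every path, subnet, share name and user below is a made-up example.

# exports_line PATH CLIENTS OPTIONS -> one line in /etc/exports format
exports_line() {
    printf '%s %s(%s)\n' "$1" "$2" "$3"
}

# smb_share NAME PATH READONLY USERS -> one share section in smb.conf format
smb_share() {
    printf '[%s]\n    path = %s\n    read only = %s\n    valid users = %s\n' \
        "$1" "$2" "$3" "$4"
}

# Read-write NFS export for the local subnet.
exports_line /srv/share 192.168.1.0/24 rw,sync,no_subtree_check

# Writable Samba share limited to one user.
smb_share share /srv/share no someuser
```

The first line would go in /etc/exports (followed by exportfs -ra to reload), and the share section would go in smb.conf.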

That’s about all on my brain for now, covered a lot of stuff already. There’s a whole metric fuck ton of shit I could talk about with pfsense so if you got any questions about that hit me up. I’ll leave you all with a piece of advice.

Update your systems regularly. The old server’s proxmox hadn’t been updated in ages, and at that point I was worried an update would just fucking destroy everything. Onwards to a healthier server life from here on.