I’ve seen some messages here that kinda go both ways. There’s merit to criticizing the design, but in fairness to OP this isn’t a terrible design either.

If you’re on a tight budget but want to experiment and learn “clustering”, buy some used corporate desktops. Paying the premium on something new from Minisforum or elsewhere is…probably not advisable for this use case?

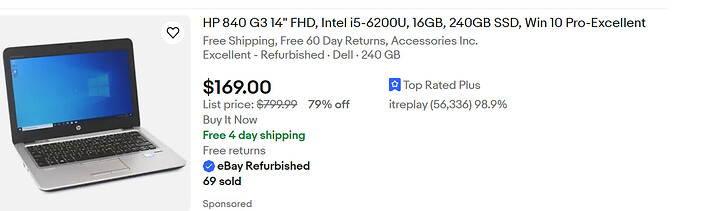

As an example, that bad boy above is $100 cheaper than the Minisforum thing OP was looking at. The Core i5 8500 still has gas left in its tank, and you get 16GB of RAM and a 512GB SSD as part of the deal. Sure, the CPU isn’t as fast, but if you don’t have hard requirements for your workload, or you don’t really even know what your workload is, save the money.

I’ve done some interesting stuff with these things:

Guide to Turning a Project TinyMiniMicro Node into a pfSense Firewall (servethehome.com)

STH Project TinyMiniMicro the Plex Server Setup Guide - ServeTheHome

I am a huge fan of what Patrick at STH has dubbed “TinyMiniMicro”. The fact you can grab pretty beefy little computers for sub-$300 used on eBay is fantastic. I futzed around doing something similar to this over on the TrueNAS forums earlier this year and learned some hard lessons.

Cluster Relative SMB Performance | TrueNAS Community

There are definitely some “gotchas”. USB mGig adapters are…finicky at best. They work fine for their intended use cases (i.e., docking a laptop where you may want to access files off a NAS), but when you consistently load them up with client traffic in a server use case, YMMV. Plus the very fact that they are USB presents some stupid challenges to overcome.

Also FWIW: Don’t worry about power in this general area. Small 1L PCs sip power, and modern (anything Kaby Lake onward, really) processors have VERY LOW idle power consumption. Quibbling over a 65 watt vs 45 watt variant is really like quibbling over a couple of euros a month. Until you get into the wonderful world of graphics cards, you honestly shouldn’t see a massive difference in your electrical bills. Think about the money spent like an investment in your own future.
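If you want to sanity-check the “couple of euros a month” claim yourself, here is a rough back-of-the-envelope sketch. The €0.30/kWh price is purely an assumption (plug in your own tariff), and it treats the 65 W and 45 W ratings as constant draw 24/7, which is a pessimistic worst case since these boxes idle far below their rated TDP.

```python
def monthly_cost_delta(watts_a, watts_b, price_per_kwh, hours_per_day=24.0, days=30):
    """Monthly cost difference between two constant power draws.

    Assumes both machines pull their full rated wattage the whole time,
    which overstates the real-world gap for mostly idle home lab boxes.
    """
    delta_kw = abs(watts_a - watts_b) / 1000.0   # watts -> kilowatts
    delta_kwh = delta_kw * hours_per_day * days  # energy over the month
    return delta_kwh * price_per_kwh


if __name__ == "__main__":
    # 0.30 EUR/kWh is an assumed price, not a quoted one.
    worst_case = monthly_cost_delta(65, 45, price_per_kwh=0.30)
    print(f"Worst-case difference: {worst_case:.2f} EUR/month")
```

With those assumed numbers the gap works out to about 14.4 kWh, or a bit over €4 a month, and that is with both CPUs pinned at full load around the clock. At idle the real difference should be a fraction of that.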

It’s a lot cheaper than college, and I’d bet you’ll learn just as much but in different ways. Textbook knowledge is not nearly as valuable unless you have the practical experience to back it up. I’ve had guys working for me straight out of college. I’ve also had retired guys work for me looking to kill time. I’ll take the old greybeard telco technicians over an “up and coming” programmer any day if the work requires any sort of out-of-the-box thinking.

However you end up doing it, remember to have fun. Also, please document your journey here. Learning from folks’ successes and failures is how we all grow together.

EDIT: Also, I’ve seen quite a few folks (quite successfully!) cut their teeth on home labbing with crappy old laptops with broken screens.

You make some sacrifices in I/O but you get a “free UPS”.