Hi all,

I need a way to route a domain name to an external IP on an offsite VM, forward that traffic on the offsite VM (via either iptables or ufw) into the OpenVPN server installed there, and carry it over the tunnel to a local pfSense OpenVPN client, which then forwards/NATs it to a Nextcloud VM. The replies, of course, need to go back out the same way.

Thanks for reading.

The Long Story

I have a pfSense router running as a KVM guest, alongside an internal OpenVPN/Samba VM and a Nextcloud VM: 3 VMs on one host with 3 physical adapters. One adapter is dedicated to the pfSense WAN, one is a local bridge for host, VM and network comms, and the 3rd is used for macvtap internal connections in bridge mode instead of VEPA.

The pfSense LAN is on the main host bridge [br0], which provides all network connections for the host, the other VMs and all the computers and devices in the house, so a total of 36 DHCP leases give or take. All the computers, the host and the VMs have no issues talking to each other over the LAN, and all have direct internet access via the pfSense WAN.

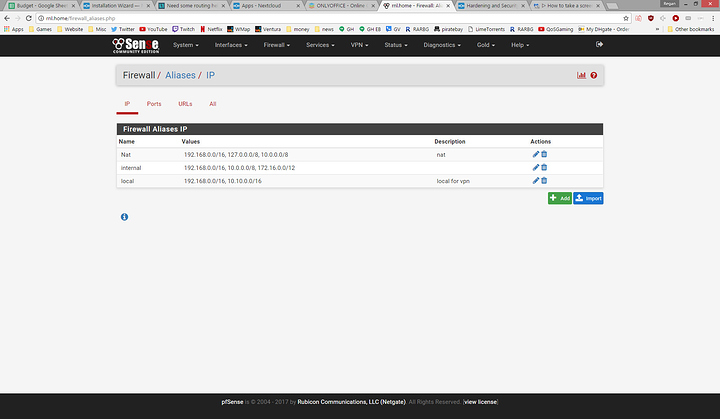

The OpenVPN/Samba NAS VM has 2 vnets: one connected to br0 and the other on a separate subnet/VLAN via macvtap for the internal VPN. The macvtap side accepts internal VPN client connections, then hands the traffic to pfSense and out through a LiquidVPN client connection on pfSense. The br0 connection can also reach the internal VPN and serves the Samba NAS. There may be an easier way of doing things, but the one sure thing is that when I am connected to my internal VPN, the traffic either goes out via the LiquidVPN connection or it doesn't go anywhere. So I can choose where I want my traffic to go, out via the WAN or via LiquidVPN, as can all other users/devices in the house.

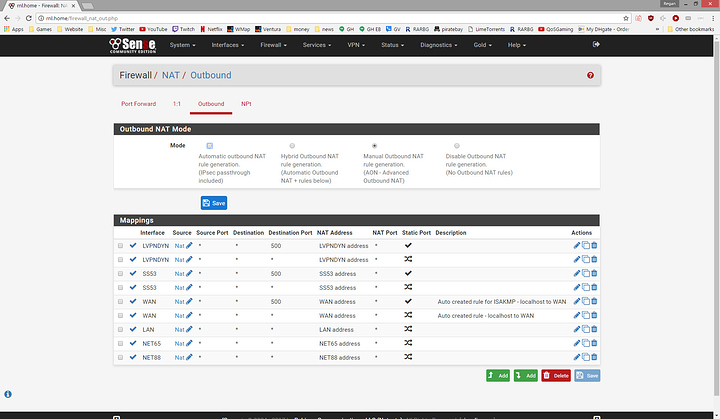

The last VM is a Nextcloud install with Apache and some other stuff. This is where the routing becomes a problem that I have not been able to solve. I have an offsite bare-metal server that I want to use as an access point from the world back into Nextcloud for file sharing and other cloud-type services. I do not want to use my WAN IP or my LiquidVPN IPs to bring outside traffic into Nextcloud, so I have set up a VM on the offsite server with 2 IPs and connected it to pfSense via OpenVPN. The offsite VM is the OpenVPN server and pfSense is the OpenVPN client. The Nextcloud VM has 2 vnets: one on the common br0 and the other on a macvtap subnet/VLAN set up in pfSense.

I have internet from the Nextcloud VM out to the world, but I have not been successful in getting TCP 80/443 traffic from the outside world back into the Nextcloud VM. On the offsite VM, ufw has POSTROUTING NAT for the VPN IP range out through one of the vnets [ens3 on the offsite VM]. I have allowed forwarding from both IPs and both adapters to tun0, I have set up POSTROUTING NAT for both IPs to tun0, and I have tried nearly every other combination I can think of, but I still cannot get traffic back to pfSense over the OpenVPN connection.
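To make the question concrete, here is a minimal sketch of the kind of rule set the offsite VM would typically need for inbound 80/443: a PREROUTING DNAT (which the rules described above do not mention), matching FORWARD rules, and a POSTROUTING masquerade on tun0. The interface names ens3 and tun0 come from the setup above; the public IP `203.0.113.10`, the tunnel IP `10.8.0.2` for the pfSense client, and the names `PUB_IP`, `TARGET_IP` and `emit_rules` are placeholders assumed for illustration, not values from this setup. The script only prints the commands so they can be reviewed first.

```shell
#!/bin/sh
# Dry run: prints the commands instead of applying them.
PUB_IF="ens3"           # public-facing interface on the offsite vm (from the post)
VPN_IF="tun0"           # openvpn server tunnel interface (from the post)
PUB_IP="203.0.113.10"   # ASSUMPTION: placeholder for the ip the domain resolves to
TARGET_IP="10.8.0.2"    # ASSUMPTION: placeholder tunnel ip of the pfsense client

# Prints, in order:
#   1. enable kernel forwarding between ens3 and tun0
#   2. DNAT inbound tcp 80/443 on the public ip to the tunnel address
#   3. allow the DNATed connections to be forwarded into the tunnel
#   4. allow the replies back out of the tunnel
#   5. masquerade on tun0 so replies return through the tunnel
emit_rules() {
    cat <<EOF
sysctl -w net.ipv4.ip_forward=1
iptables -t nat -A PREROUTING -i $PUB_IF -d $PUB_IP -p tcp -m multiport --dports 80,443 -j DNAT --to-destination $TARGET_IP
iptables -A FORWARD -i $PUB_IF -o $VPN_IF -d $TARGET_IP -p tcp -m multiport --dports 80,443 -j ACCEPT
iptables -A FORWARD -i $VPN_IF -o $PUB_IF -m conntrack --ctstate ESTABLISHED,RELATED -j ACCEPT
iptables -t nat -A POSTROUTING -o $VPN_IF -d $TARGET_IP -p tcp -m multiport --dports 80,443 -j MASQUERADE
EOF
}

emit_rules
```

Piping the output to `sudo sh` would apply the rules. With ufw, the nat rules would normally go in a `*nat` section of `/etc/ufw/before.rules`, with `DEFAULT_FORWARD_POLICY` in `/etc/default/ufw` controlling forwarding. The masquerade makes every request appear to come from the offsite VM's tunnel address, so Nextcloud would not see real client IPs; leaving it out means pfSense has to send the replies back through the tunnel itself rather than out its WAN. This sketch also assumes pfSense has a port forward and pass rule on its OpenVPN interface sending 80/443 on to the Nextcloud VM.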