Fedora small-office, home-office (SOHO) server

This thread will be long and hopefully full of sources and easy to read.

There is no reason to be intimidated: this will let you turn your computer into a reliable host. You can replace this whole thing by buying a Synology. If you are a power user, this will be cheaper and will give you more practice in managing systems. It will not make you knowledgeable outside of SOHO. Be nice to me, English is my second language.


Motivation

This is my thread on setting up a new home server. We got a significant power upgrade (Skylake), so it will be ready for anything Level1 releases on the channel. @wendell has announced upcoming de-google videos and I need to clear out my old CentOS 7 system anyway.

Thread statement

My quick draft for VFIO and Plex was quite useful for people so I will introduce those here for a clean install. This will include interesting points from the channel and recommendations from others.

Rolling distro for a server? Have you gone mad?

I strongly disagree with the RHEL 8 policy of removing support for perfectly functional SATA3 RAID/HBA cards, so I moved to Fedora for simple integration of new ideas. My first choice was Debian, but that would not fit L1Forums.

Current concepts:

- VFIO enabled

- Docker for playthings

- SnapRAID, Software-RAID, LVM

- Subliminal for subtitles

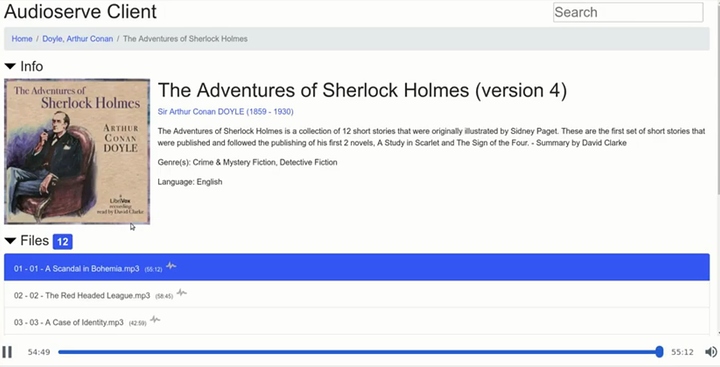

- Plex

- WireGuard for my family

- Syncthing / Rsync daemon

Controversial points:

Why not just install FreeNAS or UNRAID?

The basic idea of Linux and sysadmin work all across the globe:

Layers of simplicity - each task can be incredibly complicated, but provides just a simple outcome.

While this setup can take longer, your system is likely to survive upgrades and distro switching. It will also give you an idea of why we do the things we do.

LVM or not

I highly recommend LVM for users who are not regular sysadmins. It does have caveats, but they are not even remotely as bad as those of ZFS or BTRFS.

Users with rigid systems may prefer to skip even LVM and run just regular ext4 or XFS.

- The benefits are: scalability, snapshots, and containing projects in logical volumes.

- A smaller benefit is drive flexibility: LVM offers software RAID that works with mismatched drives and unusual scaling. Synology uses the same idea.

- The main problem is backup: you need to back up the LVM metadata on a regular basis. This data is necessary for partial or full recovery. The system does make these backups by itself; it is just a matter of configuration.
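
A rough sketch of the metadata backup mentioned above. LVM normally keeps a copy of each volume group's metadata under /etc/lvm/backup (controlled by the backup section of lvm.conf), and vgcfgbackup can write an extra copy somewhere else, ideally on a different disk. The destination path and the DEST and DRY_RUN variables are my own assumptions for illustration, not something from this setup. The script only prints the commands unless you set DRY_RUN=0 and run it as root.

```shell
#!/bin/sh
# Sketch: dump LVM metadata for all volume groups into a dated directory.
# DEST and DRY_RUN are hypothetical names chosen for this example.
DEST="${DEST:-/var/backups/lvm}"
DRY_RUN="${DRY_RUN:-1}"

# Print the command in dry-run mode, execute it otherwise.
run() {
    if [ "$DRY_RUN" = "1" ]; then
        printf '%s\n' "would run: $*"
    else
        "$@"
    fi
}

# One directory per day, e.g. /var/backups/lvm/2024-01-31
target="$DEST/$(date +%F)"

run mkdir -p "$target"
# With no VG named, all volume groups are backed up;
# LVM replaces %s in the file name with each VG name.
run vgcfgbackup -f "$target/%s.vg"
```

A file written this way can later be fed to vgcfgrestore with its -f option and the volume group name. Dropping the script into a daily cron job or systemd timer would cover the "regular basis" part.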

Design: Logical and physical devices

My idea for a home server is to have two sets of data: Simple and Robust.

Then you can add any process-specific or device-specific sets of data.

- Simple: Regular filesystem (XFS for me), no redundancy, semi-cold-storage.

This means that the data can be stored slowly and accessed mainly for reading. This is perfect for your home-video archive.

- Robust: Redundancy is key, support for snapshots is a plus, flexibility.

This is for your VMs, containers, personal data, your girlfriend's cloud.

- Example of a device-specific data pool: SSD cache for transcode

- Example of a process-specific data pool: mdadm design for devices of mismatched speed. Ingest of photo and video media to a fast SSD with a slower HDD backup.
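
One possible reading of the mdadm example above, as a sketch only: a RAID1 mirror where the slow HDD is flagged write-mostly, so reads are served from the SSD whenever possible while the HDD still receives every write. The device names and the FAST_DEV, SLOW_DEV, MD_DEV and DRY_RUN variables are placeholders I made up, not the actual layout of this server. Creating an array destroys existing data on the member devices, so the script only prints the command unless DRY_RUN=0.

```shell
#!/bin/sh
# Sketch: RAID1 across a fast SSD and a slow HDD of mismatched speed.
# All device names below are hypothetical placeholders.
FAST_DEV="${FAST_DEV:-/dev/nvme0n1p1}"
SLOW_DEV="${SLOW_DEV:-/dev/sdb1}"
MD_DEV="${MD_DEV:-/dev/md0}"
DRY_RUN="${DRY_RUN:-1}"

# Print the command in dry-run mode, execute it otherwise.
run() {
    if [ "$DRY_RUN" = "1" ]; then
        printf '%s\n' "would run: $*"
    else
        "$@"
    fi
}

# Devices listed after --write-mostly are avoided for reads (RAID1 only),
# so the HDD acts mainly as a mirror copy of the SSD.
run mdadm --create "$MD_DEV" --level=1 --raid-devices=2 \
    "$FAST_DEV" --write-mostly "$SLOW_DEV"
```

Keep in mind a mirror is redundancy, not a backup in the strict sense: a deleted file is deleted on both devices.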