So, how did this come about?

Well, we were using a 1950X with 128GB RAM at work as a build server, to speed up our local builds, before sending stuff to the BCI, as everybody uses laptops. It served one team, then two, then 3, 4…

Then it started crashing. And we were all at home. And I was 300km away, so I had help with the kids. Sometimes it crashed when security had already left and no one had a key to the server room.

Then they took out half the DIMMs because something was failing, and with 64GB we no longer had enough RAM to compile at -j32 (we had to max out at -j24).

And then I started ordering an Epyc server, except that now, in a biggish company, it was no longer a matter of picking up the phone and talking to the supplier, but rather a long chain of emails, taking more than a month to complete the order.

And we got ripped off pretty badly, being asked 5400€ for something whose equivalent Supermicro parts I could get on the Internet for 3300€. So, I gave up on the 7502P, then on the 7402P, and settled for the 7302P. Welcome to Portugal!

And then it was supposed to take 2 weeks to deliver, but it actually took 4, I guess because of COVID.

So, I decided I wanted one for myself. This Threadripper is all I ever wanted!

All that I could never get in the 90s, when I used to read about the MIPSes and the Alphas and multi-processor programming. I remember writing a multithreaded program in college, then testing it on a big SPARC and watching it crash immediately because of a race condition which wouldn’t happen on a single CPU. Then I built a dual Pentium III in 1999 with a Supermicro case and motherboard.

Then for some time we had “multi” cores to play on, which allowed for some testing, but they were never the real thing. How could you plot your speedup over multiple cores if you only had 2-4? Or your multi-processor efficiency? Or observe super-linear speedups?
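For anyone who hasn't plotted these before, here is a minimal sketch of the two numbers I mean: speedup is the single-core time divided by the time on n cores, and efficiency is that speedup divided by n. The timings below are made up for illustration (not measurements from this machine), and the function names are just my own.

```python
def speedup(t_serial, t_parallel):
    """Speedup S(n) = T(1) / T(n)."""
    return t_serial / t_parallel


def efficiency(t_serial, t_parallel, cores):
    """Efficiency E(n) = S(n) / n; 1.0 means perfect linear scaling."""
    return speedup(t_serial, t_parallel) / cores


def scaling_table(timings):
    """Return (cores, speedup, efficiency, superlinear) rows.

    `timings` maps core count -> wall-clock seconds and must include 1.
    A row is flagged superlinear when its speedup exceeds its core count.
    """
    t1 = timings[1]
    rows = []
    for cores, t in sorted(timings.items()):
        s = speedup(t1, t)
        rows.append((cores, s, efficiency(t1, t, cores), s > cores))
    return rows


if __name__ == "__main__":
    # Hypothetical build times in seconds, invented for this example.
    timings = {1: 240.0, 2: 124.0, 4: 58.0, 8: 31.0, 16: 17.0, 24: 12.5}
    for cores, s, e, superlinear in scaling_table(timings):
        flag = " (super-linear)" if superlinear else ""
        print(f"{cores:>2} cores: speedup {s:5.2f}, efficiency {e:4.2f}{flag}")
```

With only 2-4 cores such a table has two or three rows; with 24 cores you finally get a curve worth plotting.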

Then I started learning about auto-parallelizing compilers, and Xeon Phi came along. We could test some ideas on 32 very lousy cores, but I would never buy one of those things for myself.

This is the real thing. Threadripper is the first accessible real multi-processor.

Worn out by the 1950X experience (but it had nvidia drivers, vmware kernel modules…), I thought about building an Epyc with a Supermicro motherboard. By chance I found Level1Techs videos on YouTube: “hey, people actually do this, it actually makes sense”, reliability over clock speeds, ECC memory, etc. Even Supermicro chassis seemed better to me at the time. Then I saw there were videos about ZFS, which even mentioned FreeBSD in passing, and I thought “I’m not alone in the world!”

But then, I would only receive Supermicro’s H12 motherboard in October, and I couldn’t wait. Then I also found Gamers Nexus, and came to find that Supermicro’s chassis layouts are a bit outdated and old-fashioned (I had to let go of the hot-swappable bays…). So I went for the 3960X.

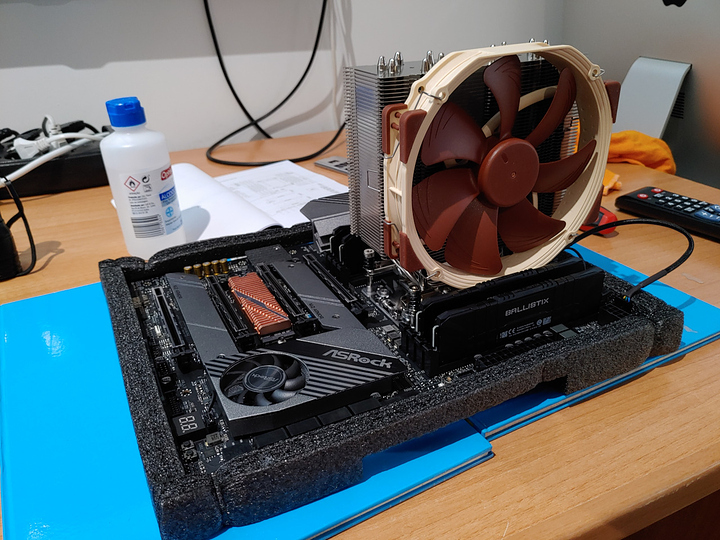

The motherboard was an easy choice. How could it be that the cheapest motherboard I could find in my local store had 10GbE and the more expensive ones didn’t? How could they justify those high prices? It had to be the ASRock Creator.

Nothing was easy, though. I got the CPU and memory in two days. I could buy the memory directly from a global Crucial store cheaply (the first time I have purchased something and felt I was on almost the same footing as an American buyer).

The motherboard was out of stock at ASRock Portugal. I managed to find the last one in stock at a retailer, and switched my order.

The PSU wasn’t easy. I tried ordering a Seasonic but they couldn’t give me a delivery date. I couldn’t find an efficient and powerful PSU below 200€. I bought an FSP 1200 for 219€, but was unimpressed: it appeared to be a 2014 model… Then I found out it had lots of cables missing and returned it. After repeating my searches many times I found a less efficient Gold 1000W, a Phanteks Revolt Pro, for only 160€.

First I ordered an NZXT X63 AIO. Then I saw the GN video showing that only the Enermax was better than the Noctua U14S on the Threadripper, and switched my order to the Enermax. Then I found the other videos about Enermax and switched to the Noctua…

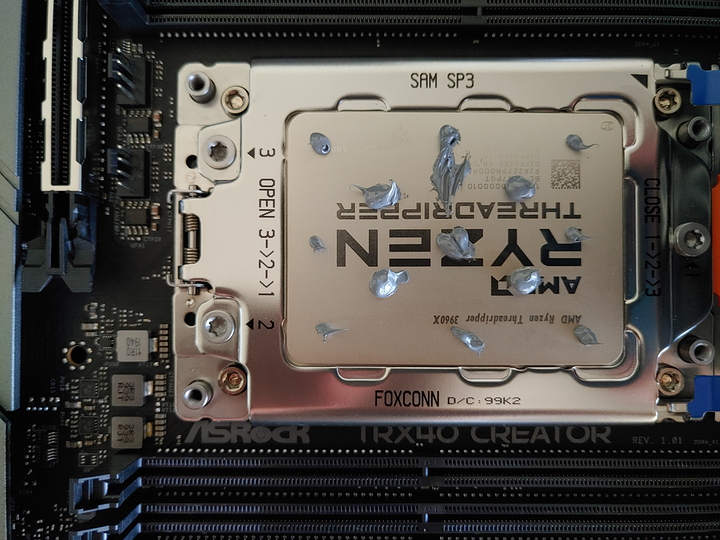

When the motherboard finally came (the delivery was delayed!), I mounted the CPU on the board, applied the paste, and when I tried to screw the cooler down, it wouldn’t screw. It turned out that one of the screws had no thread!

I left home again and went to several stores, and what’s more, the cooler was out of stock at the distributor too! Fortunately, the last store I visited, the one closest to home, just at closing time, had one Noctua U14S TR4… and I could finish my initial build on September 14th.

To be continued with practical usage results, and airflow improvements…

Also requesting advice for improvement… it seemed better to me to use the Gigabyte NVMe’s own heatsink…

Total cost: CPU+memory=2k, chassis+PSU+NVMe+cooler=1k, total 3k

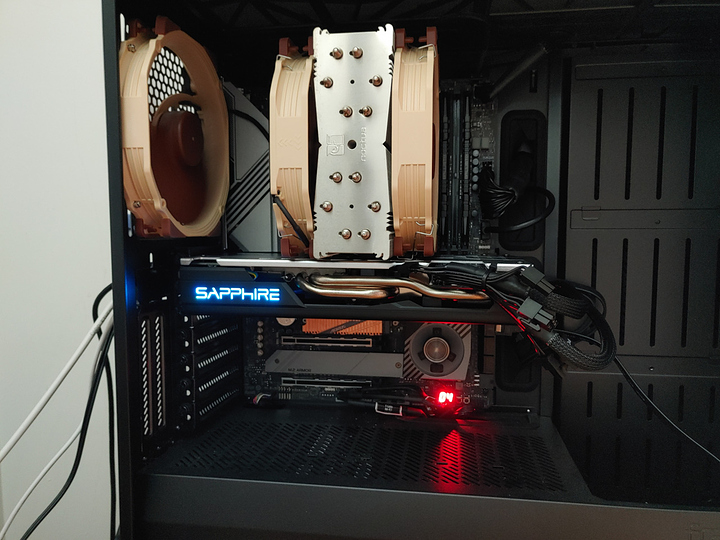

No GPU yet! I was waiting for a 3080 or 3070, then maybe a Biggish Navi, now I don’t know any longer… it’s using a spare low-profile Sapphire R7 250 with a fan that is whining…