I see now, that's actually pretty cool. TY :)

I am thinking about getting an MI25 - I used to own a few VEGA 64 LC models and simply loved the HBM and FP16 compute capabilities back then (I still have a Fury X with HBM lying around).

Since I currently have a 3060 12GB and an Arc A770 16GB in the system - I was wondering what the speed difference to an MI25 would look like. But it's not easy to find comparable numbers that are reliable… There are a lot of tests done individually, but almost every test uses different parameters or frameworks, sometimes it's a DirectML implementation… And the results are all over the place.

What I found so far is that Arc is not fully supported, so there is still room for improvement - but Nvidia is well supported for Stable Diffusion, and RTX cards with tensor cores easily outperform VEGA architecture cards in other inference tasks. I had this experience with upscaling and video interpolation inference - as long as the tensor cores were not in play, the VEGA crushed a lot of mid-range RTX 20 and 30 series cards - but as soon as development progressed and the backends fully supported the tensor cores, the VEGAs fell behind.

Did anybody benchmark the MI25 against a 3060?

RTX series cards have tensor cores for acceleration, so even without doing the benchmarking it's already the winner. The cycle difference is night and day, basically.

Also, you’re correct about the software support on Intel, and if you haven’t seen the benchmarks, this is a good point-in-time measurement.

Thanx,

I figured that RTX Cards would be ahead. I am wondering what the price/performance ratio would be:

Comparing one used RTX 3060 vs two MI25s for around the same price - which one would come out ahead in Stable Diffusion?

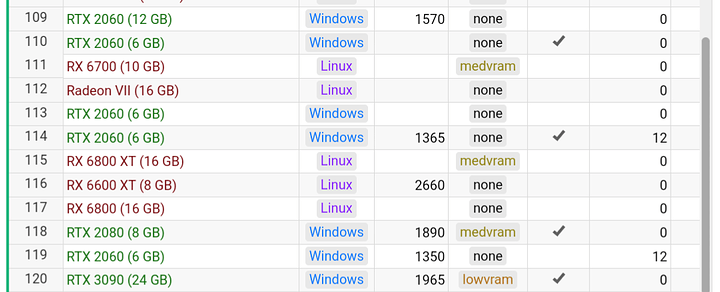

Have a review of the numbers here

Thanx for replying.

That's what I was referring to… These benchmarks only show numbers for the VEGA architecture. Oddly, the FP16 numbers on the MI25 are slower than the FP32 ones - VEGA 10 has Rapid Packed Math, so the FP16 numbers should not only show lower VRAM usage (which they do), but also give faster runtimes (which they don't).

The comparison then shows an MI210 and continues to an A6000 with no numbers in the chart.

That's why I wrote that I had a hard time finding reliable benchmark numbers. Of course I read a whole bunch of Reddit posts (most of them referring to this post and the Tom's Hardware bench) and the Tom's Hardware article/bench (which lacks Vega 64 numbers and uses lower batch sizes)…

Bottom line: if an RTX 3060 comes out clearly ahead of the MI25 and 12GB is enough for most cases - why bother with the MI25 (aside from the 16GB, which might help in some cases, and the fact that it's a fun project)?

I love these kinds of projects. I had several M40s, K10, K80, S7150, S9xx… and always had a lot of fun turning them into something useful, 3D printing fan shrouds, modding BIOSes…

But here I am a little puzzled - the numbers I can gather from the web seem to indicate that an RTX 3060 is way ahead of an MI25… BUT: the numbers are all from different scenarios - one was using smaller resolutions, the next one ran Linux, etc…

So to clear this up - I was looking for a clear, reliable benchmark comparing the 3060 to an MI25 in this scenario (other benchmarks are not comparable - I know that the pure shader performance of a VEGA10 chip in FP16 scalar shader loads is ahead of an RTX 3060)…

Various user submitted benchmarks here

And also here

Thank you, this is very helpful.

From the data provided in these charts, a 3060 12GB outperforms even a Radeon VII by a factor of 2 to 4, depending on the settings/resolution/model etc…

Unless I am missing some performance tweaks for the VEGA10 architecture that didn't find their way into these benchmark charts, it seems to me that a 3060 is the better bang for the buck… Or is there some hidden potential in the MI25s?

Don't get me wrong, I love projects like these and I loved my VEGA64 back then - but if I look at the performance of a used 150$ RTX 2060 12GB or 200$ RTX 3060 - two VEGA 16GB HBM cards don't seem to be able to catch up to this performance…

Some people report creating higher resolutions on M40 24GB models - slow, but the huge amount of VRAM there has its benefits. 12GB vs 16GB does not seem to make much of a difference…

In the charts, I spot some measurements for the A770 16GB, which seems on par with the RTX 3060…

Not sure how reliable these numbers are, one chart even states that some numbers could be incorrect (bad user reporting…)…

Hm… Unless I am missing something - it seems 200 bucks are better invested in a 3060… (Of course I would be missing out on the fun of tinkering with the MI25 …)
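To put the bang-for-the-buck question into numbers, here is a rough sketch of the break-even math. The function names are made up for illustration, and the only inputs are the prices quoted in this thread (about 200$ for a used 3060, 75$ to 100$ for an MI25). No measured speeds are assumed.

```python
def perf_per_dollar(iterations_per_second: float, price_usd: float) -> float:
    """Throughput bought per dollar; higher is better."""
    if price_usd <= 0:
        raise ValueError("price must be positive")
    return iterations_per_second / price_usd


def breakeven_speed_ratio(price_a_usd: float, price_b_usd: float) -> float:
    """How many times faster card A must be than card B to tie on perf per dollar."""
    if price_a_usd <= 0 or price_b_usd <= 0:
        raise ValueError("prices must be positive")
    return price_a_usd / price_b_usd


# Prices as quoted in this thread, not current market data.
PRICE_3060_USD = 200
for mi25_price_usd in (75, 100):
    ratio = breakeven_speed_ratio(PRICE_3060_USD, mi25_price_usd)
    print(f"MI25 at {mi25_price_usd}$: the 3060 has to be {ratio:.2f}x faster to tie")
```

With those prices the break-even lands between 2x and roughly 2.7x, so whether a single 3060 wins on perf/dollar depends on where the real speed gap falls, and that is exactly the number that is hard to pin down from the charts.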

I wouldn’t put too much stock in something that says a 3090 would lose to a 2060

You would have to use the EXACT same sampler, model, and positive and negative prompts for results to be comparable
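One way to enforce that when collecting results is to only compare runs whose settings match field for field. This is just a sketch: `RunSettings` and `group_comparable` are hypothetical names, and I added steps, resolution and batch size to the list because those also vary between the charts people post.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class RunSettings:
    """Everything that has to match before two results can be compared."""
    model: str
    sampler: str
    steps: int
    width: int
    height: int
    batch_size: int
    prompt: str
    negative_prompt: str


def group_comparable(results):
    """Group (gpu, settings, it_per_s) tuples by identical settings.

    Only settings that were run on at least two different GPUs are kept,
    because a single result has nothing to be compared against.
    """
    groups = defaultdict(list)
    for gpu, settings, it_per_s in results:
        groups[settings].append((gpu, it_per_s))
    return {
        settings: runs
        for settings, runs in groups.items()
        if len({gpu for gpu, _ in runs}) >= 2
    }
```

Because the dataclass is frozen it is hashable, so two runs only end up in the same group if every single field is equal. A result with a different sampler or a slightly different prompt simply falls into its own group and gets dropped.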

From my experience the MI25 with the WX9100 BIOS is about half as performant as a 2080 Ti at 2 GHz core

There are weird bugs in PyTorch where the AMD cards don’t get the full benefit from FP16

Don’t know what the deal is
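If someone wants to check on their own card whether FP16 actually buys anything, a small timing harness like the one below is enough. It is a generic sketch using only the standard library: `median_seconds` and `fp16_speedup` are hypothetical helpers, and you have to supply your own FP32 and FP16 workloads as callables.

```python
import statistics
import time


def median_seconds(workload, warmup=2, repeats=5):
    """Median wall-clock time of workload() after a few warm-up calls.

    For GPU work the callable must block until the device has finished
    (in PyTorch, for example, by calling torch.cuda.synchronize() at the
    end), otherwise only the kernel launch is timed.
    """
    for _ in range(warmup):
        workload()
    samples = []
    for _ in range(repeats):
        start = time.perf_counter()
        workload()
        samples.append(time.perf_counter() - start)
    return statistics.median(samples)


def fp16_speedup(fp32_seconds, fp16_seconds):
    """Values above 1.0 mean FP16 was faster, below 1.0 mean it was slower."""
    if fp32_seconds <= 0 or fp16_seconds <= 0:
        raise ValueError("timings must be positive")
    return fp32_seconds / fp16_seconds
```

Run the same generation once per precision through `median_seconds` and feed both numbers into `fp16_speedup`. A result at or below 1.0 would match what the charts show for the MI25.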

The sad truth is that CUDA is a first-class citizen on these things. And then there is stuff like Xformers · AUTOMATIC1111/stable-diffusion-webui Wiki · GitHub that increases speed and lowers memory usage (at the cost of some seed variation), which is exclusive to Nvidia.

AMD, Intel (and even Apple) are catching up by sending patches to PyTorch. To be honest, I’m not a big fan of the whole translation layer thing that is ROCm. Something like GitHub - nod-ai/SHARK: SHARK - High Performance Machine Learning Distribution, which seems to work on top of Vulkan, might be a cleaner approach.

Number 120 has set --lowvram in webui-user.bat, as you can see in the fourth column. Whatever the reason he did this (training maybe?), it capped the performance.

Those are user-submitted results, mostly from 4chan /g/ I reckon. They might have been submitted at different times with different variations.

List of params here: Command Line Arguments and Settings · AUTOMATIC1111/stable-diffusion-webui Wiki · GitHub. lowvram does:

enable stable diffusion model optimizations for sacrificing a lot of speed for very low VRM usage

Which is usually meant for graphics cards with 4GB or less.
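When reading those user-submitted charts, it helps to throw out any row that was run with a memory-saving flag before comparing speeds. A minimal sketch, assuming each row is a dict with an `args` string holding the launch arguments (that layout is my assumption, the actual charts are just spreadsheets):

```python
import shlex

# Flags that trade speed for lower VRAM usage in the webui.
MEMORY_SAVING_FLAGS = {"--lowvram", "--medvram"}


def uses_memory_saving_flag(command_line_args: str) -> bool:
    """True if the launch arguments contain a flag that caps performance."""
    return any(token in MEMORY_SAVING_FLAGS for token in shlex.split(command_line_args))


def drop_capped_rows(rows):
    """Keep only rows that were not run with a memory-saving flag."""
    return [row for row in rows if not uses_memory_saving_flag(row["args"])]
```

That way an entry like number 120 does not drag down the picture for its card.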

If anyone has a 3060, feel free to use my prompts with the same sampler and model and post the results

Similar questions here about perf/dollar compared to a 3060. I’d like to know if it makes sense to pick this up for non-tinkering purposes, especially given that it seems ROCm support for this card is probably going to be actually dropped soon (I think it’s officially dropped but still ‘works’). I don’t mind tinkering around to get it working, but if the 3060 completely eclipses it in performance and efficiency, the extra 4GB doesn’t seem to make it worth it. I was looking for a cheapish GPU with ~16GB of VRAM to run long ML jobs on and ran into the MI25. (I do dev & testing on my laptop with a 3070, which should be approximately equal to a 3060 Ti; I’ll run the benchmark above on it.) If the P100 drops in price a bit more I’d probably opt for that though, since I believe it’s still backed by CUDA, so it pretty much ‘just works’ with everything.

With the WX9100 BIOS you have display output, so you can have yourself Vega 64 gaming perf for 75$

Which is a lot better than RX 580 perf for 90$, you get double the VRAM, and it is way better than an RX 6400

The 75-100$ price is less about being best bang for buck and more of a low cost entry point into AI that isn’t a terrible deal

Is the 48-pack of Mountain Dew a better deal? Probably. But do I want to buy a 48-pack to try the new flavor? Probably not

Yeah, some numbers don't add up there…

Thank you, that's actually a better figure than I was expecting, looking at the charts.

I used VEGAs for compute stuff via DirectML and got double the FP16 numbers compared to FP32… There is a chance this is simply a software issue and will hopefully clear up in the future…

Cooling: Given that it will run at a lower wattage than maximum and that the VRMs on the back get some cooling via airflow - would a blower-style fan like on the Vega 64 reference cards work? Cutting a round hole into the front of the card should be doable for me on the CNC or with a round cutter tool. Is there some space behind the cover? I saw you mounted a radial fan on the back part - did you have to take out some of the cooling fins under the cover, or is there enough room already? As far as I've followed this and other threads, the connector is a 4-pin - so it should be PWM with a sensor. If it's 12V - might this work:

[edit] can't post links… Wanted to link a "BFB0712HF 65mm 12V 1.8A" from AliExpress for around 5 bucks, which is for a GTX 980 Ti…[/edit]

Closing the back would be necessary of course.

It's probably not enough to cool the card at more than a 150 or so watt limit - but I'd probably run it at lower wattage anyway, that's what I did back when I had my VEGA 64 LC cards - the efficiency was so much better when lowering the power target on them, they mostly ran at around 150 watts…

good point

I would definitely put some airflow on the back of the card with any cooling solution

Lots of unknowns when rigging up your own solution

Yes, I read about the airflow on the back and mentioned it above. It's on the todo list

Was your radial blower able to keep up at around 150 to 180 watts?

Could you check whether the onboard 12V fan connector of the MI25 was able to deliver up to 2A for your blower?
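For reference, the arithmetic behind that question, using the label rating of the blower mentioned above (12V, 1.8A). The 2A figure is only the value being asked about, not a known spec of the MI25 fan header, and the helper names are made up.

```python
def fan_power_watts(voltage_v: float, current_a: float) -> float:
    """Electrical power drawn by the fan at its rated current."""
    return voltage_v * current_a


def header_headroom_amps(header_limit_a: float, fan_current_a: float) -> float:
    """Current left over on the header; negative means the fan wants more than the header gives."""
    return header_limit_a - fan_current_a


RATED_VOLTAGE_V = 12.0      # from the fan label quoted in this thread
RATED_CURRENT_A = 1.8       # from the fan label quoted in this thread
ASSUMED_HEADER_LIMIT_A = 2.0  # assumption: the value asked about, not a confirmed spec

print(f"fan power: {fan_power_watts(RATED_VOLTAGE_V, RATED_CURRENT_A):.1f} W")
print(f"headroom: {header_headroom_amps(ASSUMED_HEADER_LIMIT_A, RATED_CURRENT_A):.1f} A")
```

So the blower would pull a bit over 21 W at its rated current, which leaves very little margin if the header really tops out at 2A. Spin-up current can briefly be higher than the running value, so a measurement would be better than the label.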

The big fan was able to keep it cool at 180 watts, but I also liquid metal’d

Unsure if it could deliver 2 amps. I can try my smaller fan that fits enclosed now that it’s liquid metal’d. It does work off of PWM; it’s a Dell OptiPlex fan, rewired to match standard pinouts

Which components on the back do you think need to be kept cool? Would it even matter if I intend on water cooling the GPU?