Greetings all,

I recently got a heavy discount on an MI100 that was being excessed. I am trying, and so far failing, to get it usable for… well, anything.

Before I get into the annoying sob story and beg for assistance:

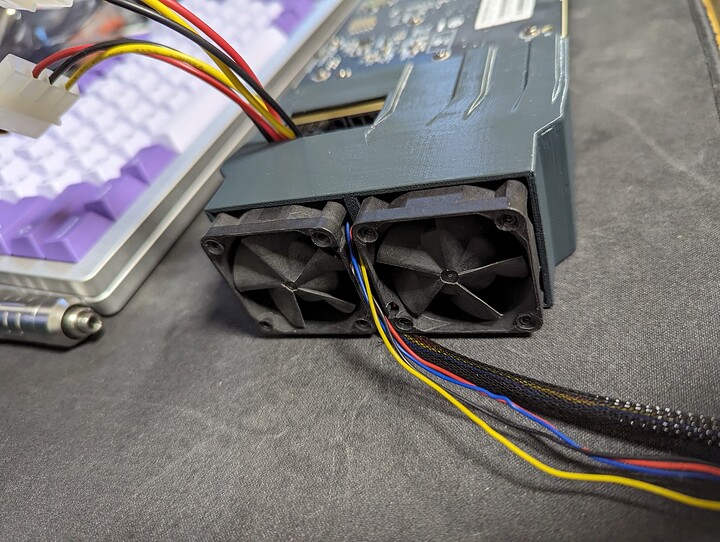

I modeled up and printed a cooling shroud for the unit that uses dual 40mm server fans.

It is also uploaded to Printables.com.

shroudV4.zip (6.6 MB)

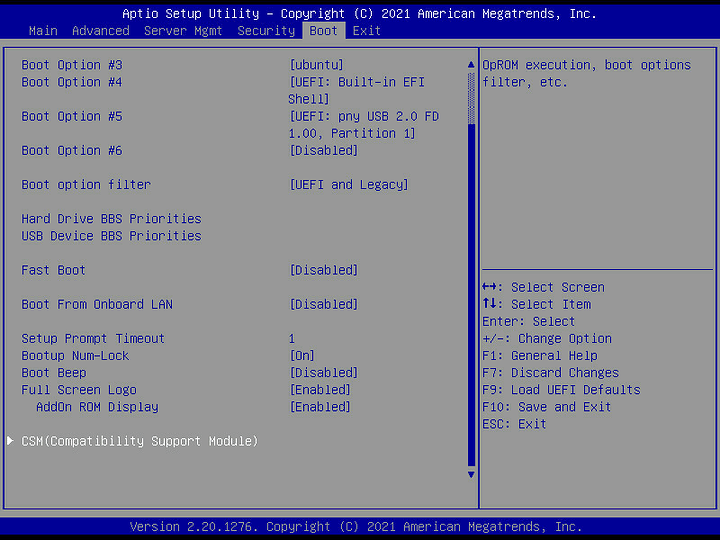

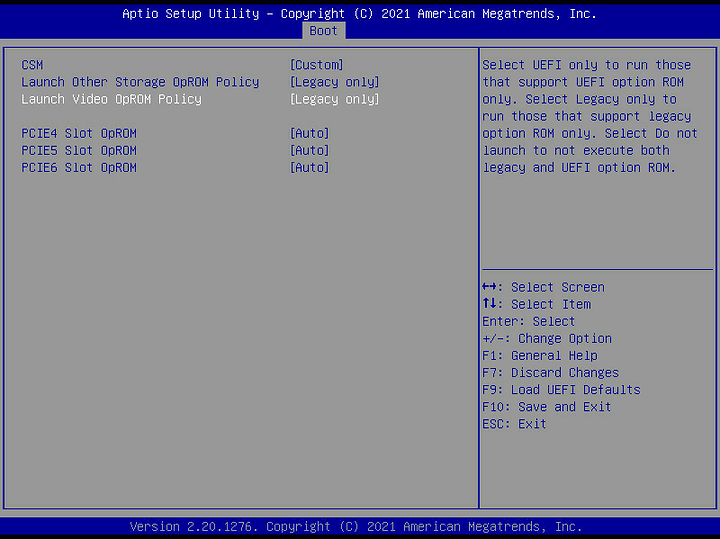

Now, down to business. I am trying to use this card without flashing its vBIOS the way the MI25 thread does.

I am running into a lot of trouble:

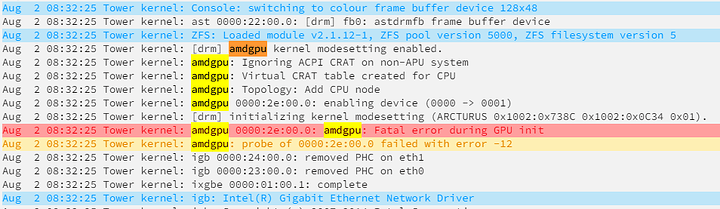

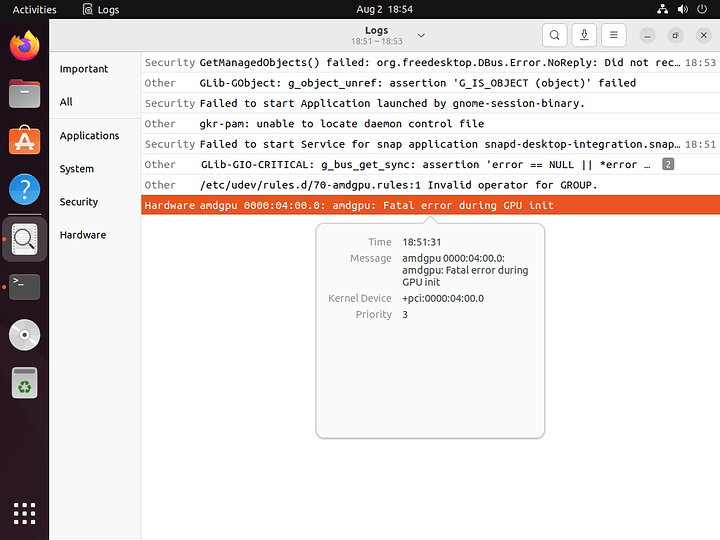

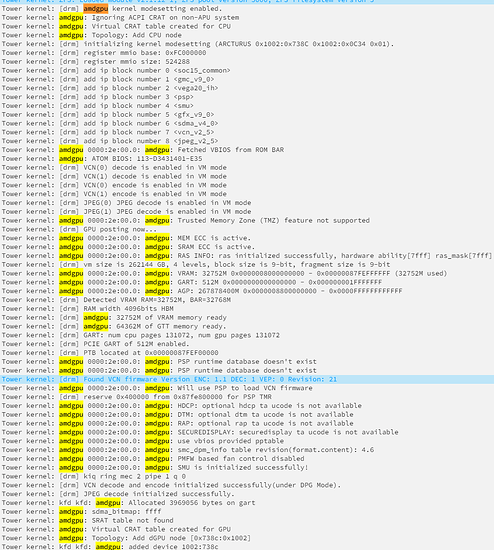

- The amdgpu probe fails to init the card with a fatal -12 error

- Passed through to a VM, init still fails

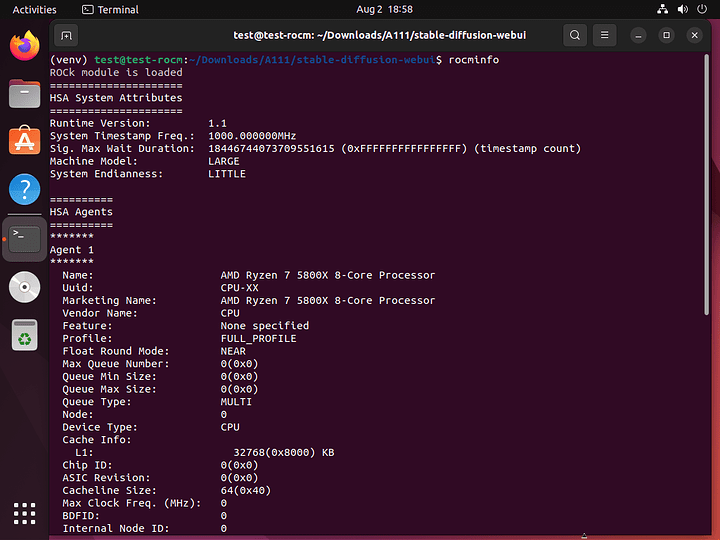

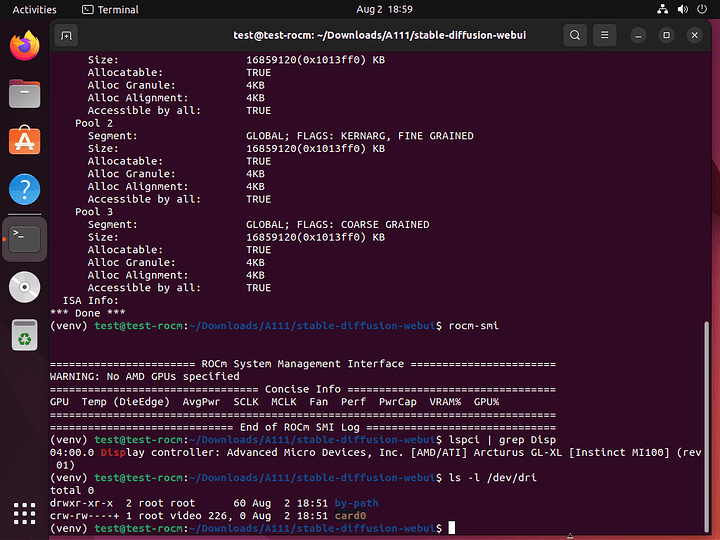

- ROCm installs but does not detect any GPU, let alone any render device

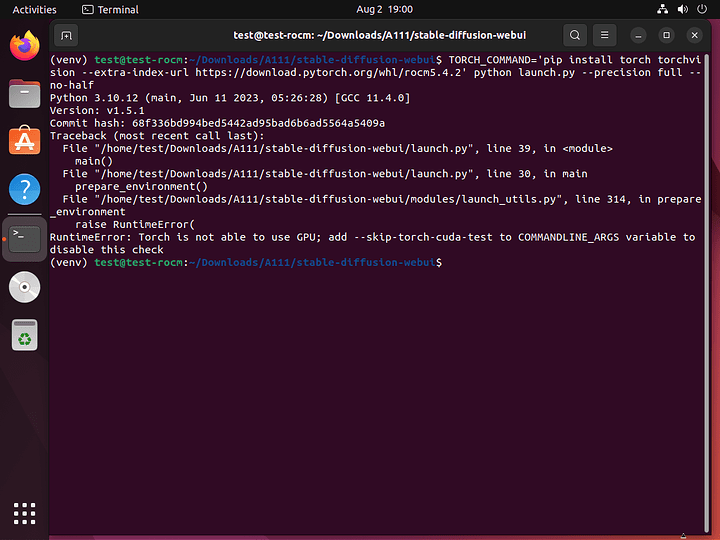

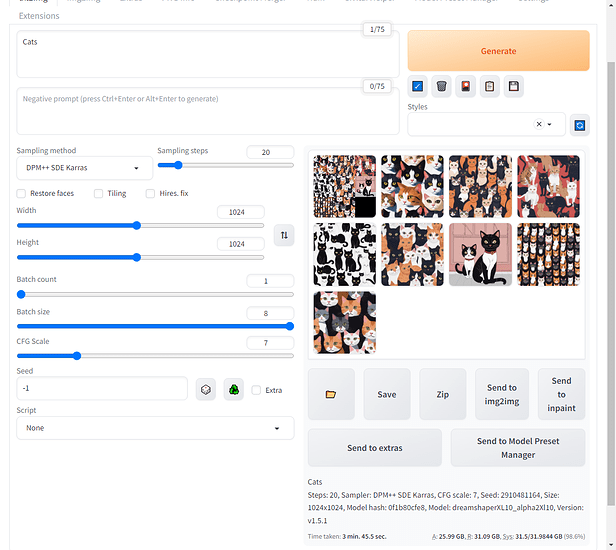

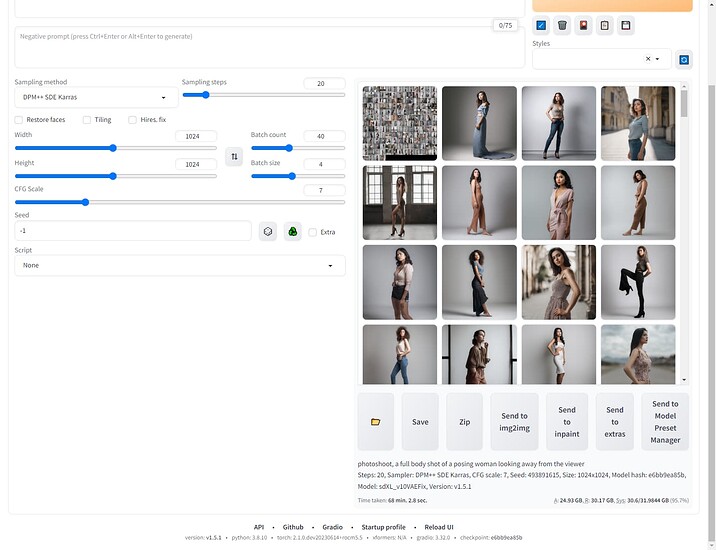

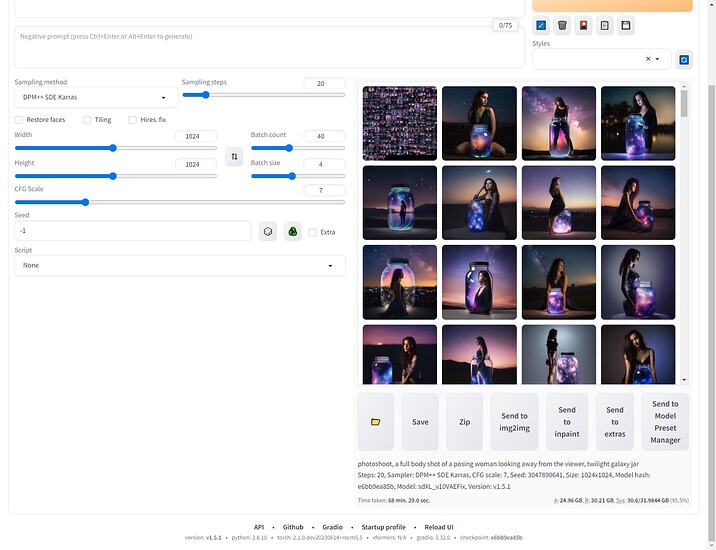

- A1111 (and other PyTorch builds) sees that there is an AMD thing there and installs/builds for ROCm

- Many other misc items (man, I need to change to Proxmox or TrueNAS SCALE)
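For anyone wanting to check the same symptoms on their own box, here is a minimal Python sketch, not something taken from my logs. It assumes the -12 is a negated Linux errno (the usual kernel convention, which makes it ENOMEM) and that ROCm expects the `/dev/kfd` compute node plus at least one `/dev/dri/renderD*` render node. The function names and the `dev_root` parameter are made up for illustration.

```python
import errno
import glob
import os


def describe_kernel_error(code: int) -> str:
    """Translate a kernel-style return code such as -12 into errno name and message."""
    number = abs(code)
    name = errno.errorcode.get(number, "UNKNOWN")
    return f"{name} (errno {number}): {os.strerror(number)}"


def rocm_device_nodes(dev_root: str = "/dev") -> dict:
    """Report whether the device nodes ROCm normally opens are present.

    dev_root is a hypothetical parameter so the check can be pointed
    at a scratch directory when testing.
    """
    kfd_path = os.path.join(dev_root, "kfd")
    render_nodes = sorted(glob.glob(os.path.join(dev_root, "dri", "renderD*")))
    return {"kfd": os.path.exists(kfd_path), "render_nodes": render_nodes}


if __name__ == "__main__":
    print(describe_kernel_error(-12))
    print(rocm_device_nodes())
```

If `kfd` comes back `False` and the render node list is empty, that would line up with the driver never finishing its probe, so nothing downstream (ROCm, PyTorch) has a device to open.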

Here are some interesting snapshots of the errors I am collecting. I can make logs available to anyone who would like them.

Unraid (the base system) just errors out, so I switched to passing the card through to an Ubuntu VM instead.

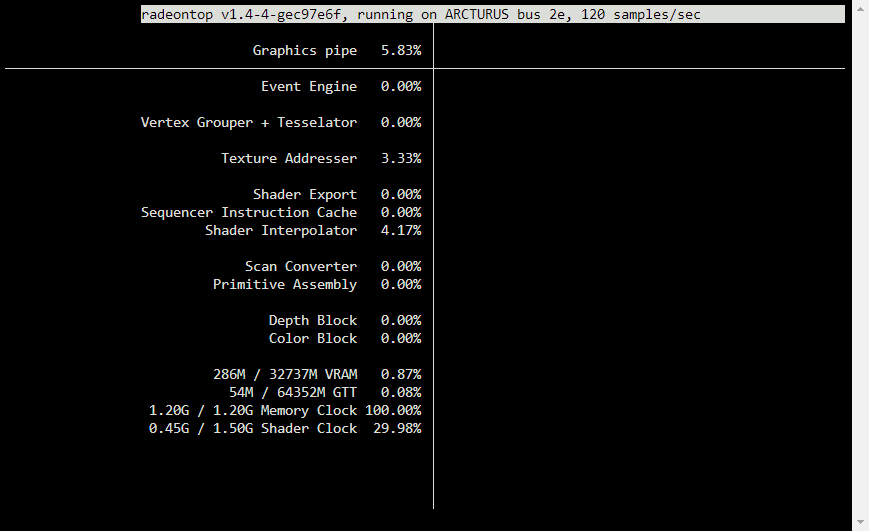

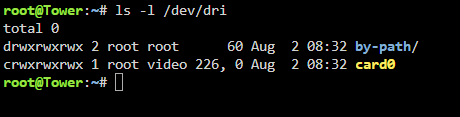

I can see something, though.

Here is where I am at in the VM:

I have tried the last two ROCm builds and neither works. I also thought maybe the kernel was giving me guff, so I tried several other kernels (5.2.4, 6.2.9, 6.4.6) with no luck either.

SO… maybe someone can help me get this working? I am open to trying almost anything, though I am not the codemonkey I once was.
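Since the card moves between the host and the VM, one thing that may be worth comparing across kernels is which driver, if any, is bound to it. The sketch below is only an illustration under assumptions: it reads the standard sysfs PCI layout, filters on AMD's PCI vendor ID `0x1002`, and uses a made-up function name and a `sysfs_root` parameter that exists purely for testing. It lists every AMD PCI function, not only the MI100.

```python
import os

AMD_VENDOR_ID = "0x1002"  # PCI vendor ID assigned to AMD/ATI


def amd_pci_devices(sysfs_root: str = "/sys/bus/pci/devices") -> list:
    """Return (pci_address, bound_driver_or_None) for every AMD PCI function."""
    results = []
    if not os.path.isdir(sysfs_root):
        return results
    for address in sorted(os.listdir(sysfs_root)):
        device_dir = os.path.join(sysfs_root, address)
        try:
            with open(os.path.join(device_dir, "vendor")) as handle:
                vendor = handle.read().strip()
        except OSError:
            continue  # not a readable device entry, skip it
        if vendor != AMD_VENDOR_ID:
            continue
        # The "driver" symlink only exists while a driver is bound.
        driver_link = os.path.join(device_dir, "driver")
        driver = None
        if os.path.islink(driver_link):
            driver = os.path.basename(os.path.realpath(driver_link))
        results.append((address, driver))
    return results


if __name__ == "__main__":
    for address, driver in amd_pci_devices():
        print(address, driver or "no driver bound")
```

On a passthrough host the card would normally show `vfio-pci`, while inside the guest a `None` result would mean amdgpu never stayed bound after the failed probe.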