I thought I’d document this real quick, since I’ve spent a bit of time researching due to a lack of readily available info.

Preface

First things first: this assumes you already have a passing knowledge of passthrough concepts. (If not, you can read my slightly outdated guide, found here.)

For this guide, I’ll be assuming you’re using a 1950X or 2950X, with 2 NUMA nodes. I’m sure this guide can be applied to the 2990WX or other 4-node systems, but I don’t have access to that hardware to test. If someone wants to touch on how to apply that, I’d be happy to chat about it.

Goals

To get the most performance out of the system, it helps to reduce the amount of Infinity Fabric communication, which comes at a latency cost. To do this, we want to tell the VM to use cores, memory and PCI devices that are all attached to the same NUMA node.

Understanding your hardware topology

The first step when setting up a passthrough VM on a multi-node system is understanding where all your hardware shows up. While lspci is helpful for identifying what’s what, it doesn’t show which node your devices and memory are attached to. For that, you need lstopo. In the RHEL world, that’s provided by the hwloc-gui package.

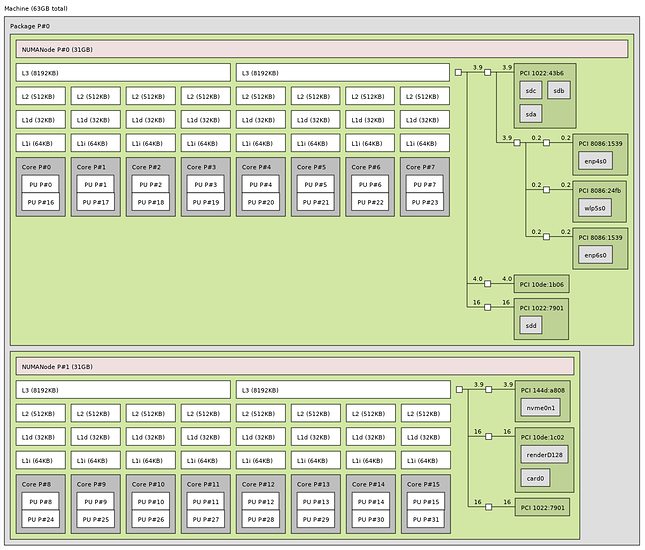

Running lstopo on my system gives me the following:

So let’s talk a bit about understanding the topology diagram we’re looking at.

First things first, we have the NUMANode. Think of this as the die itself. If you were to delid your Threadripper, you’d see 4 of them. Only two of them (on the non-WX SKUs) are active.

From there, you can see the L3 cache. Each CCX (Core Complex, a set of 4 cores on the Zeppelin die) has 8MB of L3 cache, which is how you can identify your CCX. Just below the L3, you have the L2 and L1 caches.

Below all the cache are your cores. Each core has two Processing Units, or PUs. Those are your threads. If you want to pass through both threads of a core, the PU numbers are the ones you need to use when pinning.

Next is memory. Memory is a bit complex because the OS doesn’t show you the individual DIMMs or DRAM modules, it just shows you how much memory is attached to each node. In the pink NUMANode object, you have the amount of memory attached to each node in parentheses. We’ll talk about how to specifically target this memory later.
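If you’d rather cross-check the PU pairs without reading them off the diagram, sysfs exposes the same information. Here’s a rough sketch, assuming the standard `/sys/devices/system/node` layout; the function name and the `SYSFS_NODE_ROOT` override are my own inventions for illustration, not part of any tool.

```shell
#!/bin/sh
# Print each physical core's sibling threads (PUs) for one NUMA node.
# SYSFS_NODE_ROOT is a hypothetical override used only for testing;
# normally leave it unset so the real sysfs tree is read.
node_thread_pairs() {
    node="$1"
    root="${SYSFS_NODE_ROOT:-/sys/devices/system/node}"
    for cpu_dir in "$root/node$node"/cpu[0-9]*; do
        siblings="$cpu_dir/topology/thread_siblings_list"
        # Both threads of a core report the same list, so duplicates are expected.
        [ -r "$siblings" ] && cat "$siblings"
    done | sort -t, -k1,1n -u   # one line per core, ordered by first thread
}

node_thread_pairs 0
```

Each output line is one core’s pair of threads. On a layout like mine I’d expect node 0 to show pairs such as 0,16, but check your own output rather than trusting my numbers.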

On to IO devices, PCI Express. To the right side of the cache and cores, you can see a tree diagram. The nodes on the tree have PCI IDs on them and, if relevant, a device identifier as well. Network devices have an en or wl identifier, storage has an sd or nvme identifier, and video cards that are attached to valid drivers have a renderD identifier. At least that’s how it works on my system.

As you can see by the 10de PCI devices, I have two Nvidia GPUs on my system. The 10de:1c02 GPU is a 1060, the 10de:1b06 GPU is a 1080 Ti. Obviously, we’ll be attaching the 1080 Ti to the VM. It hangs off node 0 in my diagram, so we want to use cores and memory from node 0.
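The kernel also reports which node a PCI device belongs to, which is handy as a sanity check against the diagram. This is a minimal sketch that assumes the usual `vendor`, `device` and `numa_node` files under `/sys/bus/pci/devices`; the function name and the `PCI_SYSFS_ROOT` override are hypothetical.

```shell
#!/bin/sh
# List PCI devices from one vendor together with the NUMA node each reports.
# PCI_SYSFS_ROOT is a hypothetical override used only for testing;
# normally leave it unset so the real sysfs tree is read.
pci_numa_by_vendor() {
    vendor="$1"    # hex vendor ID without the 0x prefix, e.g. 10de for Nvidia
    root="${PCI_SYSFS_ROOT:-/sys/bus/pci/devices}"
    for dev in "$root"/*; do
        [ -r "$dev/vendor" ] || continue
        if [ "$(cat "$dev/vendor")" = "0x$vendor" ]; then
            # Prints: <PCI address> <device ID> node=<NUMA node>
            printf '%s %s node=%s\n' "${dev##*/}" \
                "$(cat "$dev/device")" "$(cat "$dev/numa_node")"
        fi
    done
}

pci_numa_by_vendor 10de
```

A node value of -1 means the platform didn’t report a node for that device, in which case the lstopo diagram is the better guide.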

At first, I thought the numbers next to the lines indicated PCIe lanes, but after doing some investigating, that can’t be right. I’ll do more research and figure this one out. (or if someone knows, holla at a brotha!)

Core configuration

CPU core configuration is pretty simple. All you need to do is configure pinning. I use the following configuration. You may change this configuration to fit your needs:

<vcpu placement='static'>16</vcpu>
<iothreads>4</iothreads>
<cputune>
  <vcpupin vcpu='0' cpuset='0'/>
  <vcpupin vcpu='1' cpuset='16'/>
  <vcpupin vcpu='2' cpuset='1'/>
  <vcpupin vcpu='3' cpuset='17'/>
  <vcpupin vcpu='4' cpuset='2'/>
  <vcpupin vcpu='5' cpuset='18'/>
  <vcpupin vcpu='6' cpuset='3'/>
  <vcpupin vcpu='7' cpuset='19'/>
  <vcpupin vcpu='8' cpuset='4'/>
  <vcpupin vcpu='9' cpuset='20'/>
  <vcpupin vcpu='10' cpuset='5'/>
  <vcpupin vcpu='11' cpuset='21'/>
  <vcpupin vcpu='12' cpuset='6'/>
  <vcpupin vcpu='13' cpuset='22'/>
  <vcpupin vcpu='14' cpuset='7'/>
  <vcpupin vcpu='15' cpuset='23'/>
  <emulatorpin cpuset='0-1,16-17'/>
  <iothreadpin iothread='1' cpuset='2,18'/>
  <iothreadpin iothread='2' cpuset='3,19'/>
  <iothreadpin iothread='3' cpuset='4,20'/>
  <iothreadpin iothread='4' cpuset='5,21'/>
</cputune>

All pretty simple. We pin the vCPUs to physical cores, set up iothreads that share those physical cpus and threads, and tell the emulator to run on specific cores as well. Notice that I’ve set everything to run on threads 0-7 and 16-23? That’s because of the topology above, where it shows threads 0-7 and 16-23 on node 0, which is where my GPU is attached.
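Typing out sixteen vcpupin lines by hand is error-prone, so here’s a small sketch that generates them. It assumes your sibling threads sit at a fixed offset from each other, as they do in my pinning above (core N pairs with N+16); the function name is hypothetical, and you should confirm the offset against your own topology first.

```shell
#!/bin/sh
# Emit <vcpupin> lines that place each core's two threads on consecutive vCPUs.
# Arguments: first host core, number of cores, offset to the sibling thread.
gen_vcpupin() {
    first="$1"; cores="$2"; offset="$3"
    i=0
    while [ "$i" -lt "$cores" ]; do
        # Even vCPU gets the core's first thread, odd vCPU gets its sibling.
        printf "<vcpupin vcpu='%d' cpuset='%d'/>\n" $((2 * i)) $((first + i))
        printf "<vcpupin vcpu='%d' cpuset='%d'/>\n" $((2 * i + 1)) $((first + i + offset))
        i=$((i + 1))
    done
}

# Reproduces the pinning used in this guide: cores 0-7 with siblings 16-23.
gen_vcpupin 0 8 16
```

For the second node on a system laid out like mine, `gen_vcpupin 8 8 16` would presumably be the equivalent call, though I haven’t pinned a VM there myself.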

Memory configuration

Memory configuration was a beast to figure out. If you want to use hugepages, you need to somehow tell the system to allocate those hugepages on the specific NUMA node you want to use. sysctl -w vm.nr_hugepages=8192 has no way to target a specific node, but there’s another way.

/sys/devices/system/node is a sysfs directory that holds your NUMA nodes. Within each node, there’s a hugepages/hugepages-2048kB/nr_hugepages parameter file which you can write a number to.

In my case, I do the following. Replace node0 with whatever node you’re using.

echo 8400 > /sys/devices/system/node/node0/hugepages/hugepages-2048kB/nr_hugepages

Sidenote: I have docker containers running on this system that like to claim approx 150 hugepages for themselves. If you’re running into issues with your VM not starting due to hugepage availability, you might need to add a few more. That’s why I allocate 8400.
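In case the numbers look arbitrary, here’s the arithmetic behind them as a quick sketch. It uses the VM size from this guide (16777216 KiB) and the 2048 kB page size; the function name and the headroom parameter are just my illustration.

```shell
#!/bin/sh
# How many hugepages a VM needs: memory divided by page size (rounded up),
# plus headroom for anything else on the host that grabs hugepages.
hugepages_needed() {
    mem_kib="$1"; page_kib="$2"; headroom="$3"
    echo $(( (mem_kib + page_kib - 1) / page_kib + headroom ))
}

hugepages_needed 16777216 2048 0     # prints 8192: the VM alone
hugepages_needed 16777216 2048 150   # prints 8342: with ~150 pages of headroom
```

I round the second figure up to 8400 to leave a little slack.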

I keep this command in a script under /usr/local/bin, which a systemd unit runs at boot.

Once the pages have been allocated, you can run cat /proc/meminfo | grep Huge to verify that the system has allocated your pages. It should look something like this:
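For reference, a boot script along these lines might look like the sketch below. This is not my exact script; the function name and the `NODE_SYSFS_ROOT` override are hypothetical. The read-back check is there because the kernel can end up reserving fewer pages than requested when it can’t find enough contiguous free memory on that node.

```shell
#!/bin/sh
# Reserve 2 MiB hugepages on one NUMA node and confirm the kernel honoured it.
# NODE_SYSFS_ROOT is a hypothetical override used only for testing;
# normally leave it unset so the real sysfs tree is written.
reserve_node_hugepages() {
    node="$1"; count="$2"
    root="${NODE_SYSFS_ROOT:-/sys/devices/system/node}"
    file="$root/node$node/hugepages/hugepages-2048kB/nr_hugepages"
    [ -w "$file" ] || { echo "cannot write $file" >&2; return 1; }
    echo "$count" > "$file"
    # Read the value back: the kernel may have allocated fewer pages than asked.
    got=$(cat "$file")
    if [ "$got" -lt "$count" ]; then
        echo "only $got of $count hugepages reserved on node $node" >&2
        return 1
    fi
}

# Example (needs root): reserve_node_hugepages 0 8400
```

Running it as early in boot as possible gives it the best chance of finding enough unfragmented memory.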

$ cat /proc/meminfo | grep Huge
AnonHugePages: 2048 kB
ShmemHugePages: 0 kB
HugePages_Total: 8400
HugePages_Free: 177
HugePages_Rsvd: 175
HugePages_Surp: 0
Hugepagesize: 2048 kB
Hugetlb: 17203200 kB
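Keep in mind that /proc/meminfo reports system-wide totals, so it won’t tell you which node the pages landed on. A per-node view can be pulled from the same sysfs directory used for the allocation. Here’s a minimal sketch, assuming the `nr_hugepages` and `free_hugepages` files exist for the 2048kB size; the function name and the `NODE_SYSFS_ROOT` override are hypothetical.

```shell
#!/bin/sh
# Show total and free 2 MiB hugepages for every NUMA node.
# NODE_SYSFS_ROOT is a hypothetical override used only for testing;
# normally leave it unset so the real sysfs tree is read.
node_hugepage_report() {
    root="${NODE_SYSFS_ROOT:-/sys/devices/system/node}"
    for dir in "$root"/node[0-9]*; do
        hp="$dir/hugepages/hugepages-2048kB"
        [ -r "$hp/nr_hugepages" ] || continue
        printf '%s total=%s free=%s\n' "${dir##*/}" \
            "$(cat "$hp/nr_hugepages")" "$(cat "$hp/free_hugepages")"
    done
}

node_hugepage_report
```

If everything went to plan, the node you targeted should hold the full allocation and the other node should hold none.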

Now that you’ve got your memory configuration set up, configure your VM to use hugepages:

<memory unit='KiB'>16777216</memory>
<currentMemory unit='KiB'>16777216</currentMemory>
<memoryBacking>
  <hugepages/>
</memoryBacking>

Once that’s done, you should have a VM that runs everything on the same NUMA node, and you shouldn’t suffer from the latency issues of cross-node communication.

There are many other optimizations that can be done as well. If you’re interested, let me know and I’d be happy to write a guide on those too.