Which BIOS update method do you use?

Good old DOS USB stick as these don’t support BIOS upgrades through IPMI.

There’s a magic file name that will install automatically from a “bootable USB”, but idk if that still means FreeDOS or just a FAT32 partition with the one file on it.

TIL wasn’t aware that was even a thing.

Caused myself some headache by forgetting that ZFS on boot needs some special treatment: I’d happily replaced my boot drives without manually taking care of the EFI partition.

On reboot, after I added the extra memory I’d ordered, the machine didn’t come back up as it was lacking its boot partition.

Whoops…

Thankfully I still had one of the old drives around, so I added it back to the pool and then synced up the new boot drives using the Proxmox instructions. Wasted quite a bit of time but oh well, everything’s back to working now.

Looks like a hostile takeover of the Freenode IRC network is ongoing and most of the sysops quit.

-NickServ(NickServ@services.)- Registered : Dec 24 12:42:44 2002 (18y 21w 4d ago)

Sigh, it was a good run…

After deciding to just throw everything in Docker Compose rather than k3s I got kind of stuck on how I wanted to handle Docker volumes.

I don’t want them in /var/lib/docker/volumes; as a matter of fact, I don’t want them on that system at all. Looking into “simple” options didn’t really leave a lot of choice: either move that directory onto NFS (strongly discouraged) or use iSCSI, and iSCSI kinda sucks for restoring data from snapshots (not to mention potential issues with whatever filesystem is on the iSCSI “disk”).

The other option seems to be to just create an NFS export for every volume, so that’s what I ended up doing.

E.g. for diun (which is a pretty nifty tool to notify of Docker image updates):

    version: '3.5'

    services:
      diun:
        image: crazymax/diun:latest
        container_name: diun
        restart: unless-stopped
        volumes:
          - diun-data:/data
          - "/var/run/docker.sock:/var/run/docker.sock"
        environment:
          - "TZ=Europe/Brussels"
          - "LOG_LEVEL=info"
          - "LOG_JSON=false"
          - "DIUN_WATCH_WORKERS=20"
          - "DIUN_WATCH_SCHEDULE=0 */6 * * *"
          - "DIUN_PROVIDERS_DOCKER=true"
          - "DIUN_NOTIF_MAIL_HOST="
          - "DIUN_NOTIF_MAIL_PORT=587"
          - "DIUN_NOTIF_MAIL_USERNAME="
          - "DIUN_NOTIF_MAIL_PASSWORD="
          - "DIUN_NOTIF_MAIL_FROM="
          - "DIUN_NOTIF_MAIL_TO="
        labels:
          - "diun.enable=true"
          - "diun.watch_repo=true"

    volumes:
      diun-data:
        driver_opts:
          type: "nfs"
          o: "addr=192.168.1.152,nolock,soft,rw,rdma,port=20049"
          device: ":/mnt/docker/volumes/diun"
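Since every volume ends up with the same NFS stanza, it could be generated rather than copy-pasted. A minimal Python sketch, assuming the server address, mount options and export root from the compose file above; the helper name `render_nfs_volumes` and the mapping of volume name to export directory are made up for illustration:

```python
# Values copied from the compose file above; adjust for your own NAS.
NFS_SERVER = "192.168.1.152"
NFS_OPTIONS = "nolock,soft,rw,rdma,port=20049"
EXPORT_ROOT = "/mnt/docker/volumes"


def render_nfs_volumes(volumes):
    """Render a top-level compose 'volumes:' section as text.

    `volumes` maps a compose volume name to the directory name of its
    NFS export under EXPORT_ROOT (hypothetical layout, one export per volume).
    """
    lines = ["volumes:"]
    for name, export_dir in volumes.items():
        lines += [
            f"  {name}:",
            "    driver_opts:",
            '      type: "nfs"',
            f'      o: "addr={NFS_SERVER},{NFS_OPTIONS}"',
            f'      device: ":{EXPORT_ROOT}/{export_dir}"',
        ]
    return "\n".join(lines)


if __name__ == "__main__":
    print(render_nfs_volumes({"diun-data": "diun"}))
```

The output is meant to be pasted at the bottom of a compose file; the exports themselves still have to exist on the NAS.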

I think this is a common use case for gluster.

Would that make any sense with only a single NAS, rather than a storage cluster?

Gluster is on my to-do list, but I think you could have a single store with clients, it’s just not a typical configuration. Don’t quote me on that though.

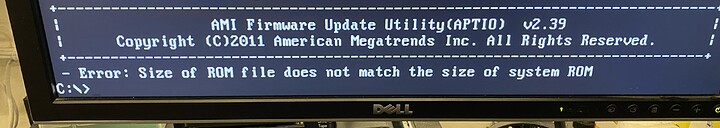

You ever run into this on a Supermicro board? Trying to update the BIOS on an X9SCM-F to fix the 2021 bug but am getting this when running the .bat file in FreeDOS.

I have quadruple-checked that it’s the right BIOS…

Afraid I can’t be of any help here. I have, thankfully, never had any issues with BIOS flashing on Supermicro boards.

Well, been a while since the last update. So got an Ender 5 Pro 3D printer, has been working a treat so far.

Couple of upgrades I printed:

- hot end strain relief: Ender 5 Print Head Cable Strain Relief Support by joshkennedycouk - Thingiverse

- control panel back cover: Ender-5 Control Panel Back Cover by kilroy01 - Thingiverse

- bed supports: Ender 5 bed support_flat by freudi85 - Thingiverse

The strain relief for the bed heating element power cable seems unnecessary on the Pro version as there’s a hole in the mounting plate and you can just zip-tie the cable to that to keep it from pulling on the solder points.

I’m not so sure about the bed supports: the rightmost one had some issues staying in place. Their advantage is that they don’t require mounting hardware, only printed parts, but if they don’t work out I might look into a version attached with bolts.

Been poking at Ansible as well. Would recommend the series by Jay Lacroix as a primer, though you’ll probably want to watch it at 1.25x speed.

Initial goal is to set up OpenBSD, through Ansible, for WireGuard (so I can upgrade pfSense as that requires getting rid of the WireGuard support…).

While poking at Ansible I started upgrading some more neglected systems.

I have a ThinkPad T430 that I occasionally use but that got woefully out-of-date. Given that it runs Gentoo and isn’t exactly the fastest anymore I figured I’d set up distcc on my Gentoo VM. As usual getting distcc to work quite right is proving to be a pain (seems to only be using 4 of the 8 cores on the server for…reasons).

In my research for a solution I came across this promising project: GitHub - icecc/icecream: Distributed compiler with a central scheduler to share build load

Haven’t tried it yet, but it sounds good and it’s available in Portage, so that just moved up to a pretty high spot on the to-do list.

Initial goal is to set up OpenBSD, through Ansible, for WireGuard (so I can upgrade pfSense as that requires getting rid of the WireGuard support…).

Oh let me know how that goes…

Update on the PC build, for reference:

The drive cage in the front right doesn’t exactly get any airflow. There are no fans, nor any fan brackets, and the PSU pulls its air from the CPU “compartment”. So when I bothered to check SMART (not sure if I set something up wrong, or if it wasn’t critical enough to trigger an alert) I saw a bunch of warnings about drive temperatures (on both disks).

So I printed a fan bracket to get some air over the drives. Temperatures seem to be way down now, by about 10 degrees (Celsius). Yeah…

Bit surprised it was as big of an issue as it turned out to be, as I did monitor temps initially. Maybe the issues only occurred during heavy gaming sessions, when the GPU also dumps all its heat into the chassis…

Oh well, live and learn, long term this box is not supposed to have any HDDs in it anymore but well, we’re not quite there yet…
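To keep an eye on drive temperatures without relying on alerts firing, something like the following could be run from cron. It is only a sketch: it assumes smartmontools 7.0 or newer (for `smartctl --json`) and that the report carries a top-level `temperature.current` field in degrees Celsius. The 45 °C limit and the function names are placeholders, not recommendations.

```python
import json


def drive_temperature(smartctl_json):
    """Return the current temperature from a `smartctl --json` report.

    Assumes the report has a top-level "temperature": {"current": N}
    object; returns None when that field is missing.
    """
    report = json.loads(smartctl_json)
    return report.get("temperature", {}).get("current")


def too_hot(smartctl_json, limit_c=45):
    """True when the drive reports a temperature above limit_c (placeholder limit)."""
    temp = drive_temperature(smartctl_json)
    return temp is not None and temp > limit_c
```

The input would be the text produced by something like `smartctl --json -A /dev/sda`, captured however is convenient (for example with `subprocess.run`).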

After reading a hint I discovered most of my SAS drives didn’t have writeback cache enabled, so I fixed that with sdparm:

    # sdparm --set=WCE --save /dev/sda
    /dev/sda: HGST HUS724030ALS640 A1C4

Verifying:

    # sdparm --get=WCE /dev/sda
    /dev/sda: HGST HUS724030ALS640 A1C4
    WCE 1 [cha: y, def: 1, sav: 1]

Guess that might help performance a bit on the SAS drives (SATA drives already had it enabled by default).
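With more than a handful of drives, checking each one by eye gets old. A rough Python sketch that parses the `sdparm --get=WCE` output format shown above; the function name and the returned keys are my own invention, and the regex only assumes the `WCE <value> [cha: .., def: .., sav: ..]` layout from that output:

```python
import re

# Matches e.g. "WCE 1 [cha: y, def: 1, sav: 1]" with arbitrary spacing.
WCE_LINE = re.compile(
    r"^\s*WCE\s+(-?\d+)\s+\[cha:\s*(\w),\s*def:\s*(-?\d+),\s*sav:\s*(-?\d+)\]"
)


def parse_wce(output):
    """Extract the write cache enable state from `sdparm --get=WCE` output.

    Returns a dict with the current, default and saved values plus whether
    the field is changeable, or None if no WCE line is found.
    """
    for line in output.splitlines():
        match = WCE_LINE.match(line)
        if match:
            return {
                "current": int(match.group(1)),
                "changeable": match.group(2) == "y",
                "default": int(match.group(3)),
                "saved": int(match.group(4)),
            }
    return None
```

A drive that still needs fixing would show up with `current` set to 0; one where only the saved value is off would lose the setting again after a power cycle.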

Upgraded from Proxmox VE 6.4 to 7 without much trouble. The only issue I had was that the CA name of the Mellanox InfiniBand adapter in one server changed to be the same as the device name, which was kind of odd. It didn’t happen on the other server, likely because that one uses the Mellanox drivers and not the kernel-provided ones.

For clarity, this path:

    /sys/class/infiniband/mlx4_0/device/net/ibp2s0/mode

turned into this:

    /sys/class/infiniband/ibp2s0/device/net/ibp2s0/mode

Did not really affect functionality, but it broke my Prometheus setup, since that relied on the CA name being “standard” and starting with mlx4_.
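One way to make that kind of monitoring less brittle is to discover the CA names from sysfs instead of assuming a prefix. A small Python sketch, assuming the `<ca>/device/net/<netdev>/mode` layout from the two paths above; the function name is hypothetical and this is not how my Prometheus setup was actually wired up:

```python
import glob
import os


def find_ipoib_modes(root="/sys/class/infiniband"):
    """Map (ca_name, netdev) to the contents of the IPoIB 'mode' file.

    Works regardless of whether the CA shows up as mlx4_0 or under the
    netdev's own name, because nothing about the CA name is assumed.
    """
    modes = {}
    pattern = os.path.join(root, "*", "device", "net", "*", "mode")
    for mode_file in sorted(glob.glob(pattern)):
        # Relative path looks like: <ca>/device/net/<netdev>/mode
        parts = os.path.relpath(mode_file, root).split(os.sep)
        ca_name, netdev = parts[0], parts[3]
        with open(mode_file) as handle:
            modes[(ca_name, netdev)] = handle.read().strip()
    return modes
```

The result can then be turned into labels, so a renamed CA shows up as a changed label rather than a missing metric.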

Opened up one of those (firmware) failed Samsung CLAR100 SSDs to check whether they had a supercap, did not expect to find, well, this:

Not sure if “normal”, certainly wasn’t able to dig up anything looking like this using search engines, but that could just be me, of course…

Had some trouble setting up the Docker container for Foundry VTT (Virtual Tabletop) behind an Apache reverse proxy. Unlike most, I didn’t want to use a subdomain but rather a sub-path, so mydomain.com/vtt rather than vtt.mydomain.com.

To get that to work I set the route_prefix option on the Foundry VTT Docker image:

FOUNDRY_ROUTE_PREFIX=vtt

However that kept redirecting me to the domain root for some reason. Since basically the same reverse proxy configuration works fine for other applications I started poking at the container configuration and trying different permutations of options.

After a while I gave up and just used the root, and then things worked just fine, leading me to believe there was something up with how the application handles pathing behind a proxy.

Eventually the culprit turned out to be these proxy-related options:

- FOUNDRY_PROXY_PORT=443

- FOUNDRY_PROXY_SSL=true

When these are set it seems to break the route prefix. Not 100% confident that was what was going on but removing them fixed the issue.

So the working config is now:

    version: "3.2"

    services:
      foundry:
        image: felddy/foundryvtt:release
        container_name: foundry-vtt
        hostname: myhost.org
        restart: "unless-stopped"
        volumes:
          - fvtt-data:/data
        environment:
          - FOUNDRY_USERNAME=username
          - FOUNDRY_PASSWORD=password
          - FOUNDRY_UID=1000
          - FOUNDRY_GID=100
          - TIMEZONE=Europe/Brussels
          - CONTAINER_CACHE=/data/container_cache
          - FOUNDRY_ROUTE_PREFIX=vtt
          - FOUNDRY_HOSTNAME=myhost.org
        ports:
          - 30000:30000/tcp

    volumes:
      fvtt-data:
        driver_opts:
          type: "nfs"
          o: "addr=192.168.1.152,nolock,soft,rw,rdma,port=20049"
          device: ":/mnt/docker/volumes/foundryvtt"

With the following Apache proxy settings:

    # Foundry VTT
    ProxyPass "/vtt/socket.io/" "ws://docker.internal:30000/vtt/socket.io/"
    ProxyPass "/vtt" "http://docker.internal:30000/vtt"
    ProxyPassReverse "/vtt" "http://docker.internal:30000/vtt"

There’s still some wonkiness going on which I attribute to Foundry VTT, like myhost.org/vtt/ redirecting to myhost.org while myhost.org/vtt works correctly. I’d expect both to work, but the application seems to be doing something funky there.