Hey Guys,

So I’m using oVirt to create a brick and then adding an LVM cache to it afterwards.

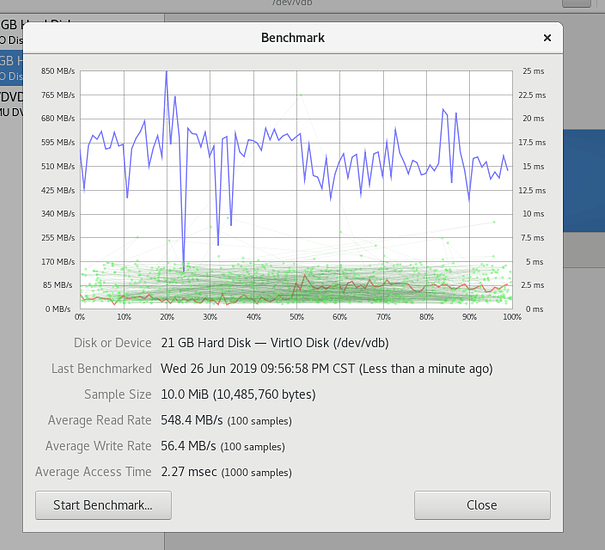

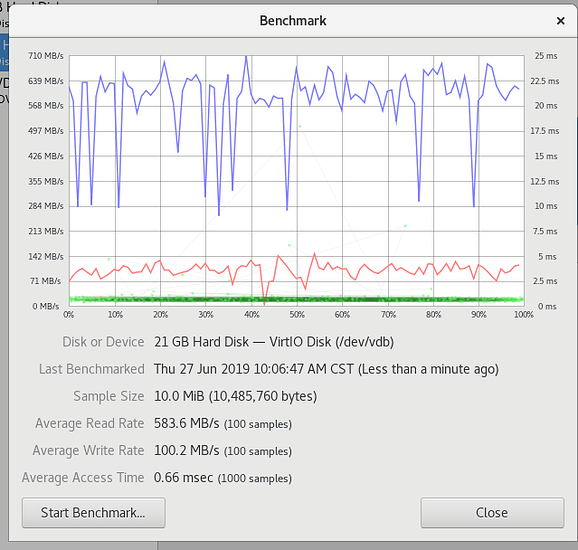

I’m running the following commands, but I’m not seeing any improvement in my benchmarks.

```
pvcreate /dev/md/VMStorageHot_0
vgextend RHGS_vg_VMStorage /dev/md/VMStorageHot_0
lvcreate -L 1500G -n lv_cache RHGS_vg_VMStorage /dev/md/VMStorageHot_0
lvcreate -L 100M -n lv_cache_meta RHGS_vg_VMStorage /dev/md/VMStorageHot_0
lvconvert --type cache-pool --cachemode writeback --poolmetadata RHGS_vg_VMStorage/lv_cache_meta RHGS_vg_VMStorage/lv_cache
lvconvert --type cache --cachepool RHGS_vg_VMStorage/lv_cache RHGS_vg_VMStorage/VMStorage_lv_pool
```

Okay, first things first: which benchmarks are you trying to improve? What are your realistic expectations?

What is your physical storage setup?


The cold pool is 5 HDDs in RAID 6 with a 65 KiB chunk size.

With dd I get around 250 MB/s on the hot pool and 40 MB/s on the cold pool. With the cache added, I still get 40 MB/s.

The benchmark I’m using is:

> Robert4049: gluster-bricks

Ah, Gluster. I didn’t realize that’s what you’re using.

I have no experience with Gluster, but O_DIRECT is a very cache-unfriendly type of I/O. Lots of tiered storage solutions don’t send O_DIRECT writes through the cache.

Using dd, you’re doing sequential writes. Sequential dd writes to spinning rust should get you closer to 100-150 MB/s on a single drive. RAID 6 should get you slightly more than that, but not by much (RAID 5/6/7 sucks for write performance).
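To put numbers on the parity-RAID point, here is a small bash sketch that works out the full-stripe size of the cold array, assuming md RAID 6 (two parity disks) with the 5 disks and 65 KiB chunk mentioned above. A write that doesn’t cover whole stripes forces md to read existing data or parity back before it can write new parity, which is a big part of why parity RAID is slow on writes. The function name `stripe_info` is made up for illustration, and it ignores where a write starts, so real alignment can be worse.

```bash
#!/usr/bin/env bash
# Sketch: full-stripe size of an md parity array and how one write splits
# into full stripes (parity = 1 for RAID 5, 2 for RAID 6). Ignores alignment.
stripe_info() {
    local disks=$1 parity=$2 chunk_kib=$3 write_kib=$4
    local data=$(( disks - parity ))        # disks holding data in each stripe
    local stripe=$(( data * chunk_kib ))    # KiB of data per full stripe
    echo "data disks: $data"
    echo "full stripe: ${stripe} KiB"
    echo "${write_kib} KiB write: $(( write_kib / stripe )) full stripes + $(( write_kib % stripe )) KiB partial"
}

# 5-disk RAID 6, 65 KiB chunk (as reported above), 4 MiB (4096 KiB) dd blocks
stripe_info 5 2 65 4096
```

With those inputs it prints 3 data disks, a 195 KiB full stripe, and 21 full stripes plus a 1 KiB partial stripe for each 4 MiB dd block.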

I think what’s happening is that LVM is ignoring the cache because of O_DIRECT and sending the writes straight to the rust. Gluster is also likely costing you some performance.

See what happens if you do:

```
dd if=/dev/zero of=/gluster-bricks/VMStorage/test-2 bs=4M count=1000 oflag=direct

# AND

dd if=/dev/zero of=/gluster-bricks/VMStorage/test-3 bs=4M count=1000
```

Larger blocks might be required. I’d also like to see whether my O_DIRECT suspicion is correct.
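A rough sketch of the same comparison as a bash function, with a third mode added. Without `oflag=direct`, dd can finish as soon as the data is in the page cache, so a buffered run largely measures RAM (the 2.2 GB/s result below looks like that). GNU dd’s `conv=fdatasync` makes dd flush the file before it reports, which gives a fairer buffered number. The function name `dd_write_test` and the `ddtest-<mode>` file names are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: write 4 MiB blocks into a directory in one of three modes and print
# dd's summary line.
#   direct    - oflag=direct: O_DIRECT, bypasses the page cache
#   buffered  - plain writes: dd may finish before data reaches the disk
#   fdatasync - conv=fdatasync: buffered, but flushed before dd reports
dd_write_test() {
    local dir=$1 mode=$2 count=${3:-1000}
    local out="$dir/ddtest-$mode"
    local extra
    case "$mode" in
        direct)    extra="oflag=direct" ;;
        buffered)  extra="" ;;
        fdatasync) extra="conv=fdatasync" ;;
        *) echo "unknown mode: $mode" >&2; return 2 ;;
    esac
    # $extra is left unquoted on purpose so an empty value adds no argument
    dd if=/dev/zero of="$out" bs=4M count="$count" $extra 2>&1 | tail -n 1
}
```

For example, `for m in direct buffered fdatasync; do dd_write_test /gluster-bricks/VMStorage "$m"; done`, then delete the `ddtest-*` files afterwards.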

```
[root@131-STR-02 VMStorage]# dd if=/dev/zero of=/gluster-bricks/VMStorage/test-2 bs=4M count=1000 oflag=direct
1000+0 records in
1000+0 records out
4194304000 bytes (4.2 GB) copied, 75.6966 s, 55.4 MB/s
[root@131-STR-02 VMStorage]#
[root@131-STR-02 VMStorage]# dd if=/dev/zero of=/gluster-bricks/VMStorage/test-3 bs=4M count=1000
1000+0 records in
1000+0 records out
4194304000 bytes (4.2 GB) copied, 1.94241 s, 2.2 GB/s
```

> nx2l: lvs -a -o+cache_mode

```
LV                                 VG                Attr          LSize Pool              Origin                           Data% Meta% Move Log Cpy%Sync Convert CacheMode
VMStorage_lv                       RHGS_vg_VMStorage Vwi-aot---  <21.82t VMStorage_lv_pool                                   0.04
VMStorage_lv_pool                  RHGS_vg_VMStorage twi-aot---   21.80t                                                     0.04  0.25
[VMStorage_lv_pool_tdata]          RHGS_vg_VMStorage Cwi-aoC---   21.80t [lv_cache]        [VMStorage_lv_pool_tdata_corig]   0.36  7.78             25.33         writeback
[VMStorage_lv_pool_tdata_corig]    RHGS_vg_VMStorage owi-aoC---   21.80t
[VMStorage_lv_pool_tmeta]          RHGS_vg_VMStorage ewi-ao----   15.81g
[lv_cache]                         RHGS_vg_VMStorage Cwi---C---    1.46t                                                     0.36  7.78             25.33         writeback
[lv_cache_cdata]                   RHGS_vg_VMStorage Cwi-ao----    1.46t
[lv_cache_cmeta]                   RHGS_vg_VMStorage ewi-ao----  100.00m
[lvol0_pmspare]                    RHGS_vg_VMStorage ewi-------   15.81g
home                               onn               Vwi-aotz--    1.00g pool00                                              4.79
[lvol0_pmspare]                    onn               ewi-------  232.00m
ovirt-node-ng-4.3.4-0.20190610.0   onn               Vwi---tz-k <426.27g pool00            root
ovirt-node-ng-4.3.4-0.20190610.0+1 onn               Vwi-aotz-- <426.27g pool00            ovirt-node-ng-4.3.4-0.20190610.0  2.07
pool00                             onn               twi-aotz-- <453.27g                                                     2.56  2.35
[pool00_tdata]                     onn               Twi-ao---- <453.27g
[pool00_tmeta]                     onn               ewi-ao----    1.00g
root                               onn               Vri---tz-k <426.27g pool00
swap                               onn               -wi-ao----    4.00g
tmp                                onn               Vwi-aotz--    1.00g pool00                                              4.93
var                                onn               Vwi-aotz--   15.00g pool00                                              3.73
var_crash                          onn               Vwi-aotz--   10.00g pool00                                              2.86
var_log                            onn               Vwi-aotz--    8.00g pool00                                              4.41
var_log_audit                      onn               Vwi-aotz--    2.00g pool00                                              5.23
[root@131-STR-02 VMStorage]#
```
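The `Data%` of 0.36 on `[lv_cache]` above suggests very little of the 1.46t cache holds data. lvm2’s `lvs` also exposes dm-cache hit/miss counters (`cache_read_hits`, `cache_read_misses`, `cache_write_hits`, `cache_write_misses`), which show more directly whether I/O is landing in the cache. Below is a minimal bash sketch that turns those four counters into hit ratios. The function name `cache_hit_ratio` is hypothetical, and reading the counters from the hidden `[VMStorage_lv_pool_tdata]` row is an assumption based on where the cache columns appear in the output above.

```bash
#!/usr/bin/env bash
# Sketch: turn dm-cache hit/miss counters into hit ratios.
# Reads one line from stdin: read_hits read_misses write_hits write_misses
cache_hit_ratio() {
    awk '{
        r = $1 + $2
        w = $3 + $4
        printf "read hit ratio:  %s\n", (r ? sprintf("%.1f%%", 100 * $1 / r) : "n/a")
        printf "write hit ratio: %s\n", (w ? sprintf("%.1f%%", 100 * $3 / w) : "n/a")
    }'
}

# Assumed usage for this VG (lv_name is only used to pick the cached row):
#   lvs -a --noheadings \
#       -o lv_name,cache_read_hits,cache_read_misses,cache_write_hits,cache_write_misses \
#       RHGS_vg_VMStorage |
#     awk '$1 == "[VMStorage_lv_pool_tdata]" { print $2, $3, $4, $5 }' |
#     cache_hit_ratio
```

A write hit ratio near zero during the O_DIRECT test would fit the theory that those writes are going straight to the origin.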

nx2l

June 27, 2019, 9:09am


Is the md device still building?
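One quick way to answer that is to look for a progress line in `/proc/mdstat` (`mdadm --detail` on the array reports the same state). Below is a minimal bash sketch that checks for resync, recovery, reshape or check progress; the function name `md_busy` is hypothetical, and it takes an optional file argument only so it can be tried against saved output.

```bash
#!/usr/bin/env bash
# Sketch: show md resync/recovery/reshape/check progress, if any.
# Reads /proc/mdstat by default; a saved copy can be passed instead.
md_busy() {
    local mdstat=${1:-/proc/mdstat}
    local pattern='(resync|recovery|reshape|check) *='
    # Progress lines look like "[==>....]  resync = 12.5% (...) finish=... speed=..."
    if grep -Eq "$pattern" "$mdstat"; then
        grep -E "$pattern" "$mdstat"
        return 0
    fi
    echo "no md resync/recovery in progress"
    return 1
}
```

Running `md_busy` with no argument checks the live `/proc/mdstat`. While a resync is running, md competes with the benchmark for the same disks, so numbers taken during a build will be low.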

nx2l

June 27, 2019, 11:37am


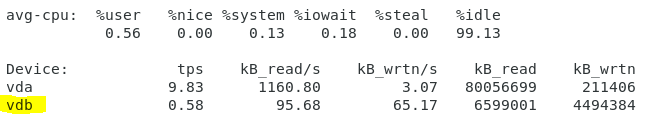

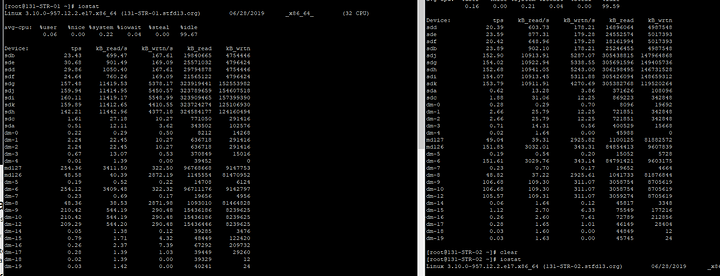

It would also be worthwhile to do a few write tests while saving iostat output so you can analyze it later.
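A minimal bash sketch of saving iostat output during a write test: it starts sysstat’s `iostat` in the background, runs whatever command it is given, then stops iostat. `-x` gives extended per-device stats, `-m` reports MB/s, `-t` adds timestamps, and `-N` shows device-mapper (LVM) names instead of `dm-N`, which makes it easier to tell whether writes hit the cache LV or the origin. The function name `run_with_iostat` and the log path are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: log iostat once per second while a benchmark command runs.
#   -x extended per-device stats, -m MB/s, -t timestamps,
#   -N device-mapper (LVM) names instead of dm-N
run_with_iostat() {
    local log=$1; shift
    iostat -x -m -t -N 1 > "$log" &
    local mon=$!
    local status=0
    "$@" || status=$?          # run the benchmark, keep its exit status
    kill "$mon" 2>/dev/null || true
    wait "$mon" 2>/dev/null || true
    return "$status"
}

# Example using the direct-I/O test from this thread (log path is hypothetical):
#   run_with_iostat /root/iostat-direct.log \
#       dd if=/dev/zero of=/gluster-bricks/VMStorage/test-2 bs=4M count=1000 oflag=direct
```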

The slow cache is still building.

nx2l

June 27, 2019, 4:31pm


Run it again, but capture the output of iostat while it runs.

Here’s the output from the storage nodes themselves.

nx2l

June 28, 2019, 8:32pm


You need to watch the values while you are running your benchmark tests.
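If the iostat output is saved to a file, a small filter makes it easier to follow one value per device across the run. This bash sketch finds a column such as `wMB/s` or `%util` by name in each `Device` header line (column order differs between sysstat versions, and older ones print `Device:`), then prints that column for one device. The function name `iostat_column` is hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: print one column (e.g. wMB/s or %util) for one device from a saved
# `iostat -x` log. The column index is looked up by name in every Device
# header, since column order differs between sysstat versions.
iostat_column() {
    local log=$1 device=$2 column=$3
    awk -v dev="$device" -v col="$column" '
        $1 ~ /^Device/ {                 # "Device:" (older) or "Device" (newer)
            idx = 0
            for (i = 1; i <= NF; i++) if ($i == col) idx = i
            next
        }
        idx && $1 == dev { print $idx }
    ' "$log"
}
```

For example, `iostat_column iostat.log md127 wMB/s`; the device name there is only a placeholder, so use whatever names appear in your own log.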